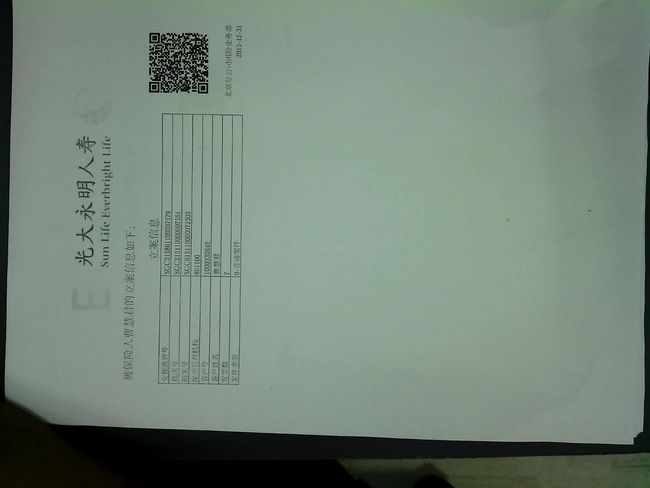

Extracting a QR Code from a Scanned Image (Skew Correction and Black-Border Removal)

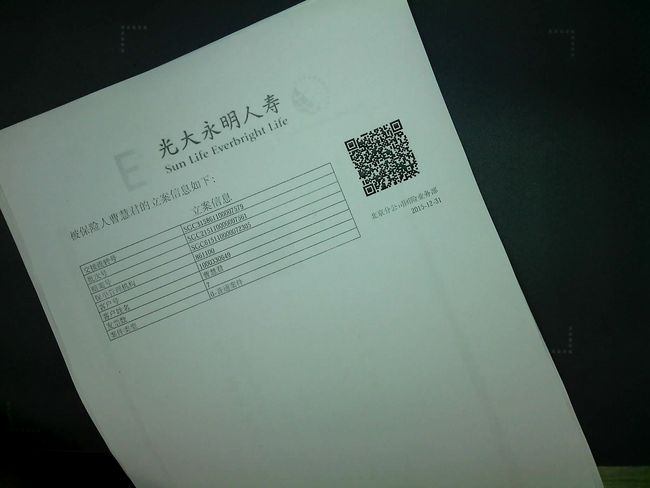

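I needed to decode QR codes in images produced by a scanner, but feeding the scanned image straight to the decoder returned no result. I was using the zbar library, which in my case only decoded images that contained nothing but the QR code. Cutting the QR code out of the scan therefore became a requirement at work.

(Some commercial controls available online include image preprocessing and decode such scans well.)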

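Simply applying existing edge-detection and connected-component algorithms did not give good results:

For example, the result of Canny edge detection: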

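Looking at the image, though, the QR code has an obvious characteristic: it is a small, nearly square block. So I wondered whether its region could be found simply by drawing bounding boxes.

In addition, a scan may come out skewed, so that the QR code region is no longer an upright rectangle. (QR decoding actually works at any rotation through 360 degrees, so skew correction may not be strictly necessary.)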

I found a skew-correction algorithm online:

See the author's blog post: http://johnhany.net/2013/11/dft-based-text-rotation-correction/

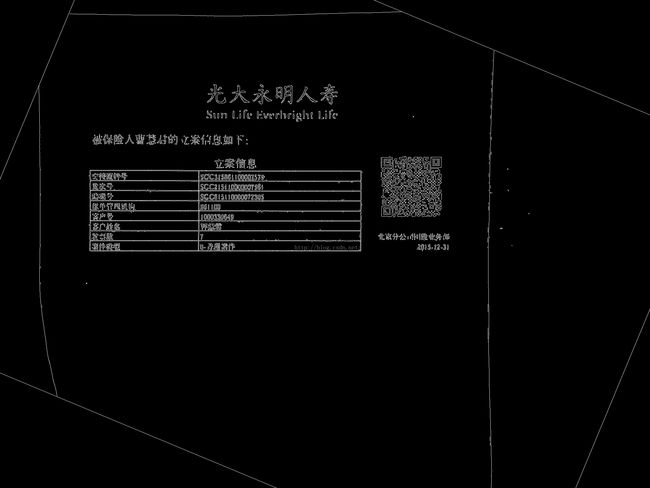

I added a slight skew by hand, as shown:

After applying that algorithm:

Then, after running edge detection:

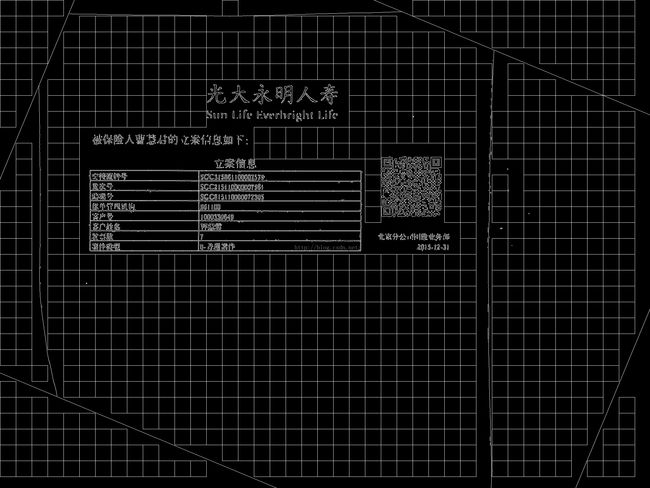

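To make box drawing easier, the surrounding white lines have to be removed first. The rough idea is to find the largest connected black region. To do that I tile the image with boxes horizontally and vertically: whenever a 50*50 region contains no white pixel, a box is drawn around it. The preliminary result: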
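
A minimal sketch of this tiling step, written in plain C++ over a 2D pixel array rather than with the OpenCV types used in the source code further down. The names `Image`, `Box`, and `findBlackTiles` are made up for illustration, and the sketch assumes a binary image in which 255 is white:

```cpp
#include <vector>

// Grayscale image stored as rows of pixels: img[y][x], 0 = black, 255 = white.
using Image = std::vector<std::vector<unsigned char>>;

// Axis-aligned box; right and bottom are exclusive. (Hypothetical helper type.)
struct Box { int left, top, right, bottom; };

// Step over the image in tile x tile blocks and return every block
// that contains no white pixel at all.
std::vector<Box> findBlackTiles(const Image& img, int tile) {
    std::vector<Box> tiles;
    const int height = static_cast<int>(img.size());
    const int width = height > 0 ? static_cast<int>(img[0].size()) : 0;
    for (int top = 0; top + tile <= height; top += tile) {
        for (int left = 0; left + tile <= width; left += tile) {
            bool allBlack = true;
            for (int y = top; y < top + tile && allBlack; ++y) {
                for (int x = left; x < left + tile; ++x) {
                    if (img[y][x] == 255) { allBlack = false; break; }
                }
            }
            if (allBlack) tiles.push_back({left, top, left + tile, top + tile});
        }
    }
    return tiles;
}
```

Calling it with `tile` set to 50 corresponds to the 50*50 region described above.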

Adjacent white boxes are then merged, the largest connected group of boxes is kept, and everything outside it is set to black:
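
One way to sketch the grouping: treat two tiles as neighbours when they sit exactly one tile apart horizontally or vertically, then flood-fill over the tile list and keep the biggest group. This is a flood fill swapped in for illustration, not the pairwise merging used in the listing further down, and `isNeighbour` and `largestGroup` are hypothetical names:

```cpp
#include <cstddef>
#include <cstdlib>
#include <vector>

struct Box { int left, top, right, bottom; };  // hypothetical helper type

// Grid tiles are neighbours when their top-left corners are exactly one
// tile apart along a single axis.
bool isNeighbour(const Box& a, const Box& b, int tile) {
    const int dx = std::abs(a.left - b.left);
    const int dy = std::abs(a.top - b.top);
    return (dx == tile && dy == 0) || (dx == 0 && dy == tile);
}

// Split the tiles into connected groups and return the largest one.
std::vector<Box> largestGroup(const std::vector<Box>& tiles, int tile) {
    std::vector<char> seen(tiles.size(), 0);
    std::vector<Box> best;
    for (std::size_t seed = 0; seed < tiles.size(); ++seed) {
        if (seen[seed]) continue;
        std::vector<std::size_t> stack{seed};
        std::vector<Box> group;
        seen[seed] = 1;
        while (!stack.empty()) {
            const std::size_t cur = stack.back();
            stack.pop_back();
            group.push_back(tiles[cur]);
            for (std::size_t j = 0; j < tiles.size(); ++j) {
                if (!seen[j] && isNeighbour(tiles[cur], tiles[j], tile)) {
                    seen[j] = 1;
                    stack.push_back(j);
                }
            }
        }
        if (group.size() > best.size()) best = group;
    }
    return best;
}
```

The scan over all tiles for every popped tile is quadratic, which is acceptable for the few hundred tiles a scanned page yields at a 50-pixel tile size.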

Then all the white box outlines are set to black:

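This gives a fairly clean image. The next step is to draw boxes. My approach is to scan from left to right: when a white pixel appears, a box is started that extends 10 pixels to the right and 10 pixels down, and as long as another white pixel turns up within 10 pixels, the box keeps growing to the right. Scanning top to bottom and left to right in this way produces many small white boxes: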
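
The scan can be sketched roughly as follows. This is a simplified reading of the description, not a copy of the listing further down: each row is handled independently, and `scanRows`, `Image`, and `Box` are illustrative names. The `gap` parameter plays the role of the 10 pixels mentioned above:

```cpp
#include <algorithm>
#include <vector>

using Image = std::vector<std::vector<unsigned char>>;  // img[y][x], 255 = white
struct Box { int left, top, right, bottom; };           // right/bottom exclusive

// For every row: open a box at the first white pixel, keep extending it to the
// right while the next white pixel is at most `gap` black pixels away, and
// close it once more than `gap` consecutive black pixels are seen.
std::vector<Box> scanRows(const Image& img, int gap) {
    std::vector<Box> boxes;
    const int height = static_cast<int>(img.size());
    for (int y = 0; y < height; ++y) {
        const int width = static_cast<int>(img[y].size());
        bool open = false;
        int blackRun = 0;
        for (int x = 0; x < width; ++x) {
            const bool white = img[y][x] == 255;
            if (!open) {
                if (white) {
                    // A new box starts gap pixels wide and gap pixels tall,
                    // clamped to the image borders.
                    boxes.push_back({x, y, std::min(x + gap, width),
                                     std::min(y + gap, height)});
                    open = true;
                    blackRun = 0;
                }
            } else if (white) {
                boxes.back().right = std::max(boxes.back().right, x + 1);
                blackRun = 0;
            } else if (++blackRun > gap) {
                open = false;
            }
        }
    }
    return boxes;
}
```

Because every row that touches a white pixel emits its own box, the output is deliberately redundant; the overlap between boxes from neighbouring rows is what the merging step relies on.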

Using the same merging idea, the boxes are merged horizontally and vertically. Two passes over the list are needed to reach the final rectangles:
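
A second pass is needed because merging two boxes can make the enlarged box touch a third one that neither touched before. A sketch that simply repeats until nothing changes, rather than running a fixed two passes, might look like this (`overlaps` and `mergeBoxes` are hypothetical names, and touching edges count as overlap here):

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

struct Box { int left, top, right, bottom; };  // hypothetical helper type

// Two boxes overlap (or touch) when their ranges intersect on both axes.
bool overlaps(const Box& a, const Box& b) {
    return a.left <= b.right && b.left <= a.right &&
           a.top <= b.bottom && b.top <= a.bottom;
}

// Repeatedly merge any overlapping pair into its bounding box until no
// pair overlaps any more.
std::vector<Box> mergeBoxes(std::vector<Box> boxes) {
    bool merged = true;
    while (merged) {
        merged = false;
        for (std::size_t i = 0; i < boxes.size() && !merged; ++i) {
            for (std::size_t j = i + 1; j < boxes.size(); ++j) {
                if (overlaps(boxes[i], boxes[j])) {
                    boxes[i] = {std::min(boxes[i].left, boxes[j].left),
                                std::min(boxes[i].top, boxes[j].top),
                                std::max(boxes[i].right, boxes[j].right),
                                std::max(boxes[i].bottom, boxes[j].bottom)};
                    boxes.erase(boxes.begin() + static_cast<std::ptrdiff_t>(j));
                    merged = true;  // restart: the grown box may reach others
                    break;
                }
            }
        }
    }
    return boxes;
}
```

Restarting after every merge is not the fastest option, but it keeps the loop easy to reason about and always terminates, since each merge removes one box.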

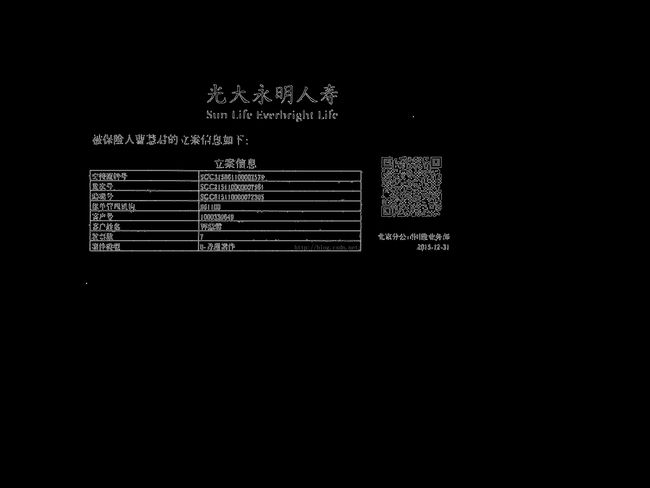

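The white box around the QR code is the result I was hoping for. It still has to be told apart from the other, non-QR boxes, which can be done with conditions such as the width-to-height ratio and the width and height in pixels. Finally, the QR code's box is extended 10 pixels toward the top-left, and its coordinates are used to cut the corresponding region out of the original image: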
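
The filter and the final adjustment can be sketched like this. The thresholds used in the usage note (a minimum side of 80 pixels and a tolerance of 0.2) are the values that appear in the source code further down; the function names `looksLikeQr` and `growTopLeft` are made up for this sketch:

```cpp
#include <algorithm>
#include <cstdlib>

struct Box { int left, top, right, bottom; };  // hypothetical helper type

// Accept a box only if both sides are at least minSide pixels long and the
// sides differ by less than `tolerance` times the shorter side.
bool looksLikeQr(const Box& b, int minSide, double tolerance) {
    const int w = b.right - b.left;
    const int h = b.bottom - b.top;
    if (w < minSide || h < minSide) return false;
    return std::abs(w - h) < tolerance * std::min(w, h);
}

// Move the top-left corner outward by `margin` pixels without leaving the image.
Box growTopLeft(Box b, int margin) {
    b.left = std::max(0, b.left - margin);
    b.top = std::max(0, b.top - margin);
    return b;
}
```

A call such as `looksLikeQr(box, 80, 0.2)` followed by `growTopLeft(box, 10)` mirrors the steps described above. Both thresholds depend on scan resolution and would need retuning for other inputs.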

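Done!

Of course the algorithm has plenty of weaknesses. The ones found so far: if the largest connected region is not the one in the middle, a choice has to be made between candidates; other black borders in the image interfere with the final box drawing; and the parameters for white-line removal and box drawing have to be retuned for different kinds of images.

This is just a simple algorithm from an image-processing beginner. I am not very familiar with OpenCV or CxImage and did not know which functions to use, so the code is bloated. But an image is essentially just a two-dimensional matrix; once you are comfortable with two-dimensional arrays, processing the data is not that hard.

The source code follows. Suggestions are very welcome: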

</pre><pre name="code" class="cpp"><span style="font-size:18px;">/* * 原倾斜校正作者! * Author: John Hany * Website: http://johnhany.net * Source code updates: https://github/johnhany/textRotCorrect * If you have any advice, you could contact me at: [email protected] * Need OpenCV environment! * */ #include "opencv2/core/core.hpp" #include "opencv2/imgproc/imgproc_c.h" #include "opencv2/imgproc/imgproc.hpp" #include "opencv2/highgui/highgui.hpp" #include <iostream> #include <algorithm> #include <stdio.h> #include "opencv/cv.h" #include "opencv/cxcore.h" #include "opencv2/highgui/highgui_c.h" #include "direct.h" #define BOX_WIDTH 50 #define BLACK 0 #define WHITE 255 #define RATE 0.2 // #pragma comment(lib, "ml.lib") // #pragma comment(lib, "cv.lib") // #pragma comment(lib, "cvaux.lib") // #pragma comment(lib, "cvcam.lib") // #pragma comment(lib, "cxcore.lib") // #pragma comment(lib, "cxts.lib") // #pragma comment(lib, "highgui.lib") // #pragma comment(lib, "cvhaartraining.lib using namespace cv; using namespace std; #define GRAY_THRESH 150 #define HOUGH_VOTE 100 //#define DEGREE 27 //图像的轮廓检测下 //By MoreWindows (http://blog.csdn.net/MoreWindows) char szCurrentPath[MAX_PATH]; string getFilePath( const char * szBuf) { string str; str = szCurrentPath; str += "\\"; str += szBuf; //删除已经存在的文件 DeleteFile(str.c_str()); return str; } string strOrigin; string strSave1; string strSave2; string strSave3; string strSave4; string strSave5; string strSave6_0; string strSave6_1; string strSave6; string strSave7; string strSave8; //#pragma comment(linker, "/subsystem:\"windows\" /entry:\"mainCRTStartup\"") IplImage *g_pGrayImage = NULL; const char *pstrWindowsBinaryTitle = "二值图(http://blog.csdn.net/MoreWindows)"; const char *pstrWindowsOutLineTitle = "轮廓图(http://blog.csdn.net/MoreWindows)"; CvSeq *g_pcvSeq = NULL; void on_trackbar(int pos) { // 转为二值图 IplImage *pBinaryImage = cvCreateImage(cvGetSize(g_pGrayImage), IPL_DEPTH_8U, 1); cvThreshold(g_pGrayImage, pBinaryImage, pos, 255, 
CV_THRESH_BINARY); // 显示二值图 cvShowImage(pstrWindowsBinaryTitle, pBinaryImage); CvMemStorage* cvMStorage = cvCreateMemStorage(); // 检索轮廓并返回检测到的轮廓的个数 cvFindContours(pBinaryImage,cvMStorage, &g_pcvSeq); IplImage *pOutlineImage = cvCreateImage(cvGetSize(g_pGrayImage), IPL_DEPTH_8U, 3); int _levels = 5; cvZero(pOutlineImage); cvDrawContours(pOutlineImage, g_pcvSeq, CV_RGB(255,0,0), CV_RGB(0,255,0), _levels); cvShowImage(pstrWindowsOutLineTitle, pOutlineImage); cvReleaseMemStorage(&cvMStorage); cvReleaseImage(&pBinaryImage); cvReleaseImage(&pOutlineImage); } //调用opencv 轮廓检测 int outLinePic() { const char *pstrWindowsSrcTitle = "原图(http://blog.csdn.net/MoreWindows)"; const char *pstrWindowsToolBarName = "二值化"; // 从文件中加载原图 IplImage *pSrcImage = cvLoadImage("003.jpg", CV_LOAD_IMAGE_UNCHANGED); // 显示原图 cvNamedWindow(pstrWindowsSrcTitle, CV_WINDOW_AUTOSIZE); cvShowImage(pstrWindowsSrcTitle, pSrcImage); // 转为灰度图 g_pGrayImage = cvCreateImage(cvGetSize(pSrcImage), IPL_DEPTH_8U, 1); cvCvtColor(pSrcImage, g_pGrayImage, CV_BGR2GRAY); // 创建二值图和轮廓图窗口 cvNamedWindow(pstrWindowsBinaryTitle, CV_WINDOW_AUTOSIZE); cvNamedWindow(pstrWindowsOutLineTitle, CV_WINDOW_AUTOSIZE); // 滑动条 int nThreshold = 0; cvCreateTrackbar(pstrWindowsToolBarName, pstrWindowsBinaryTitle, &nThreshold, 254, on_trackbar); on_trackbar(1); cvWaitKey(0); cvDestroyWindow(pstrWindowsSrcTitle); cvDestroyWindow(pstrWindowsBinaryTitle); cvDestroyWindow(pstrWindowsOutLineTitle); cvReleaseImage(&pSrcImage); cvReleaseImage(&g_pGrayImage); return 0; } int outLinePic2() { Mat src = imread(strSave1.c_str()); Mat dst; //输入图像 //输出图像 //输入图像颜色通道数 //x方向阶数 //y方向阶数 Sobel(src,dst,src.depth(),1,1); //imwrite("sobel.jpg",dst); //输入图像 //输出图像 //输入图像颜色通道数 Laplacian(src,dst,src.depth()); //imwrite("laplacian.jpg",dst); //输入图像 //输出图像 //彩色转灰度 cvtColor(src,src,CV_BGR2GRAY); //canny只处理灰度图 //输入图像 //输出图像 //低阈值 //高阈值,opencv建议是低阈值的3倍 //内部sobel滤波器大小 Canny(src,dst,50,150,3); imwrite(strSave2.c_str(),dst); //imshow("dst",dst); //waitKey(); return 0; } 
//连通域分割 int ConnectDomain() { IplImage* src; src=cvLoadImage("imageText_D.jpg",CV_LOAD_IMAGE_GRAYSCALE); IplImage* dst = cvCreateImage( cvGetSize(src), 8, 3 ); CvMemStorage* storage = cvCreateMemStorage(0); CvSeq* contour = 0; cvThreshold( src, src,120, 255, CV_THRESH_BINARY );//二值化 cvNamedWindow( "Source", 1 ); cvShowImage( "Source", src ); //提取轮廓 cvFindContours( src, storage, &contour, sizeof(CvContour), CV_RETR_CCOMP, CV_CHAIN_APPROX_SIMPLE ); cvZero( dst );//清空数组 CvSeq* _contour =contour; double maxarea=0; double minarea=100; int n=-1,m=0;//n为面积最大轮廓索引,m为迭代索引 for( ; contour != 0; contour = contour->h_next ) { double tmparea=fabs(cvContourArea(contour)); if(tmparea < minarea) { cvSeqRemove(contour,0); //删除面积小于设定值的轮廓 continue; } CvRect aRect = cvBoundingRect( contour, 0 ); if ((aRect.width/aRect.height)<1) { cvSeqRemove(contour,0); //删除宽高比例小于设定值的轮廓 continue; } if(tmparea > maxarea) { maxarea = tmparea; n=m; } m++; // CvScalar color = CV_RGB( rand()&255, rand()&255, rand()&255 );//创建一个色彩值 CvScalar color = CV_RGB( 0, 255,255 ); //max_level 绘制轮廓的最大等级。如果等级为0,绘制单独的轮廓。如果为1,绘制轮廓及在其后的相同的级别下轮廓。 //如果值为2,所有的轮廓。如果等级为2,绘制所有同级轮廓及所有低一级轮廓,诸此种种。 //如果值为负数,函数不绘制同级轮廓,但会升序绘制直到级别为abs(max_level)-1的子轮廓。 cvDrawContours( dst, contour, color, color, -1, 1, 8 );//绘制外部和内部的轮廓 } contour =_contour; /*int k=0;*/ int count=0; for( ; contour != 0; contour = contour->h_next ) { count++; double tmparea=fabs(cvContourArea(contour)); if (tmparea==maxarea /*k==n*/) { CvScalar color = CV_RGB( 255, 0, 0); cvDrawContours( dst, contour, color, color, -1, 1, 8 ); } /*k++;*/ } printf("The total number of contours is:%d",count); cvNamedWindow( "Components", 1 ); cvShowImage( "Components", dst ); cvSaveImage("imageText_ConnectDomain.jpg",dst); cvWaitKey(0); cvDestroyWindow( "Source" ); cvReleaseImage(&src); cvDestroyWindow( "Components" ); cvReleaseImage(&dst); return 0; } struct MYBOX{ BOOL bIsBlack; Rect rect; MYBOX() { bIsBlack = FALSE; } }; struct CONNECT_ZON{ vector<RECT> rectList; int nBox; CONNECT_ZON() 
{ nBox = 0; } }; //画框线为一个颜色 void DrowBoxColor(Mat &srcImg, std::vector<RECT> &boxList, int nColor) { int nResultSize = boxList.size(); for (int i = 0; i < nResultSize; i ++) { RECT tempRect = boxList[i]; //上下边线 int y1 = tempRect.top; int y2 = tempRect.bottom; for (int x = tempRect.left; x <= tempRect.right; x ++) { *(srcImg.data + srcImg.step[1] * x + srcImg.step[0] * y1) = nColor; *(srcImg.data + srcImg.step[1] * x + srcImg.step[0] * y2) = nColor; } //左右边线 int x1 = tempRect.left; int x2 = tempRect.right; for (int y = tempRect.top; y <= tempRect.bottom; y ++) { *(srcImg.data + srcImg.step[1] * x1 + srcImg.step[0] * y) = nColor; *(srcImg.data + srcImg.step[1] * x2 + srcImg.step[0] * y) = nColor; } } } //计算两个白框是否临近 BOOL IsRectNear(RECT rect1, RECT rect2) { if ( sqrt(1.0*(rect1.top - rect2.top)* (rect1.top - rect2.top) + 1.0*(rect1.left - rect2.left)*(rect1.left - rect2.left) ) == BOX_WIDTH) { return TRUE; } else { return FALSE; } } //计算两个框是否相交 BOOL IsRectIntersect(RECT rect1, RECT rect2) { int nWidth1 = rect1.right - rect1.left; int nHeight1 = rect1.bottom - rect1.top; int nWidth2 = rect2.right - rect2.left; int nHeight2 = rect2.bottom - rect2.top; if ( rect1.left <= rect2.left) { if(rect2.left - rect1.left <= nWidth1) { if (rect1.top < rect2.top) { if (rect2.top - rect1.top <= nHeight1) { return TRUE; } else { return FALSE; } } else { if (rect1.top - rect2.top <= nHeight2) { return TRUE; } else { return FALSE; } } } else { return FALSE; } } else { if(rect1.left - rect2.left <= nWidth2) { if (rect1.top < rect2.top) { if (rect2.top - rect1.top <= nHeight1) { return TRUE; } else { return FALSE; } } else { if (rect1.top - rect2.top <= nHeight2) { return TRUE; } else { return FALSE; } } } else { return FALSE; } } } //两个区域是否临近 BOOL IsZonNear(CONNECT_ZON zon1, CONNECT_ZON zon2) { for(int i = 0; i < zon1.rectList.size(); i++) { RECT rect1 = zon1.rectList[i]; for(int j = 0; j < zon2.rectList.size(); j ++) { RECT rect2 = zon2.rectList[j]; if (IsRectNear(rect1,rect2) == TRUE) 
{ return TRUE; } } } return FALSE; } //将两个区域合并 void MergZon(CONNECT_ZON& zon1, CONNECT_ZON& zon2) { if (zon1.nBox >= zon2.nBox) { for(int i = 0; i < zon2.nBox; i ++) { zon1.rectList.push_back(zon2.rectList[i]); } zon1.nBox += zon2.nBox; zon2.rectList.clear(); zon2.nBox = 0; } else { for(int i = 0; i < zon1.nBox; i ++) { zon2.rectList.push_back(zon1.rectList[i]); } zon2.nBox += zon1.nBox; zon1.rectList.clear(); zon1.nBox = 0; } } //自定义排序函数 BOOL SortByM1( const RECT &v1, const RECT &v2)//注意:本函数的参数的类型一定要与vector中元素的类型一致 { if (v1.top < v2.top ) //按列升序排列 { return TRUE; } else if (v1.top == v2.top && v1.left < v2.left ) { return TRUE; } else { //如果a==b,则返回的应该是false,如果返回的是true,则会出上面的错。 return FALSE; } } //自定义排序函数 BOOL SortByM2( const CONNECT_ZON &v1, const CONNECT_ZON &v2)//注意:本函数的参数的类型一定要与vector中元素的类型一致 { if (v1.nBox > v2.nBox) { return TRUE; } else { return FALSE; } } //将一部分区域变成黑色或者白色 void SetRectColor(Mat& srcImg, RECT rect, int nColor) { for (int col = rect.top; col < rect.bottom ; col ++) { for (int row = rect.left; row < rect.right; row++) { (*(srcImg.data + srcImg.step[0] * col + srcImg.step[1] * row)) = nColor; } } } //选择一个内部的联通域 第一个返回ture 第二个返回false BOOL IsInnerZon(CONNECT_ZON &zon1, CONNECT_ZON &zon2) { RECT rect1 = zon1.rectList[0]; RECT rect2 = zon2.rectList[0]; //获取两个区域的左上 右下坐标 for (int i = 1; i < zon1.rectList.size(); i ++) { RECT tempRect = zon1.rectList[i]; if (tempRect.left < rect1.left) { rect1.left = tempRect.left; } if (tempRect.right > rect1.right) { rect1.right = tempRect.right; } if (tempRect.top < rect1.top) { rect1.top = tempRect.top; } if (tempRect.bottom > rect1.bottom) { rect1.bottom = tempRect.bottom; } } for (int i = 1; i < zon2.rectList.size(); i ++) { RECT tempRect = zon2.rectList[i]; if (tempRect.left < rect2.left) { rect2.left = tempRect.left; } if (tempRect.right > rect2.right) { rect2.right = tempRect.right; } if (tempRect.top < rect2.top) { rect2.top = tempRect.top; } if (tempRect.bottom > rect2.bottom) { rect2.bottom = tempRect.bottom; 
} } //评分 int nPoint1 = 0; int nPoint2 = 0; if (rect1.left < rect2.left) { nPoint1 ++; } else { nPoint2 ++; } if (rect1.right > rect2.right) { nPoint1 ++; } else { nPoint2 ++; } if (rect1.top < rect2.top) { nPoint1 ++; } else { nPoint2 ++; } if (rect1.bottom > rect2.bottom) { nPoint1 ++; } else { nPoint2 ++; } if (nPoint1 > nPoint2) { return FALSE; } else { return TRUE; } } //清理图像的边缘,只保留中间的部分 int ClearEdge() { //IplImage* src = cvLoadImage("imageText_D.jpg",CV_LOAD_IMAGE_GRAYSCALE); Mat srcImg = imread(strSave2.c_str(), CV_LOAD_IMAGE_GRAYSCALE); //int nWidth = src->width; //int nHeight = src->height; int nWidth = srcImg.cols; int nHeight = srcImg.rows; int nUp = 210; int nLeft = 140; int nRight = 170; int nDown = 210; //先确定上边界 int nRectSize = 50; vector<RECT > BoxList; for(int i = 0; i < nHeight - nRectSize; i += nRectSize) { for (int j = 0; j < nWidth - nRectSize; j += nRectSize) { //看这个box中的像素是否都为黑 允许一个杂点 BOOL bBlack = TRUE; int nWhite = 0; for (int col = j; col < j + nRectSize; col ++) { for (int row = i; row < i + nRectSize; row++) { int nPixel = (int)(*(srcImg.data + srcImg.step[0] * row + srcImg.step[1] * col)); if ( nPixel == 255) { nWhite ++; if (nWhite >= 0) { bBlack = FALSE; } } } if (bBlack == FALSE) { break; } } if (bBlack) { RECT temRect = {j,i, j + nRectSize, i + nRectSize}; BoxList.push_back(temRect); } } } //将box的边线都编程白色。 int nSize = BoxList.size(); for (int i = 0; i < nSize; i ++) { RECT tempRect = BoxList[i]; //上下边线 int y1 = tempRect.top; int y2 = tempRect.bottom; for (int x = tempRect.left; x <= tempRect.right; x ++) { *(srcImg.data + srcImg.step[1] * x + srcImg.step[0] * y1) = WHITE; *(srcImg.data + srcImg.step[1] * x + srcImg.step[0] * y2) = WHITE; } //左右边线 int x1 = tempRect.left; int x2 = tempRect.right; for (int y = tempRect.top; y <= tempRect.bottom; y ++) { *(srcImg.data + srcImg.step[1] * x1 + srcImg.step[0] * y) = WHITE; *(srcImg.data + srcImg.step[1] * x2 + srcImg.step[0] * y) = WHITE; } } imwrite(strSave3.c_str(),srcImg); 
vector<CONNECT_ZON> g_ConnectZon; //获取白框最大的联通域 for(int i = 0; i < nSize; i++) { RECT tempRect = BoxList[i]; if (g_ConnectZon.empty()) { CONNECT_ZON connectTemp; connectTemp.rectList.push_back(tempRect); connectTemp.nBox ++; g_ConnectZon.push_back(connectTemp); } else { BOOL bInList = FALSE; for(int j = 0; j < g_ConnectZon.size(); j ++) { CONNECT_ZON connectZon = g_ConnectZon[j]; for (int k = 0; k < connectZon.rectList.size(); k ++) { if (IsRectNear(tempRect, connectZon.rectList[k])) { g_ConnectZon[j].rectList.push_back(tempRect); g_ConnectZon[j].nBox ++; bInList = TRUE; break; } if (bInList) { break; } } } //没有相邻则加入新的 if (bInList == FALSE) { CONNECT_ZON connectTemp; connectTemp.rectList.push_back(tempRect); connectTemp.nBox ++; g_ConnectZon.push_back(connectTemp); } } } //检查任意两个连通域中是否有相邻的框 for (int i = 0; i < g_ConnectZon.size(); i ++) { for(int j = i + 1; j < g_ConnectZon.size(); j ++) { BOOL bZonNear = IsZonNear(g_ConnectZon[i], g_ConnectZon[j]); if (bZonNear) { //相邻则把小的加入到大的之中 //MergZon(g_ConnectZon[i], g_ConnectZon[j]); if (g_ConnectZon[i].nBox >= g_ConnectZon[j].nBox) { for(int k = 0; k < g_ConnectZon[j].nBox; k ++) { g_ConnectZon[i].rectList.push_back(g_ConnectZon[j].rectList[k]); } g_ConnectZon[i].nBox += g_ConnectZon[j].nBox; g_ConnectZon[j].rectList.clear(); g_ConnectZon[j].nBox = 0; } else { for(int k = 0; k < g_ConnectZon[i].nBox; k ++) { g_ConnectZon[j].rectList.push_back(g_ConnectZon[i].rectList[k]); } g_ConnectZon[j].nBox += g_ConnectZon[i].nBox; g_ConnectZon[i].rectList.clear(); g_ConnectZon[i].nBox = 0; } } } } //取最大的联通域boxList int nMaxSize = 0; //如果有两个较大的联通域,则取里面的一个。 std::sort(g_ConnectZon.begin(),g_ConnectZon.end(),SortByM2); CONNECT_ZON maxConnect = g_ConnectZon[0]; //出现另外一个 if (g_ConnectZon.size() > 1) { if (g_ConnectZon[1].nBox > 0) { CONNECT_ZON maxConnectOther = g_ConnectZon[1]; BOOL bInner = IsInnerZon(maxConnect, maxConnectOther); if (!bInner) { maxConnect = maxConnectOther; } } } //将box进行排序,按照从左到右 从上到下。 
std::sort(maxConnect.rectList.begin(),maxConnect.rectList.end(),SortByM1); //将之分成多个行。 vector<CONNECT_ZON> LineConnect; int nIndexOfLine = -1; int nLine = -1; for (int i = 0; i < maxConnect.rectList.size(); i ++) { RECT tempRect = maxConnect.rectList[i]; if (nLine != tempRect.top) { CONNECT_ZON tempConnect; tempConnect.rectList.push_back(tempRect); tempConnect.nBox ++; nIndexOfLine ++; LineConnect.push_back(tempConnect); nLine = tempRect.top; } else { LineConnect[nIndexOfLine].rectList.push_back(tempRect); LineConnect[nIndexOfLine].nBox ++; } //从左往右 从上往下。 } //如果没有白色联通域则直接保存结果 if (maxConnect.rectList.size() == 0) { IplImage* src; IplImage* dst; src = cvLoadImage(strSave7.c_str(),1); if(!src) { return 0; } cvSetImageROI(src,cvRect(0,0,nWidth, nHeight)); dst = cvCreateImage(cvSize(nWidth, nHeight), IPL_DEPTH_8U, src->nChannels); cvCopy(src,dst,0); cvResetImageROI(src); //cvNamedWindow("操作后的图像",1); //cvShowImage("操作后的图像",dst); cvSaveImage(strSave8.c_str(), dst); return 0; } //将最大联通域周边的区域都变成黑色 0 //将每一行的左右两边都变成黑色 //上面部分。 RECT rectFirst = LineConnect[0].rectList[0]; RECT rectTop = {0,0,nWidth ,rectFirst.bottom}; SetRectColor(srcImg,rectTop, BLACK); //中间各行 for (int i = 0; i < LineConnect.size(); i ++) { CONNECT_ZON tempConnect = LineConnect[i]; RECT tempRect = tempConnect.rectList[0]; RECT leftRect = {0, tempRect.top, tempRect.right, tempRect.bottom}; SetRectColor(srcImg, leftRect,BLACK); tempRect = tempConnect.rectList[tempConnect.rectList.size() - 1]; RECT rightRect = {tempRect.left, tempRect.top, nWidth, tempRect.bottom }; SetRectColor(srcImg, rightRect, BLACK); } //最下面部分 RECT rectLast = LineConnect[LineConnect.size() - 1].rectList[0]; RECT rectBottom = {0, rectLast.bottom, nWidth, nHeight}; SetRectColor(srcImg, rectBottom,BLACK); imwrite(strSave3.c_str(),srcImg); //将所有的框线都置黑 DrowBoxColor(srcImg, maxConnect.rectList, BLACK); imwrite(strSave4.c_str(),srcImg); //探测矩形框 int nMaxBlackNum = 10; int nMinBoxWidth = 50; int nBlack = 0; vector<RECT > ResultBoxList; int nBoxIndex = 
-1; BOOL bStartBox = FALSE; int nWhite = 0; for (int col = 0; col < nHeight; col += 1) { for (int row = 0; row < nWidth; row++) { int nPixel = (int)(*(srcImg.data + srcImg.step[1] * row + srcImg.step[0] * col)); if (nPixel == BLACK && bStartBox == FALSE) { nBlack = 0; continue; } //碰到第一个白色像素点开始探测矩形框。 else if ( nPixel == WHITE && bStartBox == FALSE) { //不能超过右 下边界 RECT rectTemp = {row, col, min(row + nMaxBlackNum, nWidth), min(col + nMaxBlackNum, nHeight)}; bStartBox = TRUE; ResultBoxList.push_back(rectTemp); nBoxIndex ++; } else if(nPixel == WHITE && bStartBox == TRUE) { //第二个仍然是白色 宽度加1 或者中间有黑色像素 if (ResultBoxList[nBoxIndex].right < nWidth - 1) { if (nBlack == 0) { ResultBoxList[nBoxIndex].right += 1; } else { ResultBoxList[nBoxIndex].right += nBlack; nBlack = 0; } } } else if(nPixel == BLACK && bStartBox == TRUE) { //碰到黑色 nBlack ++; //连续碰到10个黑点则结束box if (nBlack > nMaxBlackNum) { //框的大小如果小于50 则不计入 // int nWidth = ResultBoxList[nBoxIndex].right - ResultBoxList[nBoxIndex].left; // if (nWidth < nMinBoxWidth) // { // ResultBoxList.erase(ResultBoxList.end() - 1); // } bStartBox = FALSE; } } } } //画框 int nResultSize = ResultBoxList.size(); // Mat Img5 = srcImg; // DrowBoxColor(Img5,ResultBoxList, WHITE); // imwrite(strSave5.c_str(),Img5); //合并框 vector<RECT> mergResultList; int nIndexOfResultList = -1; for(int i = 0; i < nResultSize; i++) { RECT tempRect = ResultBoxList[i]; if (mergResultList.empty()) { mergResultList.push_back(tempRect); nIndexOfResultList ++; } else { BOOL bInList = FALSE; for(int j = 0; j < mergResultList.size(); j ++) { BOOL bIntersect = IsRectIntersect(mergResultList[j], tempRect); if (bIntersect) { //相交则合并。 mergResultList[j].left = min(tempRect.left, mergResultList[j].left); mergResultList[j].top = min(tempRect.top, mergResultList[j].top); mergResultList[j].right = max(tempRect.right, mergResultList[j].right); mergResultList[j].bottom = max(tempRect.bottom, mergResultList[j].bottom); bInList = TRUE; } } //没有相邻则加入新的 if (bInList == FALSE) { 
mergResultList.push_back(tempRect); nIndexOfResultList ++; } } } //第二次合并 Mat Img6 = srcImg; // DrowBoxColor(Img6, mergResultList, WHITE); // imwrite(strSave6_0.c_str(),Img6); for(int i = 0; i < mergResultList.size(); i ++) { if (mergResultList[i].left == 0 && mergResultList[i].right == 0) { continue; } for(int j = i + 1; j < mergResultList.size(); j ++) { BOOL bIntersect = IsRectIntersect(mergResultList[i], mergResultList[j]); if (bIntersect) { //相交则合并。 mergResultList[i].left = min(mergResultList[j].left, mergResultList[i].left); mergResultList[i].top = min(mergResultList[j].top, mergResultList[i].top); mergResultList[i].right = max(mergResultList[j].right, mergResultList[i].right); mergResultList[i].bottom = max(mergResultList[j].bottom, mergResultList[i].bottom); //被合并的清空 mergResultList[j].left = 0; mergResultList[j].top = 0; mergResultList[j].right = 0; mergResultList[j].bottom = 0; } } } DrowBoxColor(srcImg, mergResultList, WHITE); imwrite(strSave6_1.c_str(),srcImg); //去除宽高比大鱼1.5 和长宽绝对值小于80的 RECT destRect; BOOL bHaveOne = FALSE; for(int i = 0;i < mergResultList.size(); i ++) { int nTempWidth = mergResultList[i].right - mergResultList[i].left; int nTempHeight = mergResultList[i].bottom - mergResultList[i].top; BOOL bRelative = abs(nTempWidth - nTempHeight) < RATE * min(nTempWidth,nTempHeight); if (nTempHeight < 80 || nTempWidth < 80 || !bRelative) { mergResultList[i].left = 0; mergResultList[i].right = 0; mergResultList[i].top = 0; mergResultList[i].bottom = 0; } else { destRect = mergResultList[i]; bHaveOne = TRUE; } } if (bHaveOne == FALSE) { cout<<"can not find one QRCode!"; return 0; } DrowBoxColor(srcImg, mergResultList, WHITE); imwrite(strSave6.c_str(),srcImg); //将box内容取出来。 //Mat sourceImg = imread("imageText_D.bmp", CV_LOAD_IMAGE_GRAYSCALE); //Mat roi_img = sourceImg(Range(destRect.left,destRect.right),Range(destRect.top,destRect.bottom)); //Rect rect(destRect.left, destRect.right, destRect.top, destRect.bottom); //Mat image_roi = sourceImg(rect); 
IplImage* src; IplImage* dst; src = cvLoadImage(strSave7.c_str(),1); if(!src) { return 0; } // cvNamedWindow("源图像",1); //cvShowImage("源图像",src); //往左上移动10个点 destRect.left -= 10; if (destRect.left < 0) { destRect.left = 0; } destRect.top -= 10; if (destRect.top < 0) { destRect.top = 0; } cvSetImageROI(src,cvRect(destRect.left,destRect.top ,destRect.right - destRect.left, destRect.bottom - destRect.top)); dst = cvCreateImage(cvSize(destRect.right - destRect.left, destRect.bottom - destRect.top), IPL_DEPTH_8U, src->nChannels); cvCopy(src,dst,0); cvResetImageROI(src); //cvNamedWindow("操作后的图像",1); //cvShowImage("操作后的图像",dst); strSave8 = getFilePath("imageText_Clear4.jpg"); cvSaveImage(strSave8.c_str(), dst); return 0; } //倾斜校正 void imageCorrect() { Mat srcImg = imread(strOrigin.c_str(), CV_LOAD_IMAGE_GRAYSCALE); if(srcImg.empty()) return ; //imshow("source", srcImg); Point center(srcImg.cols/2, srcImg.rows/2); #ifdef DEGREE //Rotate source image Mat rotMatS = getRotationMatrix2D(center, DEGREE, 1.0); warpAffine(srcImg, srcImg, rotMatS, srcImg.size(), 1, 0, Scalar(255,255,255)); //imshow("RotatedSrc", srcImg); //imwrite("imageText_R.jpg",srcImg); #endif //Expand image to an optimal size, for faster processing speed //Set widths of borders in four directions //If borderType==BORDER_CONSTANT, fill the borders with (0,0,0) Mat padded; int opWidth = getOptimalDFTSize(srcImg.rows); int opHeight = getOptimalDFTSize(srcImg.cols); copyMakeBorder(srcImg, padded, 0, opWidth-srcImg.rows, 0, opHeight-srcImg.cols, BORDER_CONSTANT, Scalar::all(0)); Mat planes[] = {Mat_<float>(padded), Mat::zeros(padded.size(), CV_32F)}; Mat comImg; //Merge into a double-channel image merge(planes,2,comImg); //Use the same image as input and output, //so that the results can fit in Mat well dft(comImg, comImg); //Compute the magnitude //planes[0]=Re(DFT(I)), planes[1]=Im(DFT(I)) //magnitude=sqrt(Re^2+Im^2) split(comImg, planes); magnitude(planes[0], planes[1], planes[0]); //Switch to logarithmic scale, 
for better visual results //M2=log(1+M1) Mat magMat = planes[0]; magMat += Scalar::all(1); log(magMat, magMat); //Crop the spectrum //Width and height of magMat should be even, so that they can be divided by 2 //-2 is 11111110 in binary system, operator & make sure width and height are always even magMat = magMat(Rect(0, 0, magMat.cols & -2, magMat.rows & -2)); //Rearrange the quadrants of Fourier image, //so that the origin is at the center of image, //and move the high frequency to the corners int cx = magMat.cols/2; int cy = magMat.rows/2; Mat q0(magMat, Rect(0, 0, cx, cy)); Mat q1(magMat, Rect(0, cy, cx, cy)); Mat q2(magMat, Rect(cx, cy, cx, cy)); Mat q3(magMat, Rect(cx, 0, cx, cy)); Mat tmp; q0.copyTo(tmp); q2.copyTo(q0); tmp.copyTo(q2); q1.copyTo(tmp); q3.copyTo(q1); tmp.copyTo(q3); //Normalize the magnitude to [0,1], then to[0,255] normalize(magMat, magMat, 0, 1, CV_MINMAX); Mat magImg(magMat.size(), CV_8UC1); magMat.convertTo(magImg,CV_8UC1,255,0); //imshow("magnitude", magImg); //imwrite("imageText_mag.jpg",magImg); //Turn into binary image threshold(magImg,magImg,GRAY_THRESH,255,CV_THRESH_BINARY); //imshow("mag_binary", magImg); //imwrite("imageText_bin.jpg",magImg); //Find lines with Hough Transformation vector<Vec2f> lines; float pi180 = (float)CV_PI/180; Mat linImg(magImg.size(),CV_8UC3); HoughLines(magImg,lines,1,pi180,HOUGH_VOTE,0,0); int numLines = lines.size(); for(int l=0; l<numLines; l++) { float rho = lines[l][0], theta = lines[l][1]; Point pt1, pt2; double a = cos(theta), b = sin(theta); double x0 = a*rho, y0 = b*rho; pt1.x = cvRound(x0 + 1000*(-b)); pt1.y = cvRound(y0 + 1000*(a)); pt2.x = cvRound(x0 - 1000*(-b)); pt2.y = cvRound(y0 - 1000*(a)); line(linImg,pt1,pt2,Scalar(255,0,0),3,8,0); } //imshow("lines",linImg); //imwrite("imageText_line.jpg",linImg); //if(lines.size() == 3){ // cout << "found three angels:" << endl; // cout << lines[0][1]*180/CV_PI << endl << lines[1][1]*180/CV_PI << endl << lines[2][1]*180/CV_PI << endl << endl; //} 
//Find the proper angel from the three found angels float angel=0; float piThresh = (float)CV_PI/90; float pi2 = CV_PI/2; for(int l=0; l<numLines; l++) { float theta = lines[l][1]; if(abs(theta) < piThresh || abs(theta-pi2) < piThresh) continue; else{ angel = theta; break; } } //Calculate the rotation angel //The image has to be square, //so that the rotation angel can be calculate right angel = angel<pi2 ? angel : angel-CV_PI; if(angel != pi2){ float angelT = srcImg.rows*tan(angel)/srcImg.cols; angel = atan(angelT); } float angelD = angel*180/(float)CV_PI; //cout << "the rotation angel to be applied:" << endl << angelD << endl << endl; //Rotate the image to recover Mat rotMat = getRotationMatrix2D(center,angelD,1.0); Mat dstImg = Mat::ones(srcImg.size(),CV_8UC3); warpAffine(srcImg,dstImg,rotMat,srcImg.size(),1,0,Scalar(255,255,255)); //imshow("result",dstImg); imwrite(strSave1,dstImg); } int main(int argc, char **argv) { if(argc < 2) return(1); //获取当前目录 _getcwd(szCurrentPath,MAX_PATH); strOrigin = getFilePath("imageText.jpg"); strSave1 = getFilePath("imageText_D.jpg"); strSave2 = getFilePath("canny.jpg"); strSave3 = getFilePath("imageText_Clear0.jpg"); strSave4 = getFilePath("imageText_Clear1.jpg"); strSave5 = getFilePath("imageText_Clear2.jpg"); strSave6_0 = getFilePath("imageText_Clear3_0.jpg"); strSave6_1 = getFilePath("imageText_Clear3_1.jpg"); strSave6 = getFilePath("imageText_Clear3.jpg"); strSave7 = getFilePath("imageText_D.jpg"); strSave8 = getFilePath("imageText_Clear4.jpg"); CopyFile(argv[1], strOrigin.c_str(), FALSE); imageCorrect(); outLinePic2(); ClearEdge(); return 0; //ConnectDomain(); //Read a single-channel image } </span>

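Note that the program needs an OpenCV environment, which you have to install and configure yourself. It links against three libraries: opencv_imgproc2410d.lib, opencv_core2410d.lib, and opencv_highgui2410d.lib. The 2410 in the names is the version number; other versions should work too. Don't forget the matching DLLs.

Some of the functions are never called; they were only used to experiment with edge detection.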

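When saving the final QR code image, I did not know which function copies the region of a RECT out of an image; Mat presumably has a corresponding function.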
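
In OpenCV's C++ API, `cv::Mat::operator()(const cv::Rect&)` returns a view of a sub-rectangle and `clone()` turns that view into an independent copy, so something like `src(cv::Rect(x, y, w, h)).clone()` should cover this. The same operation on a plain 2D array, as a sketch with the hypothetical names `Image`, `Box`, and `crop`:

```cpp
#include <algorithm>
#include <vector>

using Image = std::vector<std::vector<unsigned char>>;  // img[y][x]
struct Box { int left, top, right, bottom; };           // right/bottom exclusive

// Copy the pixels inside `b` into a new image. The box is clamped to the
// image bounds first; an empty image is returned if nothing is left.
Image crop(const Image& img, Box b) {
    const int height = static_cast<int>(img.size());
    const int width = height > 0 ? static_cast<int>(img[0].size()) : 0;
    b.left = std::max(0, b.left);
    b.top = std::max(0, b.top);
    b.right = std::min(width, b.right);
    b.bottom = std::min(height, b.bottom);
    Image out;
    if (b.left >= b.right || b.top >= b.bottom) return out;
    for (int y = b.top; y < b.bottom; ++y) {
        out.emplace_back(img[y].begin() + b.left, img[y].begin() + b.right);
    }
    return out;
}
```

Clamping before copying means a box that was pushed slightly past the border by the 10-pixel expansion still yields a valid crop.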