Setting Up a Hadoop 1.2.1 Cluster the Easy Way (3): Configuring the Hadoop Cluster Software

1. Install the JDK and Hadoop:

Unpack the JDK and Hadoop:

If the JDK comes as a .bin file, make it executable first: chmod u+x jdk-6u45-linux-x64.bin
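The unpack step can be scripted roughly as follows. This is a sketch that assumes both downloads sit in /usr/local (consistent with the /usr/local/jdk and /usr/local/hadoop paths used later) and that the Hadoop archive is the stock hadoop-1.2.1.tar.gz; unpack_packages is a hypothetical helper, not part of Hadoop.

```shell
#!/bin/sh
# Sketch: unpack the JDK self-extracting installer and the Hadoop tarball
# in one directory. Assumed file names: jdk-6u45-linux-x64.bin, hadoop-1.2.1.tar.gz.
# unpack_packages is a hypothetical helper.
unpack_packages() {
  local dir="$1"
  local jdk_bin="${2:-jdk-6u45-linux-x64.bin}"
  local hadoop_tgz="${3:-hadoop-1.2.1.tar.gz}"
  (
    cd "$dir" || exit 1
    chmod u+x "$jdk_bin" || exit 1   # the .bin installer must be executable
    "./$jdk_bin" || exit 1           # self-extracts into the current directory
    tar -zxf "$hadoop_tgz"           # creates ./hadoop-1.2.1
  )
}

# Usage (as root on hadoop0):
#   unpack_packages /usr/local
```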

Unpacking is complete.

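2. Rename the unpacked folders to jdk and hadoop, matching the /usr/local/jdk and /usr/local/hadoop paths used below: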
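A sketch of the rename, assuming the default extraction names jdk1.6.0_45 and hadoop-1.2.1 (rename_packages is hypothetical). It refuses to run if jdk or hadoop already exists, because mv would then move the folder inside the existing one instead of renaming it.

```shell
#!/bin/sh
# Sketch: give the unpacked folders version-free names.
# Assumes the default names jdk1.6.0_45 and hadoop-1.2.1.
rename_packages() {
  local dir="$1"
  # If a target exists, mv would move the folder *into* it; stop instead.
  if [ -e "$dir/jdk" ] || [ -e "$dir/hadoop" ]; then
    echo "jdk or hadoop already exists under $dir" >&2
    return 1
  fi
  mv "$dir/jdk1.6.0_45" "$dir/jdk" && mv "$dir/hadoop-1.2.1" "$dir/hadoop"
}

# Usage (as root on hadoop0):
#   rename_packages /usr/local
```

A symlink (ln -s jdk1.6.0_45 jdk) would serve the same purpose while keeping the version visible; the rename simply follows this walkthrough.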

3. Configure the environment on the hadoop0 host:

JDK settings (added to /etc/profile):

export JAVA_HOME=/usr/local/jdk
export JRE_HOME=/usr/local/jdk/jre
export CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib
export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH

Hadoop settings:

# set hadoop environment
export HADOOP_HOME=/usr/local/hadoop
export PATH=$PATH:$HADOOP_HOME/bin
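Both blocks are appended to /etc/profile, the file copied to the other nodes in step 5. A sketch that appends them only once, so re-running it does not stack duplicate PATH entries; add_profile_block and its marker check are hypothetical.

```shell
#!/bin/sh
# Sketch: append the JDK and Hadoop settings to a profile file exactly once.
# add_profile_block is hypothetical; the marker comment detects an earlier run.
add_profile_block() {
  local profile="${1:-/etc/profile}"
  local marker='# set hadoop environment'
  # -F matches the marker literally; a missing file counts as "not found".
  if grep -qF "$marker" "$profile" 2>/dev/null; then
    return 0
  fi
  # Quoted 'EOF' keeps $JAVA_HOME, $PATH, etc. literal in the written file.
  cat >> "$profile" <<'EOF'
# set java environment
export JAVA_HOME=/usr/local/jdk
export JRE_HOME=/usr/local/jdk/jre
export CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib
export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH
# set hadoop environment
export HADOOP_HOME=/usr/local/hadoop
export PATH=$PATH:$HADOOP_HOME/bin
EOF
}

# Usage (as root on hadoop0):
#   add_profile_block /etc/profile && . /etc/profile && java -version
```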

Screenshot of the configuration:

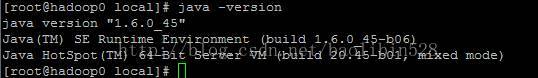

Check the JDK version:

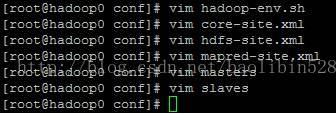

4. Next, edit the following files under $HADOOP_HOME/conf, one by one:

4.1 hadoop-env.sh:

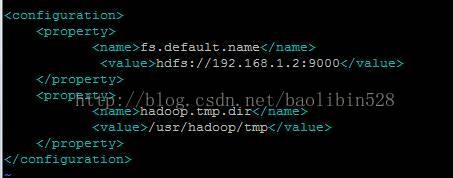
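The usual edit in hadoop-env.sh is to set JAVA_HOME. Daemons that start-all.sh launches over ssh on the slave nodes may not pick up /etc/profile, so the value in this file is the one Hadoop reliably sees. A sketch assuming the /usr/local/jdk path from step 3 and GNU sed (set_java_home is hypothetical):

```shell
#!/bin/sh
# Sketch: set (or replace) the JAVA_HOME export in hadoop-env.sh.
# Uses GNU sed -i, as found on CentOS/RHEL.
set_java_home() {
  local env_file="$1"                  # e.g. /usr/local/hadoop/conf/hadoop-env.sh
  local java_home="${2:-/usr/local/jdk}"
  if grep -q '^export JAVA_HOME=' "$env_file"; then
    # Replace the existing uncommented export.
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$java_home|" "$env_file"
  else
    # Otherwise (for example only a commented-out line exists), append one.
    printf 'export JAVA_HOME=%s\n' "$java_home" >> "$env_file"
  fi
}

# Usage (on hadoop0):
#   set_java_home /usr/local/hadoop/conf/hadoop-env.sh
```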

4.2 core-site.xml:
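The core-site.xml screenshot is missing. A minimal file consistent with the rest of this post might look like the output below: the format log in step 6 places the name directory at /usr/hadoop/tmp/dfs/name, which fits hadoop.tmp.dir=/usr/hadoop/tmp, and the NameNode runs on hadoop0 (192.168.1.2). Port 9000 is an assumption; the original value is not visible. core_site_xml is a hypothetical helper that prints the file.

```shell
#!/bin/sh
# Sketch: print a minimal core-site.xml for this cluster.
# Assumption: NameNode RPC port 9000 (not visible in the original post).
core_site_xml() {
  local namenode="$1"   # NameNode host: hadoop0 = 192.168.1.2
  local tmp_dir="$2"    # base for dfs/name etc.; the step 6 log implies /usr/hadoop/tmp
  cat <<EOF
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://$namenode:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>$tmp_dir</value>
  </property>
</configuration>
EOF
}

# Usage (on hadoop0):
#   core_site_xml 192.168.1.2 /usr/hadoop/tmp > /usr/local/hadoop/conf/core-site.xml
```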

4.3 hdfs-site.xml:
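The hdfs-site.xml screenshot is missing too. A sketch: dfs.permissions=false is consistent with the isPermissionEnabled=false line in the step 6 log, while dfs.replication=2 (one copy per DataNode, hadoop1 and hadoop2) is an assumption. hdfs_site_xml is hypothetical.

```shell
#!/bin/sh
# Sketch: print a minimal hdfs-site.xml.
# Assumption: replication 2, one copy on each of the two DataNodes.
# dfs.permissions=false matches "isPermissionEnabled=false" in the step 6 log.
hdfs_site_xml() {
  local replication="${1:-2}"
  cat <<EOF
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>$replication</value>
  </property>
  <property>
    <name>dfs.permissions</name>
    <value>false</value>
  </property>
</configuration>
EOF
}

# Usage (on hadoop0):
#   hdfs_site_xml 2 > /usr/local/hadoop/conf/hdfs-site.xml
```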

4.4 mapred-site.xml:
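For mapred-site.xml, Hadoop 1.x expects mapred.job.tracker as host:port without a URI scheme. A sketch with the JobTracker on hadoop0, where step 7 shows it starting; port 9001 is an assumption and mapred_site_xml is hypothetical.

```shell
#!/bin/sh
# Sketch: print a minimal mapred-site.xml.
# Hadoop 1.x reads mapred.job.tracker as plain host:port (no hdfs:// prefix).
# Assumption: JobTracker port 9001 (not visible in the original post).
mapred_site_xml() {
  local jobtracker="$1"   # JobTracker host: hadoop0 = 192.168.1.2
  cat <<EOF
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>$jobtracker:9001</value>
  </property>
</configuration>
EOF
}

# Usage (on hadoop0):
#   mapred_site_xml 192.168.1.2 > /usr/local/hadoop/conf/mapred-site.xml
```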

4.5 masters:

4.6 slaves:
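In Hadoop 1.x, conf/masters does not name the NameNode: it lists the hosts where start-dfs.sh starts the SecondaryNameNode, while conf/slaves lists the DataNode and TaskTracker hosts. The startup output in step 7 (secondarynamenode on 192.168.1.2, datanode and tasktracker on 192.168.1.3 and 192.168.1.4) implies the contents below; write_host_lists is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: write conf/masters and conf/slaves for this three-node cluster.
# Host addresses are taken from the start-all.sh output in step 7.
write_host_lists() {
  local conf_dir="$1"   # e.g. /usr/local/hadoop/conf
  # masters: where the SecondaryNameNode runs (hadoop0)
  printf '%s\n' 192.168.1.2 > "$conf_dir/masters"
  # slaves: where DataNodes and TaskTrackers run (hadoop1, hadoop2)
  printf '%s\n' 192.168.1.3 192.168.1.4 > "$conf_dir/slaves"
}

# Usage (on hadoop0):
#   write_host_lists /usr/local/hadoop/conf
```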

5. Copy /etc/profile, the JDK, and Hadoop from hadoop0 to hadoop1 and hadoop2:
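Sections 5.1 through 5.6 boil down to three scp commands per node. A sketch as one loop, assuming hadoop1 and hadoop2 resolve from hadoop0 and root can log in to them over ssh; sync_to_nodes and its RUN dry-run switch are hypothetical. By default it only prints the commands.

```shell
#!/bin/sh
# Sketch: the copies from 5.1 to 5.6 as one loop, run as root on hadoop0.
# With RUN unset the commands are only printed (dry run);
# call with RUN= (empty) to actually execute them.
sync_to_nodes() {
  local run="${RUN-echo}"
  local node
  for node in "$@"; do
    $run scp -r /usr/local/jdk "root@$node:/usr/local/"
    $run scp -r /usr/local/hadoop "root@$node:/usr/local/"
    $run scp /etc/profile "root@$node:/etc/profile"
  done
}

# Usage:
#   sync_to_nodes hadoop1 hadoop2          # print the scp commands
#   RUN= sync_to_nodes hadoop1 hadoop2     # actually copy
```

Because the whole hadoop directory is copied, the conf files edited in step 4 travel with it.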

5.1 Copy the JDK to hadoop1:

The copy succeeded.

5.2 Copy the JDK to hadoop2:

The copy succeeded:

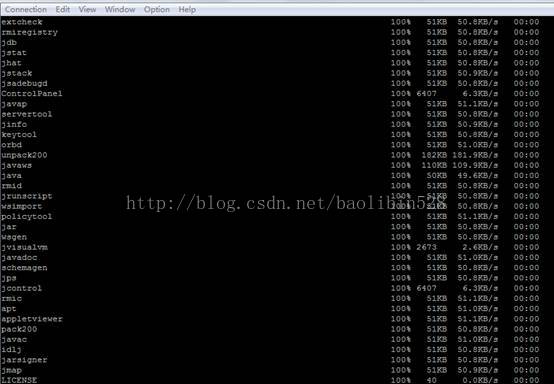

5.3 Copy Hadoop to hadoop1:

The copy succeeded:

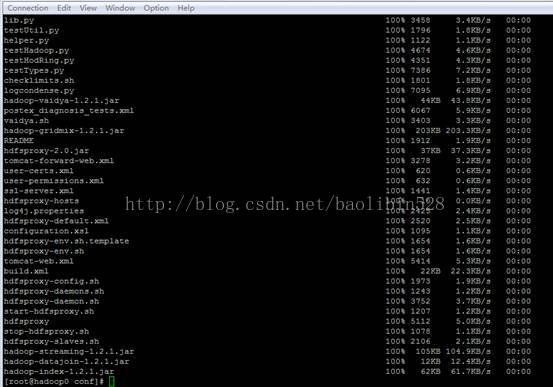

5.4 Copy Hadoop to hadoop2:

The copy succeeded.

5.5 Copy the /etc/profile file:

To hadoop1:

The copy process:

On hadoop1, run source /etc/profile so that the new settings take effect:

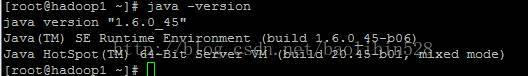

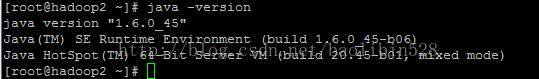

Check the Java version:

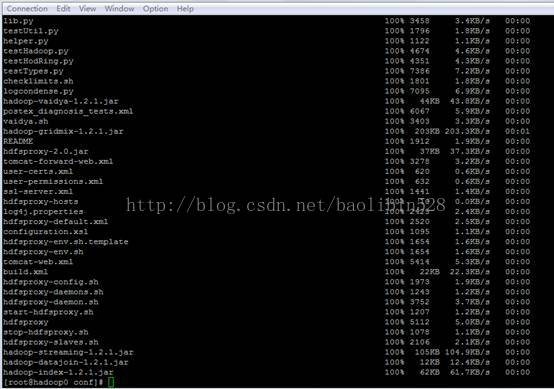

5.6 Copy /etc/profile to hadoop2:

The copy process:

On hadoop2, run source /etc/profile so that the new settings take effect:

Check the Java version:

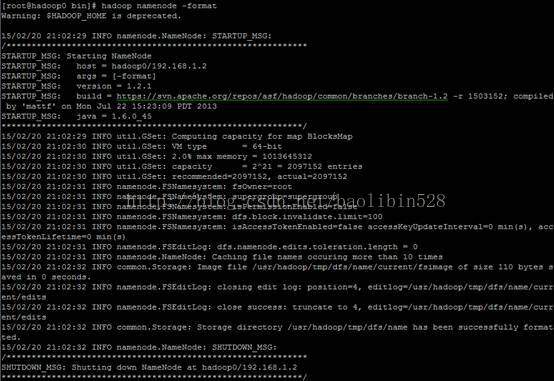
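After reloading the profile on a node (source /etc/profile), a quick check that both variables point at real installs and that java and hadoop are on PATH. A sketch; check_env is hypothetical.

```shell
#!/bin/sh
# Sketch: run on each node after ". /etc/profile".
check_env() {
  # Test for non-empty first: an empty JAVA_HOME would turn the path into /bin/java.
  if [ -z "${JAVA_HOME:-}" ] || [ ! -x "$JAVA_HOME/bin/java" ]; then
    echo "JAVA_HOME does not point at a JDK: '${JAVA_HOME:-}'" >&2
    return 1
  fi
  if [ -z "${HADOOP_HOME:-}" ] || [ ! -x "$HADOOP_HOME/bin/hadoop" ]; then
    echo "HADOOP_HOME does not point at Hadoop: '${HADOOP_HOME:-}'" >&2
    return 1
  fi
  # /etc/profile also puts both bin directories on PATH.
  command -v java >/dev/null 2>&1 && command -v hadoop >/dev/null 2>&1
}

# Usage (on hadoop1 or hadoop2):
#   . /etc/profile && check_env && java -version
```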

6. Format HDFS (the NameNode) on hadoop0:

Output of the format command:

[root@hadoop0 bin]# hadoop namenode -format
Warning: $HADOOP_HOME is deprecated.
15/02/20 21:02:29 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = hadoop0/192.168.1.2
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 1.2.1
STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.2 -r 1503152; compiled by 'mattf' on Mon Jul 22 15:23:09 PDT 2013
STARTUP_MSG: java = 1.6.0_45
************************************************************/
15/02/20 21:02:29 INFO util.GSet: Computing capacity for map BlocksMap
15/02/20 21:02:30 INFO util.GSet: VM type = 64-bit
15/02/20 21:02:30 INFO util.GSet: 2.0% max memory = 1013645312
15/02/20 21:02:30 INFO util.GSet: capacity = 2^21 = 2097152 entries
15/02/20 21:02:30 INFO util.GSet: recommended=2097152, actual=2097152
15/02/20 21:02:31 INFO namenode.FSNamesystem: fsOwner=root
15/02/20 21:02:31 INFO namenode.FSNamesystem: supergroup=supergroup
15/02/20 21:02:31 INFO namenode.FSNamesystem: isPermissionEnabled=false
15/02/20 21:02:31 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100
15/02/20 21:02:31 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)
15/02/20 21:02:31 INFO namenode.FSEditLog: dfs.namenode.edits.toleration.length = 0
15/02/20 21:02:31 INFO namenode.NameNode: Caching file names occuring more than 10 times
15/02/20 21:02:32 INFO common.Storage: Image file /usr/hadoop/tmp/dfs/name/current/fsimage of size 110 bytes saved in 0 seconds.
15/02/20 21:02:32 INFO namenode.FSEditLog: closing edit log: position=4, editlog=/usr/hadoop/tmp/dfs/name/current/edits
15/02/20 21:02:32 INFO namenode.FSEditLog: close success: truncate to 4, editlog=/usr/hadoop/tmp/dfs/name/current/edits
15/02/20 21:02:32 INFO common.Storage: Storage directory /usr/hadoop/tmp/dfs/name has been successfully formatted.
15/02/20 21:02:32 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at hadoop0/192.168.1.2
************************************************************/
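Run the format only once. Formatting again writes a new namespaceID, and DataNodes initialized under the old one then fail to start (their logs report "Incompatible namespaceIDs"). A small guard, assuming the name directory /usr/hadoop/tmp/dfs/name printed above; is_formatted is hypothetical.

```shell
#!/bin/sh
# Sketch: guard against formatting HDFS a second time by mistake.
# A formatted NameNode directory holds current/VERSION next to the
# fsimage and edits files named in the format log.
is_formatted() {
  [ -f "$1/current/VERSION" ]
}

# Usage on hadoop0 (name directory taken from the format log):
#   is_formatted /usr/hadoop/tmp/dfs/name || hadoop namenode -format
```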

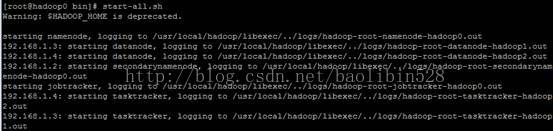

7. Start Hadoop on hadoop0:

Startup output:

[root@hadoop0 bin]# start-all.sh
Warning: $HADOOP_HOME is deprecated.
starting namenode, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-namenode-hadoop0.out
192.168.1.3: starting datanode, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-datanode-hadoop1.out
192.168.1.4: starting datanode, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-datanode-hadoop2.out
192.168.1.2: starting secondarynamenode, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-secondarynamenode-hadoop0.out
starting jobtracker, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-jobtracker-hadoop0.out
192.168.1.4: starting tasktracker, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-tasktracker-hadoop2.out
192.168.1.3: starting tasktracker, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-tasktracker-hadoop1.out
[root@hadoop0 bin]#

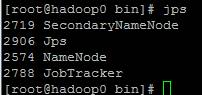

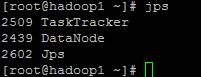

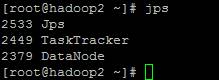

8. Check the running daemons on hadoop0 with jps; they started normally:

[root@hadoop0 bin]# jps
2719 SecondaryNameNode
2906 Jps
2574 NameNode
2788 JobTracker
[root@hadoop0 bin]#

Check the daemons on hadoop1; they started normally:

[root@hadoop1 ~]# jps
2509 TaskTracker
2439 DataNode
2602 Jps
[root@hadoop1 ~]#

Check the daemons on hadoop2; they started normally:

[root@hadoop2 ~]# jps
2533 Jps
2449 TaskTracker
2379 DataNode
[root@hadoop2 ~]#
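The three jps listings can also be checked mechanically. A sketch that prints each expected daemon missing from a jps listing (missing_daemons is hypothetical; the expected sets are the ones shown in this step: NameNode, SecondaryNameNode and JobTracker on hadoop0, DataNode and TaskTracker on hadoop1 and hadoop2).

```shell
#!/bin/sh
# Sketch: print every expected daemon that does not appear in jps output.
# jps prints one "<pid> <MainClass>" line per running JVM.
missing_daemons() {
  local jps_out="$1"
  shift
  local d
  for d in "$@"; do
    # Anchor on "<digits> <name>" so NameNode does not match SecondaryNameNode.
    printf '%s\n' "$jps_out" | grep -qE "^[0-9]+ $d\$" || printf '%s\n' "$d"
  done
}

# Usage on hadoop0 (on hadoop1/hadoop2 pass DataNode TaskTracker instead):
#   m=$(missing_daemons "$(jps)" NameNode SecondaryNameNode JobTracker)
#   [ -z "$m" ] && echo "all daemons running" || printf 'missing:\n%s\n' "$m"
```

Given the hadoop0 listing above, missing_daemons prints nothing for NameNode SecondaryNameNode JobTracker.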

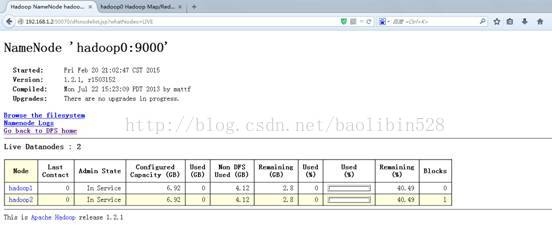

9. Check the NameNode web UI:

Address: http://192.168.1.2:50070

Click Live Nodes under Cluster Summary:

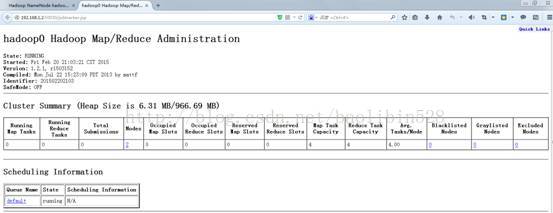

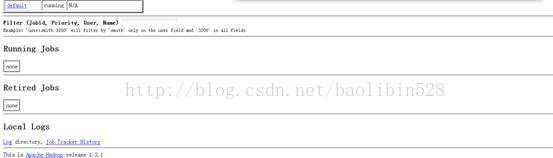

Check the JobTracker web UI:

Address: http://192.168.1.2:50030

The same page:

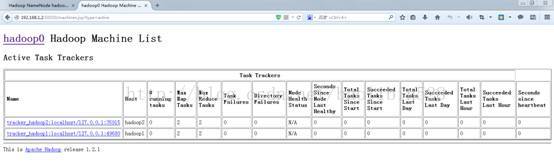

Click Nodes under Cluster Summary:

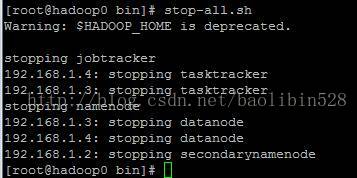

Shut down the cluster by running stop-all.sh on hadoop0:

The cluster setup is complete.