Hadoop 2.6.0 HA Cluster Setup

1. Install and clone four virtual machines

Prepare four virtual machines:

192.168.1.2  hadoop000  NameNode
192.168.1.3  hadoop111  NameNode, DataNode, JournalNode
192.168.1.4  hadoop222  DataNode, JournalNode
192.168.1.5  hadoop333  DataNode, JournalNode

Subnet mask: 255.255.255.0

Gateway: 192.168.1.1

Software used:

jdk-8u25-linux-x64.gz

hadoop-2.6.0.tar.gz
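
Every later step addresses the machines by hostname, so each node has to resolve hadoop000 through hadoop333. The original does not say how name resolution is set up; the sketch below assumes /etc/hosts is used. The function name missing_host_entries is hypothetical, and the IP/hostname pairs come from the host table above.

```shell
# Assumption: hostnames are resolved through /etc/hosts (not stated in the original).
CLUSTER_HOSTS='192.168.1.2 hadoop000
192.168.1.3 hadoop111
192.168.1.4 hadoop222
192.168.1.5 hadoop333'

# missing_host_entries HOSTS_FILE
# Prints every cluster entry that is not yet present as an exact line in HOSTS_FILE.
missing_host_entries() {
    printf '%s\n' "$CLUSTER_HOSTS" | while IFS= read -r entry; do
        grep -qxF "$entry" "$1" 2>/dev/null || printf '%s\n' "$entry"
    done
}
```

Running `missing_host_entries /etc/hosts > /tmp/new_hosts` and then `cat /tmp/new_hosts >> /etc/hosts` as root on each node would add only the lines that are missing. The match is exact, so an existing entry with different spacing is reported as missing.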

2. Configure passwordless SSH login

Generate a key pair without a passphrase (shown here on hadoop333; repeat on every node):

[root@hadoop333 ~]# ssh-keygen -t rsa

Append id_rsa.pub to the authorized keys:

[root@hadoop333 ~]# cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Enable passwordless login from hadoop111, hadoop222, and hadoop333 to hadoop000:

[root@hadoop333 ~]# ssh-copy-id -i hadoop000
[root@hadoop222 ~]# ssh-copy-id -i hadoop000
[root@hadoop111 ~]# ssh-copy-id -i hadoop000

Enable passwordless login from hadoop000 to hadoop111, hadoop222, and hadoop333 by copying the collected authorized_keys file back to them:

[root@hadoop000 ~]# scp /root/.ssh/authorized_keys hadoop111:/root/.ssh/
[root@hadoop000 ~]# scp /root/.ssh/authorized_keys hadoop222:/root/.ssh/
[root@hadoop000 ~]# scp /root/.ssh/authorized_keys hadoop333:/root/.ssh/
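
Before moving on, it can help to confirm that no host still asks for a password. The following is a minimal sketch, not part of the original procedure: the function name ssh_probe_commands is hypothetical, and it only prints the probe commands so they can be reviewed first. BatchMode=yes makes ssh fail instead of prompting, and -n keeps ssh from reading the rest of the script from standard input.

```shell
# Host names taken from the host table in section 1.
NODES="hadoop000 hadoop111 hadoop222 hadoop333"

# Print one non-interactive ssh probe per node.
ssh_probe_commands() {
    for node in $NODES; do
        printf 'ssh -n -o BatchMode=yes -o ConnectTimeout=5 %s hostname\n' "$node"
    done
}

ssh_probe_commands
```

Piping the output to a shell (`ssh_probe_commands | sh`) on a given node runs the probes; each should print the remote hostname without a password prompt.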

3. Install the JDK

Extract the JDK and Hadoop archives, then rename the directories and set their permissions:

[root@hadoop000 local]# ll
total 347836
drwxr-xr-x. 9 root root 4096 Nov 14 05:20 hadoop
drwxr-xr-x. 8 root root 4096 Sep 18 08:44 jdk
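
The listing above shows only the result of the extract-and-rename step. A minimal sketch of how it could be done follows; the helper name install_archive is hypothetical, and the extracted directory names jdk1.8.0_25 and hadoop-2.6.0 in the usage comments are assumptions about the archive contents. The original does not say which permissions were changed, so that part is left out.

```shell
# install_archive ARCHIVE EXTRACTED_DIR TARGET_NAME [PREFIX]
# Unpacks ARCHIVE under PREFIX (default /usr/local) and renames the
# extracted directory to TARGET_NAME. Refuses to overwrite an existing target.
install_archive() {
    ia_prefix="${4:-/usr/local}"
    [ -e "$ia_prefix/$3" ] && return 1
    tar -xzf "$1" -C "$ia_prefix" || return 1
    mv "$ia_prefix/$2" "$ia_prefix/$3"
}

# Assumed usage for this tutorial (directory names are assumptions):
#   install_archive jdk-8u25-linux-x64.gz jdk1.8.0_25 jdk
#   install_archive hadoop-2.6.0.tar.gz hadoop-2.6.0 hadoop
```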

Configure the JDK environment variables:

[root@hadoop000 local]# vim /etc/profile

Contents to add:

export JAVA_HOME=/usr/local/jdk
export JRE_HOME=/usr/local/jdk/jre
export CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib
export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH

Apply the configuration:

[root@hadoop000 local]# source /etc/profile

Check the JDK version:

[root@hadoop000 local]# java -version
java version "1.8.0_25"
Java(TM) SE Runtime Environment (build 1.8.0_25-b17)
Java HotSpot(TM) 64-Bit Server VM (build 25.25-b02, mixed mode)
[root@hadoop000 local]#

Copy the JDK from hadoop000 to the other three machines:

scp -r /usr/local/jdk hadoop111:/usr/local/
scp -r /usr/local/jdk hadoop222:/usr/local/
scp -r /usr/local/jdk hadoop333:/usr/local/

Copy /etc/profile from hadoop000 to the other three hosts:

[root@hadoop000 hadoop]# scp /etc/profile hadoop111:/etc/
profile 100% 1993 2.0KB/s 00:00
[root@hadoop000 hadoop]# scp /etc/profile hadoop222:/etc/
profile 100% 1993 2.0KB/s 00:00
[root@hadoop000 hadoop]# scp /etc/profile hadoop333:/etc/
profile 100% 1993 2.0KB/s 00:00
[root@hadoop000 hadoop]#

On each of the other three hosts, apply the configuration:

[root@hadoop111 ~]# source /etc/profile

Check the JDK version:

[root@hadoop111 ~]# java -version
java version "1.8.0_25"
Java(TM) SE Runtime Environment (build 1.8.0_25-b17)
Java HotSpot(TM) 64-Bit Server VM (build 25.25-b02, mixed mode)
[root@hadoop111 ~]#
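
The copy and verification steps in this section repeat once per host, so they can be generated in a loop. This is a sketch under the assumption that passwordless SSH from hadoop000 already works; the function name jdk_rollout_commands is hypothetical. It prints the commands rather than running them, so the list can be checked first.

```shell
# The three hosts that receive the JDK from hadoop000.
WORKERS="hadoop111 hadoop222 hadoop333"

# Print the copy-and-verify commands for each host.
jdk_rollout_commands() {
    for host in $WORKERS; do
        printf 'scp -r /usr/local/jdk %s:/usr/local/\n' "$host"
        printf 'scp /etc/profile %s:/etc/\n' "$host"
        # Load the copied profile in the remote shell, then report the Java version.
        printf 'ssh -n %s ". /etc/profile && java -version"\n' "$host"
    done
}
```

One way to use it is `jdk_rollout_commands > rollout.sh` followed by `sh rollout.sh` on hadoop000.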

4. Install Hadoop:

Change to the directory that holds the configuration files:

[root@hadoop000 hadoop]# pwd
/usr/local/hadoop/etc/hadoop

4.1. List the Hadoop configuration files:

[root@hadoop000 hadoop]# ls
capacity-scheduler.xml  hadoop-metrics2.properties  httpfs-signature.secret  log4j.properties            ssl-client.xml.example
configuration.xsl       hadoop-metrics.properties   httpfs-site.xml          mapred-env.cmd              ssl-server.xml.example
container-executor.cfg  hadoop-policy.xml           kms-acls.xml             mapred-env.sh               yarn-env.cmd
core-site.xml           hdfs-site.xml               kms-env.sh               mapred-queues.xml.template  yarn-env.sh
hadoop-env.cmd          httpfs-env.sh               kms-log4j.properties     mapred-site.xml.template    yarn-site.xml
hadoop-env.sh           httpfs-log4j.properties     kms-site.xml             slaves
[root@hadoop000 hadoop]#

4.2. Configure hadoop-env.sh:

[root@hadoop000 hadoop]# vim hadoop-env.sh

Contents:

# The java implementation to use.
export JAVA_HOME=/usr/local/jdk

4.3. Configure core-site.xml:

[root@hadoop000 hadoop]# vim core-site.xml

Contents:

<property>
  <name>fs.defaultFS</name>
  <value>hdfs://hadoop000:8020</value>
  <description>The name of the default file system. A URI whose scheme and authority determine the FileSystem implementation. The uri's scheme determines the config property (fs.SCHEME.impl) naming the FileSystem implementation class. The uri's authority is used to determine the host, port, etc. for a filesystem.</description>
</property>

The following directories do not need to be created in advance because they are generated automatically; the commands are shown for reference:

[root@hadoop000 hadoop]# mkdir /home/hadoop/hdfs
[root@hadoop000 hadoop]# mkdir /home/hadoop/hdfs/name
[root@hadoop000 hadoop]# mkdir /home/hadoop/hdfs/data
[root@hadoop000 hadoop]# mkdir /home/hadoop/hdfs/journal
[root@hadoop000 hadoop]#

4.4. Configure hdfs-site.xml:

[root@hadoop000 hadoop]# vim hdfs-site.xml

Contents:

<property>
  <name>dfs.nameservices</name>
  <value>hadoop-test</value>
  <description>
    Comma-separated list of nameservices.
  </description>
</property>
<property>
  <name>dfs.ha.namenodes.hadoop-test</name>
  <value>nn1,nn2</value>
  <description>
    The prefix for a given nameservice, contains a comma-separated
    list of namenodes for a given nameservice (eg EXAMPLENAMESERVICE).
  </description>
</property>
<property>
  <name>dfs.namenode.rpc-address.hadoop-test.nn1</name>
  <value>hadoop000:8020</value>
  <description>
    RPC address for namenode1 of hadoop-test
  </description>
</property>
<property>
  <name>dfs.namenode.rpc-address.hadoop-test.nn2</name>
  <value>hadoop111:8020</value>
  <description>
    RPC address for namenode2 of hadoop-test
  </description>
</property>
<property>
  <name>dfs.namenode.http-address.hadoop-test.nn1</name>
  <value>hadoop000:50070</value>
  <description>
    The address and the base port where the dfs namenode1 web ui will listen on.
  </description>
</property>
<property>
  <name>dfs.namenode.http-address.hadoop-test.nn2</name>
  <value>hadoop111:50070</value>
  <description>
    The address and the base port where the dfs namenode2 web ui will listen on.
  </description>
</property>
<property>
  <name>dfs.namenode.name.dir</name>
  <value>file:///home/hadoop/hdfs/name</value>
  <description>Determines where on the local filesystem the DFS name node
    should store the name table (fsimage). If this is a comma-delimited list
    of directories then the name table is replicated in all of the
    directories, for redundancy.</description>
</property>

<property>
  <name>dfs.namenode.shared.edits.dir</name>
  <value>qjournal://hadoop111:8485;hadoop222:8485;hadoop333:8485/hadoop-test</value>
  <description>A directory on shared storage between the multiple namenodes
    in an HA cluster. This directory will be written by the active and read
    by the standby in order to keep the namespaces synchronized. This directory
    does not need to be listed in dfs.namenode.edits.dir above. It should be
    left empty in a non-HA cluster.
  </description>
</property>

<property>
  <name>dfs.datanode.data.dir</name>
  <value>file:///home/hadoop/hdfs/data</value>
  <description>Determines where on the local filesystem an DFS data node
    should store its blocks. If this is a comma-delimited
    list of directories, then data will be stored in all named
    directories, typically on different devices.
    Directories that do not exist are ignored.
  </description>
</property>
<property>
  <name>dfs.ha.automatic-failover.enabled</name>
  <value>false</value>
  <description>
    Whether automatic failover is enabled. See the HDFS High
    Availability documentation for details on automatic HA
    configuration.
  </description>
</property>
<property>
  <name>dfs.journalnode.edits.dir</name>
  <value>/home/hadoop/hdfs/journal/</value>
</property>
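
The value of dfs.namenode.shared.edits.dir follows the pattern qjournal://host1:port;host2:port;host3:port/nameservice, with one entry per JournalNode. As an illustration only, the sketch below builds that string from a nameservice and a host list. The function name qjournal_uri is hypothetical, port 8485 is the one used in this configuration, and the hosts in the example call are the JournalNode hosts from the table in section 1.

```shell
# qjournal_uri NAMESERVICE HOST...
# Prints the quorum journal URI for the given nameservice and JournalNode hosts.
qjournal_uri() {
    qj_ns="$1"
    shift
    qj_hosts=""
    for qj_host in "$@"; do
        # Join the host:port pairs with ';' (no leading separator).
        qj_hosts="${qj_hosts:+$qj_hosts;}$qj_host:8485"
    done
    printf 'qjournal://%s/%s\n' "$qj_hosts" "$qj_ns"
}

qjournal_uri hadoop-test hadoop111 hadoop222 hadoop333
```

Comparing the printed string with the configured value is a quick way to catch a host that appears in the URI but does not run a JournalNode.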

4.5. Create mapred-site.xml from the template:

[root@hadoop000 hadoop]# cp mapred-site.xml.template mapred-site.xml

Configure mapred-site.xml:

[root@hadoop000 hadoop]# vim mapred-site.xml

Contents:

<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
  <description>The runtime framework for executing MapReduce jobs. Can be one of local, classic or yarn.</description>
</property>
<!-- jobhistory properties -->
<property>
  <name>mapreduce.jobhistory.address</name>
  <value>hadoop111:10020</value>
  <description>MapReduce JobHistory Server IPC host:port</description>
</property>
<property>
  <name>mapreduce.jobhistory.webapp.address</name>
  <value>hadoop111:19888</value>
  <description>MapReduce JobHistory Server Web UI host:port</description>
</property>

4.6. Configure yarn-site.xml:

[root@hadoop000 hadoop]# vim yarn-site.xml

Contents:

<property>
  <description>The hostname of the RM.</description>
  <name>yarn.resourcemanager.hostname</name>
  <value>hadoop000</value>
</property>
<property>
  <description>The address of the applications manager interface in the RM.</description>
  <name>yarn.resourcemanager.address</name>
  <value>${yarn.resourcemanager.hostname}:8032</value>
</property>
<property>
  <description>The address of the scheduler interface.</description>
  <name>yarn.resourcemanager.scheduler.address</name>
  <value>${yarn.resourcemanager.hostname}:8030</value>
</property>
<property>
  <description>The http address of the RM web application.</description>
  <name>yarn.resourcemanager.webapp.address</name>
  <value>${yarn.resourcemanager.hostname}:8088</value>
</property>
<property>
  <description>The https address of the RM web application.</description>
  <name>yarn.resourcemanager.webapp.https.address</name>
  <value>${yarn.resourcemanager.hostname}:8090</value>
</property>
<property>
  <name>yarn.resourcemanager.resource-tracker.address</name>
  <value>${yarn.resourcemanager.hostname}:8031</value>
</property>
<property>
  <description>The address of the RM admin interface.</description>
  <name>yarn.resourcemanager.admin.address</name>
  <value>${yarn.resourcemanager.hostname}:8033</value>
</property>
<property>
  <description>The class to use as the resource scheduler.</description>
  <name>yarn.resourcemanager.scheduler.class</name>
  <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>
</property>
<property>
  <description>fair-scheduler conf location</description>
  <name>yarn.scheduler.fair.allocation.file</name>
  <value>${yarn.home.dir}/etc/hadoop/fairscheduler.xml</value>
</property>

<property>
  <description>List of directories to store localized files in. An
    application's localized file directory will be found in:
    ${yarn.nodemanager.local-dirs}/usercache/${user}/appcache/application_${appid}.
    Individual containers' work directories, called container_${contid}, will
    be subdirectories of this.
  </description>
  <name>yarn.nodemanager.local-dirs</name>
  <value>/home/hadoop/yarn/local</value>
</property>
<property>
  <description>Whether to enable log aggregation</description>
  <name>yarn.log-aggregation-enable</name>
  <value>true</value>
</property>
<property>
  <description>Where to aggregate logs to.</description>
  <name>yarn.nodemanager.remote-app-log-dir</name>
  <value>/tmp/logs</value>
</property>
<property>
  <description>Amount of physical memory, in MB, that can be allocated
    for containers.</description>
  <name>yarn.nodemanager.resource.memory-mb</name>
  <value>30720</value>
</property>
<property>
  <description>Number of CPU cores that can be allocated
    for containers.</description>
  <name>yarn.nodemanager.resource.cpu-vcores</name>
  <value>12</value>
</property>
<property>
  <description>the valid service name should only contain a-zA-Z0-9_ and can not start with numbers</description>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>

The directories used here are also generated automatically if they are not created in advance:

[root@hadoop000 hadoop]# mkdir /home/hadoop/yarn
[root@hadoop000 hadoop]# mkdir /home/hadoop/yarn/local

4.7. Configure slaves:

[root@hadoop000 hadoop]# vim slaves

Contents:

hadoop111
hadoop222
hadoop333

4.8. Create fairscheduler.xml by copying an existing file:

[root@hadoop000 hadoop]# cp mapred-site.xml fairscheduler.xml

Configure fairscheduler.xml:

[root@hadoop000 hadoop]# vim fairscheduler.xml

Contents:

<?xml version="1.0"?>
<allocations>
  <queue name="infrastructure">
    <minResources>102400 mb, 50 vcores</minResources>
    <maxResources>153600 mb, 100 vcores</maxResources>
    <maxRunningApps>200</maxRunningApps>
    <minSharePreemptionTimeout>300</minSharePreemptionTimeout>
    <weight>1.0</weight>
    <aclSubmitApps>root,yarn,search,hdfs</aclSubmitApps>
  </queue>
  <queue name="tool">
    <minResources>102400 mb, 30 vcores</minResources>
    <maxResources>153600 mb, 50 vcores</maxResources>
  </queue>
  <queue name="sentiment">
    <minResources>102400 mb, 30 vcores</minResources>
    <maxResources>153600 mb, 50 vcores</maxResources>
  </queue>
</allocations>
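
The queue sizes in fairscheduler.xml can be compared with what the NodeManagers advertise. The sketch below is a rough arithmetic check, assuming that all three hosts in slaves run a NodeManager with the yarn-site.xml values above (30720 MB and 12 vcores each); the function names cluster_capacity and fits_cluster are hypothetical.

```shell
# cluster_capacity NODES MEM_MB_PER_NODE VCORES_PER_NODE
# Prints "<total_mb> <total_vcores>".
cluster_capacity() {
    echo "$(( $1 * $2 )) $(( $1 * $3 ))"
}

# fits_cluster REQUEST_MB REQUEST_VCORES TOTAL_MB TOTAL_VCORES
# Succeeds only if both the memory and the vcore request fit into the totals.
fits_cluster() {
    [ "$1" -le "$3" ] && [ "$2" -le "$4" ]
}

cluster_capacity 3 30720 12    # prints: 92160 36
```

Under these assumptions the cluster offers 92160 MB and 36 vcores in total, so `fits_cluster 102400 50 92160 36` fails: the minResources of the infrastructure queue is larger than the whole cluster. On small virtual machines the yarn-site.xml and queue values may need to be scaled down to the resources that are actually available.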

4.9. Copy the configured Hadoop directory from hadoop000 to the other three machines:

[root@hadoop000 hadoop]# scp -r /usr/local/hadoop hadoop111:/usr/local/
[root@hadoop000 hadoop]# scp -r /usr/local/hadoop hadoop222:/usr/local/
[root@hadoop000 hadoop]# scp -r /usr/local/hadoop hadoop333:/usr/local/

Change to the Hadoop directory before formatting and starting Hadoop:

[root@hadoop000 hadoop]# pwd
/usr/local/hadoop

5. Start the Hadoop cluster:

5.1. On each JournalNode host, start the journalnode service with the following command:

sbin/hadoop-daemon.sh start journalnode

Start it on hadoop111:

[root@hadoop111 hadoop]# sbin/hadoop-daemon.sh start journalnode
starting journalnode, logging to /usr/local/hadoop/logs/hadoop-root-journalnode-hadoop111.out
[root@hadoop111 hadoop]# jps
26001 Jps
25967 JournalNode
[root@hadoop111 hadoop]#

Start it on hadoop222:

[root@hadoop222 hadoop]# sbin/hadoop-daemon.sh start journalnode
starting journalnode, logging to /usr/local/hadoop/logs/hadoop-root-journalnode-hadoop222.out
[root@hadoop222 hadoop]# jps
25934 JournalNode
25967 Jps
[root@hadoop222 hadoop]#

Start it on hadoop333:

[root@hadoop333 hadoop]# sbin/hadoop-daemon.sh start journalnode
starting journalnode, logging to /usr/local/hadoop/logs/hadoop-root-journalnode-hadoop333.out
[root@hadoop333 hadoop]# jps
25932 JournalNode
25965 Jps
[root@hadoop333 hadoop]#
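
Each transcript above uses jps to confirm that the JournalNode process is running. That check can be scripted by filtering the jps listing. This is a minimal sketch; the function name jps_has_daemon is hypothetical, and it relies only on the "PID Name" layout of the jps output shown above.

```shell
# jps_has_daemon NAME
# Reads a jps listing on standard input and succeeds if a process
# with exactly that name appears in the second column.
jps_has_daemon() {
    awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}
```

On a node itself, `jps | jps_has_daemon JournalNode && echo running` reports the state. Running it remotely, for example `ssh -n hadoop111 jps | jps_has_daemon JournalNode`, additionally assumes that jps is on the PATH of the remote non-interactive shell.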

5.2. On [nn1], format the NameNode and start it:

bin/hdfs namenode -format

sbin/hadoop-daemon.sh start namenode

Format:

[root@hadoop000 hadoop]# bin/hdfs namenode -format
15/02/25 19:29:23 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = hadoop000/192.168.1.2
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 2.6.0
STARTUP_MSG: classpath = /usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/jersey-core-1.9.jar:[...]:/contrib/capacity-scheduler/*.jar
STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r e3496499ecb8d220fba99dc5ed4c99c8f9e33bb1; compiled by 'jenkins' on 2014-11-13T21:10Z
STARTUP_MSG: java = 1.8.0_25
************************************************************/
15/02/25 19:29:23 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]
15/02/25 19:29:23 INFO namenode.NameNode: createNameNode [-format]
15/02/25 19:29:26 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Formatting using clusterid: CID-39624de6-1dca-4881-8552-bc6453c2b0ec
15/02/25 19:29:27 INFO namenode.FSNamesystem: No KeyProvider found.
15/02/25 19:29:27 INFO namenode.FSNamesystem: fsLock is fair:true
15/02/25 19:29:27 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
15/02/25 19:29:27 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
15/02/25 19:29:27 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
15/02/25 19:29:27 INFO blockmanagement.BlockManager: The block deletion will start around 2015 Feb 25 19:29:27
15/02/25 19:29:27 INFO util.GSet: Computing capacity for map BlocksMap
15/02/25 19:29:27 INFO util.GSet: VM type = 64-bit
15/02/25 19:29:27 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB
15/02/25 19:29:27 INFO util.GSet: capacity = 2^21 = 2097152 entries
15/02/25 19:29:27 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
15/02/25 19:29:27 INFO blockmanagement.BlockManager: defaultReplication = 3
15/02/25 19:29:27 INFO blockmanagement.BlockManager: maxReplication = 512
15/02/25 19:29:27 INFO blockmanagement.BlockManager: minReplication = 1
15/02/25 19:29:27 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
15/02/25 19:29:27 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false
15/02/25 19:29:27 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
15/02/25 19:29:27 INFO blockmanagement.BlockManager: encryptDataTransfer = false
15/02/25 19:29:27 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
15/02/25 19:29:27 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)
15/02/25 19:29:27 INFO namenode.FSNamesystem: supergroup = supergroup
15/02/25 19:29:27 INFO namenode.FSNamesystem: isPermissionEnabled = true
15/02/25 19:29:27 INFO namenode.FSNamesystem: Determined nameservice ID: hadoop-test
15/02/25 19:29:27 INFO namenode.FSNamesystem: HA Enabled: true
15/02/25 19:29:27 INFO namenode.FSNamesystem: Append Enabled: true
15/02/25 19:29:29 INFO util.GSet: Computing capacity for map INodeMap
15/02/25 19:29:29 INFO util.GSet: VM type = 64-bit
15/02/25 19:29:29 INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB
15/02/25 19:29:29 INFO util.GSet: capacity = 2^20 = 1048576 entries
15/02/25 19:29:29 INFO namenode.NameNode: Caching file names occuring more than 10 times
15/02/25 19:29:29 INFO util.GSet: Computing capacity for map cachedBlocks
15/02/25 19:29:29 INFO util.GSet: VM type = 64-bit
15/02/25 19:29:29 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB
15/02/25 19:29:29 INFO util.GSet: capacity = 2^18 = 262144 entries
15/02/25 19:29:29 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
15/02/25 19:29:29 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
15/02/25 19:29:29 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000
15/02/25 19:29:29 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
15/02/25 19:29:29 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
15/02/25 19:29:29 INFO util.GSet: Computing capacity for map NameNodeRetryCache
15/02/25 19:29:29 INFO util.GSet: VM type = 64-bit
15/02/25 19:29:29 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB
15/02/25 19:29:29 INFO util.GSet: capacity = 2^15 = 32768 entries
15/02/25 19:29:29 INFO namenode.NNConf: ACLs enabled? false
15/02/25 19:29:29 INFO namenode.NNConf: XAttrs enabled? true
15/02/25 19:29:29 INFO namenode.NNConf: Maximum size of an xattr: 16384
15/02/25 19:29:36 INFO namenode.FSImage: Allocated new BlockPoolId: BP-244939959-192.168.1.2-1424863776620
15/02/25 19:29:36 INFO common.Storage: Storage directory /home/hadoop/hdfs/name has been successfully formatted.
15/02/25 19:29:40 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
15/02/25 19:29:40 INFO util.ExitUtil: Exiting with status 0
15/02/25 19:29:40 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at hadoop000/192.168.1.2
************************************************************/
[root@hadoop000 hadoop]#
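
In the format output, the line that confirms success is the common.Storage message saying the storage directory "has been successfully formatted", followed by an exit status of 0. Because the log is long, a small filter can look for that message. This is a sketch only; the function name format_succeeded is hypothetical.

```shell
# Reads a NameNode format log on standard input and succeeds
# if it contains the success message for the storage directory.
format_succeeded() {
    grep -q 'has been successfully formatted'
}
```

A possible use is `bin/hdfs namenode -format 2>&1 | tee format.log` followed by `format_succeeded < format.log && echo "format ok"`; redirecting stderr is included on the assumption that the console log is written there.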

Start:

[root@hadoop000 hadoop]# sbin/hadoop-daemon.sh start namenode
starting namenode, logging to /usr/local/hadoop/logs/hadoop-root-namenode-hadoop000.out
[root@hadoop000 hadoop]# jps
26432 Jps
26396 NameNode
[root@hadoop000 hadoop]#

5.3. On [nn2], synchronize the metadata from nn1:

bin/hdfs namenode -bootstrapStandby

[root@hadoop111 hadoop]# bin/hdfs namenode -bootstrapStandby

15/02/25 19:31:37 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = hadoop111/192.168.1.3

STARTUP_MSG: args = [-bootstrapStandby]

STARTUP_MSG: version = 2.6.0

STARTUP_MSG: classpath = /usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/jersey-core-1.9.jar:... (remaining Hadoop 2.6.0 common, hdfs, yarn and mapreduce jar entries omitted) ...:/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r e3496499ecb8d220fba99dc5ed4c99c8f9e33bb1; compiled by 'jenkins' on 2014-11-13T21:10Z

STARTUP_MSG: java = 1.8.0_25

************************************************************/

15/02/25 19:31:37 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

15/02/25 19:31:37 INFO namenode.NameNode: createNameNode [-bootstrapStandby]

15/02/25 19:31:40 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

=====================================================

About to bootstrap Standby ID nn2 from:

Nameservice ID: hadoop-test

Other Namenode ID: nn1

Other NN's HTTP address: http://hadoop000:50070

Other NN's IPC address: hadoop000/192.168.1.2:8020

Namespace ID: 391329372

Block pool ID: BP-244939959-192.168.1.2-1424863776620

Cluster ID: CID-39624de6-1dca-4881-8552-bc6453c2b0ec

Layout version: -60

=====================================================

15/02/25 19:31:44 INFO common.Storage: Storage directory /home/hadoop/hdfs/name has been successfully formatted.

15/02/25 19:31:49 INFO namenode.TransferFsImage: Opening connection to http://hadoop000:50070/imagetransfer?getimage=1&txid=0&storageInfo=-60:391329372:0:CID-39624de6-1dca-4881-8552-bc6453c2b0ec

15/02/25 19:31:49 INFO namenode.TransferFsImage: Image Transfer timeout configured to 60000 milliseconds

15/02/25 19:31:50 INFO namenode.TransferFsImage: Transfer took 0.01s at 0.00 KB/s

15/02/25 19:31:50 INFO namenode.TransferFsImage: Downloaded file fsimage.ckpt_0000000000000000000 size 351 bytes.

15/02/25 19:31:50 INFO util.ExitUtil: Exiting with status 0

15/02/25 19:31:50 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at hadoop111/192.168.1.3

************************************************************/

[root@hadoop111 hadoop]#
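The bootstrap run above ends with `Exiting with status 0`, and that status is also what the process returns to the shell. A minimal sketch of checking it in a script, assuming it is run from the Hadoop install directory on the standby host. `HDFS_CMD` and `bootstrap_standby` are illustrative names, not part of Hadoop:

```shell
# Path to the hdfs launcher; bin/hdfs matches the relative path used in this guide.
# HDFS_CMD is a hypothetical variable introduced for this sketch.
HDFS_CMD="${HDFS_CMD:-bin/hdfs}"

# Run the standby bootstrap and report success or failure from its exit code.
bootstrap_standby() {
  if "$HDFS_CMD" namenode -bootstrapStandby; then
    echo "bootstrapStandby succeeded"
  else
    echo "bootstrapStandby failed" >&2
    return 1
  fi
}

# Usage (on hadoop111, from /usr/local/hadoop):
#   bootstrap_standby && sbin/hadoop-daemon.sh start namenode
```

Chaining the two commands with `&&` means the standby NameNode is only started when the fsimage copy from nn1 actually worked.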

[root@hadoop111 hadoop]# sbin/hadoop-daemon.sh start namenode

starting namenode, logging to /usr/local/hadoop/logs/hadoop-root-namenode-hadoop111.out

[root@hadoop111 hadoop]# jps

26363 Jps

26333 NameNode

26206 JournalNode

[root@hadoop111 hadoop]#

5.4. Start [nn2]:

sbin/hadoop-daemon.sh start namenode

After the four steps above, both nn1 and nn2 are in the standby state.

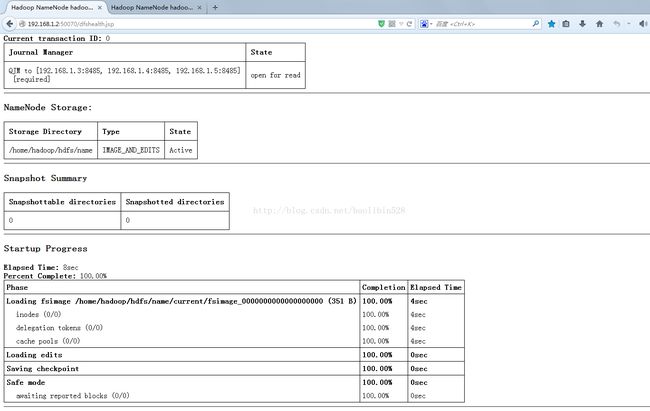

http://192.168.1.2:50070/dfshealth.jsp

http://192.168.1.3:50070/dfshealth.jsp

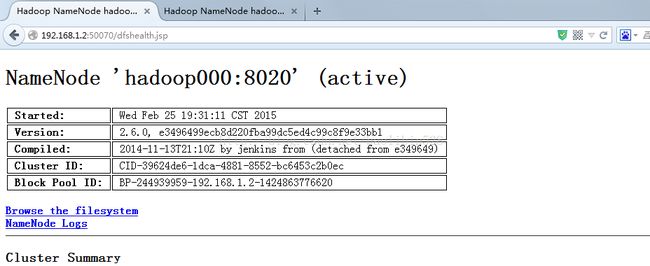
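Besides the web pages, the state of each NameNode can be read from the command line with `hdfs haadmin -getServiceState`. A small sketch, assuming it is run from the Hadoop install directory and that the NameNode IDs are `nn1` and `nn2` as in this guide. `HDFS_CMD` and `show_ha_states` are names made up for the example:

```shell
# Path to the hdfs launcher (hypothetical variable; defaults to the path used in this guide).
HDFS_CMD="${HDFS_CMD:-bin/hdfs}"

# Print "<id>: <state>" for every NameNode ID given as an argument.
show_ha_states() {
  for nn_id in "$@"; do
    state=$("$HDFS_CMD" haadmin -getServiceState "$nn_id")
    printf '%s: %s\n' "$nn_id" "$state"
  done
}

# Usage:
#   show_ha_states nn1 nn2
```

At this point in the walkthrough both lines should report `standby`.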

5.5. Switch [nn1] to Active

bin/hdfs haadmin -transitionToActive nn1

[root@hadoop000 hadoop]# bin/hdfs haadmin -transitionToActive nn1

15/02/25 19:36:52 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@hadoop000 hadoop]# jps

26596 Jps

26396 NameNode

[root@hadoop000 hadoop]#

http://192.168.1.2:50070/dfshealth.jsp

nn1 is now active:

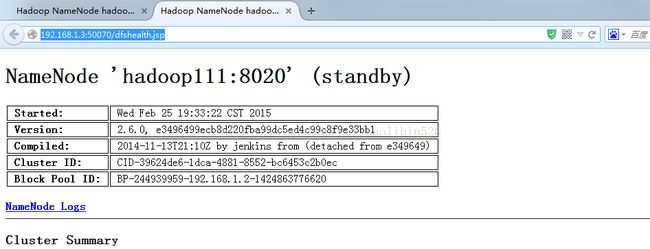

http://192.168.1.3:50070/dfshealth.jsp
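The manual switch can also be confirmed from the shell instead of the web UI. The sketch below runs the transition and then reads the state back. It assumes manual failover (as configured in this guide) and that log warnings such as the NativeCodeLoader message go to stderr, so stdout contains only the state. `HDFS_CMD` and `activate_namenode` are illustrative names:

```shell
# Path to the hdfs launcher (hypothetical variable; defaults to the path used in this guide).
HDFS_CMD="${HDFS_CMD:-bin/hdfs}"

# Transition the given NameNode ID to active, then verify the reported state.
activate_namenode() {
  nn_id=$1
  "$HDFS_CMD" haadmin -transitionToActive "$nn_id" || return 1
  state=$("$HDFS_CMD" haadmin -getServiceState "$nn_id")
  if [ "$state" = "active" ]; then
    echo "$nn_id is active"
  else
    echo "$nn_id is still $state" >&2
    return 1
  fi
}

# Usage:
#   activate_namenode nn1
```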

5.6. On [nn1], start all DataNodes

sbin/hadoop-daemons.sh start datanode

[root@hadoop000 hadoop]# sbin/hadoop-daemons.sh start datanode

hadoop333: starting datanode, logging to /usr/local/hadoop/logs/hadoop-root-datanode-hadoop333.out

hadoop222: starting datanode, logging to /usr/local/hadoop/logs/hadoop-root-datanode-hadoop222.out

hadoop111: starting datanode, logging to /usr/local/hadoop/logs/hadoop-root-datanode-hadoop111.out

[root@hadoop000 hadoop]#

Check the processes on hadoop000:

[root@hadoop000 hadoop]# jps

26547 Jps

26333 NameNode

[root@hadoop000 hadoop]#

Check the processes on hadoop111:

[root@hadoop111 hadoop]# jps

26547 Jps

26507 DataNode

26333 NameNode

26206 JournalNode

[root@hadoop111 hadoop]#

Check the processes on hadoop222:

[root@hadoop222 hadoop]# jps

26080 JournalNode

26183 DataNode

26221 Jps

[root@hadoop222 hadoop]#

Check the processes on hadoop333:

[root@hadoop333 hadoop]# jps

26179 DataNode

26077 JournalNode

26254 Jps

[root@hadoop333 hadoop]#
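Checking four `jps` listings by eye is easy to get wrong, so the comparison can be scripted. The sketch below reads `jps` output on stdin and prints every expected daemon that is missing. The function name `missing_daemons` is made up for this example; the per-host roles in the usage comment come from the host table at the top of this guide, and the full `jps` path assumes the JDK lives in /usr/local/jdk as configured earlier:

```shell
# Read jps output on stdin; print each expected daemon name that does not appear in it.
# Arguments: the daemon names expected on that host.
missing_daemons() {
  jps_output=$(cat)
  for daemon in "$@"; do
    # Column 2 of jps output is the main class name, e.g. "NameNode".
    if ! printf '%s\n' "$jps_output" | awk '{print $2}' | grep -qx "$daemon"; then
      echo "$daemon"
    fi
  done
}

# Usage (assumes passwordless SSH from hadoop000, as set up in step 2):
#   ssh hadoop000 /usr/local/jdk/bin/jps | missing_daemons NameNode
#   ssh hadoop111 /usr/local/jdk/bin/jps | missing_daemons NameNode DataNode JournalNode
#   ssh hadoop222 /usr/local/jdk/bin/jps | missing_daemons DataNode JournalNode
#   ssh hadoop333 /usr/local/jdk/bin/jps | missing_daemons DataNode JournalNode
```

Empty output means every expected daemon is running on that host.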

Shut down the Hadoop cluster:

On [nn1], run the following command:

sbin/stop-dfs.sh

[root@hadoop000 hadoop]# sbin/stop-dfs.sh

15/02/25 19:43:09 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Stopping namenodes on [hadoop000 hadoop111]

hadoop000: stopping namenode

hadoop111: stopping namenode

hadoop222: stopping datanode

hadoop111: stopping datanode

hadoop333: stopping datanode

Stopping journal nodes [hadoop111 hadoop222 hadoop333]

hadoop111: stopping journalnode

hadoop222: stopping journalnode

hadoop333: stopping journalnode

15/02/25 19:44:43 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@hadoop000 hadoop]#

Check the processes after the cluster has been shut down:

Processes on hadoop000:

[root@hadoop000 hadoop]# jps

26929 Jps

[root@hadoop000 hadoop]#

Processes on hadoop111:

[root@hadoop111 hadoop]# jps

26719 Jps

[root@hadoop111 hadoop]#

Processes on hadoop222:

[root@hadoop222 hadoop]# jps

26328 Jps

[root@hadoop222 hadoop]#

Processes on hadoop333:

[root@hadoop333 hadoop]# jps

26325 Jps

[root@hadoop333 hadoop]#
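After `sbin/stop-dfs.sh`, only `Jps` itself should remain in each listing. A short sketch of that check, reading `jps` output on stdin and printing any HDFS daemon that is still running. `remaining_daemons` is a hypothetical helper name, and the three daemon names are the ones used in this guide:

```shell
# Read jps output on stdin; print the name of any HDFS daemon still listed.
remaining_daemons() {
  awk '$2 == "NameNode" || $2 == "DataNode" || $2 == "JournalNode" { print $2 }'
}

# Usage (assumes passwordless SSH and the JDK in /usr/local/jdk):
#   for host in hadoop000 hadoop111 hadoop222 hadoop333; do
#     echo "$host: $(ssh "$host" /usr/local/jdk/bin/jps | remaining_daemons)"
#   done
```

A host name followed by nothing means the shutdown was clean on that machine.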

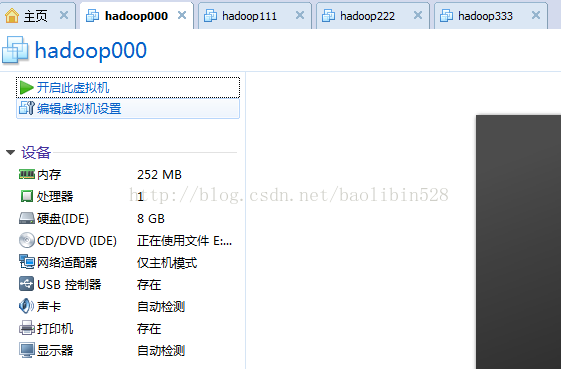

I ran this on my home desktop PC with 2 GB of RAM; each virtual machine was given 252 MB of memory:

The four virtual machines: