Mounting HDFS to a Local Directory via NFSv3 -- Part 2: Installing and Configuring the hdfs-nfs Gateway

4. Accessing HDFS through NFS

The most important reference is the official documentation:

http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-hdfs/HdfsNfsGateway.html

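There are two pitfalls, though:

First, the system's portmap/rpcbind service must be stopped (in other words, hdfs-nfs implements the portmap and NFS server functions itself).

Second, you must switch to the appropriate user before starting the Gateway service.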

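4.1 Virtual machine environment

The IP address is 172.30.0.129 and the hostname is ip-172-30-0-129; everything else is unchanged.

4.2 Installing HDFS

Obviously, HDFS has to be installed first. HDFS is now tightly integrated into Hadoop, so this simply means installing Hadoop (omitted here).

4.3 Configuring core-site.xml

Add the following two properties to etc/hadoop/core-site.xml. The template is:

(Note: replace "nfsserver" with the name of the user that starts the Gateway service in your cluster.)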

<property>

<name>hadoop.proxyuser.nfsserver.groups</name>

<value>root,users-group1,users-group2</value>

<description>

The 'nfsserver' user is allowed to proxy all members of the 'users-group1' and

'users-group2' groups. Note that in most cases you will need to include the

group "root" because the user "root" (which usually belongs to "root" group) will

generally be the user that initially executes the mount on the NFS client system.

Set this to '*' to allow nfsserver user to proxy any group.

</description>

</property>

<property>

<name>hadoop.proxyuser.nfsserver.hosts</name>

<value>nfs-client-host1.com</value>

<description>

This is the host where the nfs gateway is running. Set this to '*' to allow

requests from any hosts to be proxied.

</description>

</property>

Accordingly, I added the following to my configuration file:

<property>

<name>hadoop.proxyuser.nfsguest.groups</name>

<value>root,nfs-group</value>

</property>

<property>

<name>hadoop.proxyuser.nfsguest.hosts</name>

<value>ip-172-30-0-129</value>

</property>

The proxy user is nfsguest, and the NFS group is named nfs-group.
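To make the substitution of the gateway user explicit, the two stanzas can be generated from three values. This is only a sketch: `proxyuser_props` is a hypothetical helper name, not a Hadoop tool, and the output still has to be pasted inside the `<configuration>` element of core-site.xml by hand.

```shell
#!/usr/bin/env bash
# proxyuser_props is a hypothetical helper, not part of Hadoop.
# Usage: proxyuser_props <gateway-user> <groups> <hosts>
proxyuser_props() {
    local user=$1 grps=$2 hosts=$3
    cat <<EOF
<property>
  <name>hadoop.proxyuser.${user}.groups</name>
  <value>${grps}</value>
</property>
<property>
  <name>hadoop.proxyuser.${user}.hosts</name>
  <value>${hosts}</value>
</property>
EOF
}

# Values taken from the configuration shown above.
proxyuser_props nfsguest root,nfs-group ip-172-30-0-129
```

Generating both property names from one variable avoids the easy mistake of renaming the user in only one of the two stanzas.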

4.3.1 Prerequisites

a. Hostname

Add the following line to /etc/hosts, then reboot:

172.30.0.129 ip-172-30-0-129
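If this step is scripted, it helps to make it idempotent so that running it twice does not duplicate the line. The sketch below assumes a hypothetical helper named `ensure_hosts_entry` and works on a scratch file; pointing it at the real /etc/hosts would require root.

```shell
#!/usr/bin/env bash
# ensure_hosts_entry is a hypothetical helper.
# It appends "<ip> <name>" unless a line whose first two fields are exactly
# that ip and name already exists. Aliases in later fields are not checked.
ensure_hosts_entry() {
    local file=$1 ip=$2 name=$3
    if ! awk -v ip="$ip" -v name="$name" \
        '$1 == ip && $2 == name { found = 1 } END { exit !found }' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Demonstrate on a scratch copy rather than the real /etc/hosts.
scratch=$(mktemp)
printf '127.0.0.1 localhost\n' > "$scratch"
ensure_hosts_entry "$scratch" 172.30.0.129 ip-172-30-0-129
ensure_hosts_entry "$scratch" 172.30.0.129 ip-172-30-0-129   # second call is a no-op
cat "$scratch"
```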

b. Add the user and group

[root@ip-172-30-0-129 hdfs]# groupadd nfs-group

[root@ip-172-30-0-129 hdfs]# useradd nfsguest -g nfs-group

[root@ip-172-30-0-129 hdfs]# usermod -a -G nfs-group nfsguest

[root@ip-172-30-0-129 hdfs]# usermod -a -G nfs-group root

Check the result:

[root@ip-172-30-0-129 hdfs]# grep 'nfsguest' /etc/passwd

nfsguest:x:501:501::/home/nfsguest:/bin/bash

[root@ip-172-30-0-129 hdfs]# grep 'nfs-group' /etc/group

nfs-group:x:501:root,nfsguest

[root@ip-172-30-0-129 hdfs]# su nfsguest

[nfsguest@ip-172-30-0-129 hdfs]
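The grep check above can be tightened into a test that fails when a user is missing from the group. This is a sketch with a hypothetical helper, `group_has_member`, run here against a scratch file holding the line shown above; on a real host the first argument would be /etc/group. Note that it only sees supplementary members (the fourth field), not users whose primary group is the one being checked.

```shell
#!/usr/bin/env bash
# group_has_member is a hypothetical helper.
# It succeeds when <user> appears in the member list (field 4) of <group>
# in a file that uses the /etc/group format.
group_has_member() {
    local file=$1 group=$2 user=$3
    awk -F: -v g="$group" -v u="$user" '
        $1 == g {
            n = split($4, members, ",")
            for (i = 1; i <= n; i++) if (members[i] == u) found = 1
        }
        END { exit !found }
    ' "$file"
}

scratch=$(mktemp)
printf 'nfs-group:x:501:root,nfsguest\n' > "$scratch"
for u in root nfsguest; do
    if group_has_member "$scratch" nfs-group "$u"; then
        echo "$u is listed in nfs-group"
    fi
done
```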

4.4 Configuring log4j.properties

Add the following to etc/hadoop/log4j.properties:

log4j.logger.org.apache.hadoop.hdfs.nfs=DEBUG

log4j.logger.org.apache.hadoop.oncrpc=DEBUG

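4.5 Configuring hdfs-site.xml

4.5.1 Security configuration

By default, the export can be mounted by any client.

To restrict access, set the property dfs.nfs.exports.allowed.hosts.

Its value consists of entries, each made up of a machine name and an access privilege separated by whitespace.

The machine name can be a single host, a wildcard pattern (a Java regular expression), or an IPv4 network address.

The access privilege is rw or ro, giving that machine read-write or read-only access. If no access privilege is given, the default is read-only.

Entries are separated by ";". For example: “192.168.0.0/22 rw ; \\w*\\.example\\.com ; host1.test.org ro;”

After this property is changed, only the NFS gateway needs to be restarted.

For example: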

<property>

<name>dfs.nfs.exports.allowed.hosts</name>

<value>172.30.0.243 rw ;172.30.0.129 rw ;</value>

</property>
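The entry format described above can be illustrated with a small parser that splits a value on ";" and fills in the read-only default. It is a sketch for checking a value by eye before restarting the gateway, not how Hadoop itself parses the property; `parse_allowed_hosts` is a hypothetical name.

```shell
#!/usr/bin/env bash
# parse_allowed_hosts is a hypothetical helper, not part of Hadoop.
# It prints one "host privilege" pair per entry of an allowed-hosts value.
parse_allowed_hosts() {
    local value=$1 entry host priv
    local -a entries
    # Entries are separated by ';'.
    IFS=';' read -r -a entries <<< "$value"
    for entry in "${entries[@]}"; do
        # Within an entry, the first word is the host and the second the privilege.
        read -r host priv <<< "$entry"
        [ -z "$host" ] && continue             # skip empty entries
        printf '%s %s\n' "$host" "${priv:-ro}" # no privilege given means read-only
    done
}

# The value used in the example above.
parse_allowed_hosts "172.30.0.243 rw ;172.30.0.129 rw ;"
```

Calling it with an entry that has no privilege, such as `parse_allowed_hosts "host1.test.org"`, prints `host1.test.org ro`.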

4.5.2 Other settings in hdfs-site.xml

Access time precision for client access (the NameNode must be restarted after changing it)

dfs.namenode.accesstime.precision

File dump directory

dfs.nfs3.dump.dir

Performance tuning

dfs.nfs.rtmax

dfs.nfs.wtmax

4.6 Starting the gateway services (run as root unless noted otherwise)

a. Stop the related services in order: hdfs, nfs, rpcbind

[root@ip-172-30-0-129 hadoop-2.7.1]# sbin/stop-dfs.sh

Stopping namenodes on [localhost]

localhost: stopping namenode

localhost: stopping datanode

Stopping secondary namenodes [0.0.0.0]

0.0.0.0: stopping secondarynamenode

[root@ip-172-30-0-129 hadoop-2.7.1]# bin/hdfs dfs -ls /

ls: Call From ip-172-30-0-129/172.30.0.129 to localhost:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

[root@ip-172-30-0-129 hadoop-2.7.1]#

[root@ip-172-30-0-129 hadoop-2.7.1]# service nfs stop

Shutting down NFS daemon: [FAILED]

Shutting down NFS mountd: [FAILED]

Shutting down NFS quotas: [FAILED]

[root@ip-172-30-0-129 hadoop-2.7.1]# service rpcbind stop

Stopping rpcbind: [ OK ]

[root@ip-172-30-0-129 hadoop-2.7.1]#

b. Start Hadoop's portmap

[root@ip-172-30-0-129 hadoop-2.7.1]# sbin/hadoop-daemon.sh --script /home/hdfs/hadoop-2.7.1/bin/hdfs start portmap

starting portmap, logging to /home/hdfs/hadoop-2.7.1/logs/hadoop-root-portmap-ip-172-30-0-129.out

[root@ip-172-30-0-129 hadoop-2.7.1]# ls /home/hdfs/hadoop-2.7.1/logs/ -lht

total 153M

-rw-r--r-- 1 root root 714 Jan 22 08:46 hadoop-root-portmap-ip-172-30-0-129.out

-rw-r--r-- 1 root root 14K Jan 22 08:46 hadoop-root-portmap-ip-172-30-0-129.log

(omitted)

[root@ip-172-30-0-129 hadoop-2.7.1]# cat /home/hdfs/hadoop-2.7.1/logs/hadoop-root-portmap-ip-172-30-0-129.out

ulimit -a for user root

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 3911

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 1024

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 3911

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

[root@ip-172-30-0-129 hadoop-2.7.1]# cat /home/hdfs/hadoop-2.7.1/logs/hadoop-root-portmap-ip-172-30-0-129.log

2016-01-22 08:46:45,533 INFO org.apache.hadoop.portmap.Portmap: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting Portmap

STARTUP_MSG: host = ip-172-30-0-129/172.30.0.129

STARTUP_MSG: args = []

STARTUP_MSG: version = 2.7.1

STARTUP_MSG: classpath = (omitted).jar

STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r 15ecc87ccf4a0228f35af08fc56de536e6ce657a; compiled by 'jenkins' on 2015-06-29T06:04Z

STARTUP_MSG: java = 1.7.0_91

************************************************************/

2016-01-22 08:46:45,543 INFO org.apache.hadoop.portmap.Portmap: registered UNIX signal handlers for [TERM, HUP, INT]

2016-01-22 08:46:45,731 INFO org.apache.hadoop.portmap.Portmap: Portmap server started at tcp:///0.0.0.0:111, udp:///0.0.0.0:111

[root@ip-172-30-0-129 hadoop-2.7.1]#

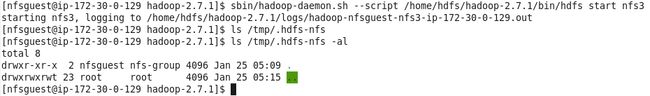

c. Start the mountd and nfsd services (run as the proxy user)

In "non-secure" mode, you need to switch to the proxy user nfsguest first.

[root@ip-172-30-0-129 hadoop-2.7.1]# sbin/hadoop-daemon.sh --script /home/hdfs/hadoop-2.7.1/bin/hdfs start nfs3

starting nfs3, logging to /home/hdfs/hadoop-2.7.1/logs/hadoop-root-nfs3-ip-172-30-0-129.out

[root@ip-172-30-0-129 hadoop-2.7.1]# ls /home/hdfs/hadoop-2.7.1/logs/ -lht

total 153M

-rw-r--r-- 1 root root 17K Jan 22 09:03 hadoop-root-nfs3-ip-172-30-0-129.log

-rw-r--r-- 1 root root 714 Jan 22 09:03 hadoop-root-nfs3-ip-172-30-0-129.out

(omitted)

[root@ip-172-30-0-129 hadoop-2.7.1]# cat /home/hdfs/hadoop-2.7.1/logs/hadoop-root-nfs3-ip-172-30-0-129.out

ulimit -a for user root

core file size (blocks, -c) 0

(omitted)

file locks (-x) unlimited

[root@ip-172-30-0-129 hadoop-2.7.1]# cat /home/hdfs/hadoop-2.7.1/logs/hadoop-root-nfs3-ip-172-30-0-129.log

2016-01-22 09:03:36,098 INFO org.apache.hadoop.nfs.nfs3.Nfs3Base: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting Nfs3

STARTUP_MSG: host = ip-172-30-0-129/172.30.0.129

STARTUP_MSG: args = []

STARTUP_MSG: version = 2.7.1

STARTUP_MSG: classpath = (omitted).jar

STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r 15ecc87ccf4a0228f35af08fc56de536e6ce657a; compiled by 'jenkins' on 2015-06-29T06:04Z

STARTUP_MSG: java = 1.7.0_91

************************************************************/

2016-01-22 09:03:36,107 INFO org.apache.hadoop.nfs.nfs3.Nfs3Base: registered UNIX signal handlers for [TERM, HUP, INT]

(omitted)

2016-01-22 09:03:38,535 INFO org.mortbay.log: Started [email protected]:50079

2016-01-22 09:03:38,544 INFO org.apache.hadoop.oncrpc.SimpleTcpServer: Started listening to TCP requests at port 2049 for Rpc program: NFS3 at localhost:2049 with workerCount 0

[root@ip-172-30-0-129 hadoop-2.7.1]#

d. To stop the services, use the following commands:

sbin/hadoop-daemon.sh --script /home/hdfs/hadoop-2.7.1/bin/hdfs stop portmap

sbin/hadoop-daemon.sh --script /home/hdfs/hadoop-2.7.1/bin/hdfs stop nfs3
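The start and stop commands differ only in the action and the daemon name, so they can be wrapped in one function. The following is a sketch under several assumptions: `gateway_ctl` and the `DRY_RUN` switch are hypothetical, `HADOOP_HOME` defaults to the install path used in this article, and stopping in the reverse of the start order is this sketch's choice. It does not switch users, so the user that runs each daemon still has to be handled separately.

```shell
#!/usr/bin/env bash
# gateway_ctl is a hypothetical wrapper around sbin/hadoop-daemon.sh.
HADOOP_HOME=${HADOOP_HOME:-/home/hdfs/hadoop-2.7.1}

gateway_ctl() {
    local action=$1 daemons d
    case $action in
        start) daemons="portmap nfs3" ;;   # portmap first, so nfs3 can register with it
        stop)  daemons="nfs3 portmap" ;;   # reverse order on stop (a choice made by this sketch)
        *) echo "usage: gateway_ctl start|stop" >&2; return 2 ;;
    esac
    for d in $daemons; do
        # ${DRY_RUN:+echo} expands to "echo" only when DRY_RUN is set and non-empty,
        # so the command is printed instead of executed.
        ${DRY_RUN:+echo} "$HADOOP_HOME/sbin/hadoop-daemon.sh" \
            --script "$HADOOP_HOME/bin/hdfs" "$action" "$d"
    done
}

# Print the commands without running them.
DRY_RUN=1 gateway_ctl start
```

Running `gateway_ctl stop` without `DRY_RUN` executes the two stop commands for real.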

e. Verify the services

[root@ip-172-30-0-129 hadoop-2.7.1]# rpcinfo -p ip-172-30-0-129

program vers proto port service

100005 2 tcp 4242 mountd

100000 2 udp 111 portmapper

100000 2 tcp 111 portmapper

100005 1 tcp 4242 mountd

100003 3 tcp 2049 nfs

100005 1 udp 4242 mountd

100005 3 udp 4242 mountd

100005 3 tcp 4242 mountd

100005 2 udp 4242 mountd

[root@ip-172-30-0-129 hadoop-2.7.1]#

If you see output like this, all the services are up.
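The same check can be automated by scanning the rpcinfo table for the three services. This is a sketch: `check_gateway_services` is a hypothetical helper that reads `rpcinfo -p` output on standard input, and the sample below is an excerpt of the listing above so the block runs without a gateway.

```shell
#!/usr/bin/env bash
# check_gateway_services is a hypothetical helper.
# It succeeds only if portmapper, mountd and NFS version 3 all appear
# in the rpcinfo -p table read from standard input.
check_gateway_services() {
    awk '
        $5 == "portmapper"       { pm = 1 }
        $5 == "mountd"           { md = 1 }
        $5 == "nfs" && $2 == "3" { nfs = 1 }
        END { exit !(pm && md && nfs) }
    '
}

# Excerpt of the output shown above.
sample='program vers proto port service
100005 2 tcp 4242 mountd
100000 2 udp 111 portmapper
100003 3 tcp 2049 nfs'

if printf '%s\n' "$sample" | check_gateway_services; then
    echo "portmapper, mountd and NFSv3 are all registered"
fi
```

Against a live gateway the input would come from `rpcinfo -p ip-172-30-0-129 | check_gateway_services`.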

f. Verify that the HDFS namespace is exported

[root@ip-172-30-0-129 hadoop-2.7.1]# showmount -e ip-172-30-0-129

Export list for ip-172-30-0-129:

/ 172.30.0.243,172.30.0.129

[root@ip-172-30-0-129 hadoop-2.7.1]#

More often, you will see output like this:

Exports list on $nfs_server_ip :

/ (everyone)

4.7 Mounting

[root@ip-172-30-0-129 hadoop-2.7.1]# mount -t nfs -o vers=3,proto=tcp,nolock,noacl,sync ip-172-30-0-129:/ /mnt/hdfs

[root@ip-172-30-0-129 hadoop-2.7.1]# ls /mnt/hdfs -lh

total 11K

drwxr-xr-x 2 root 2584148964 64 Dec 24 11:21 slog

drwxr-xr-x 5 root 2584148964 160 Dec 24 12:10 solr

-rw-r--r-- 1 root 2584148964 5.5K Jan 21 02:36 test.txt

[root@ip-172-30-0-129 hadoop-2.7.1]# bin/hdfs dfs -ls /

Found 3 items

drwxr-xr-x - root supergroup 0 2015-12-24 11:21 /slog

drwxr-xr-x - root supergroup 0 2015-12-24 12:10 /solr

-rw-r--r-- 1 root supergroup 5538 2016-01-21 02:36 /test.txt

[root@ip-172-30-0-129 hadoop-2.7.1]#
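Besides comparing the two listings by eye, a script can confirm that the mount point is really backed by NFS by reading the kernel mount table. This is a sketch: `is_nfs_mounted` is a hypothetical helper, and the sample line is an assumed illustration of what an entry in /proc/mounts looks like, not output captured from this machine.

```shell
#!/usr/bin/env bash
# is_nfs_mounted is a hypothetical helper.
# It succeeds when <mountpoint> appears with filesystem type "nfs" in a file
# that uses the /proc/mounts format (device, mount point, type, options, ...).
is_nfs_mounted() {
    local mounts=$1 mountpoint=$2
    awk -v mp="$mountpoint" \
        '$2 == mp && $3 == "nfs" { found = 1 } END { exit !found }' "$mounts"
}

# Assumed sample entry; on a real host pass /proc/mounts instead.
scratch=$(mktemp)
printf '%s\n' 'ip-172-30-0-129:/ /mnt/hdfs nfs rw,sync,vers=3,proto=tcp,nolock,noacl 0 0' > "$scratch"

if is_nfs_mounted "$scratch" /mnt/hdfs; then
    echo "/mnt/hdfs is an NFS mount"
fi
```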