Face Alignment by 3000 FPS系列学习总结(一)

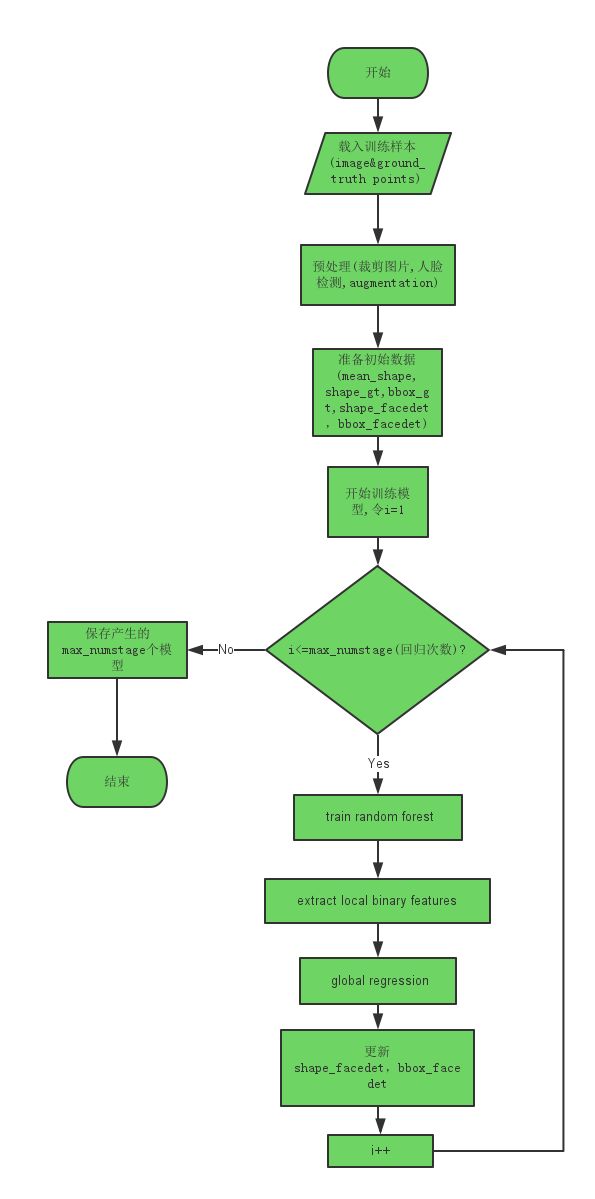

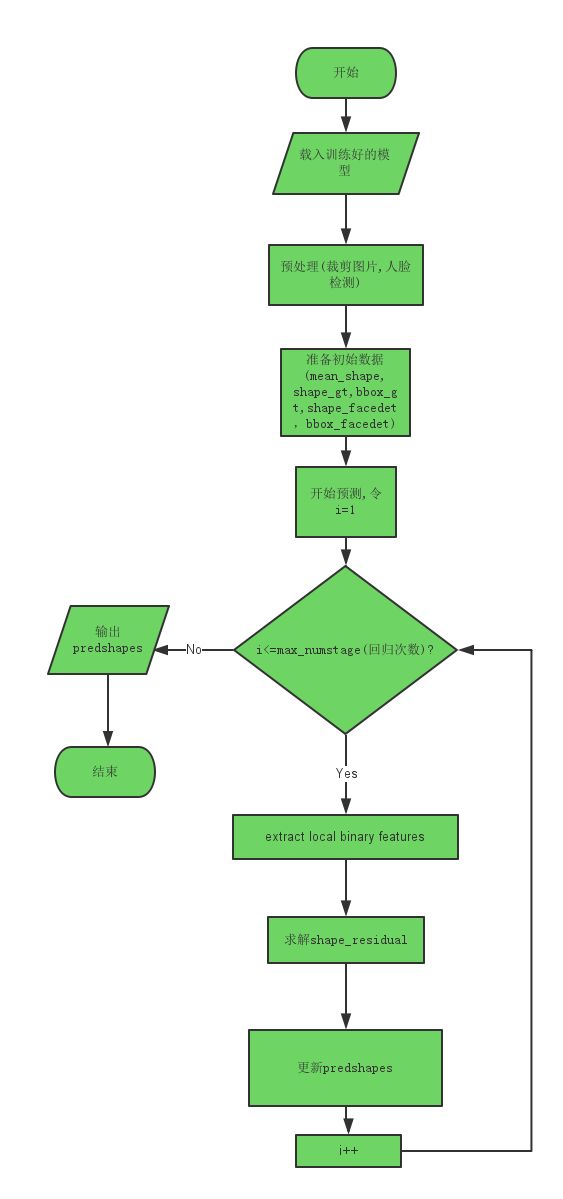

face alignment 流程图

train阶段

测试阶段

预处理

裁剪图片

- tr_data = loadsamples(imgpathlistfile, 2);

说明: 本函数用于将原始图片取ground-truth points的包围盒,然后将其向左上角放大一倍。然后截取此部分图像,同时变换ground-truth points.hou,然后为了节省内存,使用了缩放,将其缩放在150*150的大小内,作为我们后续处理的图像及ground-truth points。

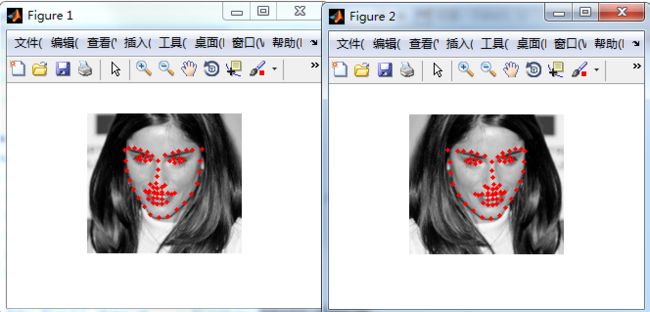

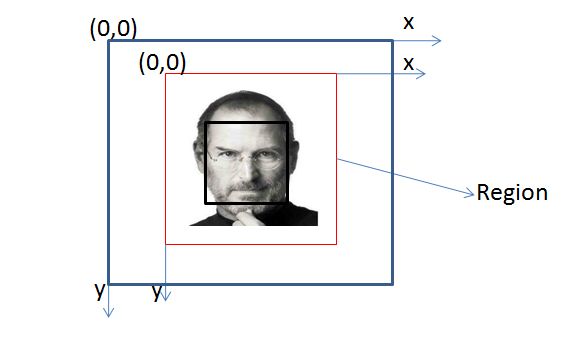

截取前示意图:

截取后示意图:

缩放后示意图:

注意: loadsamples的第二个参数是2,表明需要进行缩放,但是测试时,并没有进行缩放,参数为1.

To do list:本函数使用的真实特征点的包围盒截取的图像,在实际作用时,肯定不能去真实特征点截取图像了。我们应该改为人脸检测框代替这个真实特征点的包围盒,如果检测不到,再用真实特征点的包围盒替代.

function Data = loadsamples(imgpathlistfile, exc_setlabel)

%LOADSAMPLES Summary of this function goes here

% Function: load samples from dbname database

% Detailed explanation goes here

% Input:

% dbname: the name of one database

% exc_setlabel: excluded set label

% Output:

% Data: loaded data from the database

% 基本步骤:

% 1. 载入图片,取ground_truth shape的包围盒,然后放大一倍,截取图像。

% 同时相应地变换shape.

% 2. 为了防止图片过大,我们把图像控制在150*150内.

% 3. 用matlab自带的人脸检测求解或者直接拿bbox代替bbox_facedet

% 4. 后面还为了防止train文件下夹杂了test的图片,做了排除处理.

%

imgpathlist = textread(imgpathlistfile, '%s', 'delimiter', '\n');

Data = cell(length(imgpathlist), 1);

setnames = {'train' 'test'};

% Create a cascade detector object.

% faceDetector = vision.CascadeObjectDetector();

% bboxes_facedet = zeros(length(imgpathlist), 4);

% bboxes_gt = zeros(length(imgpathlist), 4);

% isdetected = zeros(length(imgpathlist), 1);

parfor i = 1:length(imgpathlist)

img = im2uint8(imread(imgpathlist{i}));

Data{i}.width_orig = size(img, 2);

Data{i}.height_orig = size(img, 1);

% Data{i}.img = img

% shapepath = strrep(imgpathlist{i}, 'png', 'pts');%这一句和下一句是一样的用途

shapepath = strcat(imgpathlist{i}(1:end-3), 'pts');

Data{i}.shape_gt = double(loadshape(shapepath));

% Data{i}.shape_gt = Data{i}.shape_gt(params.ind_usedpts, :);

% bbox = bounding_boxes_allsamples{i}.bb_detector; %

Data{i}.bbox_gt = getbbox(Data{i}.shape_gt); % [bbox(1) bbox(2) bbox(3)-bbox(1) bbox(4)-bbox(2)]; %shape的包围盒

% cut original image to a region which is a bit larger than the face

% bounding box

region = enlargingbbox(Data{i}.bbox_gt, 2.0); %将true_shape的包围盒放大一倍,形成更大的包围盒,以此裁剪人脸,也有通过人脸检测框放大的

region(2) = double(max(region(2), 1)); %防止放大后的region超过图像,这样一旦超过坐标会变成负数。因此取了和1的最大值.

region(1) = double(max(region(1), 1));

bottom_y = double(min(region(2) + region(4) - 1, Data{i}.height_orig)); %同理防止过高,过宽

right_x = double(min(region(1) + region(3) - 1, Data{i}.width_orig));

img_region = img(region(2):bottom_y, region(1):right_x, :);

Data{i}.shape_gt = bsxfun(@minus, Data{i}.shape_gt, double([region(1) region(2)])); %68*2的矩阵减去一个向量

% to save memory cost during training

if exc_setlabel == 2

ratio = min(1, sqrt(single(150 * 150) / single(size(img_region, 1) * size(img_region, 2)))); %如果图像小于150*150,则不用缩放,否则缩放到150*150以内

img_region = imresize(img_region, ratio);

Data{i}.shape_gt = Data{i}.shape_gt .* ratio;

end

Data{i}.ori_img=img;

Data{i}.lefttop=[region(1) region(2)]; %自己补充的

Data{i}.bbox_gt = getbbox(Data{i}.shape_gt);

Data{i}.bbox_facedet = getbbox(Data{i}.shape_gt); %应该改为人脸检测框

% perform face detection using matlab face detector

%{

bbox = step(faceDetector, img_region);

if isempty(bbox)

% if face detection is failed

isdetected(i) = 1;

Data{i}.bbox_facedet = getbbox(Data{i}.shape_gt);

else

int_ratios = zeros(1, size(bbox, 1));

for b = 1:size(bbox, 1)

area = rectint(Data{i}.bbox_gt, bbox(b, :));

int_ratios(b) = (area)/(bbox(b, 3)*bbox(b, 4) + Data{i}.bbox_gt(3)*Data{i}.bbox_gt(4) - area);

end

[max_ratio, max_ind] = max(int_ratios);

if max_ratio < 0.4 % detection fail

isdetected(i) = 0;

else

Data{i}.bbox_facedet = bbox(max_ind, 1:4);

isdetected(i) = 1;

% imgOut = insertObjectAnnotation(img_region,'rectangle',Data{i}.bbox_facedet,'Face');

% imshow(imgOut);

end

end

%}

% recalculate the location of groundtruth shape and bounding box

% Data{i}.shape_gt = bsxfun(@minus, Data{i}.shape_gt, double([region(1) region(2)]));

% Data{i}.bbox_gt = getbbox(Data{i}.shape_gt);

if size(img_region, 3) == 1

Data{i}.img_gray = img_region;

else

% hsv = rgb2hsv(img_region);

Data{i}.img_gray = rgb2gray(img_region);

end

Data{i}.width = size(img_region, 2);

Data{i}.height = size(img_region, 1);

end

ind_valid = ones(1, length(imgpathlist));

parfor i = 1:length(imgpathlist)

if ~isempty(exc_setlabel)

ind = strfind(imgpathlist{i}, setnames{exc_setlabel}); %strfind是找imgpathlist{i}中含有setnames{exc_setlabel}='test'的地址

if ~isempty(ind) % | ~isdetected(i)

ind_valid(i) = 0;

end

end

end

% learn the linear transformation from detected bboxes to groundtruth bboxes

% bboxes = [bboxes_gt bboxes_facedet];

% bboxes = bboxes(ind_valid == 1, :);

Data = Data(ind_valid == 1); %找到含有test的地址,并排除出去,保证Data都是train_data

end

function shape = loadshape(path)

% function: load shape from pts file

file = fopen(path);

if ~isempty(strfind(path, 'COFW'))

shape = textscan(file, '%d16 %d16 %d8', 'HeaderLines', 3, 'CollectOutput', 3);

else

shape = textscan(file, '%d16 %d16', 'HeaderLines', 3, 'CollectOutput', 2);

end

fclose(file);

shape = shape{1};

end

function region = enlargingbbox(bbox, scale)

region(1) = floor(bbox(1) - (scale - 1)/2*bbox(3));

region(2) = floor(bbox(2) - (scale - 1)/2*bbox(4));

region(3) = floor(scale*bbox(3));

region(4) = floor(scale*bbox(4));

% region.right_x = floor(region.left_x + region.width - 1);

% region.bottom_y = floor(region.top_y + region.height - 1);

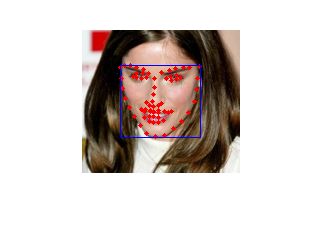

end示意图:

对一幅图片,实际上我们感兴趣的就是人脸区域,至于其他部分是无关紧要的。因此出于节省内存的原因,我们需要裁剪图片。前面已经讲述了裁剪的方法。从坐标系的角度来看,实际上是从原始坐标系转化到以扩充后的盒子为基础的坐标系。即如图蓝色的为原始坐标系,就是一张图片的坐标系。红色的为后来裁剪后的坐标系,而黑色框为人脸框(可以是人脸检测获得的,也可以是ground_truth points的包围盒)。那么坐标系的变化,简单而言可以写作:

shapes_gt˜=shapes_gt−[Region(1),Region(2)]

上式就是原来的shape经过裁剪后的坐标。后来还加上了缩放,即:

shapes_gt˜=shapes_gt˜.∗Ratio

左右翻转图片

train_model.m 第60行

% Augmentate data for traing: assign multiple initial shapes to each

% image(为每一张图片增加多个初始shape)

Data = Tr_Data; % (1:10:end);

Param = params;

if Param.flipflag % if conduct flipping

Data_flip = cell(size(Data, 1), 1);

for i = 1:length(Data_flip)

Data_flip{i}.img_gray = fliplr(Data{i}.img_gray);%左右翻转

Data_flip{i}.width_orig = Data{i}.width_orig;

Data_flip{i}.height_orig = Data{i}.height_orig;

Data_flip{i}.width = Data{i}.width;

Data_flip{i}.height = Data{i}.height;

Data_flip{i}.shape_gt = flipshape(Data{i}.shape_gt);

Data_flip{i}.shape_gt(:, 1) = Data{i}.width - Data_flip{i}.shape_gt(:, 1);

Data_flip{i}.bbox_gt = Data{i}.bbox_gt;

Data_flip{i}.bbox_gt(1) = Data_flip{i}.width - Data_flip{i}.bbox_gt(1) - Data_flip{i}.bbox_gt(3);

Data_flip{i}.bbox_facedet = Data{i}.bbox_facedet;

Data_flip{i}.bbox_facedet(1) = Data_flip{i}.width - Data_flip{i}.bbox_facedet(1) - Data_flip{i}.bbox_facedet(3);

end

Data = [Data; Data_flip];

end