Easily Building a hadoop-1.2.1 Cluster (5): Configuring the HBase Cluster


1. Extract HBase:

HBase is extracted into the /usr/local/ directory:

[root@hadoop0 local]# pwd
/usr/local

[root@hadoop0 local]# ll
total 216592
drwxr-xr-x. 7 root root 4096 Feb 23 14:31 hbase
-rw-r--r--. 1 root root 69385590 Feb 23 14:30 hbase-0.98.0-hadoop1-bin.tar.gz
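The listing above only shows the result of the extraction. A minimal sketch of that step, assuming the tarball unpacks into a single top-level directory (for this release presumably hbase-0.98.0-hadoop1) that is then renamed to hbase; the function name extract_hbase is hypothetical:

```shell
# Hypothetical helper: unpack an HBase tarball into DEST and rename its
# top-level directory to "hbase", so that HBASE_HOME can be DEST/hbase.
extract_hbase() {
  local tarball="$1" dest="${2:-/usr/local}"
  local topdir
  # Assumption: the first archive entry starts with the top-level directory.
  topdir=$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)
  if [ -e "$dest/hbase" ]; then
    echo "extract_hbase: $dest/hbase already exists" >&2
    return 1
  fi
  tar -xzf "$tarball" -C "$dest" || return 1
  mv "$dest/$topdir" "$dest/hbase"
}
```

Usage on hadoop0: extract_hbase /usr/local/hbase-0.98.0-hadoop1-bin.tar.gz /usr/local. Renaming to a version-free directory keeps the /usr/local/hbase paths used in the rest of this post stable across upgrades.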

2. Set environment variables:

Open /etc/profile:

[root@hadoop0 hbase]# vim /etc/profile

Add the following lines:

#set hbase path
export HBASE_HOME=/usr/local/hbase
export PATH=.:$HBASE_HOME/bin:$PATH

Apply the changes:

[root@hadoop0 hbase]# source /etc/profile
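Editing /etc/profile by hand works; if the same change has to be scripted, the sketch below appends the lines above only when HBASE_HOME is not already exported, so running it twice does not duplicate them. add_hbase_profile is a hypothetical name:

```shell
# Hypothetical helper: append the HBase variables to a profile file once.
add_hbase_profile() {
  local profile="${1:-/etc/profile}"
  if grep -q '^export HBASE_HOME=' "$profile" 2>/dev/null; then
    return 0   # already configured, nothing to do
  fi
  # The quoted 'EOF' keeps $HBASE_HOME and $PATH unexpanded in the file.
  cat >> "$profile" <<'EOF'
#set hbase path
export HBASE_HOME=/usr/local/hbase
export PATH=.:$HBASE_HOME/bin:$PATH
EOF
}
```

After add_hbase_profile /etc/profile and source /etc/profile, echo $HBASE_HOME should print /usr/local/hbase.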

3. Configure hbase-env.sh:

List the configuration files in /usr/local/hbase/conf:

[root@hadoop0 conf]# pwd
/usr/local/hbase/conf
[root@hadoop0 conf]# ls
hadoop-metrics2-hbase.properties hbase-env.sh hbase-site.xml regionservers
hbase-env.cmd hbase-policy.xml log4j.properties
[root@hadoop0 conf]#

Edit hbase-env.sh with vim:

[root@hadoop0 conf]# vim hbase-env.sh

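Addition 1, set the JDK path:

export JAVA_HOME=/usr/local/jdk

Addition 2, do not use the ZooKeeper bundled with HBase (the separately installed ZooKeeper ensemble is used instead):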

export HBASE_MANAGES_ZK=false
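The same two settings can be applied non-interactively. A minimal sketch, assuming GNU sed (for sed -i) and that the stock hbase-env.sh contains these exports only in commented-out form; set_env_var is a hypothetical name. It rewrites an existing uncommented export line, or appends one:

```shell
# Hypothetical helper: ensure "export NAME=VALUE" is set in a shell env file
# such as conf/hbase-env.sh. Commented-out defaults are left untouched.
set_env_var() {
  local file="$1" name="$2" value="$3"
  if grep -q "^export ${name}=" "$file"; then
    # "|" is the sed delimiter because values are usually paths with "/".
    sed -i "s|^export ${name}=.*|export ${name}=${value}|" "$file"
  else
    printf 'export %s=%s\n' "$name" "$value" >> "$file"
  fi
}
```

For example: set_env_var /usr/local/hbase/conf/hbase-env.sh JAVA_HOME /usr/local/jdk, then set_env_var /usr/local/hbase/conf/hbase-env.sh HBASE_MANAGES_ZK false.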

4. Configure hbase-site.xml:

Edit it with vim:

[root@hadoop0 conf]# vim hbase-site.xml

Add the following:

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://192.168.1.2:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>hadoop0,hadoop1,hadoop2</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>
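hbase.rootdir has to point at the HDFS NameNode that Hadoop itself uses, so its scheme, host and port should match fs.default.name in Hadoop 1's core-site.xml. A rough way to compare the two from the shell; get_xml_property is a hypothetical name, it does line-based text matching rather than real XML parsing, and the path /usr/local/hadoop/conf/core-site.xml is an assumption based on the log paths in step 8:

```shell
# Hypothetical helper: print the <value> that follows <name>NAME</name> in a
# Hadoop/HBase-style XML config. Plain text matching, not an XML parser.
get_xml_property() {
  local file="$1" name="$2"
  awk -v want="<name>${name}</name>" '
    index($0, want) { found = 1 }
    found && /<value>/ {
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }' "$file"
}
```

get_xml_property /usr/local/hbase/conf/hbase-site.xml hbase.rootdir should print hdfs://192.168.1.2:9000/hbase, and get_xml_property /usr/local/hadoop/conf/core-site.xml fs.default.name should name the same NameNode host and port.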

5. Configure the regionservers file:

[root@hadoop0 conf]# vim regionservers

Add the following, one host per line:

192.168.1.3
192.168.1.4
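regionservers is read one host per line, like Hadoop's slaves file. If it is generated by a script, printf with a '%s\n' format writes each argument on its own line; write_regionservers is a hypothetical name:

```shell
# Hypothetical helper: write a regionservers file, one host per line.
write_regionservers() {
  local file="$1"
  shift
  printf '%s\n' "$@" > "$file"
}
```

write_regionservers /usr/local/hbase/conf/regionservers 192.168.1.3 192.168.1.4 produces the two-line file shown above.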

6. Copy the hbase directory from hadoop0 to hadoop1 and hadoop2

Copy to hadoop1:

[root@hadoop0 local]# scp -r hbase 192.168.1.3:/usr/local/

Copy to hadoop2:

[root@hadoop0 local]# scp -r hbase 192.168.1.4:/usr/local/
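With more nodes the copy is easier as a loop. A minimal sketch, assuming passwordless SSH from hadoop0 to the other nodes (otherwise scp prompts for every password); push_to_nodes is a hypothetical name:

```shell
# Hypothetical helper: copy SRC recursively into DEST_DIR on each listed host.
push_to_nodes() {
  local src="$1" dest_dir="$2"
  shift 2
  local host
  for host in "$@"; do
    scp -r "$src" "${host}:${dest_dir}" || return 1   # stop at the first failure
  done
}
```

Run it from /usr/local on hadoop0: push_to_nodes hbase /usr/local/ 192.168.1.3 192.168.1.4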

7. Copy the /etc/profile file from hadoop0 to hadoop1 and hadoop2

Copy to hadoop1:

[root@hadoop0 local]# scp /etc/profile 192.168.1.3:/etc/profile
profile 100% 2291 2.2KB/s 00:00

Copy to hadoop2:

[root@hadoop0 local]# scp /etc/profile 192.168.1.4:/etc/profile
profile 100% 2291 2.2KB/s 00:00

On hadoop1, run the following to apply the configuration:

[root@hadoop1 ~]# source /etc/profile

On hadoop2, run the following to apply the configuration:

[root@hadoop2 ~]# source /etc/profile
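source /etc/profile only affects the shell it runs in; new login shells on hadoop1 and hadoop2 read the copied file on their own. To confirm from hadoop0 that each node now resolves HBASE_HOME, a small SSH-based check could look like this, assuming passwordless SSH from hadoop0; check_remote_hbase_home is a hypothetical name:

```shell
# Hypothetical check: on each host, read /etc/profile and compare HBASE_HOME
# with the expected path; report every host where it differs.
check_remote_hbase_home() {
  local expected="$1"
  shift
  local host actual rc=0
  for host in "$@"; do
    # Single quotes: $HBASE_HOME is expanded on the remote host, not locally.
    actual=$(ssh "$host" '. /etc/profile >/dev/null 2>&1; echo "$HBASE_HOME"')
    if [ "$actual" != "$expected" ]; then
      echo "$host: HBASE_HOME='$actual'" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

check_remote_hbase_home /usr/local/hbase 192.168.1.3 192.168.1.4 returns 0 when both nodes report the expected path and names the offending hosts otherwise.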

8. Start HBase:

Start Hadoop first, then ZooKeeper, and finally HBase:

First, start Hadoop by running start-all.sh:

[root@hadoop0 ~]# start-all.sh
Warning: $HADOOP_HOME is deprecated.
starting namenode, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-namenode-hadoop0.out
192.168.1.3: starting datanode, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-datanode-hadoop1.out
192.168.1.4: starting datanode, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-datanode-hadoop2.out
192.168.1.2: starting secondarynamenode, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-secondarynamenode-hadoop0.out
starting jobtracker, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-jobtracker-hadoop0.out
192.168.1.4: starting tasktracker, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-tasktracker-hadoop2.out
192.168.1.3: starting tasktracker, logging to /usr/local/hadoop/libexec/../logs/hadoop-root-tasktracker-hadoop1.out

Check the processes on hadoop0:

[root@hadoop0 ~]# jps
4575 JobTracker
4345 NameNode
4492 SecondaryNameNode
4652 Jps
[root@hadoop0 ~]#

Check the processes on hadoop1:

[root@hadoop1 ~]# jps
3459 DataNode
3593 Jps
3535 TaskTracker

Check the processes on hadoop2:

[root@hadoop2 ~]# jps
3505 Jps
3428 TaskTracker
3351 DataNode
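Reading jps output by eye gets tedious across three nodes. A sketch that checks for the expected daemon names, comparing the second column exactly so that SecondaryNameNode does not count as NameNode; check_daemons is a hypothetical name:

```shell
# Hypothetical helper: read jps output on stdin and fail if any expected
# daemon class name is missing from the second column.
check_daemons() {
  local out daemon rc=0
  out=$(cat)
  for daemon in "$@"; do
    if ! printf '%s\n' "$out" | awk -v d="$daemon" '$2 == d { f = 1 } END { exit !f }'; then
      echo "missing: $daemon" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

On hadoop0: jps | check_daemons NameNode SecondaryNameNode JobTracker. On hadoop1 and hadoop2: jps | check_daemons DataNode TaskTracker. The same call works for the later checks, e.g. HMaster and QuorumPeerMain on hadoop0.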

Second, start ZooKeeper:

Start ZooKeeper on hadoop0:

[root@hadoop0 ~]# zkServer.sh start
JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED

Start ZooKeeper on hadoop1:

[root@hadoop1 ~]# zkServer.sh start
JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED

Start ZooKeeper on hadoop2:

[root@hadoop2 ~]# zkServer.sh start
JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED

Check the ZooKeeper status on hadoop0:

[root@hadoop0 ~]# zkServer.sh status
JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Mode: follower

Check the ZooKeeper status on hadoop1:

[root@hadoop1 ~]# zkServer.sh status
JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Mode: leader

Check the ZooKeeper status on hadoop2:

[root@hadoop2 ~]# zkServer.sh status
JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Mode: follower
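In a healthy three-node ensemble exactly one server reports Mode: leader and the others Mode: follower, which is what the status output above shows (hadoop1 is the leader). A sketch that collects the status from every node and checks this, assuming passwordless SSH from hadoop0 and that sourcing /etc/profile puts zkServer.sh on PATH on each node (non-interactive SSH sessions do not read /etc/profile by themselves); collect_zk_status and check_zk_quorum are hypothetical names:

```shell
# Hypothetical helper: print "zkServer.sh status" output from each host.
collect_zk_status() {
  local host
  for host in "$@"; do
    ssh "$host" '. /etc/profile; zkServer.sh status' 2>&1
  done
}

# Hypothetical check: stdin holds the status output of NODES servers; succeed
# only with exactly one leader and NODES-1 followers.
check_zk_quorum() {
  local nodes="$1" out leaders followers
  out=$(cat)
  leaders=$(printf '%s\n' "$out" | grep -c '^Mode: leader')
  followers=$(printf '%s\n' "$out" | grep -c '^Mode: follower')
  echo "leaders=$leaders followers=$followers"
  [ "$leaders" -eq 1 ] && [ "$followers" -eq $((nodes - 1)) ]
}
```

collect_zk_status hadoop0 hadoop1 hadoop2 | check_zk_quorum 3 should print leaders=1 followers=2 and succeed for the state shown above.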

Check the processes on hadoop0:

[root@hadoop0 ~]# jps
4575 JobTracker
4796 Jps
4744 QuorumPeerMain
4345 NameNode
4492 SecondaryNameNode

Check the processes on hadoop1:

[root@hadoop1 ~]# jps
3459 DataNode
3711 Jps
3535 TaskTracker
3651 QuorumPeerMain

Check the processes on hadoop2:

[root@hadoop2 ~]# jps
3428 TaskTracker
3546 QuorumPeerMain
3351 DataNode
3600 Jps

Third, start HBase by running start-hbase.sh:

[root@hadoop0 ~]# start-hbase.sh
starting master, logging to /usr/local/hbase/logs/hbase-root-master-hadoop0.out
192.168.1.3: starting regionserver, logging to /usr/local/hbase/bin/../logs/hbase-root-regionserver-hadoop1.out
192.168.1.4: starting regionserver, logging to /usr/local/hbase/bin/../logs/hbase-root-regionserver-hadoop2.out
[root@hadoop0 ~]#

Check the processes on hadoop0:

[root@hadoop0 ~]# jps
4575 JobTracker
6258 HMaster
4744 QuorumPeerMain
4345 NameNode
4492 SecondaryNameNode
6428 Jps

Check the processes on hadoop1:

[root@hadoop1 ~]# jps
3459 DataNode
4268 HRegionServer
3535 TaskTracker
3651 QuorumPeerMain
4426 Jps

Check the processes on hadoop2:

[root@hadoop2 ~]# jps
4005 Jps
3880 HRegionServer
3428 TaskTracker
3546 QuorumPeerMain
3351 DataNode

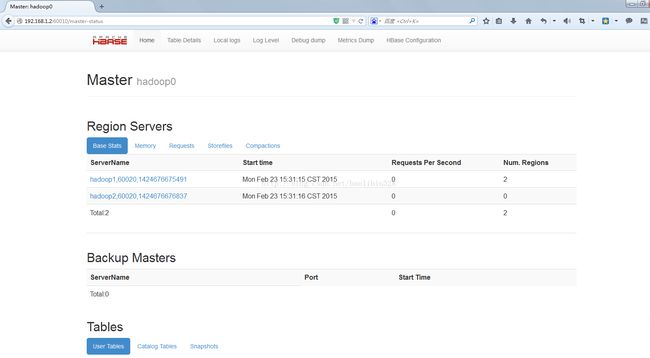

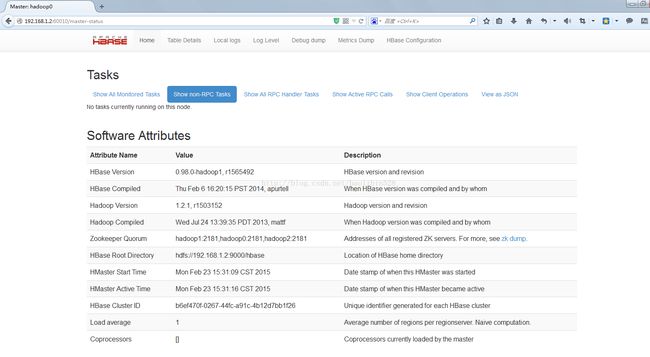
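The start order in this step can be wrapped into one function run on hadoop0. A minimal sketch, assuming start-all.sh and start-hbase.sh are on PATH there, passwordless SSH to the ZooKeeper nodes, and that sourcing /etc/profile puts zkServer.sh on PATH on each of them (non-interactive SSH sessions do not read it otherwise); start_cluster and the ZK_HOSTS variable are hypothetical:

```shell
# Hypothetical wrapper: Hadoop first, then ZooKeeper on every quorum node,
# then HBase. ZK_HOSTS may override the default host list.
start_cluster() {
  local zk_hosts="${ZK_HOSTS:-hadoop0 hadoop1 hadoop2}"
  local host
  start-all.sh || return 1
  # Unquoted $zk_hosts: word splitting into host names is intentional.
  for host in $zk_hosts; do
    ssh "$host" '. /etc/profile; zkServer.sh start' || return 1
  done
  start-hbase.sh
}
```

Stopping at the first failure avoids starting HBase on top of a half-started HDFS or ZooKeeper.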

9. Open the following URL in a browser to check the HBase cluster status:

http://192.168.1.2:60010/master-status

HMaster

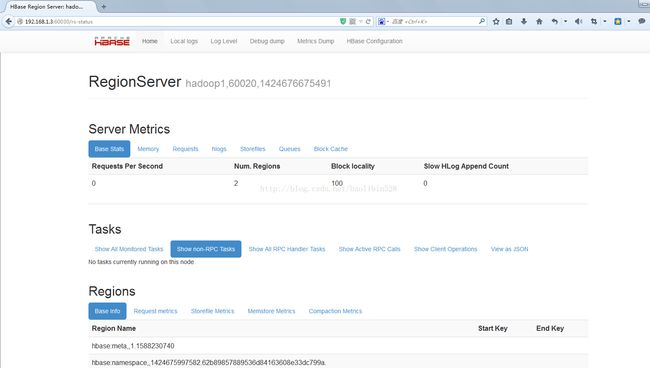

Check the HRegionServer status:

http://192.168.1.3:60030/rs-status
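The same pages can also be probed from a shell instead of a browser. A minimal sketch using curl, which reports 000 when nothing answers; http_status and check_web_ui are hypothetical names, and 60010/60030 are the default master and RegionServer info ports of HBase 0.98, as used in the URLs above:

```shell
# Hypothetical helper: print the HTTP status code of URL (000 if unreachable).
http_status() {
  curl -s -o /dev/null -m 5 -w '%{http_code}' "$1"
}

# Hypothetical check: every URL must answer with 200.
check_web_ui() {
  local url code rc=0
  for url in "$@"; do
    code=$(http_status "$url")
    echo "$url -> $code"
    [ "$code" = "200" ] || rc=1
  done
  return "$rc"
}
```

For example: check_web_ui http://192.168.1.2:60010/master-status http://192.168.1.3:60030/rs-status http://192.168.1.4:60030/rs-status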

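Apart from the place where IPs are bound to hostnames (typically /etc/hosts), it is best to use hostnames everywhere; one consistent naming scheme keeps every step working.

Mixing IPs and hostnames can make something fail along the way, for example with "connection refused" or "unknown host" errors.

In my earlier posts on building the Hadoop cluster, it would also be better to replace some of the IP addresses with hostnames; that makes the setup clearer and better organized.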
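A minimal sketch for checking that a node resolves all cluster hostnames. The /etc/hosts entries in the comment are an assumption inferred from the logs in step 8 (192.168.1.2 writes hadoop0's SecondaryNameNode log, 192.168.1.3 hadoop1's DataNode log, 192.168.1.4 hadoop2's); check_hosts is a hypothetical name, and getent is assumed to be available, as it is on glibc-based systems such as CentOS:

```shell
# Assumed /etc/hosts entries on every node:
#   192.168.1.2 hadoop0
#   192.168.1.3 hadoop1
#   192.168.1.4 hadoop2
#
# Hypothetical check: print the address of each hostname, fail if any is unknown.
check_hosts() {
  local host rc=0
  for host in "$@"; do
    if getent hosts "$host" >/dev/null; then
      echo "$host -> $(getent hosts "$host" | awk '{ print $1; exit }')"
    else
      echo "$host: does not resolve" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Running check_hosts hadoop0 hadoop1 hadoop2 on each of the three nodes should print the same addresses everywhere.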

10. Use the HBase shell:

Enter the HBase shell:

[root@hadoop0 ~]# hbase shell
HBase Shell; enter 'help<RETURN>' for list of supported commands.
Type "exit<RETURN>" to leave the HBase Shell
Version 0.98.0-hadoop1, r1565492, Thu Feb 6 16:20:15 PST 2014
hbase(main):001:0>

Check the HBase cluster status:

hbase(main):002:0> status
2 servers, 0 dead, 1.0000 average load
hbase(main):003:0>

Check the HBase version:

hbase(main):003:0> version
0.98.0-hadoop1, r1565492, Thu Feb 6 16:20:15 PST 2014
hbase(main):004:0>

List the tables:

hbase(main):008:0> list
TABLE
0 row(s) in 0.1950 seconds
=> []
hbase(main):009:0>

Create a table named baozi with two column families, address and info:

hbase(main):009:0> create 'baozi','address','info'
0 row(s) in 49.7070 seconds
=> Hbase::Table - baozi
hbase(main):010:0>

List the tables again:

hbase(main):010:0> list
TABLE
baozi
1 row(s) in 8.3030 seconds
=> ["baozi"]
hbase(main):011:0>

Exit the HBase shell:

hbase(main):011:0> quit
[root@hadoop0 ~]#
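The commands typed at the prompt above can also be fed to the shell on standard input, which is handy in scripts. A minimal sketch, assuming hbase is on PATH (step 2); hbase_run is a hypothetical name:

```shell
# Hypothetical helper: send each argument as one HBase shell command on stdin,
# followed by "exit" so the shell terminates.
hbase_run() {
  printf '%s\n' "$@" exit | hbase shell
}
```

For example, hbase_run "status" "list" "describe 'baozi'" prints the cluster status, the table list and the column families of baozi without an interactive session.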

11. Shut down the HBase cluster:

Stop HBase first, then ZooKeeper, and finally Hadoop:

First, stop the HBase cluster by running stop-hbase.sh:

[root@hadoop0 ~]# stop-hbase.sh
stopping hbase.................................

Check the processes on hadoop0:

[root@hadoop0 ~]# jps
4575 JobTracker
7054 Jps
4744 QuorumPeerMain
4345 NameNode
4492 SecondaryNameNode

Check the processes on hadoop1:

[root@hadoop1 ~]# jps
4613 Jps
3459 DataNode
3535 TaskTracker
3651 QuorumPeerMain

Check the processes on hadoop2:

[root@hadoop2 ~]# jps
3428 TaskTracker
3546 QuorumPeerMain
3351 DataNode
4186 Jps

Second, stop the ZooKeeper cluster:

On hadoop0, run zkServer.sh stop:

[root@hadoop0 ~]# zkServer.sh stop
JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Stopping zookeeper ... STOPPED
[root@hadoop0 ~]#

On hadoop1, run zkServer.sh stop:

[root@hadoop1 ~]# zkServer.sh stop
JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Stopping zookeeper ... STOPPED
[root@hadoop1 ~]#

On hadoop2, run zkServer.sh stop:

[root@hadoop2 ~]# zkServer.sh stop
JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Stopping zookeeper ... STOPPED
[root@hadoop2 ~]#

Check the processes on hadoop0:

[root@hadoop0 ~]# jps
4575 JobTracker
7080 Jps
4345 NameNode
4492 SecondaryNameNode

Check the processes on hadoop1:

[root@hadoop1 ~]# jps
3459 DataNode
3535 TaskTracker
4636 Jps

Check the processes on hadoop2:

[root@hadoop2 ~]# jps
4209 Jps
3428 TaskTracker
3351 DataNode

Third, stop Hadoop by running stop-all.sh:

[root@hadoop0 ~]# stop-all.sh
Warning: $HADOOP_HOME is deprecated.
stopping jobtracker
192.168.1.4: stopping tasktracker
192.168.1.3: stopping tasktracker
stopping namenode
192.168.1.3: stopping datanode
192.168.1.4: stopping datanode
192.168.1.2: stopping secondarynamenode

Check the processes on hadoop0:

[root@hadoop0 ~]# jps
7548 Jps
[root@hadoop0 ~]#

Check the processes on hadoop1:

[root@hadoop1 ~]# jps
4780 Jps
[root@hadoop1 ~]#

Check the processes on hadoop2:

[root@hadoop2 ~]# jps
4353 Jps
[root@hadoop2 ~]#
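The shutdown is the start order reversed, and can likewise be run as a single function on hadoop0. A minimal sketch, assuming stop-hbase.sh and stop-all.sh are on PATH there, passwordless SSH to the ZooKeeper nodes, and that sourcing /etc/profile puts zkServer.sh on PATH on each of them; stop_cluster and ZK_HOSTS are hypothetical names:

```shell
# Hypothetical wrapper: HBase first, then ZooKeeper on every quorum node,
# then Hadoop. ZK_HOSTS may override the default host list.
stop_cluster() {
  local zk_hosts="${ZK_HOSTS:-hadoop0 hadoop1 hadoop2}"
  local host rc=0
  # If HBase does not stop cleanly, leave ZooKeeper and HDFS running.
  stop-hbase.sh || return 1
  # Unquoted $zk_hosts: word splitting into host names is intentional.
  for host in $zk_hosts; do
    ssh "$host" '. /etc/profile; zkServer.sh stop' || {
      echo "zookeeper stop failed on $host" >&2
      rc=1
    }
  done
  stop-all.sh || rc=1
  return "$rc"
}
```

A failed ZooKeeper stop on one node is reported but does not prevent Hadoop from being stopped afterwards.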