K-means++

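K-means++ addresses the problem of initializing the cluster centers in K-means by choosing the initial points effectively: the initial cluster centers should be as far apart from one another as possible. Wikipedia describes the algorithm as follows: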

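- Choose one point at random from the input data set as the first cluster center.

- For every point x in the data set, compute D(x), its distance to the nearest cluster center chosen so far.

- Choose a new data point as the next cluster center. The rule is that points with a larger D(x) are more likely to be chosen (Wikipedia specifies a probability proportional to D(x)^2).

- Repeat the previous two steps until k cluster centers have been chosen.

- Run the standard k-means algorithm with these k initial cluster centers.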
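The seeding steps above can be sketched with NumPy roughly as follows. This is only a minimal sketch: the function name `kmeanspp_init` and the toy data are made up for illustration, and it assumes the data contains at least k distinct points (otherwise the weights would sum to zero).

```python
import numpy as np


def kmeanspp_init(points, k, rng):
    """Pick k initial centers from points (shape (n, d)) by D(x)^2 sampling."""
    n = points.shape[0]
    # Step 1: the first center is a uniformly random data point.
    centers = [points[rng.integers(n)]]
    # Squared distance from every point to its nearest chosen center so far.
    d2 = np.sum((points - centers[0]) ** 2, axis=1)
    while len(centers) < k:
        # Step 3: sample the next center with probability D(x)^2 / sum(D(x)^2).
        idx = rng.choice(n, p=d2 / d2.sum())
        centers.append(points[idx])
        # Step 2 (for the next round): refresh the nearest-center distances.
        d2 = np.minimum(d2, np.sum((points - points[idx]) ** 2, axis=1))
    return np.array(centers)


rng = np.random.default_rng(0)
# Hypothetical toy data: three tight groups that are far apart.
points = np.array([[0.0, 0.0], [0.1, 0.0],
                   [5.0, 5.0], [5.1, 5.0],
                   [9.0, 0.0], [9.1, 0.0]])
centers = kmeanspp_init(points, 3, rng)
print(centers)
```

A point that is already a center has D(x) = 0, so it gets probability zero and cannot be picked twice. The returned array is what would be handed to the standard k-means loop as its starting centers.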

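K-Means has two major drawbacks, both related to its initial values:

1. K must be given in advance, and a suitable value is very hard to estimate. Often we do not know beforehand how many clusters a given data set should be split into. (The ISODATA algorithm arrives at a more reasonable number of clusters K by merging and splitting clusters automatically.)

2. K-Means needs random seed points to start from, and these seeds matter a great deal: different seed points can produce completely different results. (K-Means++ addresses this problem by choosing the initial points effectively.)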
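To make the second drawback concrete, here is a small sketch that runs plain k-means on 1-D data from two different sets of starting centers. The helper `lloyd_1d` and the six data values are made up for this illustration.

```python
import numpy as np


def lloyd_1d(data, centers, iters=20):
    """Run standard k-means on 1-D data from the given initial centers."""
    data = np.asarray(data, dtype=float)
    centers = np.asarray(centers, dtype=float).copy()
    for _ in range(iters):
        # Assign every point to its nearest center.
        labels = np.argmin(np.abs(data[:, None] - centers[None, :]), axis=1)
        # Move each center to the mean of its points (keep it if it has none).
        for j in range(len(centers)):
            if np.any(labels == j):
                centers[j] = data[labels == j].mean()
    # Sum of squared errors between each point and its center.
    sse = float(np.sum((data - centers[labels]) ** 2))
    return centers, sse


data = [0, 1, 10, 11, 20, 21]
# Seeds spread over the three groups.
good_centers, good_sse = lloyd_1d(data, [0, 10, 20])
# Seeds crowded into one corner of the data.
bad_centers, bad_sse = lloyd_1d(data, [0, 1, 10])
print(good_centers, good_sse)   # SSE is 1.5
print(bad_centers, bad_sse)     # SSE is 101.0
```

Both runs converge, but the second one gets stuck with two centers sharing the first group and one center covering the other two groups. Spreading the seeds out, which is what K-Means++ aims for, makes this kind of outcome less likely.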

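K-Means++ algorithm steps:

- First pick one point at random from the data set as a "seed point".

- For every point, compute D(x), its distance to the nearest "seed point", and store it in an array. Then add these distances up to get Sum(D(x)).

- Next, draw another random value and use it to pick the next "seed point" by weight. In the implementation, first draw a random value Random that falls within Sum(D(x)), then walk through the points doing Random -= D(x) until Random <= 0. The point reached at that moment is the next "seed point".

- Repeat the second and third steps until all K seed points have been chosen.

- Run the K-Means algorithm.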
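The "Random -= D(x)" selection in the third step can be sketched on its own like this. The function name `pick_by_subtraction` and the four D(x) values are hypothetical, chosen only so that the arithmetic is easy to follow.

```python
import random


def pick_by_subtraction(weights, r):
    """Return the index selected by the 'Random -= D(x)' loop.

    weights: the D(x) values, one per point.
    r: a value drawn from [0, sum(weights)).
    """
    for index, w in enumerate(weights):
        r -= w
        if r <= 0:
            return index
    # Floating-point round-off can leave r slightly positive; use the last point.
    return len(weights) - 1


# Hypothetical D(x) values for four points; their sum is 10.
d = [1.0, 2.0, 3.0, 4.0]
print(pick_by_subtraction(d, 0.5))   # 0: 0.5 - 1 <= 0
print(pick_by_subtraction(d, 2.5))   # 1: 2.5 - 1 - 2 <= 0
print(pick_by_subtraction(d, 9.9))   # 3: only the last subtraction goes below 0

# In real use, r is drawn at random from the total weight.
print(pick_by_subtraction(d, random.uniform(0, sum(d))))
```

Each point owns a slice of the range [0, Sum(D(x))) whose width equals its own D(x), and the loop simply finds the slice that the random value falls into.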

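The key to K-means++ is step 3, choosing the initial cluster centers. As for why points with a larger D(x) are more likely to be chosen as cluster centers, this article gives a simple explanation: http://www.cnblogs.com/shelocks/archive/2012/12/20/2826787.html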

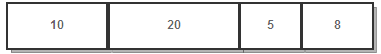

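Suppose the D(x) values of A, B, C and D are as shown in the figure above. When the algorithm draws the value Sum(D(x))*random, that value is more likely to fall into an interval belonging to a larger D(x), so the corresponding point is more likely to be chosen as the new cluster center.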
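The same argument can be checked numerically. The sketch below uses made-up D(x) values for A, B, C and D (they are not read from the figure), lays them end to end as intervals, and counts how often a random draw lands in each one.

```python
import numpy as np

# Hypothetical D(x) values for points A, B, C, D (not taken from the figure).
names = ["A", "B", "C", "D"]
d = np.array([1.0, 2.0, 3.0, 4.0])

# Right edges of the intervals that A, B, C, D occupy on [0, Sum(D(x))).
edges = np.cumsum(d)
# Chance that Sum(D(x)) * random lands in each interval.
probs = d / d.sum()

rng = np.random.default_rng(0)
draws = rng.random(100_000) * d.sum()
# side="right" maps a draw r to the first interval whose right edge exceeds r.
picked = np.searchsorted(edges, draws, side="right")
freq = np.bincount(picked, minlength=len(d)) / len(draws)

for name, p, f in zip(names, probs, freq):
    print(f"{name}: expected {p:.2f}, observed {f:.3f}")
```

With these values D has the widest interval and should be picked about 40% of the time, while A should be picked about 10% of the time. The observed frequencies should come out close to D(x) / Sum(D(x)).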

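Below is a Python implementation of the K-means++ algorithm. I am not sure whether it is correct; it follows the C implementation in this post: http://blog.chinaunix.net/uid-24774106-id-3412491.html?page=2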

import numpy as np
import matplotlib.pyplot as plt


# show your cluster; only available with 2-D data
def showCluster(dataSet, k, centroids, clusterAssment):
    numSamples, dim = dataSet.shape
    if dim != 2:
        print("Sorry! I can not draw because the dimension of your data is not 2!")
        return 1

    mark = ['or', 'ob', 'og', 'ok', '^r', '+r', 'sr', 'dr', '<r', 'pr']
    if k > len(mark):
        print("Sorry! Your k is too large! please contact Zouxy")
        return 1

    # draw all samples
    for i in range(numSamples):
        markIndex = int(clusterAssment[i, 0])
        plt.plot(dataSet[i, 0], dataSet[i, 1], mark[markIndex])

    mark = ['Dr', 'Db', 'Dg', 'Dk', '^b', '+b', 'sb', 'db', '<b', 'pb']
    # draw the centroids
    for i in range(k):
        plt.plot(centroids[i, 0], centroids[i, 1], mark[i], markersize=12)

    plt.show()


# calculate Euclidean distance
def euclDistance(vector1, vector2):
    return np.sqrt(np.sum(np.power(vector2 - vector1, 2)))


# k-means++ seeding: choose the k initial centroids
def findCentroids(dataSet, k):
    numSamples, dim = dataSet.shape
    dist = np.zeros(numSamples, dtype=float)
    centroids = np.zeros((k, dim))

    # the first centroid is a uniformly random sample
    index = np.random.randint(numSamples)
    centroids[0, :] = dataSet[index, :]

    for i in range(1, k):
        total = 0.0
        for j in range(numSamples):
            # distance from sample j to its nearest already-chosen centroid
            minDist = np.inf
            for n in range(i):
                d = euclDistance(centroids[n, :], dataSet[j, :])
                if d < minDist:
                    minDist = d
            # the steps above weight by D(x); k-means++ proper uses D(x)^2,
            # so the squared distance is stored as the weight here
            dist[j] = minDist ** 2
            total += dist[j]

        # draw a value within the total weight, then subtract the weights
        # one by one until it drops to zero or below
        r = np.random.uniform(0, total)
        chosen = numSamples - 1  # fallback in case of floating-point round-off
        for m in range(numSamples):
            r -= dist[m]
            if r <= 0:
                chosen = m
                break
        centroids[i, :] = dataSet[chosen, :]

    return centroids


# k-means clustering with k-means++ initialization
def kmeanspp(dataSet, k):
    numSamples = dataSet.shape[0]
    # first column stores which cluster this sample belongs to,
    # second column stores the squared error between this sample and its centroid
    clusterAssment = np.zeros((numSamples, 2))
    clusterChanged = True

    # step 1: init centroids
    centroids = findCentroids(dataSet, k)
    showCluster(dataSet, k, centroids, clusterAssment)

    while clusterChanged:
        clusterChanged = False
        # for each sample
        for i in range(numSamples):
            minDist = np.inf
            minIndex = 0
            # step 2: find the centroid that is closest
            for j in range(k):
                distance = euclDistance(centroids[j, :], dataSet[i, :])
                if distance < minDist:
                    minDist = distance
                    minIndex = j

            # step 3: update its cluster
            if clusterAssment[i, 0] != minIndex:
                clusterChanged = True
            clusterAssment[i, :] = minIndex, minDist ** 2

        # step 4: update centroids
        for j in range(k):
            pointsInCluster = dataSet[clusterAssment[:, 0] == j]
            # keep the old centroid if a cluster ends up empty
            if len(pointsInCluster) > 0:
                centroids[j, :] = np.mean(pointsInCluster, axis=0)

    print('Congratulations, cluster complete!')
    return centroids, clusterAssment


if __name__ == '__main__':
    # step 1: load data
    print("step 1: load data...")
    dataSet = []
    with open('C://Python27/test/testSet.txt') as fileIn:
        for line in fileIn:
            lineArr = line.strip().split('\t')
            dataSet.append([float(lineArr[0]), float(lineArr[1])])

    # step 2: clustering
    print("step 2: clustering...")
    dataSet = np.array(dataSet)
    k = 4
    centroids, clusterAssment = kmeanspp(dataSet, k)

    # step 3: show the result
    print("step 3: show the result...")
    showCluster(dataSet, k, centroids, clusterAssment)

References:

1) http://coolshell.cn/articles/7779.html

2) http://en.wikipedia.org/wiki/K-means%2B%2B

3) http://blog.chinaunix.net/uid-24774106-id-3412491.html?page=2

4) http://www.cnblogs.com/shelocks/archive/2012/12/20/2826787.html