Ceph storage: the meaning of PGs in Ceph

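Ceph introduces the concept of a PG (placement group). A PG is purely a logical concept and does not correspond to any physical entity; the notes below explain it in detail.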

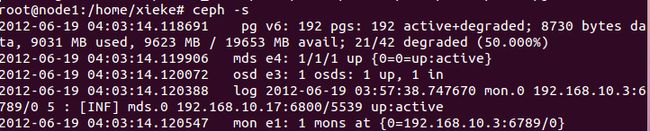

As the figure shows, Ceph first maps objects to PGs and then maps PGs to OSDs. An object can be part of a data file, a journal file, or a directory file (including embedded inodes).

With one OSD, there are 192 PGs by default.

With two OSDs, there are 2*192 = 384 PGs by default.

PG (Placement Group) notes

Miscellaneous copy-pastes from emails; when this gets cleaned up it should move out of /dev.

Overview

PG = “placement group”. When placing data in the cluster, objects are mapped into PGs, and those PGs are mapped onto OSDs. We use the indirection so that we can group objects, which reduces the amount of per-object metadata we need to keep track of and processes we need to run (it would be prohibitively expensive to track e.g. the placement history on a per-object basis). Increasing the number of PGs can reduce the variance in per-OSD load across your cluster, but each PG requires a bit more CPU and memory on the OSDs that are storing it. We try and ballpark it at 100 PGs/OSD, although it can vary widely without ill effects depending on your cluster. You hit a bug in how we calculate the initial PG number from a cluster description.

There are a couple of different categories of PGs; the 6 that exist (in the original emailer’s ceph -s output) are “local” PGs which are tied to a specific OSD. However, those aren’t actually used in a standard Ceph configuration.

Mapping algorithm (simplified)

Many objects map to one PG.

Each object maps to exactly one PG.

One PG maps to a single list of OSDs, where the first one in the list is the primary and the rest are replicas.

Many PGs can map to one OSD.

A PG represents nothing but a grouping of objects; you configure the number of PGs you want (see http://ceph.com/wiki/Changing_the_number_of_PGs ), number of OSDs * 100 is a good starting point, and all of your stored objects are pseudo-randomly evenly distributed to the PGs. So a PG explicitly does NOT represent a fixed amount of storage; it represents 1/pg_num'th of the storage you happen to have on your OSDs.
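To make the sizing rule concrete, here is a small sketch of the arithmetic. The function names `starting_pg_count` and `share_per_pg` are hypothetical, and 100 PGs per OSD is only the ballpark quoted above, not a hard rule.

```python
def starting_pg_count(num_osds, pgs_per_osd=100):
    """Ballpark starting PG count: number of OSDs * 100."""
    if num_osds < 1:
        raise ValueError("need at least one OSD")
    return num_osds * pgs_per_osd


def share_per_pg(pg_num):
    """Expected fraction of all stored data that lands in one PG."""
    return 1.0 / pg_num


pg_num = starting_pg_count(12)
print(pg_num, share_per_pg(pg_num))
```

The share is a fraction, not a byte count: if the cluster stores more data, every PG grows with it.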

Ignoring the finer points of CRUSH and custom placement, it goes something like this in pseudocode:

    locator = object_name
    obj_hash = hash(locator)
    pg = obj_hash % num_pg
    osds_for_pg = crush(pg)  # returns a list of osds
    primary = osds_for_pg[0]
    replicas = osds_for_pg[1:]
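The first half of that pseudocode (object name to PG) can be tried out directly. The following is a minimal sketch, assuming SHA-256 as a stand-in hash; Ceph uses its own hash function, and the helper names `stable_hash`, `object_to_pg` and `group_by_pg` are hypothetical. Python's built-in `hash()` is avoided because its string hashing is randomized per process, which would make the mapping unstable between runs.

```python
import hashlib
from collections import defaultdict


def stable_hash(text):
    """Deterministic 32-bit hash (stand-in for Ceph's real object hash)."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")


def object_to_pg(object_name, num_pg):
    """Map an object name to exactly one PG index in [0, num_pg)."""
    locator = object_name
    return stable_hash(locator) % num_pg


def group_by_pg(object_names, num_pg):
    """Collect object names under the PG each one maps to."""
    groups = defaultdict(list)
    for name in object_names:
        groups[object_to_pg(name, num_pg)].append(name)
    return dict(groups)


names = ["obj-%d" % i for i in range(1000)]
groups = group_by_pg(names, num_pg=8)
for pg in sorted(groups):
    # Each PG ends up holding many objects, in roughly equal numbers.
    print(pg, len(groups[pg]))
```

Every object appears under exactly one PG, while each PG collects many objects, which matches the many-to-one rule stated above.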

If you want to understand the crush() part in the above, imagine a perfectly spherical datacenter in a vacuum ;) that is, if all OSDs have weight 1.0, and there is no topology to the data center (all OSDs are on the top level), and you use defaults, etc, it simplifies to consistent hashing; you can think of it as:

    def crush(pg):
        all_osds = ['osd.0', 'osd.1', 'osd.2', ...]
        result = []
        # size is the number of copies; primary+replicas
        while len(result) < size:
            r = hash(pg)
            chosen = all_osds[ r % len(all_osds) ]
            if chosen in result:
                # osd can be picked only once
                continue
            result.append(chosen)
        return result
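Read literally, the pseudocode would hash the same value on every retry, so a collision could never resolve. A runnable sketch therefore has to vary the hash input between attempts. The version below mixes in an attempt counter, which is an assumption made for illustration and not a description of the real CRUSH algorithm; `stable_hash` and `simple_crush` are hypothetical names, and SHA-256 is a stand-in hash.

```python
import hashlib


def stable_hash(*parts):
    """Deterministic integer hash of the given parts (stand-in hash)."""
    data = ":".join(str(p) for p in parts).encode("utf-8")
    return int.from_bytes(hashlib.sha256(data).digest()[:8], "big")


def simple_crush(pg, all_osds, size):
    """Pick `size` distinct OSDs for a PG; the first is the primary."""
    if size > len(all_osds):
        raise ValueError("not enough OSDs for the requested number of copies")
    result = []
    attempt = 0
    while len(result) < size:
        # Mix the attempt counter into the hash so a retry can land elsewhere.
        r = stable_hash(pg, attempt)
        attempt += 1
        chosen = all_osds[r % len(all_osds)]
        if chosen in result:
            # an OSD can be picked only once
            continue
        result.append(chosen)
    return result


osds = ["osd.0", "osd.1", "osd.2", "osd.3"]
osds_for_pg = simple_crush(pg=7, all_osds=osds, size=3)
primary, replicas = osds_for_pg[0], osds_for_pg[1:]
print(primary, replicas)
```

Because the hash is deterministic, any client that knows the PG number and the OSD list computes the same placement without consulting a lookup table.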

User-visible PG States

Todo

diagram of states and how they can overlap

- creating
  - the PG is still being created
- active
  - requests to the PG will be processed
- clean
  - all objects in the PG are replicated the correct number of times
- down
  - a replica with necessary data is down, so the PG is offline
- replay
  - the PG is waiting for clients to replay operations after an OSD crashed
- splitting
  - the PG is being split into multiple PGs (not functional as of 2012-02)
- scrubbing
  - the PG is being checked for inconsistencies
- degraded
  - some objects in the PG are not replicated enough times yet
- inconsistent
  - replicas of the PG are not consistent (e.g. objects are the wrong size, objects are missing from one replica after recovery finished, etc.)
- peering
  - the PG is undergoing the Peering process
- repair
  - the PG is being checked and any inconsistencies found will be repaired (if possible)
- recovering
  - objects are being migrated/synchronized with replicas
- backfill
  - a special case of recovery, in which the entire contents of the PG are scanned and synchronized, instead of inferring what needs to be transferred from the PG logs of recent operations
- incomplete
  - a PG is missing a necessary period of history from its log. If you see this state, report a bug, and try to start any failed OSDs that may contain the needed information.
- stale
  - the PG is in an unknown state - the monitors have not received an update for it since the PG mapping changed.
- remapped
  - the PG is temporarily mapped to a different set of OSDs from what CRUSH specified
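Because these states can overlap, a PG usually reports several at once, and Ceph status output typically joins them with `+` (for example `active+clean`). The sketch below splits such a string and checks it against the states listed above. The helper names `parse_pg_state` and `is_settled` are hypothetical, and treating exactly `active+clean` as the settled state is an illustrative assumption.

```python
KNOWN_STATES = {
    "creating", "active", "clean", "down", "replay", "splitting",
    "scrubbing", "degraded", "inconsistent", "peering", "repair",
    "recovering", "backfill", "incomplete", "stale", "remapped",
}


def parse_pg_state(state_string):
    """Split a combined state such as 'active+clean' into a set of states."""
    states = set(state_string.split("+"))
    unknown = states - KNOWN_STATES
    if unknown:
        raise ValueError("unrecognized PG states: %s" % sorted(unknown))
    return states


def is_settled(states):
    """True when the PG is active and clean and reports no other state."""
    return states == {"active", "clean"}


print(is_settled(parse_pg_state("active+clean")))
print(is_settled(parse_pg_state("active+degraded+remapped")))
```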