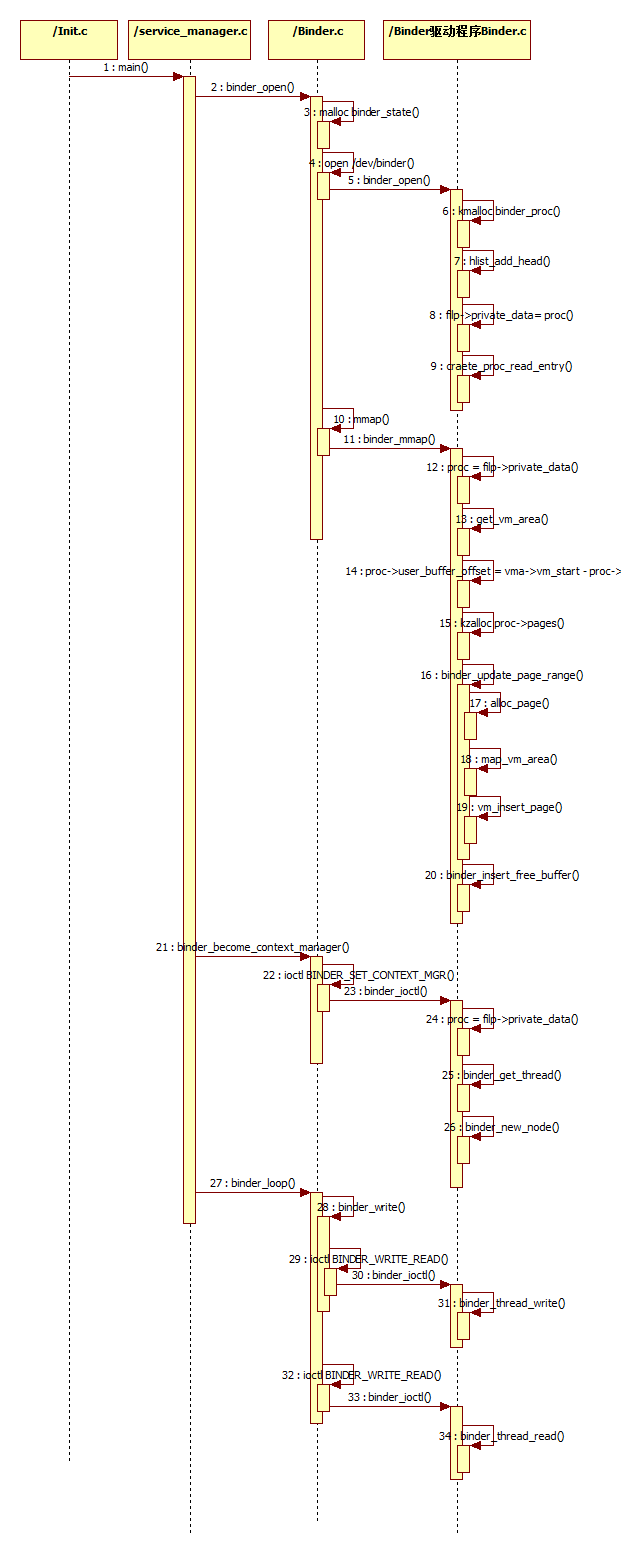

Startup Process of the Service Manager Component in the Binder IPC Mechanism

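In Binder inter-process communication, the Service Manager component acts as the context manager: it manages Service components and gives Client components a way to obtain Service proxy objects. Its startup can be divided into three stages: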

1. Open and map the Binder device file;

2. Become the context manager;

3. Loop, waiting for requests from Client processes.

The source code below walks through these three stages.

The Service Manager component of the Binder mechanism is a user-space process started by the init process. Its startup script is as follows:

# Start as a service; the service name is servicemanager and the executable is /system/bin/servicemanager

service servicemanager /system/bin/servicemanager

# Belongs to the core class

class core

# Runs as the system user

user system

# Belongs to the system group

group system

# Critical service: if it exits repeatedly within a short period, init reboots the device into recovery mode

critical

# When it restarts, the healthd, zygote, media, surfaceflinger, and drm services are restarted as well

onrestart restart healthd

onrestart restart zygote

onrestart restart media

onrestart restart surfaceflinger

onrestart restart drm

The entry point of the Service Manager program, main, is in the file service_manager.c. Its source shows the startup process:

int main(int argc, char **argv)

{

struct binder_state *bs;

// 1. Open the Binder driver's device file /dev/binder and map it into this process's address space

bs = binder_open(128*1024);

if (!bs) {

ALOGE("failed to open binder driver\n");

return -1;

}

// 2. Register itself as the context manager of the Binder IPC mechanism

if (binder_become_context_manager(bs)) {

ALOGE("cannot become context manager (%s)\n", strerror(errno));

return -1;

}

selinux_enabled = is_selinux_enabled();

sehandle = selinux_android_service_context_handle();

if (selinux_enabled > 0) {

if (sehandle == NULL) {

ALOGE("SELinux: Failed to acquire sehandle. Aborting.\n");

abort();

}

if (getcon(&service_manager_context) != 0) {

ALOGE("SELinux: Failed to acquire service_manager context. Aborting.\n");

abort();

}

}

union selinux_callback cb;

cb.func_audit = audit_callback;

selinux_set_callback(SELINUX_CB_AUDIT, cb);

cb.func_log = selinux_log_callback;

selinux_set_callback(SELINUX_CB_LOG, cb);

svcmgr_handle = BINDER_SERVICE_MANAGER;

// 3. Loop, waiting for and handling IPC requests from Client processes

binder_loop(bs, svcmgr_handler);

return 0;

}

1. Open and map the Binder device file

struct binder_state *binder_open(size_t mapsize)

{

struct binder_state *bs;

struct binder_version vers;

bs = malloc(sizeof(*bs));

if (!bs) {

errno = ENOMEM;

return NULL;

}

// Open the device file; this ends up calling the Binder driver's binder_open

bs->fd = open("/dev/binder", O_RDWR);

if (bs->fd < 0) {

fprintf(stderr,"binder: cannot open device (%s)\n",

strerror(errno));

goto fail_open;

}

if ((ioctl(bs->fd, BINDER_VERSION, &vers) == -1) ||

(vers.protocol_version != BINDER_CURRENT_PROTOCOL_VERSION)) {

fprintf(stderr, "binder: driver version differs from user space\n");

goto fail_open;

}

// Save the size of the address range to be mapped in the mapsize member of bs

bs->mapsize = mapsize;

// Map the device file into the process's address space; this ends up calling the Binder driver's binder_mmap

/* Save the start address of the mapped region in the mapped member of bs */

bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);

if (bs->mapped == MAP_FAILED) {

fprintf(stderr,"binder: cannot map device (%s)\n",

strerror(errno));

goto fail_map;

}

// Return the binder_state structure bs

return bs;

fail_map:

close(bs->fd);

fail_open:

free(bs);

return NULL;

}
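The open-then-map sequence and its goto-based cleanup can be tried outside Android with a minimal sketch. Everything named here is an assumption for illustration: struct map_state, map_open, and map_close are hypothetical stand-ins for binder_state and binder_open, and the device path is a parameter so that a generic device such as /dev/zero can be used where /dev/binder is not available.

```c
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <stdlib.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical stand-in for struct binder_state. */
struct map_state {
    int fd;
    void *mapped;
    size_t mapsize;
};

/* Open `path` and map `mapsize` bytes read-only.
 * On failure, undo whatever was already done and return NULL. */
struct map_state *map_open(const char *path, size_t mapsize)
{
    struct map_state *ms;

    assert(mapsize > 0);
    ms = malloc(sizeof(*ms));
    if (!ms) {
        errno = ENOMEM;
        return NULL;
    }

    ms->fd = open(path, O_RDWR);
    if (ms->fd < 0)
        goto fail_open;          /* nothing to close yet */

    ms->mapsize = mapsize;
    ms->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, ms->fd, 0);
    if (ms->mapped == MAP_FAILED)
        goto fail_map;           /* the descriptor must be closed */

    return ms;

fail_map:
    close(ms->fd);
fail_open:
    free(ms);
    return NULL;
}

/* Release the mapping, the descriptor, and the state structure. */
void map_close(struct map_state *ms)
{
    munmap(ms->mapped, ms->mapsize);
    close(ms->fd);
    free(ms);
}
```

Calling map_open("/dev/zero", 128 * 1024) mirrors the 128 KB request made in main. The labels are ordered so that each failure point releases only the resources acquired before it.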

2. Become the context manager

int binder_become_context_manager(struct binder_state *bs)

{

// This ends up calling the Binder driver's binder_ioctl

return ioctl(bs->fd, BINDER_SET_CONTEXT_MGR, 0);

}

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

// Fetch the binder_proc structure created in binder_open and store it in proc

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

/*printk(KERN_INFO "binder_ioctl: %d:%d %x %lx\n", proc->pid, current->pid, cmd, arg);*/

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

return ret;

mutex_lock(&binder_lock);

// Find or create the binder_thread structure for the current thread, which is the main thread of the Service Manager process and also one of its Binder threads

thread = binder_get_thread(proc);

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

static struct binder_thread *binder_get_thread(struct binder_proc *proc)

{

struct binder_thread *thread = NULL;

struct rb_node *parent = NULL;

struct rb_node **p = &proc->threads.rb_node;

// Search the red-black tree described by the threads member of binder_proc for the current thread's PID

while (*p) {

parent = *p;

thread = rb_entry(parent, struct binder_thread, rb_node);

if (current->pid < thread->pid)

p = &(*p)->rb_left;

else if (current->pid > thread->pid)

p = &(*p)->rb_right;

else

break;

}

if (*p == NULL) {

// Not found: create a binder_thread structure for the current thread, initialize it, and return it

thread = kzalloc(sizeof(*thread), GFP_KERNEL);

if (thread == NULL)

return NULL;

binder_stats.obj_created[BINDER_STAT_THREAD]++;

thread->proc = proc;

thread->pid = current->pid;

init_waitqueue_head(&thread->wait);

INIT_LIST_HEAD(&thread->todo);

rb_link_node(&thread->rb_node, parent, p);

rb_insert_color(&thread->rb_node, &proc->threads);

thread->looper |= BINDER_LOOPER_STATE_NEED_RETURN;

thread->return_error = BR_OK;

thread->return_error2 = BR_OK;

}

return thread;

}
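The find-or-create pattern in binder_get_thread can be sketched in user space. This is a simplified model, not the driver's code: it uses a plain unbalanced binary search tree instead of the kernel's red-black tree, and struct thread_rec and get_thread are hypothetical names.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical stand-in for struct binder_thread, keyed by thread id. */
struct thread_rec {
    int pid;
    unsigned looper;
    struct thread_rec *left, *right;
};

/* Return the record for `pid`, creating and linking it on first use.
 * Returns NULL only if allocation fails. */
struct thread_rec *get_thread(struct thread_rec **root, int pid)
{
    struct thread_rec **p = root;
    struct thread_rec *t;

    assert(root != NULL);
    while (*p) {
        if (pid < (*p)->pid)
            p = &(*p)->left;
        else if (pid > (*p)->pid)
            p = &(*p)->right;
        else
            return *p;           /* already known: reuse the record */
    }

    t = calloc(1, sizeof(*t));   /* zero-filled, like kzalloc */
    if (!t)
        return NULL;
    t->pid = pid;
    *p = t;                      /* link at the empty slot where the search ended */
    return t;
}
```

As in the driver, the search keeps a pointer to the link it last followed, so a failed lookup already knows where the new record belongs and no second traversal is needed.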

case BINDER_SET_CONTEXT_MGR:

if (binder_context_mgr_node != NULL) {

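// A component has already registered itself as the context manager of the Binder IPC mechanism; duplicate registration is not allowed, so return an error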

printk(KERN_ERR "binder: BINDER_SET_CONTEXT_MGR already set\n");

ret = -EBUSY;

goto err;

}

if (binder_context_mgr_uid != -1) {

// An earlier registration already recorded the context manager's UID

if (binder_context_mgr_uid != current->cred->euid) {

// The caller's effective UID differs from the recorded one: return an error

printk(KERN_ERR "binder: BINDER_SET_"

"CONTEXT_MGR bad uid %d != %d\n",

current->cred->euid,

binder_context_mgr_uid);

ret = -EPERM;

goto err;

}

} else

binder_context_mgr_uid = current->cred->euid;

// Create a Binder node (entity object) for Service Manager and store it in the global variable binder_context_mgr_node

binder_context_mgr_node = binder_new_node(proc, NULL, NULL);

if (binder_context_mgr_node == NULL) {

ret = -ENOMEM;

goto err;

}

// Initialize the local weak reference count

binder_context_mgr_node->local_weak_refs++;

// Initialize the local strong reference count

binder_context_mgr_node->local_strong_refs++;

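// Service Manager exists for the whole lifetime of the system and there is only one, so the driver never needs to ask it to take references; has_strong_ref and has_weak_ref are simply set to 1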

binder_context_mgr_node->has_strong_ref = 1;

binder_context_mgr_node->has_weak_ref = 1;

break;
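The registration rules enforced by the BINDER_SET_CONTEXT_MGR case can be modelled in a few lines. This is a user-space sketch under stated assumptions: struct ctx_mgr_state and set_context_mgr are hypothetical, and the node is passed in rather than allocated, so the -ENOMEM path is left out.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical model of the driver's two globals. */
struct ctx_mgr_state {
    const void *node;   /* non-NULL once a context manager is registered */
    long uid;           /* -1 until a UID has been recorded */
};

/* Returns 0 on success, -EBUSY if a manager already exists,
 * -EPERM if a different effective UID was recorded earlier. */
int set_context_mgr(struct ctx_mgr_state *st, const void *node, long euid)
{
    assert(st != NULL && node != NULL);
    if (st->node != NULL)
        return -EBUSY;
    if (st->uid != -1 && st->uid != euid)
        return -EPERM;
    st->uid = euid;     /* first caller fixes the UID */
    st->node = node;
    return 0;
}
```

The two checks are independent: the node check rejects a second manager while one is registered, and the UID check rejects a caller whose effective UID differs from the one recorded by an earlier registration.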

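/* Create a Binder node (entity object)

proc points to the binder_proc structure to operate on

ptr is the address of the weak reference count object inside the Binder local object

cookie is the address of the Binder local object

If proc refers to Service Manager, both ptr and cookie are NULL */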

static struct binder_node *

binder_new_node(struct binder_proc *proc, void __user *ptr, void __user *cookie)

{

struct rb_node **p = &proc->nodes.rb_node;

struct rb_node *parent = NULL;

struct binder_node *node;

// Search the red-black tree described by the nodes member of proc for a Binder node already created for the Binder local object described by ptr and cookie

while (*p) {

parent = *p;

node = rb_entry(parent, struct binder_node, rb_node);

if (ptr < node->ptr)

p = &(*p)->rb_left;

else if (ptr > node->ptr)

p = &(*p)->rb_right;

else

// Found: a node already exists, so return NULL

return NULL;

}

// Not found: create a new Binder node

node = kzalloc(sizeof(*node), GFP_KERNEL);

if (node == NULL)

return NULL;

binder_stats.obj_created[BINDER_STAT_NODE]++;

rb_link_node(&node->rb_node, parent, p);

// Insert the new Binder node into the red-black tree described by the nodes member of proc

rb_insert_color(&node->rb_node, &proc->nodes);

// Initialize the Binder node

node->debug_id = ++binder_last_id;

node->proc = proc;

node->ptr = ptr;

node->cookie = cookie;

node->work.type = BINDER_WORK_NODE;

INIT_LIST_HEAD(&node->work.entry);

INIT_LIST_HEAD(&node->async_todo);

if (binder_debug_mask & BINDER_DEBUG_INTERNAL_REFS)

printk(KERN_INFO "binder: %d:%d node %d u%p c%p created\n",

proc->pid, current->pid, node->debug_id,

node->ptr, node->cookie);

return node;

}

3. Loop, waiting for requests from Client processes

void binder_loop(struct binder_state *bs, binder_handler func)

{

int res;

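// The binder_write_read structure bwr describes the data transferred during IPC

// write is the input: data passed from the user-space process to the Binder driver in kernel space

// read is the output: data returned from the Binder driver to the user-space process, i.e. the result of the IPC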

struct binder_write_read bwr;

uint32_t readbuf[32];

// "write" means writing command protocol codes to the Binder driver

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

/* Service Manager uses the BC_ENTER_LOOPER protocol to register itself with the Binder driver,

voluntarily becoming a Binder thread so that the driver can dispatch IPC requests to it */

// Put the protocol code BC_ENTER_LOOPER into the buffer readbuf

readbuf[0] = BC_ENTER_LOOPER;

// Write the protocol code in the buffer to the Binder driver

binder_write(bs, readbuf, sizeof(uint32_t));

for (;;) {

// "read" means reading IPC requests from the Binder driver

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (uintptr_t) readbuf;

// Repeatedly issue the BINDER_WRITE_READ ioctl to check whether the Binder driver has new IPC requests to handle

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

ALOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));

break;

}

// Handle the IPC requests delivered by the Binder driver

res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);

if (res == 0) {

ALOGE("binder_loop: unexpected reply?!\n");

break;

}

if (res < 0) {

ALOGE("binder_loop: io error %d %s\n", res, strerror(errno));

break;

}

}

}

int binder_write(struct binder_state *bs, void *data, size_t len)

{

struct binder_write_read bwr;

int res;

bwr.write_size = len;

bwr.write_consumed = 0;

// Use the buffer pointed to by data as the input (write) buffer of bwr

bwr.write_buffer = (uintptr_t) data;

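// Leave the output (read) buffer of bwr empty so that the current thread returns to user space immediately after registering with the Binder driver, instead of waiting in the driver for Client IPC requests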

bwr.read_size = 0;

bwr.read_consumed = 0;

bwr.read_buffer = 0;

// Call the Binder driver's binder_ioctl to deliver the commands; here this registers the current thread with the driver

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

fprintf(stderr,"binder_write: ioctl failed (%s)\n",

strerror(errno));

}

return res;

}
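binder_write and binder_loop fill the same structure in two different ways, and that difference decides whether the ioctl can block. The sketch below models this in user space. struct bwr_model and the three helpers are hypothetical, and the field widths are an assumption rather than the driver's real definitions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical mirror of struct binder_write_read; field widths are assumed. */
struct bwr_model {
    uintptr_t write_size, write_consumed, write_buffer;
    uintptr_t read_size, read_consumed, read_buffer;
};

/* Write-only request, as in binder_write: the driver processes the
 * commands and returns at once because there is nothing to read into. */
struct bwr_model bwr_write_only(const void *data, size_t len)
{
    struct bwr_model bwr = {0};
    bwr.write_size = len;
    bwr.write_buffer = (uintptr_t)data;
    return bwr;
}

/* Read-only request, as in the binder_loop body: the caller may sleep
 * in the driver until work arrives. */
struct bwr_model bwr_read_only(void *buf, size_t len)
{
    struct bwr_model bwr = {0};
    bwr.read_size = len;
    bwr.read_buffer = (uintptr_t)buf;
    return bwr;
}

/* True when the request asks the driver to read, and so may block. */
int bwr_may_block(const struct bwr_model *bwr)
{
    assert(bwr != NULL);
    return bwr->read_size > 0;
}
```

With a uint32_t readbuf[32] as in binder_loop, the write-only request carries 4 bytes (one protocol code) and the read-only request offers 128 bytes of space for the driver's replies.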

switch (cmd) {

case BINDER_WRITE_READ: {

struct binder_write_read bwr;

if (size != sizeof(struct binder_write_read)) {

ret = -EINVAL;

goto err;

}

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

ret = -EFAULT;

goto err;

}

if (binder_debug_mask & BINDER_DEBUG_READ_WRITE)

printk(KERN_INFO "binder: %d:%d write %ld at %08lx, read %ld at %08lx\n",

proc->pid, thread->pid, bwr.write_size, bwr.write_buffer, bwr.read_size, bwr.read_buffer);

if (bwr.write_size > 0) {

// Handle the BC_ENTER_LOOPER protocol

ret = binder_thread_write(proc, thread, (void __user *)bwr.write_buffer, bwr.write_size, &bwr.write_consumed);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

if (bwr.read_size > 0) {

// Check whether Service Manager has new IPC requests to handle

ret = binder_thread_read(proc, thread, (void __user *)bwr.read_buffer, bwr.read_size, &bwr.read_consumed, filp->f_flags & O_NONBLOCK);

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

case BC_ENTER_LOOPER:

if (binder_debug_mask & BINDER_DEBUG_THREADS)

printk(KERN_INFO "binder: %d:%d BC_ENTER_LOOPER\n",

proc->pid, thread->pid);

if (thread->looper & BINDER_LOOPER_STATE_REGISTERED) {

thread->looper |= BINDER_LOOPER_STATE_INVALID;

binder_user_error("binder: %d:%d ERROR:"

" BC_ENTER_LOOPER called after "

"BC_REGISTER_LOOPER\n",

proc->pid, thread->pid);

}

// Set the state of the target thread to BINDER_LOOPER_STATE_ENTERED, indicating that it is a Binder thread able to handle IPC requests

thread->looper |= BINDER_LOOPER_STATE_ENTERED;

break;
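The looper field is a set of bit flags, and the BC_ENTER_LOOPER handling above is plain flag arithmetic. The following sketch models it. The flag names follow the driver, but the numeric values and the helper functions enter_looper and can_take_proc_work are assumptions made for illustration.

```c
#include <assert.h>

/* Flag names follow the driver; the numeric values are illustrative. */
enum {
    LOOPER_REGISTERED = 0x01,
    LOOPER_ENTERED    = 0x02,
    LOOPER_INVALID    = 0x08
};

/* Model of BC_ENTER_LOOPER: returns the new looper state. */
unsigned enter_looper(unsigned looper)
{
    if (looper & LOOPER_REGISTERED)
        looper |= LOOPER_INVALID;   /* entered after BC_REGISTER_LOOPER: user error */
    return looper | LOOPER_ENTERED;
}

/* A thread may wait for process-wide work only after it has
 * registered or entered; binder_thread_read checks the same mask. */
int can_take_proc_work(unsigned looper)
{
    return (looper & (LOOPER_REGISTERED | LOOPER_ENTERED)) != 0;
}
```

Note that the ENTERED bit is set even on the error path, which matches the driver code: the thread is marked invalid but still recorded as having entered the loop.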

static int

binder_thread_read(struct binder_proc *proc, struct binder_thread *thread,

void __user *buffer, int size, signed long *consumed, int non_block)

{

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

int wait_for_proc_work;

if (*consumed == 0) {

if (put_user(BR_NOOP, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

}

retry:

/* wait_for_proc_work is set to 1 only when the thread is not waiting for another thread to finish a transaction

and has no pending work items of its own */

wait_for_proc_work = thread->transaction_stack == NULL && list_empty(&thread->todo);

if (thread->return_error != BR_OK && ptr < end) {

if (thread->return_error2 != BR_OK) {

if (put_user(thread->return_error2, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

if (ptr == end)

goto done;

thread->return_error2 = BR_OK;

}

if (put_user(thread->return_error, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

thread->return_error = BR_OK;

goto done;

}

// The thread is now idle

thread->looper |= BINDER_LOOPER_STATE_WAITING;

if (wait_for_proc_work)

// The owning process has one more idle Binder thread

proc->ready_threads++;

mutex_unlock(&binder_lock);

if (wait_for_proc_work) {

// The current thread checks whether the owning process's todo queue has pending work items

if (!(thread->looper & (BINDER_LOOPER_STATE_REGISTERED |

BINDER_LOOPER_STATE_ENTERED))) {

binder_user_error("binder: %d:%d ERROR: Thread waiting "

"for process work before calling BC_REGISTER_"

"LOOPER or BC_ENTER_LOOPER (state %x)\n",

proc->pid, thread->pid, thread->looper);

wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

}

// Set the current thread's priority to the default priority of the owning process

binder_set_nice(proc->default_priority);

if (non_block) {

if (!binder_has_proc_work(proc, thread))

ret = -EAGAIN;

} else

ret = wait_event_interruptible_exclusive(proc->wait, binder_has_proc_work(proc, thread));

} else {

if (non_block) {

if (!binder_has_thread_work(thread))

ret = -EAGAIN;

} else

ret = wait_event_interruptible(thread->wait, binder_has_thread_work(thread));

}

mutex_lock(&binder_lock);

if (wait_for_proc_work)

// The owning process has one fewer idle Binder thread

proc->ready_threads--;

thread->looper &= ~BINDER_LOOPER_STATE_WAITING;

if (ret)

return ret;

while (1) {

// Process work items in a loop

uint32_t cmd;

struct binder_transaction_data tr;

struct binder_work *w;

struct binder_transaction *t = NULL;

if (!list_empty(&thread->todo))

w = list_first_entry(&thread->todo, struct binder_work, entry);

else if (!list_empty(&proc->todo) && wait_for_proc_work)

w = list_first_entry(&proc->todo, struct binder_work, entry);

else {

if (ptr - buffer == 4 && !(thread->looper & BINDER_LOOPER_STATE_NEED_RETURN)) /* no data added */

goto retry;

break;

}

if (end - ptr < sizeof(tr) + 4)

break;

switch (w->type) {

case BINDER_WORK_TRANSACTION: {

t = container_of(w, struct binder_transaction, work);

} break;

case BINDER_WORK_TRANSACTION_COMPLETE: {

cmd = BR_TRANSACTION_COMPLETE;

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

binder_stat_br(proc, thread, cmd);

if (binder_debug_mask & BINDER_DEBUG_TRANSACTION_COMPLETE)

printk(KERN_INFO "binder: %d:%d BR_TRANSACTION_COMPLETE\n",

proc->pid, thread->pid);

list_del(&w->entry);

kfree(w);

binder_stats.obj_deleted[BINDER_STAT_TRANSACTION_COMPLETE]++;

} break;

case BINDER_WORK_NODE: {

struct binder_node *node = container_of(w, struct binder_node, work);

uint32_t cmd = BR_NOOP;

const char *cmd_name;

int strong = node->internal_strong_refs || node->local_strong_refs;

int weak = !hlist_empty(&node->refs) || node->local_weak_refs || strong;

if (weak && !node->has_weak_ref) {

cmd = BR_INCREFS;

cmd_name = "BR_INCREFS";

node->has_weak_ref = 1;

node->pending_weak_ref = 1;

node->local_weak_refs++;

} else if (strong && !node->has_strong_ref) {

cmd = BR_ACQUIRE;

cmd_name = "BR_ACQUIRE";

node->has_strong_ref = 1;

node->pending_strong_ref = 1;

node->local_strong_refs++;

} else if (!strong && node->has_strong_ref) {

cmd = BR_RELEASE;

cmd_name = "BR_RELEASE";

node->has_strong_ref = 0;

} else if (!weak && node->has_weak_ref) {

cmd = BR_DECREFS;

cmd_name = "BR_DECREFS";

node->has_weak_ref = 0;

}

if (cmd != BR_NOOP) {

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

if (put_user(node->ptr, (void * __user *)ptr))

return -EFAULT;

ptr += sizeof(void *);

if (put_user(node->cookie, (void * __user *)ptr))

return -EFAULT;

ptr += sizeof(void *);

binder_stat_br(proc, thread, cmd);

if (binder_debug_mask & BINDER_DEBUG_USER_REFS)

printk(KERN_INFO "binder: %d:%d %s %d u%p c%p\n",

proc->pid, thread->pid, cmd_name, node->debug_id, node->ptr, node->cookie);

} else {

list_del_init(&w->entry);

if (!weak && !strong) {

if (binder_debug_mask & BINDER_DEBUG_INTERNAL_REFS)

printk(KERN_INFO "binder: %d:%d node %d u%p c%p deleted\n",

proc->pid, thread->pid, node->debug_id, node->ptr, node->cookie);

rb_erase(&node->rb_node, &proc->nodes);

kfree(node);

binder_stats.obj_deleted[BINDER_STAT_NODE]++;

} else {

if (binder_debug_mask & BINDER_DEBUG_INTERNAL_REFS)

printk(KERN_INFO "binder: %d:%d node %d u%p c%p state unchanged\n",

proc->pid, thread->pid, node->debug_id, node->ptr, node->cookie);

}

}

} break;

case BINDER_WORK_DEAD_BINDER:

case BINDER_WORK_DEAD_BINDER_AND_CLEAR:

case BINDER_WORK_CLEAR_DEATH_NOTIFICATION: {

struct binder_ref_death *death = container_of(w, struct binder_ref_death, work);

uint32_t cmd;

if (w->type == BINDER_WORK_CLEAR_DEATH_NOTIFICATION)

cmd = BR_CLEAR_DEATH_NOTIFICATION_DONE;

else

cmd = BR_DEAD_BINDER;

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

if (put_user(death->cookie, (void * __user *)ptr))

return -EFAULT;

ptr += sizeof(void *);

if (binder_debug_mask & BINDER_DEBUG_DEATH_NOTIFICATION)

printk(KERN_INFO "binder: %d:%d %s %p\n",

proc->pid, thread->pid,

cmd == BR_DEAD_BINDER ?

"BR_DEAD_BINDER" :

"BR_CLEAR_DEATH_NOTIFICATION_DONE",

death->cookie);

if (w->type == BINDER_WORK_CLEAR_DEATH_NOTIFICATION) {

list_del(&w->entry);

kfree(death);

binder_stats.obj_deleted[BINDER_STAT_DEATH]++;

} else

list_move(&w->entry, &proc->delivered_death);

if (cmd == BR_DEAD_BINDER)

goto done; /* DEAD_BINDER notifications can cause transactions */

} break;

}

if (!t)

continue;

BUG_ON(t->buffer == NULL);

if (t->buffer->target_node) {

struct binder_node *target_node = t->buffer->target_node;

tr.target.ptr = target_node->ptr;

tr.cookie = target_node->cookie;

t->saved_priority = task_nice(current);

if (t->priority < target_node->min_priority &&

!(t->flags & TF_ONE_WAY))

binder_set_nice(t->priority);

else if (!(t->flags & TF_ONE_WAY) ||

t->saved_priority > target_node->min_priority)

binder_set_nice(target_node->min_priority);

cmd = BR_TRANSACTION;

} else {

tr.target.ptr = NULL;

tr.cookie = NULL;

cmd = BR_REPLY;

}

tr.code = t->code;

tr.flags = t->flags;

tr.sender_euid = t->sender_euid;

if (t->from) {

struct task_struct *sender = t->from->proc->tsk;

tr.sender_pid = task_tgid_nr_ns(sender, current->nsproxy->pid_ns);

} else {

tr.sender_pid = 0;

}

tr.data_size = t->buffer->data_size;

tr.offsets_size = t->buffer->offsets_size;

tr.data.ptr.buffer = (void *)t->buffer->data + proc->user_buffer_offset;

tr.data.ptr.offsets = tr.data.ptr.buffer + ALIGN(t->buffer->data_size, sizeof(void *));

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

if (copy_to_user(ptr, &tr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

binder_stat_br(proc, thread, cmd);

if (binder_debug_mask & BINDER_DEBUG_TRANSACTION)

printk(KERN_INFO "binder: %d:%d %s %d %d:%d, cmd %d"

"size %zd-%zd ptr %p-%p\n",

proc->pid, thread->pid,

(cmd == BR_TRANSACTION) ? "BR_TRANSACTION" : "BR_REPLY",

t->debug_id, t->from ? t->from->proc->pid : 0,

t->from ? t->from->pid : 0, cmd,

t->buffer->data_size, t->buffer->offsets_size,

tr.data.ptr.buffer, tr.data.ptr.offsets);

list_del(&t->work.entry);

t->buffer->allow_user_free = 1;

if (cmd == BR_TRANSACTION && !(t->flags & TF_ONE_WAY)) {

t->to_parent = thread->transaction_stack;

t->to_thread = thread;

thread->transaction_stack = t;

} else {

t->buffer->transaction = NULL;

kfree(t);

binder_stats.obj_deleted[BINDER_STAT_TRANSACTION]++;

}

break;

}

done:

*consumed = ptr - buffer;

if (proc->requested_threads + proc->ready_threads == 0 &&

proc->requested_threads_started < proc->max_threads &&

(thread->looper & (BINDER_LOOPER_STATE_REGISTERED |

BINDER_LOOPER_STATE_ENTERED)) /* the user-space code fails to */

/*spawn a new thread if we leave this out */) {

// Ask the owning process to add a new Binder thread to handle IPC requests

proc->requested_threads++;

if (binder_debug_mask & BINDER_DEBUG_THREADS)

printk(KERN_INFO "binder: %d:%d BR_SPAWN_LOOPER\n",

proc->pid, thread->pid);

if (put_user(BR_SPAWN_LOOPER, (uint32_t __user *)buffer))

return -EFAULT;

}

return 0;

}