How HEVC/H.265 works, technical details & diagrams (reposted from an overseas forum)

To start with: HEVC is actually a bit simpler conceptually than AVC, and lots of things in the spec are done to make life easier for the hardware codec designer (like me).

Picture partitioning

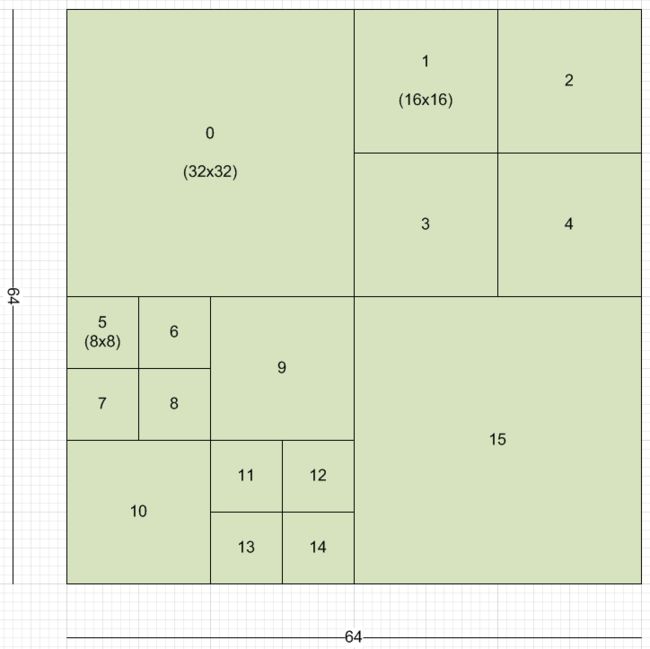

Instead of macroblocks, HEVC pictures are divided into so-called coding tree blocks, or CTBs for short, which appear in the picture in raster order. Depending on the stream parameters, they are either 64x64, 32x32 or 16x16. Each CTB can be split recursively in a quad-tree structure, all the way down to 8x8. So for example a 32x32 CTB can consist of three 16x16 and four 8x8 regions. These regions are called coding units, or CUs. CUs are the basic unit of prediction in HEVC. If you have been paying attention you have already inferred that CUs can be 64x64, 32x32, 16x16 or 8x8. The CUs in a CTB are traversed and coded in Z-order. Example ordering in a 64x64 CTB:
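A minimal Python sketch of the Z-order traversal described above. The split decision is passed in as a callback; `example_split` is a made-up pattern for illustration (a real decoder reads split flags from the bitstream), and the function names are my own.

```python
def z_order_cus(x, y, size, should_split, min_size=8):
    """Yield (x, y, size) for each CU of a CTB in Z-order (coding order)."""
    if size > min_size and should_split(x, y, size):
        half = size // 2
        # Visit quadrants: top-left, top-right, bottom-left, bottom-right.
        for dy in (0, half):
            for dx in (0, half):
                yield from z_order_cus(x + dx, y + dy, half, should_split, min_size)
    else:
        yield (x, y, size)


# Hypothetical split pattern: split the 64x64 CTB once, then split only
# its top-left 32x32 quadrant one more time.
def example_split(x, y, size):
    return size == 64 or (size == 32 and (x, y) == (0, 0))


cus = list(z_order_cus(0, 0, 64, example_split))
for cu in cus:
    print(cu)
```

With this pattern the CTB holds four 16x16 CUs followed by three 32x32 CUs, and the four small CUs are all coded before the decoder moves on to the top-right 32x32 quadrant.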

Like in AVC, a sequence of CTBs is called a slice. A picture can be split up into any number of slices, or the whole picture can be just one slice. In turn, each slice is split up into one or more “slice segments”, each in its own NAL unit. Only the first slice segment of a slice contains the full slice header, and the rest of the segments are referred to as dependent slice segments. A dependent slice segment is not decodable on its own; the decoder must have access to the first slice segment of the slice. This splitting of slices exists to allow for low-delay transmission of pictures without the coding efficiency loss of using many full slices. For example, a camera could send out a slice segment of the first CTB row so that the playback device on the other side of the network can begin drawing the picture before the camera is done coding the second CTB row. This can help achieve low-latency video conferencing.

HEVC does not support any interlaced tools (no more MBAFF hooray!). Interlaced video can still be coded, but it must be coded as a sequence of field pictures. No mixing of field and frame pictures.

Residual coding

For each CU, a residual signal is coded. HEVC supports four transform sizes: 4x4, 8x8, 16x16 and 32x32. Like AVC, the transforms are integer transforms based on the DCT. However, the transform used for intra 4x4 is based on the DST (discrete sine transform). There is no Hadamard transform like in AVC. The basis matrix uses coefficients requiring 7-bit storage, so it is quite a bit more precise than AVC. The higher precision and larger sizes of the transforms are among the main reasons HEVC performs so much better than AVC.

The residual signal of a CU consists of one or more transform units, or TUs. The CU is recursively split with the same quad-tree method as the CTB splitting, with the smallest allowable block being of course 4x4, the smallest TU. For example a 16x16 CU could contain three 8x8 TUs and four 4x4 TUs. For each luma TU there is a corresponding chroma TU of one quarter the size, so a 16x16 luma TU comes with two 8x8 chroma TUs. Since there is no 64x64 transform, a 64x64 CU must be split at least once into four 32x32 TUs. The only exception to this is for skipped CUs, when there is no residual signal at all. Note that there is no 2x2 chroma TU size. Since the smallest possible CU is 8x8, there are always at least four 4x4 luma TUs in an 8x8 region, and that region thus consists of four luma 4x4’s and two chroma 4x4s (as opposed to 8 2x2s). Like the CUs in a CTB, TUs within a CU are also traversed in Z-order.

If a TU has size 4x4, the encoder has the option to signal a so-called “transform skip” flag, where the transform is simply bypassed altogether, and the transmitted coefficients are really just spatial residual samples. This can help code crisp small text for example.

Inverse quantization is essentially the same as in AVC.

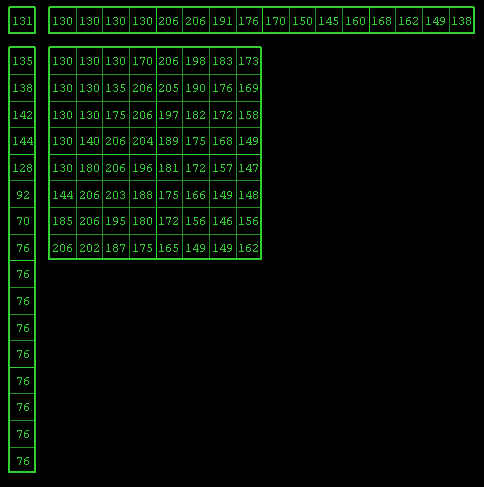

The way a TU’s coefficients are coded in the bitstream is vastly different from AVC. First, the bitstream signals a last xy position, indicating the position of the last coefficient in scan order. Then the decoder, starting at this last position, scans backwards until it reaches position 0,0, known as the DC coefficient. The coefficients are grouped into 4x4 coefficient groups. The coefficients are scanned diagonally (down and left) within each group, and the groups are scanned diagonally as well. For each group, the bitstream signals if it contains any coefficients. If so, it then signals a bit for each of the 16 coefficients in the group to indicate which are non-zero. Then for each of the non-zero coefficients in a group the remainder of the level is signaled. Finally the signs of all the non-zero coefficients in the group are decoded, and the decoder moves on to the next group.

HEVC has an optional tool called sign bit hiding. If enabled and there are enough coefficients in the group, one of the sign bits is not coded, but rather inferred. The missing sign is inferred to be equal to the least significant bit of the sum of all the coefficients’ absolute values. This means that when the encoder was coding the coefficient group in question and the inferred sign was not the correct one, it had to adjust one of the coefficients up or down to fix that. The reason this tool works is that sign bits are coded in bypass mode (not compressed) and thus are expensive to code. By not coding some of the sign bits, the savings more than make up for any distortion caused by adjusting one of the coefficients.
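The parity rule behind sign bit hiding can be sketched in a few lines of Python. Two details here are assumptions taken from my reading of the spec rather than from the text above: the hidden sign belongs to the first non-zero coefficient in forward scan order (the last one the decoder reaches), and "enough coefficients" means the first and last non-zero positions are more than 3 apart. The function names are made up for illustration.

```python
def hidden_sign_applies(levels):
    """levels: the 16 signed levels of one 4x4 group, in forward scan order."""
    nz = [i for i, v in enumerate(levels) if v != 0]
    # Assumed threshold: first and last non-zero positions more than 3 apart.
    return bool(nz) and (nz[-1] - nz[0]) > 3


def infer_hidden_sign(abs_levels):
    """Decoder side: sign bit = LSB of the sum of absolute values (1 = negative)."""
    parity = sum(abs_levels) & 1
    return -1 if parity else 1


def encoder_must_adjust(levels):
    """Encoder side: True if the parity disagrees with the real sign of the
    coefficient whose sign bit is dropped, so some level must change by 1."""
    nz = [i for i, v in enumerate(levels) if v != 0]
    hidden = levels[nz[0]]
    inferred = infer_hidden_sign([abs(v) for v in levels])
    return (hidden < 0) != (inferred < 0)


group = [5, 0, -2, 0, 0, 1] + [0] * 10       # sum of |levels| = 8, even
print(hidden_sign_applies(group))            # True
print(encoder_must_adjust(group))            # False: even parity means positive
```

Flipping the first level to -5 makes `encoder_must_adjust` return True, which is the case where the encoder nudges one coefficient by one to repair the parity.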

Example of the scan process of a 16x16 TU:
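As a rough sketch, the diagonal scan can be generated like this in Python. It only models the diagonal pattern described above; positions are (x, y) pairs, and the function names are my own. The forward order runs up and to the right along each anti-diagonal, so the decoder's backward walk runs down and to the left.

```python
def diag_scan(n):
    """Forward diagonal scan of an n x n block as (x, y) pairs."""
    order = []
    for d in range(2 * n - 1):        # d = x + y selects one anti-diagonal
        for x in range(d + 1):        # walk it from bottom-left to top-right
            y = d - x
            if x < n and y < n:
                order.append((x, y))
    return order


def tu_scan(tu_size):
    """Forward scan of a TU: 4x4 groups in diagonal order, and the same
    diagonal pattern for the 16 coefficients inside each group."""
    groups = diag_scan(tu_size // 4)
    inner = diag_scan(4)
    return [(gx * 4 + x, gy * 4 + y) for gx, gy in groups for x, y in inner]


forward = tu_scan(16)
# Full reverse order; a real decoder starts at the signaled last position
# instead of the bottom-right corner.
decode_order = forward[::-1]
print(forward[:6])
print(decode_order[:3])
```

The decoder order always ends on (0, 0), the DC coefficient.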

Prediction units

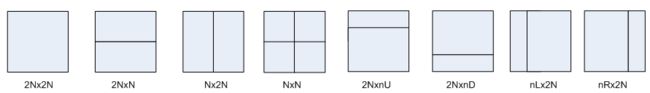

A CU is split using one of eight partition modes. These eight modes have the following mnemonics: 2Nx2N, 2NxN, Nx2N, NxN, 2NxnU, 2NxnD, nLx2N, nRx2N. Here the uppercase N represents half the length of a CU’s side and lowercase n represents one quarter. For a 32x32 CU, N = 16 and n = 8.

Thus a CU consists of one, two or four prediction units, or PUs. Note that this division is not recursive. A CU is either inter- or intra-coded, so if a CU is split into two PUs, both of them are inter- or both of them are intra-coded. Intra-coded CUs may only use the partition modes 2Nx2N or NxN, so intra PUs are always square. A CU may also be skipped, which implies inter coding and a partition mode of 2Nx2N. NxN partition mode is only allowed when the CU is the smallest size allowed (8x8 normally). The idea is that if you want four separate predictions in a CU, you might as well just split (if you can) and create four separate CUs. Also, inter CUs are not allowed to be NxN if the CU is 8x8, meaning no 4x4 motion compensation at all. The smallest inter block sizes are 8x4 and 4x8, and these can never be bidirectional. This was done to minimize worst case memory bandwidth (see section below on motion compensation).
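To make the eight mnemonics concrete, here is a small Python sketch that maps a partition mode and CU size to PU rectangles. The function name and the (x, y, width, height) tuple layout are my own choices for illustration.

```python
def pu_rects(mode, cu_size):
    """Return the PUs of a CU as (x, y, width, height) tuples."""
    s = cu_size          # 2N: full side
    h = cu_size // 2     # N:  half side
    q = cu_size // 4     # n:  quarter side
    table = {
        "2Nx2N": [(0, 0, s, s)],
        "2NxN":  [(0, 0, s, h), (0, h, s, h)],
        "Nx2N":  [(0, 0, h, s), (h, 0, h, s)],
        "NxN":   [(0, 0, h, h), (h, 0, h, h), (0, h, h, h), (h, h, h, h)],
        "2NxnU": [(0, 0, s, q), (0, q, s, s - q)],        # thin part on top
        "2NxnD": [(0, 0, s, s - q), (0, s - q, s, q)],    # thin part at bottom
        "nLx2N": [(0, 0, q, s), (q, 0, s - q, s)],        # thin part on the left
        "nRx2N": [(0, 0, s - q, s), (s - q, 0, q, s)],    # thin part on the right
    }
    return table[mode]


print(pu_rects("2NxnU", 32))   # a 32x8 PU above a 32x24 PU
```

The sketch does not enforce the restrictions described above (intra limited to 2Nx2N and NxN, no NxN inter in an 8x8 CU); those would be checks layered on top.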

Intra prediction

Intra prediction in a CU follows the TU tree exactly. When an intra CU is coded using the NxN partition mode, the TU tree is forcibly split at least once, ensuring the intra and TU tree match. This means that the intra operation is always 32x32, 16x16, 8x8 or 4x4.

In HEVC, there are, wait for it, 35 different intra modes, as opposed to the 9 in AVC. 33 are directional and there is a DC and Planar mode as well. Like AVC, intra prediction requires two 1D arrays that contain the upper and left neighboring samples, as well as an upper-left sample. The arrays are twice as long as the intra block size, extending below and right of the block. Example for an 8x8 block:

Depending on the position of the intra prediction block, any number of these neighboring samples may not be available. For example they could be outside the picture, in another slice, or belong to a CU that will be decoded in the future (causality violation). Any samples that are not available are filled in using a well-defined process after which the neighboring arrays are completely full of valid samples. Depending on the block size and intra mode, the neighboring arrays are filtered (smoothed).

The angular prediction process is similar to AVC, just with more modes and a unified algorithm that can handle all block sizes. In addition to the 33 angular modes, there is a DC mode which simply uses a single value for the prediction, and Planar, which does a smooth gradient of the neighbor samples.

Intra mode coding is done by building a 3-entry list of modes. This list is generated using the left and above modes, along with some special derivations of them to come up with 3 unique modes. If the desired mode is in the list, the index is sent, otherwise the mode is sent explicitly.
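A Python sketch of the 3-entry list, filling in the "special derivations" from my recollection of the spec, so treat the exact formulas as an assumption to check against the standard. It assumes the usual mode numbering (0 = Planar, 1 = DC, 2..34 = angular, 26 = vertical) and treats an unavailable neighbor as DC. The function names are hypothetical.

```python
PLANAR, DC, VERTICAL = 0, 1, 26


def mpm_list(left, above):
    """Build the 3 candidate modes from the left and above neighbor modes.
    Pass None for a neighbor that is unavailable or not intra-coded."""
    left = DC if left is None else left
    above = DC if above is None else above
    if left == above:
        if left < 2:                       # both Planar or both DC
            return [PLANAR, DC, VERTICAL]
        # Angular: the mode itself plus its two nearest angular neighbors,
        # wrapping around within the range 2..34.
        return [left, 2 + ((left + 29) % 32), 2 + ((left - 2 + 1) % 32)]
    if PLANAR not in (left, above):
        third = PLANAR
    elif DC not in (left, above):
        third = DC
    else:
        third = VERTICAL
    return [left, above, third]


def code_mode(mode, mpm):
    """Return ('mpm_idx', i) if the mode is in the list, otherwise
    ('rem', r) where r indexes the 32 remaining modes (fits in 5 bits)."""
    if mode in mpm:
        return ("mpm_idx", mpm.index(mode))
    return ("rem", mode - sum(1 for m in mpm if m < mode))


print(mpm_list(10, 10))            # [10, 9, 11]
print(code_mode(5, [0, 1, 26]))    # ('rem', 3)
```

Since 3 of the 35 modes are always in the list, the explicit path only has to distinguish 32 modes, which is why a fixed 5-bit value is enough.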

Inter prediction – motion vector prediction

Like AVC, HEVC has two reference lists: L0 and L1. They can hold 16 references each, but the maximum total number of unique pictures is 8. This means that to max out the lists you have to add the same picture more than once. The encoder may choose to do this to be able to predict off the same picture with different weights (weighted prediction).

If you thought AVC had complex motion vector prediction, you haven’t seen anything yet. HEVC uses candidate list indexing. There are two MV prediction modes: Merge and AMVP (advanced motion vector prediction, although the spec doesn’t specifically call it that). The encoder decides between these two modes for each PU and signals it in the bitstream with a flag. Only the AMVP process can result in any desired MV, since it is the only one that codes an MV delta. Each mode builds a list of candidate MVs, and then selects one of them using an index coded in the bitstream.

AMVP process: This process is performed once for each MV; so once per L0 or L1 PU, or twice for a bidirectional PU. The bitstream specifies the reference picture to use for each MV. A two-deep candidate list is formed: First, attempt to obtain the left predictor. Prefer A0 over A1, prefer the same list over the opposite list, and prefer a neighbor that points to the same picture over one that doesn’t. If no neighbor points to the same picture, scale the vector to match the picture distance (similar process as AVC temporal direct mode). If all this resulted in a valid candidate, add it to the candidate list. Next, attempt to obtain the upper predictor. Prefer B0 over B1 over B2, and prefer a neighbor MV that points to the same picture over one that doesn’t. Neighbor scaling for the upper predictor is only done if it wasn’t done for the left neighbor, ensuring no more than one scaling operation per PU. Add the candidate to the list if one was found. If the list now still contains fewer than 2 candidates, find the temporal candidate (scaled MV according to picture distance), which is co-located with the right bottom of the PU. If that lies outside the CTB row, or outside the picture, or if the co-located PU is intra, try again with the center position. Add the temporal candidate to the list if one was found. If the candidate list is still not full, just add 0,0 vectors until it is. Finally, with the transmitted index, select the right candidate and add in the transmitted MV delta.
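The tail end of the AMVP process (assembling the two-entry list and applying the delta) can be sketched as below. This is a simplification: it takes the left, upper and temporal predictors as already derived inputs and skips the neighbor search and scaling entirely. Dropping an upper candidate that duplicates the left one is my understanding of the spec and is not stated in the text above. The function name is made up.

```python
def amvp_select(left, above, temporal, index, mvd):
    """left / above / temporal: (x, y) predictors, or None if not found.
    index: candidate index from the bitstream (0 or 1).
    mvd:   transmitted motion vector delta (dx, dy)."""
    cands = []
    for mv in (left, above):
        # Assumption: an identical spatial candidate is not added twice.
        if mv is not None and mv not in cands:
            cands.append(mv)
    # The temporal candidate is only consulted if the list is not yet full.
    if len(cands) < 2 and temporal is not None:
        cands.append(temporal)
    # Pad with zero vectors until the list holds two entries.
    while len(cands) < 2:
        cands.append((0, 0))
    px, py = cands[index]
    return (px + mvd[0], py + mvd[1])


# Left and upper predictors are identical, so the temporal one fills slot 1.
print(amvp_select((4, -2), (4, -2), (8, 0), index=1, mvd=(1, 1)))   # (9, 1)
```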

Phew, now merge mode: The merge process results in a candidate list of up to 5 entries deep, configured in the slice header. Each entry might end up being L0, L1 or bidirectional. First add at most 4 spatial candidates in this order: A1, B1, B0, A0, B2. A candidate cannot be added to the list if it is the same as one of the earlier candidates. Then, if the list still has room, add the temporal candidate, which is found by the same process as in AMVP. Then, if the list still has room, add bidirectional candidates formed by making combinations of the L0 and L1 vectors of the other candidates already in the list. Then finally if the list still isn’t full, add 0,0 MVs with increasing reference indices. The final motion is obtained by picking one of the up-to-5 candidates as signaled in the bitstream.

Note that HEVC sub-samples the temporal motion vectors on a 16x16 grid. That means that a decoder only needs to make room for two motion vectors (L0 and L1) per 16x16 region in the picture when it allocates the temporal motion vector buffer. When the decoder calculates the co-located position, it zeroes out the lower 4 bits of the x/y position, snapping the location to a multiple of 16. Regarding which picture is considered the co-located picture, that is signaled in the slice header. This picture must be the same for all slices in a picture, which is a great feature for hardware decoders since it enables the motion vectors to be queued up ahead of time without having to worry about slice boundaries.
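The snapping and the resulting buffer size are easy to show in Python. The function names are mine, and the buffer count only covers the motion vectors themselves (a real decoder also stores reference information per entry).

```python
def colocated_grid_pos(x, y):
    """Zero the lower 4 bits of each coordinate: snap to the 16x16 grid."""
    return (x >> 4) << 4, (y >> 4) << 4


def temporal_mv_entries(width, height):
    """Number of MVs to store per picture: two (L0 and L1) per 16x16 region."""
    cols = (width + 15) // 16
    rows = (height + 15) // 16
    return cols * rows * 2


print(colocated_grid_pos(37, 70))        # (32, 64)
print(temporal_mv_entries(1920, 1080))   # 120 * 68 * 2 = 16320
```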

Inter prediction – motion compensation

Like AVC, HEVC specifies motion vectors in 1/4-pel, but uses an 8-tap filter for luma (all positions), and a 4-tap 1/8-pel filter for chroma. This is up from 6-tap and bilinear (2-tap) in AVC, respectively.

Because of the 8-tap filter, any given NxM sized block will need extra pixels on all sides (3 left/above, 4 right and below) to provide the filter with the data it needs. With small blocks like an 8x4, you really need to read (8+7)x(4+7) = 15x11 pixels. You can see that the more small blocks you have, the more you have to read from memory. That means more access to DRAM, which costs more time and power (battery life!), so this is why HEVC limits the smallest block to be uni-directional and 4x4 is not possible.
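A quick Python calculation shows how fast the overhead grows as blocks shrink. It counts luma samples only and ignores caching and DRAM burst alignment, so the ratios are a rough illustration rather than a real bandwidth model. The function names are made up.

```python
def ref_samples(w, h, taps=8):
    """Luma reference samples needed to interpolate one w x h block."""
    return (w + taps - 1) * (h + taps - 1)


def fetch_ratio(w, h, bidir=False):
    """Reference samples read per predicted sample."""
    n = ref_samples(w, h) * (2 if bidir else 1)
    return n / (w * h)


print(ref_samples(8, 4))               # 15 * 11 = 165
print(fetch_ratio(64, 64))             # about 1.23 reads per sample
print(fetch_ratio(8, 4))               # about 5.16 reads per sample
print(fetch_ratio(4, 4, bidir=True))   # about 15.1, the case HEVC rules out
```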

HEVC supports weighted prediction for both uni- and bi-directional PUs. However, the weights are always explicitly transmitted in the slice header; there is no implicit weighted prediction like in AVC.

Deblocking

Deblocking in HEVC is performed on the 8x8 grid only, unlike AVC which deblocks every 4x4 grid edge. All vertical edges in the picture are deblocked first, followed by all horizontal edges. The actual filter is very similar to AVC, but only boundary strengths 2, 1 and 0 are supported. Because of the 8-pixel separation between edges, edges do not depend on each other, enabling a highly parallelized implementation. In theory you could perform the vertical edge filtering with one thread per 8-pixel column in the picture. Chroma is only deblocked when one of the PUs on either side of a particular edge is intra-coded.

SAO

After deblocking is performed, a second filter optionally processes the picture. This filter is called Sample Adaptive Offset, or SAO. This relatively simple process is done on a per-CTB basis, and operates once on each pixel (so 64*64 + 32*32 + 32*32 = 6144 times in a 64x64 CTB). For each CTB, the bitstream codes a filter type and four offset values, which range from -7..7 (in 8-bit video).

There are two types of filters: Band and Edge.

Band Filter: Divide the sample range into 32 bands. A sample’s band is simply the upper 5 bits of its value. So samples with value 0..7 are in band 0, 8..15 in band 1 and so on. Then a band index is transmitted, along with the four offsets, that identifies four adjacent bands. So if the band index is 4, it means bands 4, 5, 6 and 7. If a pixel falls into one of these bands, add the corresponding offset to it.
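A minimal per-sample sketch of the band filter in Python. The final clip to the valid sample range and the `bit_depth` parameter are my additions, and the sketch assumes the four bands do not run past band 31. The function name is hypothetical.

```python
def sao_band(sample, band_index, offsets, bit_depth=8):
    """Apply the SAO band filter to one sample.
    band_index: first of the four adjacent bands.
    offsets:    the four transmitted offsets, one per band."""
    band = sample >> (bit_depth - 5)      # upper 5 bits pick one of 32 bands
    k = band - band_index
    if 0 <= k < 4:
        sample += offsets[k]
    # Clip to the legal sample range (an assumption not spelled out above).
    return max(0, min((1 << bit_depth) - 1, sample))


offsets = [1, 2, 3, 4]
print(sao_band(35, 4, offsets))   # band 4, first offset: 36
print(sao_band(63, 4, offsets))   # band 7, fourth offset: 67
print(sao_band(64, 4, offsets))   # band 8, outside the four bands: 64
```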

Edge Filter: Along with the four offsets, an edge mode is transmitted: 0-degree, 90-degree, 45-degree or 135-degree. Depending on the mode, two adjacent neighbor samples are picked from the 3x3 sample neighborhood around the current sample. 90-degree means use the above and lower samples, 45-degree means upper-right and lower-left and so on. Each of these two neighbors can be less than, greater than or equal to the current sample. Depending on the outcome of these two comparisons, the sample is either unchanged or one of the four offsets is added to it.
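The edge filter can be sketched the same way. The mapping from comparison outcome to offset index (local minimum first, local maximum last) follows my understanding of the spec and should be read as an assumption; picture and slice boundary handling is left out, and the names are my own.

```python
# Neighbor pairs as (dx, dy) steps from the current sample.
NEIGHBORS = {
    0:   ((-1, 0), (1, 0)),     # left and right
    90:  ((0, -1), (0, 1)),     # above and below
    135: ((-1, -1), (1, 1)),    # upper-left and lower-right
    45:  ((1, -1), (-1, 1)),    # upper-right and lower-left
}


def sign(v):
    return (v > 0) - (v < 0)


def sao_edge(img, x, y, mode, offsets, bit_depth=8):
    """Apply the SAO edge filter to img[y][x] (must not be on the border)."""
    (ax, ay), (bx, by) = NEIGHBORS[mode]
    c = img[y][x]
    a = img[y + ay][x + ax]
    b = img[y + by][x + bx]
    s = sign(c - a) + sign(c - b)       # ranges from -2 to 2
    # -2: local minimum, -1: concave corner, 1: convex corner, 2: local maximum.
    category = {-2: 0, -1: 1, 1: 2, 2: 3}.get(s)
    if category is None:                # flat or monotonic: leave unchanged
        return c
    return max(0, min((1 << bit_depth) - 1, c + offsets[category]))


dip = [[10, 10, 10],
       [10,  5, 10],
       [10, 10, 10]]
print(sao_edge(dip, 1, 1, 0, [2, 1, -1, -2]))   # local minimum is raised to 7
```

In practice the encoder picks offsets that pull local minima up and local maxima down, which smooths out ringing around edges.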

The offsets and filter modes are picked by the encoder in an attempt to make the CTB more closely match the source image. Often you will see in regions that have no motion or simple linear motion (like panning shots), the SAO filter will get turned off for inter pictures, since the “fixing” that the SAO filter did in the intra-picture carries forward through the inter pictures.

Entropy coding

HEVC performs entropy coding using CABAC only; there is no choice between CABAC and CAVLC like in AVC. Yay! The CABAC algorithm is nearly identical to AVC, but with a few minor improvements. There are about half as many context state variables as in AVC, and the initialization process is much simpler. In the design of the syntax (the sequence of values read from the bitstream), great care has been taken to group bypass-coded bins together as much as possible. CABAC decoding is inherently a very serial operation, making fast hardware implementations of CABAC difficult. However it is possible to decode more than one bypass bin at a time, and the bypass-bin grouping ensures hardware decoders can take advantage of this property.

Parallel tools

HEVC has two tools that are specifically designed to enable a multi-threaded decoder to decode a single picture with multiple threads: Tiles and Wavefront.

Tiles: The picture is divided into a rectangular grid of CTBs, up to 20 columns and 22 rows. Each tile contains an integer number of independently decodable CTBs. This means motion vector prediction and intra prediction are not performed across tile boundaries; it is as if each tile were a separate picture. The only exception to this independence is that the two in-loop filters can filter across the tile boundaries. The slice header contains byte-offsets for each tile, so that multiple decoder threads can seek to the start of their respective tiles right away. A single-threaded decoder would simply process the tiles one by one in raster order. So how does this work with slices and slice segments? To avoid difficult situations, the HEVC spec says that if a tile contains multiple slices, the slices must not contain any CTBs outside that tile. Conversely, if a slice contains multiple tiles, the slice must start with the CTB at the beginning of the first tile and end with the CTB at the end of the last tile. These same rules apply to the dependent slice segments. The tile structure (size and number) can vary from picture to picture, allowing a smart multi-threaded encoder to load balance.

Wavefront: Each CTB row can be decoded by its own thread. After a particular thread has completed the decoding of the second CTB in its row, the entropy decoder’s state is saved and transferred to the row below. Then the thread of the row below can start. This way there are no cross-thread prediction dependency issues. It does however require careful implementation to ensure a lower thread does not advance too far. Inter-thread communication is required.
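The dependency pattern can be modeled with a toy schedule in Python. It assumes every CTB takes one time unit and that there is one thread per CTB row, which is an idealization; the function name is made up. Each CTB waits for its left neighbor and for the CTB above and one to the right, which is the "second CTB in the row above" rule.

```python
def wavefront_finish_times(cols, rows):
    """Earliest finish time of each CTB, one time unit per CTB."""
    t = [[0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            deps = []
            if x > 0:
                deps.append(t[y][x - 1])                      # left neighbor
            if y > 0:
                # Above-right CTB; in the last column fall back to the CTB above.
                deps.append(t[y - 1][min(x + 1, cols - 1)])
            t[y][x] = max(deps, default=0) + 1
    return t


times = wavefront_finish_times(cols=5, rows=3)
for row in times:
    print(row)
```

In this idealized model each row trails the one above by two CTBs, so a 5x3 picture finishes after 9 time units instead of the 15 a single thread would need.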

Profiles/Levels:

There are 3 profiles in the spec: Main, Main 10 and Still Picture. For almost all content, Main is the only one that counts. It adds a few notable limitations: Bit depth is 8, tiles must be at least 256x64, tiles and wavefront may not be enabled at the same time. Many levels are specified, from 1 to 6.2. A 6.2 stream could be 8192x4320@120fps. See the spec for details.