[Hadoop Study] (2) Installing and Configuring Hadoop in Pseudo-Distributed Mode

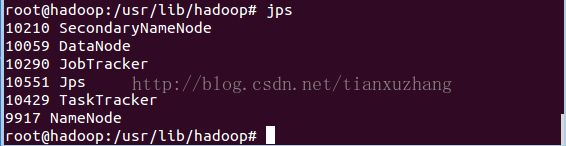

First, a screenshot of the successful result.

The installation and configuration steps follow.

-----------------------------------

Continued from the previous post:

[Hadoop Study] (1) Preparation Before Installing Hadoop

9 Install Hadoop

<1> Install: tar -zvxf hadoop-1.1.2.tar.gz

mv hadoop-1.1.2 /usr/lib/hadoop

<2> Environment variables

Run gedit /etc/profile. After the additions and changes, it contains:

export JAVA_HOME=/usr/lib/jvm

export HADOOP_HOME=/usr/lib/hadoop/

export PATH=.:$JAVA_HOME/bin:$HADOOP_HOME/bin:$PATH

source /etc/profile
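After sourcing the profile, it may help to confirm that both variables are set and point at real directories. The following is a minimal sketch, not part of Hadoop: check_hadoop_env is a hypothetical helper name, and it only checks that each directory exists, not that it holds a working JDK or Hadoop install.

```shell
#!/bin/sh
# check_hadoop_env (hypothetical helper): report whether JAVA_HOME and
# HADOOP_HOME are set and whether the directories they name exist.
# Returns 0 if both look fine, 1 otherwise.
check_hadoop_env() {
    bad=0
    for name in JAVA_HOME HADOOP_HOME; do
        # Indirect lookup of the variable whose name is in $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "$name is not set"
            bad=1
        elif [ ! -d "$value" ]; then
            echo "$name=$value does not exist"
            bad=1
        else
            echo "$name=$value ok"
        fi
    done
    return $bad
}
```

Calling check_hadoop_env in a new terminal shows whether the /etc/profile changes were picked up.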

10 Configure Hadoop in pseudo-distributed mode

<1>/usr/lib/hadoop/conf# gedit hadoop-env.sh

Change line 9 to:

export JAVA_HOME=/usr/lib/jvm

<2>/usr/lib/hadoop/conf# gedit core-site.xml

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://hadoop:9000</value>

<description>hadoop</description>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/lib/hadoop/temp</value>

</property>

</configuration>

<3>/usr/lib/hadoop/conf# gedit hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>

<4>/usr/lib/hadoop/conf# gedit mapred-site.xml

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>hadoop:9001</value>

</property>

</configuration>
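Since a typo in any of these three files is the usual cause of a failed start, a quick way to read back what was actually saved can be handy. This is a rough sketch under stated assumptions: get_prop is a hypothetical helper, it is not a real XML parser, and it assumes each <name> and <value> element sits on a single line with the name appearing before its value, as in the files above.

```shell
#!/bin/sh
# get_prop FILE NAME (hypothetical helper): print the <value> that follows
# the given <name> in a Hadoop *-site.xml file.
# Assumes one <name>/<value> element per line, name before value.
get_prop() {
    awk -v want="$2" '
        /<name>/  { n = $0; sub(/.*<name>/, "", n);  sub(/<\/name>.*/, "", n) }
        /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                    if (n == want) { print v; exit } }
    ' "$1"
}

# Usage (paths as used in this post):
#   get_prop /usr/lib/hadoop/conf/core-site.xml   fs.default.name
#   get_prop /usr/lib/hadoop/conf/mapred-site.xml mapred.job.tracker
```

If a call prints nothing, the property name is probably misspelled or missing in that file.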

<5> Format HDFS

Run: hadoop namenode -format

<6> Start Hadoop

/usr/lib# start-all.sh prints the following messages:

Warning: $HADOOP_HOME is deprecated.

starting namenode, logging to /usr/lib/hadoop/libexec/../logs/hadoop-root-namenode-hadoop.out

localhost: starting datanode, logging to /usr/lib/hadoop/libexec/../logs/hadoop-root-datanode-hadoop.out

localhost: starting secondarynamenode, logging to /usr/lib/hadoop/libexec/../logs/hadoop-root-secondarynamenode-hadoop.out

starting jobtracker, logging to /usr/lib/hadoop/libexec/../logs/hadoop-root-jobtracker-hadoop.out

localhost: starting tasktracker, logging to /usr/lib/hadoop/libexec/../logs/hadoop-root-tasktracker-hadoop.out

<7> URLs for testing

http://localhost:50030/ - Hadoop administration interface (JobTracker)

http://localhost:50060/ - Hadoop TaskTracker status

http://localhost:50070/ - Hadoop DFS status (NameNode)
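The three pages can also be probed from a terminal instead of a browser. The sketch below only builds the URL list for a given host; hadoop_ui_urls is a hypothetical helper, and the commented loop assumes curl is installed and the daemons are already running.

```shell
#!/bin/sh
# hadoop_ui_urls [HOST] (hypothetical helper): print the three web UI
# addresses listed above, one per line, followed by a short label.
hadoop_ui_urls() {
    host=${1:-localhost}
    echo "http://$host:50030/ JobTracker"
    echo "http://$host:50060/ TaskTracker"
    echo "http://$host:50070/ NameNode"
}

# Usage (needs curl and a running cluster); prints an HTTP status per page:
#   hadoop_ui_urls localhost | while read -r url label; do
#       curl -s -o /dev/null -w "$label: %{http_code}\n" "$url"
#   done
```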

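Check the processes

jps should list 5 processes besides Jps itself: NameNode, DataNode, SecondaryNameNode, JobTracker and TaskTracker.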
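Counting lines by eye is easy to get wrong, so the check can be scripted. This is a small sketch: missing_daemons is a hypothetical helper that reads jps output on standard input and prints whichever of the five daemons named in the start-all.sh output is absent. It assumes the usual jps layout of a PID followed by the class name.

```shell
#!/bin/sh
# missing_daemons (hypothetical helper): read `jps` output on stdin and
# print the name of every expected Hadoop 1.x daemon that is not listed.
# Prints nothing when all five are present.
missing_daemons() {
    jps_out=$(cat)
    for d in NameNode DataNode SecondaryNameNode JobTracker TaskTracker; do
        # Exact match on the second column, so "NameNode" does not
        # accidentally match "SecondaryNameNode".
        echo "$jps_out" | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' \
            || echo "$d"
    done
}

# Usage:
#   jps | missing_daemons
```

The log file of any daemon it reports is the first place to look; the start-all.sh output shows where each log is written.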

<8> Testing from a browser on Windows

Edit the file C:\Windows\System32\drivers\etc\hosts and add:

192.168.80.100 hadoop
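The same name-to-address mapping matters on the Linux side too, because core-site.xml and mapred-site.xml refer to the host as hadoop. As a rough sketch, a hosts-format file can be checked as follows; hosts_lookup is a hypothetical helper, and it assumes the Linux machine keeps a matching entry in /etc/hosts.

```shell
#!/bin/sh
# hosts_lookup FILE NAME (hypothetical helper): print the address that a
# hosts-format file maps NAME to, or nothing if there is no entry.
hosts_lookup() {
    awk -v name="$2" '
        { sub(/\r$/, ""); sub(/#.*/, "") }   # drop CR line endings and comments
        { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
    ' "$1"
}

# Usage:
#   hosts_lookup /etc/hosts hadoop
```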

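Note: if jps shows fewer than 5 processes, the installation or configuration has failed.

Check the configuration files for typos.

Once they are correct -> run stop-all.sh -> delete the temp folder (/usr/lib/hadoop/temp, the hadoop.tmp.dir set in core-site.xml) -> format HDFS again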
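The recovery sequence above can be wrapped in one function so the steps always run in the same order. This is a sketch only: reset_hdfs and the DRY_RUN switch are hypothetical names, the directory must be passed in explicitly, and running it for real erases everything stored in HDFS.

```shell
#!/bin/sh
# reset_hdfs TMP_DIR (hypothetical helper): stop the daemons, delete the
# hadoop.tmp.dir directory, and format the NameNode again.
# With DRY_RUN=1 the commands are printed instead of executed.
reset_hdfs() {
    tmp_dir=${1:?usage: reset_hdfs <hadoop.tmp.dir>}
    run() {
        if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi
    }
    # Each step runs only if the previous one succeeded.
    run stop-all.sh &&
    run rm -rf "$tmp_dir" &&
    run hadoop namenode -format
}

# Usage:
#   DRY_RUN=1 reset_hdfs /usr/lib/hadoop/temp   # preview the commands
#   reset_hdfs /usr/lib/hadoop/temp             # actually run them
```

After it finishes, run start-all.sh and check jps again.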