Configuring a Distributed Nutch 1.8 + Hadoop 1.2 + Solr 4.3 Cluster

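Nutch is an open-source search engine implemented in Java. It provides all the tools we need to run our own search engine, including full-text search and a web crawler. That is how Baidu Baike describes it, but the description stopped fitting Nutch after version 1.2: since then Nutch has focused only on crawling data, and full-text search has been handed over entirely to Lucene, Solr, and ES (Elasticsearch). Because these projects are close relatives, it is very easy to build a full-text index from the data Nutch has crawled.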


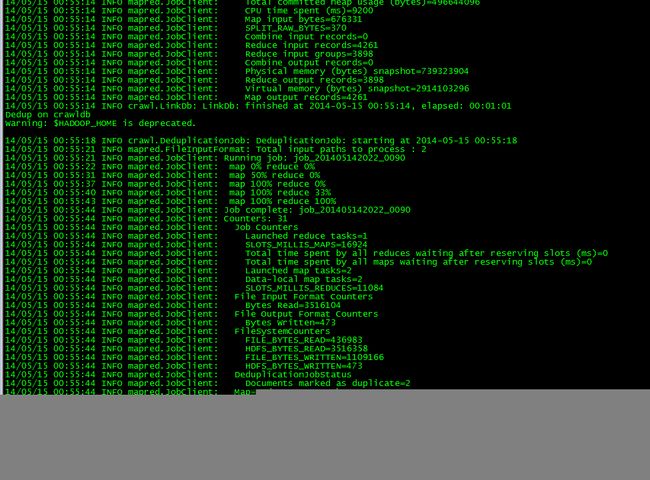

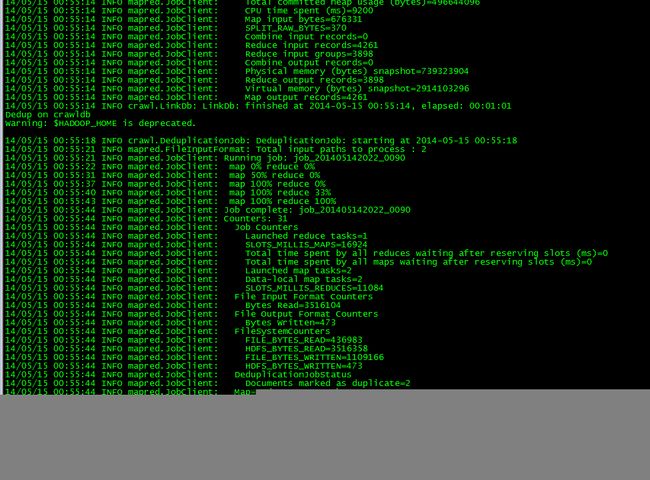


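Now to the main topic. At the time of writing, the latest Nutch release is 2.2.1. The 2.x line supports multiple storage backends through Gora, while the latest 1.x release, 1.8, supports only HDFS storage. I am using Nutch 1.8 here. Why the 1.x series? It comes down to my Hadoop environment: Nutch 2.x uses Hadoop 2.x, although if you don't mind the trouble you can adjust the jar configuration so that Nutch 2.x runs on a Hadoop 1.x cluster. Nutch 1.x runs on Hadoop 1.x with no such effort. The setup for this Nutch + Hadoop + Solr cluster test is as follows: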

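| No. | Component | Role |
| --- | --- | --- |
| 1 | Nutch 1.8 | Crawls the data; supports distributed operation |
| 2 | Hadoop 1.2.0 | Runs the crawl in parallel with MapReduce and stores data in HDFS; Nutch submits its jobs to the Hadoop cluster; supports distributed operation |
| 3 | Solr 4.3.1 | Handles retrieval: searching and querying the crawled data; supports distributed operation for large data volumes |
| 4 | IK 4.3 | Tokenizes page content and titles to support full-text search |
| 5 | CentOS 6.5 | The Linux system on which Nutch, Hadoop, and the other applications run |
| 6 | Tomcat 7.0 | Application server; the container that Solr runs in |
| 7 | JDK 1.7 | Provides the Java runtime environment |
| 8 | Ant 1.9 | Builds Nutch and other source code |
| 9 | One humble software engineer | The protagonist |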

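Let's get started.

1. First make sure your Ant environment is set up correctly. It is best to do everything on Linux; problems are more likely on Windows. After downloading the Nutch source, go to the Nutch root directory, run ant, and wait for the build to finish. The build produces a runtime directory that contains the Nutch launch scripts in two variants: local mode and deploy (distributed cluster) mode.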
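For step 1, the build plus a quick sanity check might look like the sketch below. It assumes the source was unpacked to /root/apache-nutch-1.8 (the path that appears in step 6) and that a plain ant invocation runs the default build target. check_runtime is a helper name made up for this example, not part of Nutch.

```shell
# Sketch: build Nutch from source and confirm both runtime variants exist.
# NUTCH_SRC is an assumed location; point it at your own source tree.
NUTCH_SRC="${NUTCH_SRC:-/root/apache-nutch-1.8}"

check_runtime() {
    # Succeeds only if runtime/local/bin and runtime/deploy/bin both exist.
    root="$1"
    for mode in local deploy; do
        if [ ! -d "$root/runtime/$mode/bin" ]; then
            echo "missing: $root/runtime/$mode/bin"
            return 1
        fi
    done
    echo "runtime ok under $root/runtime"
}

# Only attempt the build when ant and the source tree are actually present.
if command -v ant >/dev/null 2>&1 && [ -d "$NUTCH_SRC" ]; then
    (cd "$NUTCH_SRC" && ant) && check_runtime "$NUTCH_SRC"
fi
```

If check_runtime reports a missing directory, the build most likely did not finish, and the ant output is the first place to look.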

2. Add the following to nutch-site.xml:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>http.agent.name</name>

<value>mynutch</value>

</property>

<property>

<name>http.robots.agents</name>

<value>mynutch,*</value>

<description>The agent strings we'll look for in robots.txt files,

comma-separated, in decreasing order of precedence. You should

put the value of http.agent.name as the first agent name, and keep the

default * at the end of the list. E.g.: BlurflDev,Blurfl,*

</description>

</property>

<property>

<name>plugin.folders</name>

<!-- Local mode: use this value instead of the one below -->

<!-- <value>./src/plugin</value> -->

<!-- Cluster (deploy) mode: use the value below -->

<value>plugins</value>

<!-- <value>D:\nutch编译好的1.8\Nutch1.8\src\plugin</value> -->

<description>Directories where nutch plugins are located. Each

element may be a relative or absolute path. If absolute, it is used

as is. If relative, it is searched for on the classpath.</description>

</property>

</configuration>

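3. On the Hadoop cluster, create a urls directory and a mydir directory. The former holds the seed URL file; the latter holds the crawled data.

hadoop fs -mkdir urls # create the directory

hadoop fs -put <local path> <HDFS path> # upload the seed file to HDFS

hadoop fs -ls / # list the contents of a path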
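To make step 3 concrete, here is a minimal sketch that writes a seed list locally and uploads it. The file name seed.txt, the example URL, and the helper make_seed_file are all assumptions for illustration; the original setup does not say what the seed file was called or what it contained.

```shell
# Sketch: write one seed URL per line, then upload the file to HDFS.
make_seed_file() {
    # $1 = output file, remaining arguments = seed URLs
    out="$1"; shift
    : > "$out"
    for url in "$@"; do
        printf '%s\n' "$url" >> "$out"
    done
}

make_seed_file seed.txt "http://nutch.apache.org/"

# Skip the HDFS part on machines where hadoop is not installed.
if command -v hadoop >/dev/null 2>&1; then
    hadoop fs -mkdir urls          # relative paths resolve under the HDFS home directory
    hadoop fs -put seed.txt urls/  # local source first, HDFS destination second
    hadoop fs -ls urls
fi
```

The mydir directory does not need any content up front; it only has to be a location the crawl is allowed to write to.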

4. Set up the Hadoop cluster and its HADOOP_HOME environment variable. This matters: when Nutch runs, it uses the Hadoop environment variables to submit its jobs.

export HADOOP_HOME=/root/hadoop1.2

export PATH=$HADOOP_HOME/bin:$PATH

ANT_HOME=/root/apache-ant-1.9.2

export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE HISTCONTROL

export JAVA_HOME=/root/jdk1.7

export PATH=$JAVA_HOME/bin:$ANT_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

Once this is configured, run which hadoop to check that the setup is correct:

[root@master bin]# which hadoop

/root/hadoop1.2/bin/hadoop

[root@master bin]#
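The same check can be scripted so that it fails loudly before a crawl is started. This is only a sketch: check_hadoop_env is a made-up helper, and it assumes the hadoop launcher lives at $HADOOP_HOME/bin/hadoop, as in the which output above.

```shell
# Sketch: verify that HADOOP_HOME is set and that PATH resolves to its hadoop.
check_hadoop_env() {
    if [ -z "${HADOOP_HOME:-}" ]; then
        echo "HADOOP_HOME is not set"
        return 1
    fi
    if [ ! -x "$HADOOP_HOME/bin/hadoop" ]; then
        echo "no executable at $HADOOP_HOME/bin/hadoop"
        return 1
    fi
    # The hadoop found on PATH should be the one under HADOOP_HOME.
    found=$(command -v hadoop 2>/dev/null)
    if [ "$found" != "$HADOOP_HOME/bin/hadoop" ]; then
        echo "PATH resolves hadoop to '${found:-nothing}', expected $HADOOP_HOME/bin/hadoop"
        return 1
    fi
    echo "hadoop environment ok: $found"
}

check_hadoop_env || echo "fix the exports before running the crawl script"
```

Comparing the PATH result against HADOOP_HOME catches the case where an older Hadoop install earlier on PATH would silently receive the jobs.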

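5. Configure the Solr service. Copy the schema.xml file from Nutch's conf directory into Solr, overwriting Solr's original schema.xml, and add the IK analyzer. The resulting file is as follows: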

<?xml version="1.0" encoding="UTF-8" ?>

<!--

Licensed to the Apache Software Foundation (ASF) under one or

more contributor license agreements. See the NOTICE file

distributed with this work for additional information regarding

copyright ownership. The ASF licenses this file to You under the

Apache License, Version 2.0 (the "License"); you may not use

this file except in compliance with the License. You may obtain

a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0 Unless required by

applicable law or agreed to in writing, software distributed

under the License is distributed on an "AS IS" BASIS, WITHOUT

WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions

and limitations under the License.

-->

<!--

Description: This document contains Solr 3.1 schema definition to

be used with Solr integration currently build into Nutch. See

https://issues.apache.org/jira/browse/NUTCH-442

https://issues.apache.org/jira/browse/NUTCH-699

https://issues.apache.org/jira/browse/NUTCH-994

https://issues.apache.org/jira/browse/NUTCH-997

https://issues.apache.org/jira/browse/NUTCH-1058

https://issues.apache.org/jira/browse/NUTCH-1232

and

http://svn.apache.org/viewvc/lucene/dev/branches/branch_3x/solr/

example/solr/conf/schema.xml?view=markup

for more info.

-->

<schema name="nutch" version="1.5">

<types>

<fieldType name="string" class="solr.StrField" sortMissingLast="true"

omitNorms="true"/>

<fieldType name="long" class="solr.TrieLongField" precisionStep="0"

omitNorms="true" positionIncrementGap="0"/>

<fieldType name="float" class="solr.TrieFloatField" precisionStep="0"

omitNorms="true" positionIncrementGap="0"/>

<fieldType name="date" class="solr.TrieDateField" precisionStep="0"

omitNorms="true" positionIncrementGap="0"/>

<!-- Configure the IK analyzer -->

<fieldType name="ik" class="solr.TextField" positionIncrementGap="100">

<analyzer type="index">

<tokenizer class="org.wltea.analyzer.lucene.IKTokenizerFactory" useSmart="false" />

<!-- in this example, we will only use synonyms at query time

<filter class="solr.SynonymFilterFactory" synonyms="index_synonyms.txt" ignoreCase="true" expand="false"/>

-->

<!-- <filter class="solr.LowerCaseFilterFactory"/> -->

</analyzer>

<analyzer type="query">

<tokenizer class="org.wltea.analyzer.lucene.IKTokenizerFactory" useSmart="false" />

<!--<filter class="org.wltea.analyzer.lucene.IKSynonymFilterFactory" autoupdate="false" synonyms="synonyms.txt" flushtime="20" /> -->

<!--<filter class="solr.SynonymFilterFactory" synonyms="synonyms.txt" ignoreCase="true" expand="true"/> -->

<!--<filter class="solr.SynonymFilterFactory" synonyms="synonyms.txt" ignoreCase="true" expand="true"/> -->

<!-- <filter class="solr.LowerCaseFilterFactory"/> -->

</analyzer>

</fieldType>

<fieldType name="text" class="solr.TextField" positionIncrementGap="100">

<analyzer type="index">

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="stopwords.txt" enablePositionIncrements="true" />

<!-- in this example, we will only use synonyms at query time

<filter class="solr.SynonymFilterFactory" synonyms="index_synonyms.txt" ignoreCase="true" expand="false"/>

-->

<filter class="solr.LowerCaseFilterFactory"/>

</analyzer>

<analyzer type="query">

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="stopwords.txt" enablePositionIncrements="true" />

<filter class="solr.SynonymFilterFactory" synonyms="synonyms.txt" ignoreCase="true" expand="true"/>

<filter class="solr.LowerCaseFilterFactory"/>

</analyzer>

</fieldType>

<fieldType name="url" class="solr.TextField"

positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.WordDelimiterFilterFactory"

generateWordParts="1" generateNumberParts="1"/>

</analyzer>

</fieldType>

</types>

<fields>

<field name="_version_" type="long" indexed="true" stored="true"/>

<field name="id" type="string" stored="true" indexed="true"/>

<!-- core fields -->

<field name="segment" type="string" stored="true" indexed="false"/>

<field name="text" type="string" stored="true" indexed="false"/>

<field name="digest" type="string" stored="true" indexed="false"/>

<field name="boost" type="float" stored="true" indexed="false"/>

<!-- fields for index-basic plugin -->

<field name="host" type="string" stored="false" indexed="true"/>

<field name="url" type="url" stored="true" indexed="true"

required="true"/>

<field name="content" type="ik" stored="true" indexed="true"/>

<field name="title" type="ik" stored="true" indexed="true"/>

<field name="cache" type="string" stored="true" indexed="false"/>

<field name="tstamp" type="date" stored="true" indexed="false"/>

<!-- fields for index-anchor plugin -->

<field name="anchor" type="string" stored="true" indexed="true"

multiValued="true"/>

<!-- fields for index-more plugin -->

<field name="type" type="string" stored="true" indexed="true"

multiValued="true"/>

<field name="contentLength" type="long" stored="true"

indexed="false"/>

<field name="lastModified" type="date" stored="true"

indexed="false"/>

<field name="date" type="date" stored="true" indexed="true"/>

<!-- fields for languageidentifier plugin -->

<field name="lang" type="string" stored="true" indexed="true"/>

<!-- fields for subcollection plugin -->

<field name="subcollection" type="string" stored="true"

indexed="true" multiValued="true"/>

<!-- fields for feed plugin (tag is also used by microformats-reltag)-->

<field name="author" type="string" stored="true" indexed="true"/>

<field name="tag" type="string" stored="true" indexed="true" multiValued="true"/>

<field name="feed" type="string" stored="true" indexed="true"/>

<field name="publishedDate" type="date" stored="true"

indexed="true"/>

<field name="updatedDate" type="date" stored="true"

indexed="true"/>

<!-- fields for creativecommons plugin -->

<field name="cc" type="string" stored="true" indexed="true"

multiValued="true"/>

<!-- fields for tld plugin -->

<field name="tld" type="string" stored="false" indexed="false"/>

</fields>

<uniqueKey>id</uniqueKey>

<defaultSearchField>content</defaultSearchField>

<solrQueryParser defaultOperator="OR"/>

</schema>

6. Once everything is configured, go to Nutch's /root/apache-nutch-1.8/runtime/deploy/bin directory

and run the following command:

./crawl urls mydir http://192.168.211.36:9001/solr/ 2

This starts the crawl job on the cluster.
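The four positional arguments are easier to read when named. The sketch below reflects my understanding of the Nutch 1.8 crawl script (seed directory, crawl directory, Solr URL, number of rounds); treat that reading, the variable names, and the helper build_crawl_cmd as assumptions and confirm them against the usage message the script prints when run without arguments.

```shell
# Sketch: name the arguments, validate the round count, and print the command.
SEED_DIR=urls                                # HDFS directory holding the seed list
CRAWL_DIR=mydir                              # HDFS directory for the crawl output
SOLR_URL=http://192.168.211.36:9001/solr/    # Solr instance that receives the index
ROUNDS=2                                     # number of crawl rounds

build_crawl_cmd() {
    # Rejects an empty or non-numeric fourth argument.
    case "$4" in
        ''|*[!0-9]*) echo "rounds must be a whole number: '$4'" >&2; return 1 ;;
    esac
    echo "./crawl $1 $2 $3 $4"
}

build_crawl_cmd "$SEED_DIR" "$CRAWL_DIR" "$SOLR_URL" "$ROUNDS"
```

The function only prints the command line, so it can be reviewed first and then run from the deploy/bin directory.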

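A screenshot of the MapReduce jobs during the crawl:

Once the crawl has finished, we can go to Solr and look at the crawled content. Screenshot:

That completes a simple crawl-and-search system. It was quite easy, and everything it uses is an open-source project from the Lucene family.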
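As a quick check of the finished system without the admin UI, the index can also be queried over HTTP. The sketch below only builds the request URL. It assumes Solr's standard select handler is reachable directly under the Solr URL from step 6, and solr_query_url is a made-up helper. The title and url fields come from the schema in step 5.

```shell
# Sketch: build a Solr select URL that returns title and url as JSON.
SOLR_URL="${SOLR_URL:-http://192.168.211.36:9001/solr/}"

solr_query_url() {
    # $1 = query in Solr syntax (must already be URL-safe), $2 = maximum rows
    echo "${SOLR_URL%/}/select?q=$1&fl=title,url&rows=$2&wt=json"
}

solr_query_url '*:*' 5
# To send the request: curl -s "$(solr_query_url '*:*' 5)"
```

A query of `*:*` matches every document, so a non-empty result here means the crawl reached the index.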

Summary: a few fairly typical errors came up during configuration. They are recorded below:

java.lang.Exception: java.lang.RuntimeException: Error in configuring object
    at org.apache.hadoop.mapred.LocalJobRunner$Job.run(LocalJobRunner.java:354)
Caused by: java.lang.RuntimeException: Error in configuring object
    at org.apache.hadoop.util.ReflectionUtils.setJobConf(ReflectionUtils.java:93)
    at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:64)
    at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:117)
    at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:426)
    at org.apache.hadoop.mapred.MapTask.run(MapTask.java:366)
    at org.apache.hadoop.mapred.LocalJobRunner$Job$MapTaskRunnable.run(LocalJobRunner.java:223)
    at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:441)
    at java.util.concurrent.FutureTask$Sync.innerRun(FutureTask.java:303)
    at java.util.concurrent.FutureTask.run(FutureTask.java:138)
    at java.util.concurrent.ThreadPoolExecutor$Worker.runTask(ThreadPoolExecutor.java:886)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:908)
    at java.lang.Thread.run(Thread.java:662)
Caused by: java.lang.reflect.InvocationTargetException
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)
    at java.lang.reflect.Method.invoke(Method.java:597)
    at org.apache.hadoop.util.ReflectionUtils.setJobConf(ReflectionUtils.java:88)
    ... 11 more
Caused by: java.lang.RuntimeException: Error in configuring object
    at org.apache.hadoop.util.ReflectionUtils.setJobConf(ReflectionUtils.java:93)
    at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:64)
    at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:117)
    at org.apache.hadoop.mapred.MapRunner.configure(MapRunner.java:34)
    ... 16 more
Caused by: java.lang.reflect.InvocationTargetException
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)
    at java.lang.reflect.Method.invoke(Method.java:597)
    at org.apache.hadoop.util.ReflectionUtils.setJobConf(ReflectionUtils.java:88)
    ... 19 more
Caused by: java.lang.RuntimeException: x point org.apache.nutch.net.URLNormalizer not found.
    at org.apache.nutch.net.URLNormalizers.<init>(URLNormalizers.java:123)
    at org.apache.nutch.crawl.Injector$InjectMapper.configure(Injector.java:74)
    ... 24 more
2013-09-05 20:40:49,329 INFO mapred.JobClient (JobClient.java:monitorAndPrintJob(1393)) - map 0% reduce 0%
2013-09-05 20:40:49,332 INFO mapred.JobClient (JobClient.java:monitorAndPrintJob(1448)) - Job complete: job_local1315110785_0001
2013-09-05 20:40:49,332 INFO mapred.JobClient (Counters.java:log(585)) - Counters: 0
2013-09-05 20:40:49,333 INFO mapred.JobClient (JobClient.java:runJob(1356)) - Job Failed: NA
Exception in thread "main" java.io.IOException: Job failed!
    at org.apache.hadoop.mapred.JobClient.runJob(JobClient.java:1357)
    at org.apache.nutch.crawl.Injector.inject(Injector.java:281)
    at org.apache.nutch.crawl.Crawl.run(Crawl.java:132)
    at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:65)
    at org.apache.nutch.crawl.Crawl.main(Crawl.java:55)

==========================================================================

The root cause is the last "Caused by": Nutch cannot find its URLNormalizer plugins. The trace comes from a local-mode run (note LocalJobRunner). Fix: add the following to nutch-site.xml. This is the local-mode value; in cluster mode use plugins, as in step 2.

<property>
<name>plugin.folders</name>
<value>./src/plugin</value>
<description>Directories where nutch plugins are located. Each element may be a relative or absolute path. If absolute, it is used as is. If relative, it is searched for on the classpath.</description>
</property>
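Before rerunning a local-mode crawl, it can help to confirm that the directory named in plugin.folders really contains a URL normalizer plugin. The sketch below assumes the normalizer plugins sit in folders named urlnormalizer-* (for example urlnormalizer-basic) and that the relative path is checked from the Nutch source root. check_plugin_dir is a made-up helper, and the check does not apply to deploy mode, where the plugins are found on the classpath.

```shell
# Sketch: check that a plugin directory contains at least one urlnormalizer-* plugin.
check_plugin_dir() {
    # $1 = filesystem path that plugin.folders points at
    dir="$1"
    if [ ! -d "$dir" ]; then
        echo "not a directory: $dir"
        return 1
    fi
    for p in "$dir"/urlnormalizer-*; do
        if [ -d "$p" ]; then
            echo "found $p"
            return 0
        fi
    done
    echo "no urlnormalizer-* plugin under $dir"
    return 1
}

# Local-mode value from the fix above, relative to the current directory.
check_plugin_dir ./src/plugin || echo "plugin.folders would not resolve from $(pwd)"
```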

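When running the crawl shell command, I found that invoking it as bin/crawl urls mydir http://192.168.211.36:9001/solr/ 2 sometimes fails because some directories on HDFS cannot be accessed correctly. I therefore recommend running it from inside the bin directory instead:

./crawl urls mydir http://192.168.211.36:9001/solr/ 2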

Reposted from: http://itindex.net/detail/49582-nutch1.8-hadoop1.2-solr4.3