Setting Up the Hadoop Environment in Eclipse on Ubuntu

Environment: Ubuntu 12.04 (32-bit), eclipse-jee-luna-SR1-linux-gtk.tar.gz (32-bit), hadoop-2.2.0

1. Installing Hadoop

The previous post covers this in detail (http://blog.csdn.net/nana_93/article/details/41912257), so it is not repeated here.

2. Installing Eclipse

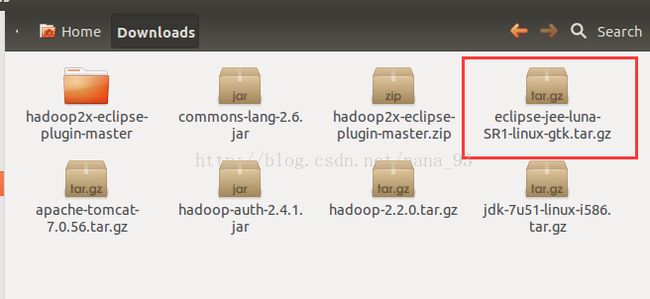

Download the software:

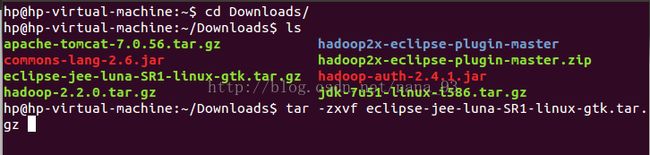

Extract the archive; the resulting eclipse directory will be placed under /opt/.

tar -zxvf eclipse-jee-luna-SR1-linux-gtk.tar.gz

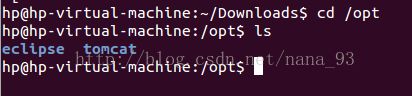

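Move the extracted eclipse directory into /opt (for example with sudo mv eclipse /opt/).

Give the current user ownership of the /opt/ directory (hp is the user name on this machine):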

sudo chown hp:hp /opt/

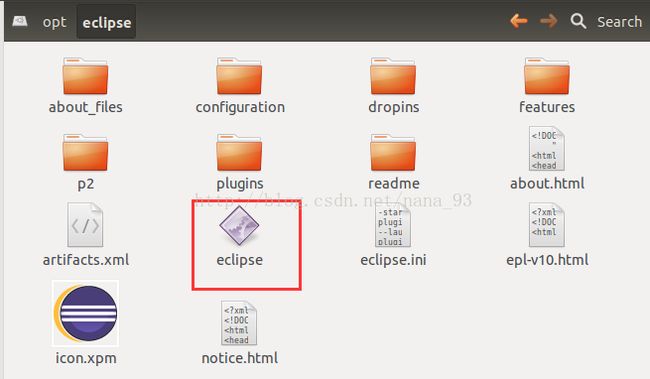

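Go to the eclipse directory and double-click the eclipse executable to start it.

3. Configuring the Hadoop environment in Eclipse

Either download the Eclipse plugin source and build it yourself, or download a prebuilt plugin directly: http://download.csdn.net/detail/zythy/6735167

Configuring the Hadoop plugin

Copy the downloaded hadoop-eclipse-plugin-2.2.0.jar into the plugins directory of Eclipse and restart Eclipse; the plugin should then be active.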

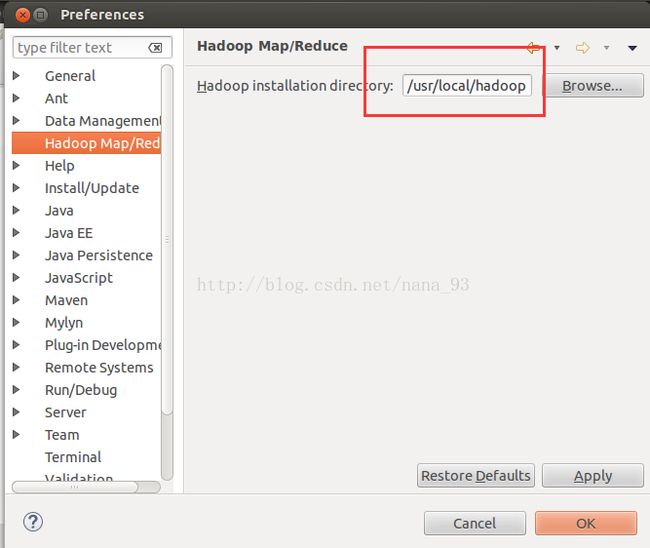

Set the Hadoop installation path under Window -> Preferences -> Hadoop Map/Reduce.

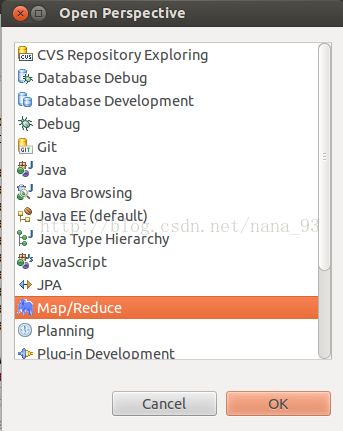

Open the Map/Reduce perspective from the Open Perspective menu, as follows:

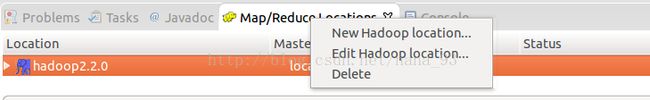

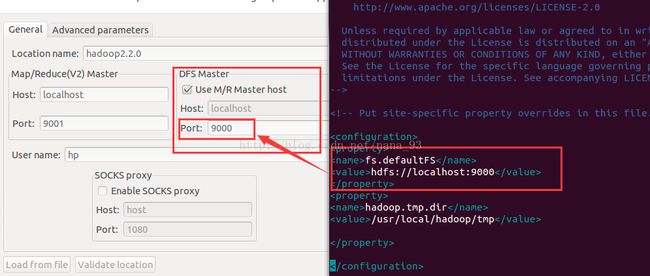

Select the elephant icon, right-click, and choose New Hadoop Location to edit the Hadoop connection settings:

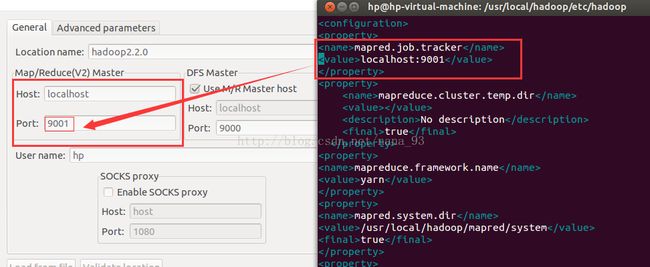

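Fill in the correct Map/Reduce and HDFS information (the exact values depend on your own configuration).

Note: the MR Master and DFS Master settings must agree with your configuration files, such as mapred-site.xml and core-site.xml.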

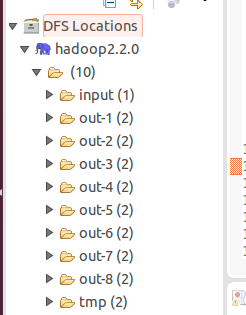
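As a rough illustration of the consistency rule above, the following plain-Java sketch compares the host and port entered in the DFS Master fields with a file system URI. The value hdfs://localhost:9000 is an assumption taken from the job arguments used later in this post; the class name DfsMasterCheck and its method are hypothetical helpers, not part of Hadoop or the plugin. Substitute whatever your core-site.xml sets for fs.defaultFS.

```java
import java.net.URI;

public class DfsMasterCheck {
    // Returns true when the host and port typed into the DFS Master fields
    // match the given HDFS URI (for example the fs.defaultFS value).
    static boolean matches(String defaultFs, String dialogHost, int dialogPort) {
        URI uri = URI.create(defaultFs);
        return "hdfs".equals(uri.getScheme())
                && dialogHost.equals(uri.getHost())
                && dialogPort == uri.getPort();
    }

    public static void main(String[] args) {
        // Assumed value of fs.defaultFS in core-site.xml; replace with your own.
        String defaultFs = "hdfs://localhost:9000";
        System.out.println(matches(defaultFs, "localhost", 9000)); // true
        System.out.println(matches(defaultFs, "localhost", 8020)); // false
    }
}
```

If the check fails for your values, the HDFS tree in Project Explorer will most likely show a connection error rather than the directory listing.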

Open Project Explorer to browse the HDFS file system:

4. Implementing the WordCount program

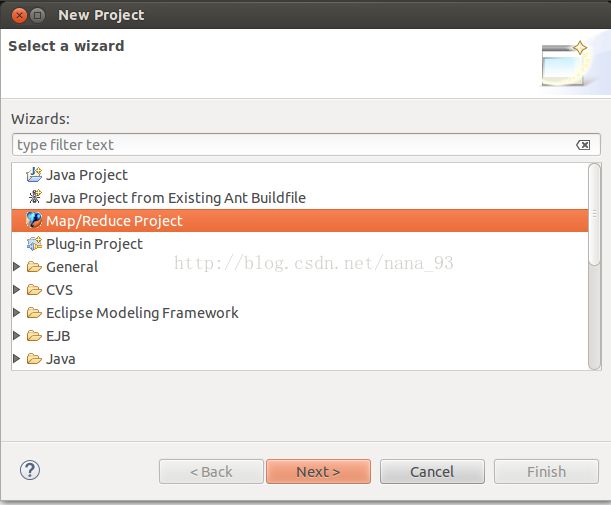

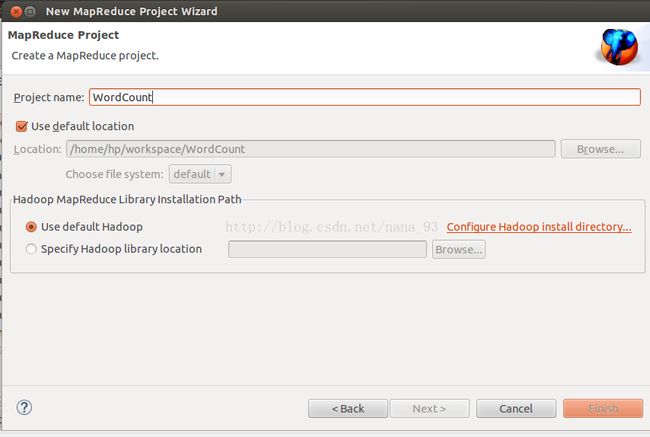

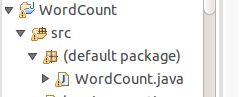

1. Create a new Map/Reduce project

Right-click the project and create a new class named WordCount.

2. Write the code:

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

public class WordCount {

    // Mapper: splits each input line on whitespace and emits (word, 1).
    public static class TokenizerMapper extends Mapper<Object, Text, Text, IntWritable> {

        private final static IntWritable one = new IntWritable(1);
        private Text word = new Text();

        @Override
        public void map(Object key, Text value, Context context)
                throws IOException, InterruptedException {
            StringTokenizer itr = new StringTokenizer(value.toString());
            while (itr.hasMoreTokens()) {
                word.set(itr.nextToken());
                context.write(word, one);
            }
        }
    }

    // Reducer (also used as combiner): sums the counts for each word.
    public static class IntSumReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

        private IntWritable result = new IntWritable();

        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            int sum = 0;
            for (IntWritable val : values) {
                sum += val.get();
            }
            result.set(sum);
            context.write(key, result);
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
        if (otherArgs.length != 2) {
            System.err.println("Usage: wordcount <in> <out>");
            System.exit(2);
        }

        // Job.getInstance replaces the deprecated Job constructor in Hadoop 2.x.
        Job job = Job.getInstance(conf, "word count");
        job.setJarByClass(WordCount.class);
        job.setMapperClass(TokenizerMapper.class);
        job.setCombinerClass(IntSumReducer.class);
        job.setReducerClass(IntSumReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        // First argument: input path; second argument: output directory.
        FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
        FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}

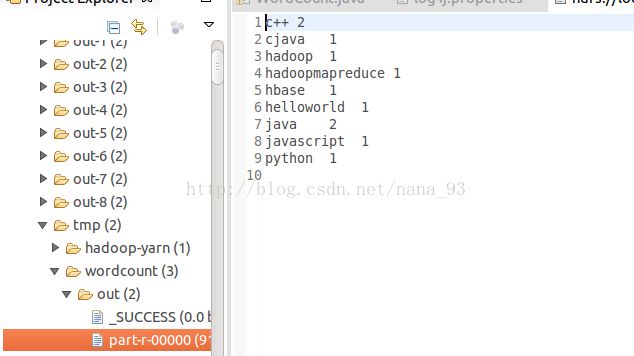

3. Outside the project, create a text file named word.txt and put some arbitrary text in it, for example:

java c++ python cjava c++ javascript helloworld hadoopmapreduce java hadoop hbase

Then create a wordcount folder in HDFS and upload word.txt into it, as shown.

hadoop fs -mkdir -p /tmp/wordcount

hadoop fs -copyFromLocal /home/hp/workspace/word.txt /tmp/wordcount/word.txt

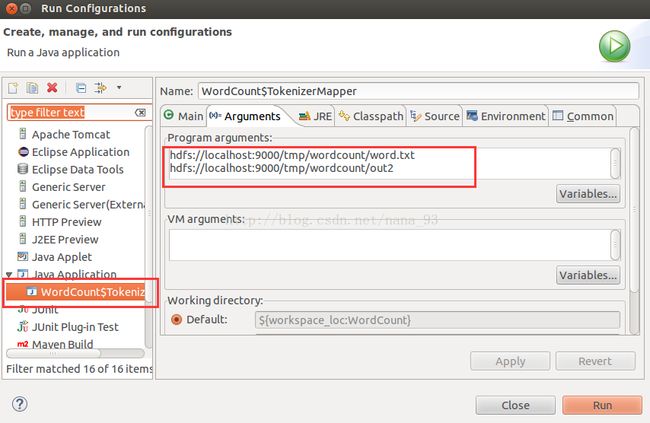

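4. Run the project

Select WordCount, right-click, and choose Run As -> Run Configurations. Create a new configuration under Java Application.

In that dialog, enter the following on the Arguments tab: hdfs://localhost:9000/tmp/wordcount/word.txt hdfs://localhost:9000/tmp/wordcount/out

Click Run.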

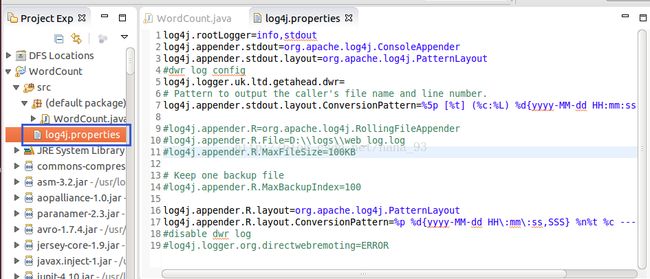

A warning similar to the following may appear:

log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).

Fix

Create a new file under src named log4j.properties with the content below. The file name must be exactly this.

log4j.rootLogger=info,stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

#dwr log config

log4j.logger.uk.ltd.getahead.dwr=

# Pattern to output the caller's file name and line number.

log4j.appender.stdout.layout.ConversionPattern=%5p [%t] (%c:%L) %d{yyyy-MM-dd HH:mm:ss,SSS} ---- %m%n

#log4j.appender.R=org.apache.log4j.RollingFileAppender

#log4j.appender.R.File=D:\\logs\\web_log.log

#log4j.appender.R.MaxFileSize=100KB

# Keep one backup file

#log4j.appender.R.MaxBackupIndex=100

log4j.appender.R.layout=org.apache.log4j.PatternLayout

log4j.appender.R.layout.ConversionPattern=%p %d{yyyy-MM-dd HH\:mm\:ss,SSS} %n%t %c ---- %m%n

#disable dwr log

#log4j.logger.org.directwebremoting=ERROR
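The warning means log4j found no appender to write to. The file above fixes that by naming the appender stdout on log4j.rootLogger and defining it as a ConsoleAppender. As a small sanity check, the sketch below uses only java.util.Properties (no log4j classes) to verify that every appender named on the root logger is actually defined. The class Log4jConfigCheck is a hypothetical helper written for this post, and it checks only this one relationship, not the full log4j syntax.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

public class Log4jConfigCheck {
    // Returns true when every appender named on log4j.rootLogger has a
    // matching log4j.appender.<name> entry in the given properties text.
    static boolean rootAppendersDefined(String propertiesText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        String root = props.getProperty("log4j.rootLogger");
        if (root == null) {
            return false;
        }
        // The first element is the level (e.g. "info"); the rest are appender names.
        String[] parts = root.split(",");
        if (parts.length < 2) {
            return false;
        }
        for (int i = 1; i < parts.length; i++) {
            if (props.getProperty("log4j.appender." + parts[i].trim()) == null) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        String config = "log4j.rootLogger=info,stdout\n"
                + "log4j.appender.stdout=org.apache.log4j.ConsoleAppender\n"
                + "log4j.appender.stdout.layout=org.apache.log4j.PatternLayout\n";
        System.out.println(rootAppendersDefined(config)); // true
    }
}
```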

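Run the job again and the warning no longer appears. If the earlier run already created the output directory, delete it first (for example with hadoop fs -rm -r /tmp/wordcount/out), because the job refuses to write into an existing output directory.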

Run result
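To cross-check the job output for the sample word.txt shown earlier, the same counting logic can be reproduced locally without Hadoop. The sketch below is a plain-Java stand-in, not the MapReduce job itself: the class LocalWordCount is a hypothetical helper, it tokenizes on whitespace the way TokenizerMapper does, and it sums per word the way IntSumReducer does. It needs Java 8 or later for Map.merge.

```java
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

public class LocalWordCount {
    // Counts whitespace-separated tokens; TreeMap keeps the words sorted.
    static Map<String, Integer> count(String text) {
        Map<String, Integer> counts = new TreeMap<>();
        StringTokenizer itr = new StringTokenizer(text);
        while (itr.hasMoreTokens()) {
            counts.merge(itr.nextToken(), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Same text as the sample word.txt used in this post.
        String sample = "java c++ python cjava c++ javascript helloworld "
                + "hadoopmapreduce java hadoop hbase";
        for (Map.Entry<String, Integer> e : count(sample).entrySet()) {
            // Word and count separated by a tab, one pair per line.
            System.out.println(e.getKey() + "\t" + e.getValue());
        }
    }
}
```

For this sample, c++ and java are each counted twice and the other seven words once, which is what the part file under /tmp/wordcount/out should also contain.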