- [Elasticsearch] most_fields, best_fields, and cross_fields: differences and usage

G皮T

elasticsearch, big data, search engine, multi_match, best_fields, most_fields, cross_fields

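Differences and usage of most_fields, best_fields, and cross_fields. Contents: 1. Overview of the core differences; 2. Detailed analysis and usage: 2.1 best_fields (best-field matching), 2.2 most_fields (multi-field matching), 2.3 cross_fields (cross-field matching); 3. Comparison examples: 3.1 Searching with best_fields, 3.2 Searching with most_fields, 3.3 Searching with cross_fields; 4. Selection advice. 1. Overview of the core differences: This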
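The three types are all values of the `type` parameter of a `multi_match` query: best_fields ranks a document by its single best-matching field (a `tie_breaker` lets the other matching fields contribute a fraction), most_fields combines the scores of every matching field, and cross_fields looks for each term across all the listed fields as if they formed one combined field. A minimal Python sketch that only builds the request bodies as plain dicts, assuming hypothetical field names (`title`, `content`, `first_name`, `last_name`); it does not call a cluster:

```python
import json

# Restricted to the three types discussed in this article (Elasticsearch has others).
SUPPORTED_TYPES = {"best_fields", "most_fields", "cross_fields"}

def multi_match_query(text, fields, match_type="best_fields", **options):
    """Build an Elasticsearch multi_match request body as a plain dict."""
    if match_type not in SUPPORTED_TYPES:
        raise ValueError(f"unsupported type: {match_type}")
    body = {"query": text, "fields": list(fields), "type": match_type}
    body.update(options)  # e.g. tie_breaker, operator
    return {"query": {"multi_match": body}}

fields = ["title^2", "content"]  # hypothetical fields; ^2 boosts title
best = multi_match_query("quick brown fox", fields, "best_fields", tie_breaker=0.3)
most = multi_match_query("quick brown fox", fields, "most_fields")
# cross_fields suits data split across fields, such as a person's name.
cross = multi_match_query("Will Smith", ["first_name", "last_name"],
                          "cross_fields", operator="and")
print(json.dumps(best, indent=2))
```

A rough rule of thumb consistent with the outline above: best_fields when the words should be found together in one field, most_fields when the same text is indexed several ways, cross_fields when one entity is spread over several fields.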

- [Digital back-end] What are NDR rules?

LogicYarn

digital back-end, hardware architecture

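NDR (non-default rules) are special physical rules that differ from the default rules (DR) of the process library. Common ones include: spacing rules, which increase the distance between a signal wire and its neighbors to reduce crosstalk; width rules, which widen a signal wire to lower its resistance and inductance and improve its drive capability; metal-layer assignment, which routes the net on low-resistance or low-crosstalk layers (such as upper metal layers); and endpoint rules, such as reinforced endpoint contacts. Why do we need NDR? This brings us to the problem of metal EM (electromigration). Because electrons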

- [Robotics: depth estimation] Principles of stereo (binocular) depth estimation

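Contents: I. Basic principles; II. Main processing pipeline: 2.1 Matching cost: (1) Common matching cost functions: 1. Sum of Absolute Differences (SAD), 2. Sum of Squared Differences (SSD), 3. Normalized Cross-Correlation (NCC), 4. Census transform; (2) Comparison of matching cost functions; 2.2 Cost volume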
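The four matching costs listed in the contents can be sketched in plain Python, assuming each window is a flattened list of grayscale values (for example a 3×3 patch as 9 numbers) taken from rectified images. The function names, including `best_disparity`, are hypothetical; the last function shows how a cost drives a simple winner-take-all disparity search along one scanline, not the full cost-volume pipeline:

```python
import math

def sad(a, b):
    """Sum of Absolute Differences between two equal-length pixel windows."""
    return sum(abs(x - y) for x, y in zip(a, b))

def ssd(a, b):
    """Sum of Squared Differences."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def ncc(a, b):
    """Zero-mean normalized cross-correlation in [-1, 1]; 1.0 = identical up to gain/offset."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return num / den if den else 0.0

def census(window):
    """Census transform: one bit per neighbor, 1 if the neighbor is darker than the center."""
    mid = len(window) // 2
    return [1 if p < window[mid] else 0 for i, p in enumerate(window) if i != mid]

def hamming(bits_a, bits_b):
    """Census matching cost: the number of differing bits."""
    return sum(x != y for x, y in zip(bits_a, bits_b))

def best_disparity(left_row, right_row, x, half, max_d):
    """Winner-take-all disparity for pixel x on one rectified scanline, using SAD."""
    win_l = left_row[x - half:x + half + 1]
    costs = {}
    for d in range(max_d + 1):
        if x - d - half < 0:
            break  # candidate window would leave the image
        costs[d] = sad(win_l, right_row[x - d - half:x - d + half + 1])
    return min(costs, key=costs.get)

left = [10, 20, 30, 40, 50, 60, 70, 80, 90]
right = [v + 2 for v in left]  # same patch, brighter by 2
print(sad(left, right), ssd(left, right), ncc(left, right))
```

SAD and SSD penalize the uniform brightness offset here, while NCC and Census ignore it, which is the usual argument for the latter two under illumination changes.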

- Unpacking list arguments with * in Python

以科技求富强

Python learning, programming problems column, python, programming languages

Error encountered: `W = tensor[np.ix_(p[i] for i in range(d))]` Traceback (most recent call last): File "E:\Projects\PythonProjects\TuckerCross\tuckercross\DEIM_FS_Tucker.py", line 206, in `S_F_iter, U_F_iter = deim_fs_iterative(tensor, r`
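`np.ix_` takes each index sequence as its own positional argument (its signature is `np.ix_(*args)`), so passing a bare generator hands it a single argument. A dependency-free sketch of the difference, using a hypothetical stand-in `ix_like` instead of NumPy; by the same reasoning, the failing line presumably needs `tensor[np.ix_(*(p[i] for i in range(d)))]`:

```python
def ix_like(*seqs):
    """Hypothetical stand-in for a variadic function such as np.ix_(*args):
    it simply reports how many positional arguments it received."""
    return len(seqs)

p = [[0, 1], [2, 3], [4]]  # hypothetical index lists, one per tensor mode
d = len(p)

print(ix_like(p[i] for i in range(d)))     # 1: the whole generator is ONE argument
print(ix_like(*(p[i] for i in range(d))))  # 3: * unpacks it into d separate arguments
print(ix_like(*p[:d]))                     # 3: same thing, slicing and unpacking the list
```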

- CVPR2025

摸鱼的肚子

paper reading, deep learning

CVPR paper list (related to my thesis), with abstracts. SphereUFormer: A U-Shaped Transformer for Spherical 360 Perception: estimates depth from 360° RGB images. CroCoDL: Cross-device Collaborative Dataset for Localization (none). SemAlign3D: Semantic Correspondence between RGB-Im

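- CVPR 2025 | Roundup of low-level vision papers (super-resolution, image restoration, deraining, dehazing, deblurring, denoising, etc.) with paper links/open-source code [continuously updated]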

Kobaayyy

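image processing and computer vision, papers, low-level vision, computer vision, algorithms, CVPR 2025, image super-resolution, image restoration, image enhancement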

CVPR 2025 | Roundup of low-level vision papers. 1. Super-Resolution: Adaptive Dropout: Unleashing Dropout across Layers for Generalizable Image Super-Resolution; ADD: A General Attribution-Driven Data Augmentation Framework for

- VINS_MONO visual navigation algorithm [Part 3]: Introduction to ROS basics

凳子花❀

SLAM, stereo vision, SLAM, VINS_Mono

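Contents: Other articles; Notes; ROS launch files: basic concepts (definition, purpose), file structure (root tag, common tags), examples (basic example, nested example), usage (launching *.launch files, passing arguments), summary; ROS topics: basic concepts of topics, how topics work, common commands, examples, summary; Common ROS commands: rosrun, roslaunch, rosbag (main functions), roscore, rosnode, rostopic, rosservice, rosparam, rqt; ros::spin(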

- Vue: comparing two arrays of objects and displaying the differing values on the page

Aotman_

front end, es6, javascript, vue.js, front-end frameworks

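Requirement: the page must show which items were deleted and which were newly added, as shown in the figure below. Implementation: in Vue, Lodash's differenceBy function makes it easy to compare two arrays and find their differences. Installing and importing Lodash: first, install the Lodash library in your project with npm: `npm i --save lodash`. Then import Lodash in the component that uses differenceBy: `import _ from 'lodash`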
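Lodash's `_.differenceBy(array, values, iteratee)` keeps the items of `array` whose iteratee result does not appear among the results for `values`. Running it in both directions (old vs. new, then new vs. old, e.g. `_.differenceBy(oldList, newList, 'id')`) yields the deleted and added items. The same logic as a small Python sketch, assuming each record has a hashable `id` key; `difference_by` and the sample data are hypothetical:

```python
def difference_by(array, values, key):
    """Items of `array` whose key(item) is not among key(v) for v in `values`
    (the same semantics as Lodash's differenceBy with an iteratee)."""
    seen = {key(v) for v in values}
    return [item for item in array if key(item) not in seen]

old = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}, {"id": 3, "name": "c"}]
new = [{"id": 2, "name": "b"}, {"id": 3, "name": "c"}, {"id": 4, "name": "d"}]

by_id = lambda record: record["id"]
deleted = difference_by(old, new, by_id)  # present before, missing now
added = difference_by(new, old, by_id)    # present now, missing before
print(deleted, added)
```

Building a set of keys first keeps each direction linear in the list sizes rather than comparing every pair.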

- Oracle SQL top 100 rows: getting the first 100 rows of a table, and a specified number of rows after sorting, in Oracle, MySQL, and SQL Server

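1. Oracle, first 100 rows of a table: `select * from t_stu_copy where rownum <= 100;` (correct). Alternatively: `select * from t_stu_copy where stuid between 101 and 200;` (this returns 100 rows only if the stuid values in that range are contiguous). 2. MySQL, first 100 rows of a table: `select * from t_stu_copy limit 0, 100;` (starting from the first row, take 100 rows: rows 1 through 100). Supplement: sort in descending order first and then get the 10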
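SQLite accepts the same MySQL-style `LIMIT offset, count` form, so it can stand in for a quick local check. A minimal sketch with a hypothetical `t_stu_copy(stuid, score)` table; note that without `ORDER BY`, "the first 100 rows" has no guaranteed order. (In Oracle, ROWNUM is assigned before `ORDER BY` is applied, so sort-then-take-N there needs the sort inside a subquery.)

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE t_stu_copy (stuid INTEGER PRIMARY KEY, score INTEGER)")
# Hypothetical data: 300 students with scrambled scores.
conn.executemany(
    "INSERT INTO t_stu_copy (stuid, score) VALUES (?, ?)",
    [(i, (i * 37) % 101) for i in range(1, 301)],
)

# MySQL-style "LIMIT offset, count": skip 0 rows, return 100 (rows 1 to 100).
first_100 = conn.execute(
    "SELECT stuid FROM t_stu_copy ORDER BY stuid LIMIT 0, 100"
).fetchall()

# Sort first, then take the top 10: ORDER BY is applied before LIMIT.
top_10 = conn.execute(
    "SELECT stuid, score FROM t_stu_copy ORDER BY score DESC, stuid LIMIT 10"
).fetchall()
print(top_10)
```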

- Introduction to some functions of the PyEEG module

脑电情绪识别

EEG emotion recognition, python, neural networks, deep learning, pycharm

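1. A brief introduction to pyeeg: PyEEG is a Python module (i.e., a function library) for extracting EEG (electroencephalogram) features; more functions are being added. It contains functions for building the data used in feature extraction, such as building an embedding sequence from a given time series. It can also export features in svmlight format for use with machine-learning and deep-learning tools. 2. Selected functions: 1. pyeeg.ap_entropy(X, M, R): computes the approximate entropy (A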
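Approximate entropy compares how often length-M patterns that match within tolerance R still match when extended to length M+1; regular signals score near 0. A from-scratch Python sketch of the standard textbook definition (Chebyshev distance, self-matches counted), not PyEEG's own code, whose details may differ:

```python
import math

def ap_entropy(x, m, r):
    """Approximate entropy ApEn(m, r) = phi(m) - phi(m + 1) of the sequence x."""
    n = len(x)

    def phi(k):
        # All overlapping length-k templates.
        templates = [x[i:i + k] for i in range(n - k + 1)]
        count = len(templates)
        total = 0.0
        for a in templates:
            # Chebyshev (max-abs) distance; the self-match keeps the count >= 1, so log() is safe.
            c = sum(1 for b in templates
                    if max(abs(p - q) for p, q in zip(a, b)) <= r)
            total += math.log(c / count)
        return total / count

    return phi(m) - phi(m + 1)

print(ap_entropy([1.0] * 20, 2, 0.1))  # constant signal: no new information, ApEn = 0
print(ap_entropy([0, 1] * 50, 2, 0.5))  # strictly periodic: close to 0
```

The double loop is O(N²) in the series length, which is fine for short EEG windows but slow for long recordings.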

- Cross-stitch Networks for Multi-task Learning project tutorial

童香莺Wyman

Cross-stitch Networks for Multi-task Learning project tutorial. Cross-stitch-Networks-for-Multi-task-Learning: A Tensorflow implementation of the paper arXiv:1604.03539. Project URL: https://gitcode.com/gh_mirrors/cr/Cross-stitch-Network
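The core idea of the cross-stitch paper (arXiv:1604.03539) is a unit placed between two task-specific networks that outputs, for each task, a learned linear combination of both tasks' activations at the same layer. A dependency-free Python sketch of that forward computation, assuming element-wise mixing and fixed (not trained) coefficients; `cross_stitch` and the sample activations are hypothetical:

```python
def cross_stitch(x_a, x_b, alpha):
    """Two-task cross-stitch unit applied element-wise to same-layer activations.

    alpha = [[a_aa, a_ab], [a_ba, a_bb]]; in a real network these values would be
    learned with the other weights. Here they are fixed for illustration."""
    (a_aa, a_ab), (a_ba, a_bb) = alpha
    out_a = [a_aa * u + a_ab * v for u, v in zip(x_a, x_b)]
    out_b = [a_ba * u + a_bb * v for u, v in zip(x_a, x_b)]
    return out_a, out_b

x_a = [1.0, 2.0, 3.0]  # hypothetical activations from task A's branch
x_b = [3.0, 2.0, 1.0]  # hypothetical activations from task B's branch

separate = cross_stitch(x_a, x_b, [[1.0, 0.0], [0.0, 1.0]])  # identity: no sharing
mixed = cross_stitch(x_a, x_b, [[0.9, 0.1], [0.1, 0.9]])     # mostly task-specific
print(mixed)
```

The identity matrix recovers two independent networks, while off-diagonal weights control how much each task borrows from the other, so the amount of sharing becomes something the network can learn.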

- Exploring a new dimension of multi-task learning: Cross-stitch Networks

计蕴斯Lowell

Exploring a new dimension of multi-task learning: Cross-stitch Networks. Cross-stitch-Networks-for-Multi-task-Learning: A Tensorflow implementation of the paper arXiv:1604.03539. Project URL: https://gitcode.com/gh_mirrors/cr/Cross-stitch-Networks-for-Multi-t

- [Localization] MATLAB implementation of TDOA localization simulation and GDOP calculation based on Chan's algorithm

天天Matlab代码科研顾问

algorithms, matlab, programming languages

Introduction: Localization based on Time Difference of Arrival (TDOA) is widely used in wireless positioning because it does not require knowing the exact time at which the source transmits. Chan's algorithm is a

- Druid configuration parameters

小莫分享

database, java, spring

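keepAlive keeps connections valid, i.e., it renews their lease with the database. When a connection has been idle longer than keepAliveBetweenTimeMillis (default 2 minutes) but shorter than minEvictableIdleTimeMillis (default 30 minutes), Druid runs validationQuery to keep the connection valid. When the idle time exceeds minEvictableIdleTimeMillis, Druid closes the connection directly; kee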
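The idle-time rule above amounts to three bands. A simplified Python model of just that rule, using the defaults quoted in the text; it is not Druid's actual code, and the real pool may also weigh other settings (for example minIdle) before evicting or pinging a connection. `idle_action` is a hypothetical name:

```python
# Defaults as quoted in the text above (milliseconds).
KEEP_ALIVE_BETWEEN_MS = 2 * 60 * 1000    # keepAliveBetweenTimeMillis
MIN_EVICTABLE_IDLE_MS = 30 * 60 * 1000   # minEvictableIdleTimeMillis

def idle_action(idle_ms, keep_alive=True,
                keep_alive_between_ms=KEEP_ALIVE_BETWEEN_MS,
                min_evictable_ms=MIN_EVICTABLE_IDLE_MS):
    """Simplified model of the described rule: close long-idle connections,
    ping moderately idle ones, leave recently used ones alone."""
    if idle_ms > min_evictable_ms:
        return "close"
    if keep_alive and idle_ms > keep_alive_between_ms:
        return "validate"  # the pool would run validationQuery here
    return "keep"

for minutes in (1, 5, 31):
    print(minutes, idle_action(minutes * 60 * 1000))
```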

- SpringBoot computer-store project: measuring business-method execution time with AOP

保持学习ing

springboot, java, back end, AOP, aspects

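Measuring business-method execution time with AOP. AOP (Aspect-Oriented Programming) is a programming paradigm that aims to improve modularity by separating **cross-cutting concerns**, making code more maintainable and reusable. 1. Concepts. 1.1 Core concepts. Aspect: a modular unit that encapsulates common behavior unrelated to the business logic (such as logging or access control). For example, `LoggingAspect` is a typical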
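In Spring the timing logic would typically live in an `@Around` advice that calls `ProceedingJoinPoint.proceed()` between two clock readings. The same "wrap the method, measure, report" idea as a Python decorator analog, not Spring code; `timed`, `reg`, and the `calls` log are hypothetical:

```python
import functools
import time

def timed(log):
    """Decorator playing the role of an around-style timing aspect: it wraps a
    business method and records its elapsed time without touching the method body."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)  # the "proceed" step
            finally:
                elapsed_ms = (time.perf_counter() - start) * 1000
                log.append((func.__name__, elapsed_ms))
        return wrapper
    return decorator

calls = []

@timed(calls)
def reg(username):  # hypothetical business method
    time.sleep(0.01)
    return f"registered {username}"

result = reg("tom")
print(result, calls)
```

Using `finally` means the duration is recorded even when the business method raises, which mirrors how an around advice can time failed calls too.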

- mqtt/kafka

leijmdas

kafka, distributed systems

MQTT (Message Queuing Telemetry Transport): MQTT is a lightweight, publish-subscribe network protocol that transports messages between devices. It's designed for connections with remote locations where a small code footprint is req

- [matplotlib] Heatmaps

captain811

matplotlib, visualization

The blog has moved to "捕获完成": https://www.v2python.com. Heatmaps: """Demonstrates similarities between pcolor, pcolormesh, imshow and pcolorfast for drawing quadrilateral grids.""" import matplotlib.pyplot as plt import numpy as np # make these sma

- Build problem: the libgazebo_ros_moveit_planning_scene.so issue

炎芯随笔

embedded systems, autonomous driving

The build problem is as follows: [98%] Building CXX object CMakeFiles/icw.dir/src/runtime/src/icwnode.cpp.o /usr/bin/aarch64-linux-gnu-g++ --sysroot=/usr/ubuntu_crossbuild_devkit/mdc_crossbuild_sysroot -DHAS_DDS_BINDING -I/home/yk/ecli

- Approaches to analyzing and mining large datasets: Kaggle project, insurance sales prediction

江枫渔火A

data analysis, machine learning, python

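1. Background: Kaggle's June season competition is an insurance sales prediction problem. The original dataset contains 381,109 insurance sales records, and the competition data was generated and expanded by a model built on the original dataset. This article works from the original dataset, as a starting point for discussing efficient solutions to the problem. Original data: Health Insurance Cross Sell Prediction (kaggle.com). 2. Problem description (original text): Our client is an insurance company that has provided health insurance to its customers; now they need your help in building a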

- OpenLayers: computing NDVI for a GeoTIFF image

GIS之路

OpenLayers, WebGIS, front end, information visualization

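Preface: NDVI (Normalized Difference Vegetation Index) is one of the key parameters reflecting crop growth and nutrition. It is used to monitor plant growth and vegetation cover and to remove part of the radiometric error. Its value lies in [-1, 1]: -1 indicates high reflectance of visible light; 0 indicates rock, bare soil, and the like, where NIR and R are approximately equal; positive values indicate vegetation cover, and the larger the value, the higher the vegetation cover. Formula: NDVI = (NIR - RED) / (NIR + RED). 1.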
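The formula is applied independently to every pixel of the NIR and red bands. A minimal Python sketch of that per-pixel arithmetic, not OpenLayers code; it assumes the bands are already read into flat lists of reflectance values and returns `None` where both bands are 0 (the helper names are hypothetical):

```python
def ndvi(nir, red):
    """NDVI = (NIR - RED) / (NIR + RED) for one pixel; None when undefined (0 / 0)."""
    denom = nir + red
    return None if denom == 0 else (nir - red) / denom

def ndvi_band(nir_band, red_band):
    """Apply the formula pixel by pixel to two equally sized bands."""
    return [ndvi(n, r) for n, r in zip(nir_band, red_band)]

print(ndvi(0.5, 0.1))   # dense vegetation: strongly positive
print(ndvi(0.2, 0.2))   # NIR about equal to red (e.g. bare soil): 0.0
print(ndvi(0.05, 0.3))  # visible light reflected more strongly: negative
```

Guarding the zero denominator matters in practice because no-data areas of a GeoTIFF are often stored as 0 in every band.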

- Getting weather information in Java

Getting weather information in Java. After registering, obtain an API key: https://www.visualcrossing.com/ Add the dependencies org.apache.httpcomponents:httpclient:4.5.13, com.opencsv:opencsv:5.5.2, and org.apache.poi:poi-ooxml:5.2.2. Demo: import java.io.*; import java.net.*; import java.nio.

- Gradient of the cross-entropy loss with respect to the activation input Z when the activation layer is softmax

Jcldcdmf

AI, machine learning, loss functions, cross-entropy, softmax

$$\frac{\partial L}{\partial Z} = \hat{y} - y$$

where $y$ is the ground truth, one-hot encoded, and $\hat{y}$ is the prediction output by softmax.

**Proof:** By the softmax formula,

$$\hat{y}_i = \frac{e^{z_i}}{\sum_{j=1}^{n} e^{z_j}}$$
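The identity is easy to sanity-check numerically: compare ŷ − y with a central finite-difference gradient of L = −Σ yᵢ log ŷᵢ. A self-contained Python sketch with hypothetical sample logits:

```python
import math

def softmax(z):
    m = max(z)  # subtract the max for numerical stability; the result is unchanged
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def cross_entropy(z, y):
    """L = -sum_i y_i * log(softmax(z)_i) for a one-hot target y."""
    return -sum(yi * math.log(pi) for yi, pi in zip(y, softmax(z)) if yi)

def numeric_grad(f, z, eps=1e-6):
    """Central-difference estimate of dL/dz_i for each component."""
    grad = []
    for i in range(len(z)):
        zp = list(z)
        zm = list(z)
        zp[i] += eps
        zm[i] -= eps
        grad.append((f(zp) - f(zm)) / (2 * eps))
    return grad

z = [2.0, 1.0, 0.1]  # hypothetical logits
y = [0, 1, 0]        # one-hot target
analytic = [p - t for p, t in zip(softmax(z), y)]
numeric = numeric_grad(lambda v: cross_entropy(v, y), z)
print(analytic)
print(numeric)
```

The two vectors agree to about six decimal places, and the analytic gradient sums to zero because both ŷ and y sum to 1.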

- *Effective Python*, Chapter 2, Strings and Slicing: understanding the differences between Python's character data types (bytes and str)

不学无术の码农

Effective Python close-reading notes, python, programming languages

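Introduction: This post summarizes and extends Item 10, "Know the Differences Between bytes and str", from Chapter 2, "Strings and Slicing", of *Effective Python* (3rd edition). In Python programming, string handling is a basic operation that almost every developer uses frequently. However, Python's two types, bytes and str, often confuse beginners and even experienced developers. Misusing these two types can lead to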
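The core difference in a few lines: `str` holds Unicode code points, `bytes` holds raw 8-bit values, and converting between them always goes through an explicit encoding. A short Python sketch, assuming UTF-8; the helper names `to_str` and `to_bytes` are hypothetical conveniences for normalizing input at a program's boundaries:

```python
def to_str(value, encoding="utf-8"):
    """Return str, decoding bytes if needed."""
    return value.decode(encoding) if isinstance(value, bytes) else value

def to_bytes(value, encoding="utf-8"):
    """Return bytes, encoding str if needed."""
    return value.encode(encoding) if isinstance(value, str) else value

text = "h\u00e9llo"           # str: 5 code points ("héllo")
data = text.encode("utf-8")   # bytes: "é" needs two bytes in UTF-8
print(len(text), len(data))   # 5 6
print(data[0])                # 104: indexing bytes yields an int, not a 1-char string

try:
    text + data               # mixing the two types is an error
except TypeError as exc:
    print("cannot mix str and bytes:", exc)
```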

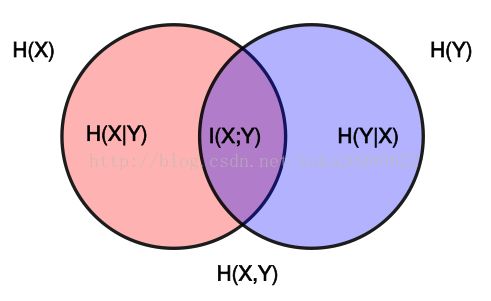

- Understanding self-information and information entropy: why is self-information computed this way?

Colin_Downey

essays, information entropy, machine learning, probability theory

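I never had a very intuitive feel for Shannon's information entropy, so today I took some time to study the question. Let's first look at how Shannon viewed "information" in communication: "The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another poi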
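One common justification for the formula I(x) = −log₂ p(x): information should shrink as an outcome becomes more likely, be 0 for a certain event, and add up for independent events, whose probabilities multiply; the logarithm turns that product into a sum. Entropy is then the expected self-information. A short Python sketch in bits:

```python
import math

def self_information(p):
    """Self-information in bits: I(x) = -log2 p(x)."""
    return -math.log2(p)

def entropy(probs):
    """Shannon entropy H = sum_x p(x) * I(x), skipping zero-probability outcomes."""
    return sum(p * self_information(p) for p in probs if p > 0)

print(self_information(0.5))          # a fair coin flip carries 1 bit
print(self_information(0.5 * 0.25))   # independent events: 1 + 2 = 3 bits
print(entropy([0.25] * 4))            # four equally likely outcomes: 2 bits
print(entropy([0.9, 0.1]))            # a biased coin: less than 1 bit
```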

- Understanding the differences between logits, softmax, and softmax_cross_entropy_with_logits

1010n111

machine learning

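Understanding the differences between logits, softmax, and softmax_cross_entropy_with_logits. Technical background: In machine learning, and especially deep learning, classification is a common task. To solve a classification problem, we convert the model's output into a probability distribution to determine how likely each class is. We also need a loss function that measures the difference between the model's predictions and the true labels, so that the model can be trained and optimized. In TensorFlow, logits, softmax, and so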
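Logits are the raw, unnormalized scores; softmax turns them into probabilities; a "with logits" loss takes the raw scores and fuses the two steps, which lets it use the log-sum-exp trick instead of taking the log of a probability that may have underflowed to 0. A pure-Python sketch of what such a loss computes for a single example; `xent_from_logits` is a hypothetical name, not TensorFlow's implementation:

```python
import math

def log_softmax(logits):
    """log(softmax(z)) via log-sum-exp, which avoids overflow for large logits."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - lse for z in logits]

def xent_from_logits(labels, logits):
    """Cross-entropy between a label distribution and softmax(logits), from raw logits."""
    return -sum(y * lp for y, lp in zip(labels, log_softmax(logits)))

print(xent_from_logits([0, 1, 0], [2.0, 1.0, 0.1]))
# Computing softmax naively here would call math.exp(1000) and raise OverflowError.
print(xent_from_logits([1, 0], [1000.0, 0.0]))
```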

- SOEM: cross-compiling with vscode

m0_55576290

motors, embedded systems, vscode, ide, editors

GitHub: SOEM. `arm-linux-gnueabihf.cmake`: a CMake toolchain file for ARM Linux cross-compilation. Set the target system: `set(CMAKE_SYSTEM_NAME Linux)`, `set(CMAKE_SYSTEM_PROCESSOR arm)`. Specify the cross compiler: `set(CMAKE_C_COMPI`

- Spring AOP: core principles and practical applications

刘一说

springboot, Java back-end tech stack, spring, java, servers, interviews, back end

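Spring AOP (Aspect-Oriented Programming) is one of the core modules of the Spring Framework; it decouples cross-cutting concerns (such as logging, transactions, and security) from the core business logic. The following is a detailed analysis of Spring AOP, covering its core concepts, how it works, how to use it, and typical use cases. I. Core AOP concepts. Cross-cutting concerns: functionality shared by multiple modules of a system (such as logging, permission checks, and transaction management),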

- AtCoder Beginner Contest 196, problems A to E: solutions

GoodCoder666

C++, competitive programming, #AtCoder, AtCoder

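ABC196 A to E. [A - Difference Max](https://atcoder.jp/contests/abc196/tasks/abc196_a): problem summary, input format, output format, samples, analysis, code. [B - Round Down](https://atcoder.jp/contests/abc196/tasks/abc196_b): problem summary, input format, output format, samples, analysis, code. [C - Doubled](https://atc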
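The excerpt does not include the problem statements. Assuming "C - Doubled" asks for the number of integers in [1, N] whose decimal form is some positive integer k written twice (11, 22, ..., 1010, 1111, ...), a brute force over k is enough, because the doubled value grows with k and k only needs about √N steps before its doubled form exceeds N. A Python sketch under that assumption:

```python
def count_doubled(n):
    """Count integers in [1, n] whose decimal form is a positive integer k written twice."""
    count = 0
    k = 1
    # int(str(k) * 2) increases with k, so stop at the first k that overshoots n.
    while int(str(k) * 2) <= n:
        count += 1
        k += 1
    return count

print(count_doubled(33))    # 11, 22, 33
print(count_doubled(1333))  # 9 two-digit cases plus 1010, 1111, 1212, 1313
```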

- [python] A simple demonstration of how a gateway, a service, and a client work

等风来不如迎风去

network services: getting started and practice, python, gateway, programming languages

A gateway appears to be mainly about protocol conversion. A gateway is a network node that acts as an entrance and exit point, connecting two networks that use different protocols. It allows data to flow between these networks, essentially acting as a translator between dif

- dwm open-source project: startup and configuration tutorial

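dwm open-source project: startup and configuration tutorial. dwm, Deno Window Manager: Cross-platform window creation and management. Project URL: https://gitcode.com/gh_mirrors/dwm4/dwm 1. Project directory structure: dwm is a lightweight window manager; its directory structure is as follows: dwm/ ├── config.h # configuration header ├── dwm.c # main program ├── dwm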

- Parsing APKs in Java

3213213333332132

java, apk, linux, APK parsing

There are two ways to parse an APK:

1. Use the apktool tool for Android and run its command from Java to get the APK information.

2. Parse the APK with the built-in methods of a related jar library.

Only the second method is given here, because the first one produces results beyond our control on a Linux server.

public class ApkUtil

{

/**

* Logger object

*/

private static Logger

- N ways to customize IP-based access control in nginx

ronin47

nginx, blocking IP access

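For business reasons, part of the internal network had to be blocked from accessing an API. Because an F5 load balancer sits in front of nginx, using deny or allow directly does not work: the address nginx sees is the F5's address, not the client's.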

So I started thinking about which approaches could meet this requirement; currently, three are workable:

1. Put the IP ranges in Redis and write a piece of Lua.

2. Use geo to pass a variable, and write a

- The CURRENT_TIMESTAMP and ON UPDATE CURRENT_TIMESTAMP attributes of MySQL timestamp columns

dcj3sjt126com

mysql

A timestamp column has two attributes, CURRENT_TIMESTAMP and ON UPDATE CURRENT_TIMESTAMP, used as follows:

1.

CURRENT_TIMESTAMP

When an insert is executed against the database, if a timestamp column has its attribute set to

CURRENT_TIMESTAMP, then regardless of whether this

- Paginated display with struts2 + spring + hibernate

171815164

Hibernate

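Paginated display has always been a tedious problem in web development. Traditional web pages put all the database code in a single JSP or ASP page, which makes pagination somewhat easier; once the site is developed in layers, however, pagination becomes harder. Below is the pagination code I designed for a Spring + Hibernate + Struts2 project, shared for discussion.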

1. DAO-layer interface design: the MemberDao interface defines the following two methods:

public in

- Building your own Wrapper application

g21121

rap

Now that we know Wrapper's directory structure, let's start using Wrapper to wrap our own application. Assume here that Wrapper is installed in /usr/local/wrapper.

First, create the project application.


- [Simple] Work notes: multithreading

53873039oycg

multithreading

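I recently ran into a multithreading problem. Originally, multiple APIs were requested asynchronously (n*3 requests). Option 1: use multiple threads and return all the data at once. At first I used 5 threads, each requesting 3 APIs sequentially and stopping and returning on timeout. Drawback: testing showed that the 3 APIs must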

- Debugging JDK source code and viewing JDK local variables

程序员是怎么炼成的

jdk, source code

Reposted from: http://www.douban.com/note/211369821/

For use when studying the JDK source:

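The best way to learn Java is to read the JDK source code. Faced with the vast JDK (the source alone is over 40 MB, more than the source of a large website), where do you start? Ideally, you could single-step into the JDK source in a debugger and inspect its local variables.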

Unfortunately, the JDK provided by Sun does not let you view local variables at run time

- Oracle RAC Failover explained

aijuans

oracle

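Oracle RAC provides both HA (High Availability) and LB (Load Balancing). The foundation of its high availability is failover: the failure of any node in the cluster does not affect users, because users connected to the failed node are automatically moved to a healthy node, and from the user's point of view the switch is imperceptible.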

Failover in Oracle 10g RAC falls into three types:

1. Client-Si

- How HTML forms encode submitted data and how Tomcat decodes it

antonyup_2006

JavaScript, tomcat, browsers, internet, servlet

Original post: http://www.iteye.com/topic/266705

A form has two methods for submitting data to the server, get and post; let's go through each.

(1) GET submission

1. First, how a form on the client (browser) encodes its data when submitting it to the server with the get method.

With the get method, the data is always appended to the request URL as parameters, for example: http://localhost:
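For a GET form, the browser percent-encodes each field using the page's character encoding and appends `name=value` pairs to the URL; the server must decode with the same charset. (Older Tomcat versions defaulted to ISO-8859-1 for the URI, a common source of garbled text.) A Python sketch of both sides, assuming a UTF-8 page; the field values, host, and path are hypothetical:

```python
from urllib.parse import unquote, urlencode

# Hypothetical form fields; the page is assumed to be UTF-8 encoded.
params = {"name": "张三", "q": "a b&c"}
query = urlencode(params, encoding="utf-8")  # spaces become "+", "&" becomes "%26"
url = "http://localhost:8080/demo/search?" + query  # hypothetical host and path
print(url)

# Server side: decoding with the matching charset restores the text;
# decoding the same bytes as ISO-8859-1 (latin-1) produces garbled characters.
encoded_name = query.split("&")[0].split("=")[1]
decoded_ok = unquote(encoded_name, encoding="utf-8")
decoded_wrong = unquote(encoded_name, encoding="latin-1")
print(decoded_ok, decoded_wrong)
```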

- JavaScript basics every beginner should know

百合不是茶

js functions, js getting started, basics

JavaScript is the interaction language of web pages and implements all kinds of page effects.

JavaScript is the most popular scripting language in the world.

JavaScript is the language of the web; it works on PCs, laptops, tablets, and mobile phones.

JavaScript was designed to add interactivity to HTML pages.

Many HTML developers are not programmers, but JavaScript has a very simple syntax. Almost anyone can add small

- iBatis pagination: analysis and explanation

bijian1013

java, ibatis

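Pagination is a common problem in database-backed systems. There are many ways to implement it, but their efficiency varies greatly. How does iBatis implement pagination? Looking at its implementation, the returned PaginatedList is actually an interface, implemented by objects of the PaginatedDataList class. Looking at PaginatedDataList, each time a page is turned, the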

- Mastering Oracle 10 SQL programming (15): using object types

bijian1013

oracle, databases, plsql

/*

*Using object types

*/

--Creating and using simple object types

--An object type has two parts: the object type specification and the object type body.

--Creating and using an object type that contains no methods

CREATE OR REPLACE TYPE person_typ1 as OBJECT(

name varchar2(10),gender varchar2(4),birthdate date

);

drop type p

- [Linux commands, part 2] The text-processing command awk

bit1129

linux commands

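awk is a Linux text-processing command that is widely used for log analysis in daily work. awk is an interpreted programming language with variables, arrays, loop control structures, conditional control structures, and more. Its syntax is C-like.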

What is the awk command used for?

1. awk suits text lines with a regular structure, extracting information from their columns.

2. awk can pass the line currently being processed to other Linux commands and then return their result to awk.

3. In practice, awk

- Analysis of an access-control scheme with Java (SSH2 framework) + Flex

白糖_

java

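The project currently uses a Struts2 + Hibernate + Spring architecture and already has a permission system for SSH2 that works well. But the project has a new requirement: gradually replace JSP with Flex on top of the current system. During the transition, Flex and JSP may coexist, so the permission system needs to be modified.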

[How the SSH2 permission system is implemented]

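Access control has two parts, the pages and the back end: accounts of different user types are assigned different access rights, and users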

- angular.forEach

boyitech

AngularJS, AngularJS API, angular.forEach

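angular.forEach. Description: invokes iterator once for each element of obj, which can be an Object or an Array. The iterator is called as iterator(value, key, obj), where obj is the object being iterated, key is obj's property key or the array index, and value is the corresponding value. (This function does not iterate over inherited properties.)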

- Java, Google interview question: given a sorted array, how do you build a binary search tree?

bylijinnan

binary search tree

import java.util.LinkedList;

public class CreateBSTfromSortedArray {

/**

* Problem: given a sorted array, build a binary search tree

* Recursive solution

*/

public static void main(String[] args) {

int[] data = { 1, 2, 3, 4,
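The recursive idea named in the comment above: take the middle element as the root, then build the left subtree from the left half and the right subtree from the right half; this keeps the tree height-balanced and runs in O(n). A Python sketch of that approach (the original is Java and its full code is cut off; `Node`, `build_bst`, `inorder`, and `height` are hypothetical names):

```python
class Node:
    def __init__(self, value, left=None, right=None):
        self.value = value
        self.left = left
        self.right = right

def build_bst(data, lo=0, hi=None):
    """Build a balanced BST from sorted data[lo..hi] by recursing on the middle element."""
    if hi is None:
        hi = len(data) - 1
    if lo > hi:
        return None
    mid = (lo + hi) // 2
    return Node(data[mid], build_bst(data, lo, mid - 1), build_bst(data, mid + 1, hi))

def inorder(node):
    """In-order traversal of a BST returns the values in sorted order."""
    return inorder(node.left) + [node.value] + inorder(node.right) if node else []

def height(node):
    return 1 + max(height(node.left), height(node.right)) if node else 0

root = build_bst([1, 2, 3, 4, 5, 6, 7])
print(root.value, height(root), inorder(root))  # 4 3 [1, 2, 3, 4, 5, 6, 7]
```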

- An action executes twice

Chen.H

JavaScript, jsp, XHTML, css, Webwork

xwork wrote: <action name="userTypeAction"

class="com.ekangcount.website.system.view.action.UserTypeAction">

<result name="ssss" type="dispatcher">

- [Space-time and energy] Reversing space-time requires enormous amounts of energy

comsci

energy

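In any case, humanity has always wanted to escape the limits of time and space. But because of the relationship between mass and energy, we humans cannot, now or for a long time to come, obtain the large amounts of cheap energy needed to travel across space-time.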

In experiments on space-time travel, consuming energy on an enormous scale is inevitable

- Oracle regular expressions explained in detail

daizj

oracle, regular expressions

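Many programming languages have regular expressions. Unfortunately, Oracle 8i and Oracle 9i never added them, but Oracle 10g finally introduced the long-awaited regular-expression support. In Oracle 10g you can use regular expressions to match any string you like.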

The commonly used metacharacters in regular expressions are:

^ matches the start of the string.

$ matches the end of the string.

*

- The relationship between reporting tools and report performance

datamachine

reporting tools, birt, report performance, RunQian Report

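When choosing a reporting tool, performance has always been a metric users care about. But how much does the reporting tool's performance actually affect the performance of the reporting system as a whole?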

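To answer this question, we first need to analyze which stages report processing involves, which stages are prone to performance bottlenecks, and how to optimize them.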

I. Analysis of the general report-processing workflow

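1. After the user selects the report's input parameters, the report engine parses the report based on the report template and the input parameters, and sends data computation and retrieval requests to the database as SQL.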

2.

- Hard-to-memorize words, first semester of seventh grade: lesson 1

dcj3sjt126com

word, english

what 什么

your 你的

name 名字

my 我的

am 是

one 一

two 二

three 三

four 四

five 五

class 班级,课

six 六

seven 七

eight 八

nine 九

ten 十

zero 零

how 怎样

old 老的

eleven 十一

twelve 十二

thirteen

- Technologies I have learned and plan to learn

dcj3sjt126com

technology

Languages: VB https://msdn.microsoft.com/zh-cn/library/2x7h1hfk.aspx, Java http://docs.oracle.com/javase/8/, C# https://msdn.microsoft.com/library/vstudio, PHP http://php.net/manual/en/, Html

- Preventing duplicate form submission with tokens in struts2

蕃薯耀

duplicate form submission, struts2, token

Preventing duplicate form submission with tokens in struts2

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

蕃薯耀, Sunday, July 12, 2015, 11:52:32

ht

- Linear search in a two-dimensional array

hao3100590

two-dimensional arrays

1. Algorithm description

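Search a sorted two-dimensional array (each row increases from left to right and each column increases from top to bottom), with a required complexity of O(n) for an n × n array, i.e. O(m + n) for m rows and n columns.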

2. Related concepts used:

Defining and using structs; passing two-dimensional arrays (http://blog.csdn.net/yzhhmhm/article/details/2045816)

3. Passing by array name

The drawback is obvious: once declared, the column count is fixed and cannot be changed.

//Using
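The usual linear-time approach for this kind of matrix starts at the top-right corner: if the current value is larger than the target, the whole column below it is larger too, so move left; if it is smaller, the whole row to its left is smaller, so move down. Each step discards a row or a column, giving at most rows + cols steps. A Python sketch of that technique (the original is C, and its own code is cut off; `search_sorted_matrix` and the sample matrix are hypothetical):

```python
def search_sorted_matrix(matrix, target):
    """Return (row, col) of target in a row- and column-sorted matrix, or None."""
    if not matrix or not matrix[0]:
        return None
    row, col = 0, len(matrix[0]) - 1  # start at the top-right corner
    while row < len(matrix) and col >= 0:
        value = matrix[row][col]
        if value == target:
            return (row, col)
        if value > target:
            col -= 1  # everything below in this column is even larger
        else:
            row += 1  # everything to the left in this row is even smaller
    return None

grid = [
    [1, 2, 8, 9],
    [2, 4, 9, 12],
    [4, 7, 10, 13],
    [6, 8, 11, 15],
]
print(search_sorted_matrix(grid, 7))  # (2, 1)
print(search_sorted_matrix(grid, 5))  # None
```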

- Spring Security 3 recommends BCrypt for password hashing

jackyrong

Spring Security

Spring Security 3 now recommends the BCrypt algorithm for hashing passwords; previously MD5 was used:

Md5PasswordEncoder and ShaPasswordEncoder are no longer recommended; BCrypt is recommended instead.

In BCrypt the salt can be random, for example:

int i = 0;

while (i < 10) {

String password = "1234

- Learning to program isn't hard: just do the following!

lampcy

java, html, programming languages

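Whether you want to design your own games, develop apps for iPhone or Android phones, or just program for fun, learning a programming language is the way to get there. There are many programming languages with different uses, but once you master one, the others come much more easily. As a beginner, you might start with Java or HTML; once you have mastered a programming language, you can let your imagination run free and develop all kinds of amazing software.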

1. Set a goal

Learning a programming language is both fun and challenging. Some college students who spend years learning a programming language

- Architect's MySQL notes: finding duplicate data with GROUP BY + INNER JOIN, LEFT JOIN, RIGHT JOIN (instead of IN)

nannan408

right join

1. Preface

As the title says.

2. Code

(1) Finding duplicate data in a single table, grouped by a

SELECT m.a, m.b FROM test.`A` m INNER JOIN (SELECT a, COUNT(*) AS cnt FROM test.`A` GROUP BY a HAVING cnt > 1) k ON m.a = k.a

(2) Multi-table query:

Switch to le
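The single-table query above can be tried locally with Python's built-in sqlite3 module standing in for MySQL (the `test.` schema prefix is dropped, and the table contents are hypothetical). The grouped subquery finds the values of `a` that occur more than once, and the join pulls back every full row with those values; the sketch also checks that it matches the `IN` form it is meant to replace:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE A (id INTEGER PRIMARY KEY, a TEXT, b TEXT)")
conn.executemany(
    "INSERT INTO A (a, b) VALUES (?, ?)",
    [("x", "1"), ("x", "2"), ("y", "3"), ("z", "4"), ("z", "5"), ("z", "6")],
)

# GROUP BY + INNER JOIN: rows whose value of a appears more than once.
join_rows = conn.execute("""
    SELECT m.a, m.b
    FROM A m
    INNER JOIN (SELECT a, COUNT(*) AS cnt FROM A GROUP BY a HAVING COUNT(*) > 1) k
        ON m.a = k.a
    ORDER BY m.a, m.b
""").fetchall()

# The IN form being replaced, for comparison.
in_rows = conn.execute("""
    SELECT a, b FROM A
    WHERE a IN (SELECT a FROM A GROUP BY a HAVING COUNT(*) > 1)
    ORDER BY a, b
""").fetchall()
print(join_rows)
```

Which form runs faster depends on the database engine and its optimizer, so it is worth checking the execution plan on the real MySQL data.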

- A summary of jQuery selectors vs. node lookup (with some CSS notes)

Everyday都不同

jquery, css, name selectors, appending elements, finding nodes

I've been building front-end pages recently and use jQuery selectors a lot, so here is a summary:

Test page:

<html>

<head>

<script src="jquery-1.7.2.min.js"></script>

<script>

/*$(function() {

$(documen

- About EXT

tntxia

ext

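ExtJS is a very good Ajax framework for developing rich-client applications with a polished look, making our B/S (browser/server) applications more lively. ExtJS is a front-end Ajax framework written in JavaScript and independent of back-end technology, so it can be used in applications developed in .NET, Java, PHP, and other languages.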

ExtJS was originally based on YUI and was created by developer Jack

- An MIT computer science PhD's reflections on mathematics

xjnine

Math

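Over the past year I have been wandering in the ocean of mathematics. My research has not progressed much, but my experience of the mathematical world has grown. Why go deep into mathematics? As a computer science student, I have no ambition to become a mathematician. My goal in studying mathematics is to climb onto the shoulders of giants, hoping to stand higher and see my own research more deeply and broadly. When I first came to this school, I did not expect that I would take a deep journey into mathematics. The topic my advisor originally wanted me to work on was appe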