ffmpeg Study Notes 4: A Simple ffmpeg Wrapper Class with Decoding and Timed Recording

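Based on material found online, I wrapped ffmpeg in a simple class and am recording it here. The sensor-data encoding and other project-specific code have been stripped out, so only the decoding and recording parts remain. Decoded frames are converted to an OpenCV image for display, while the compressed packets are copied, without re-encoding, into MP4 files that are rotated every fps*3600 packets (one hour at the nominal frame rate). This is only a toy-level test. Full test code download: code download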
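The recording side splits the stream by counting packets, not by watching the clock: the constructor sets m_Toatlframenums to fps*60*60, and DecodeFrame() rotates to a new MP4 file once about that many packets have been written. The standalone sketch below illustrates that arithmetic. The function names are hypothetical and not part of the class, and it assumes one packet per video frame (which is what av_read_frame() returns for video). It also shows how a file's real length drifts when the camera's actual frame rate differs from the configured fps.

```cpp
#include <cassert>
#include <cstdint>

// Packets per recording file, as in the constructor: fps * 60 * 60.
std::int64_t PacketsPerSegment(int fps)
{
    assert(fps > 0);
    return static_cast<std::int64_t>(fps) * 60 * 60;
}

// Zero-based file (segment) that the zero-based n-th recorded packet lands in.
std::int64_t SegmentIndex(std::int64_t packetIndex, int fps)
{
    return packetIndex / PacketsPerSegment(fps);
}

// Real length in seconds of one file when the stream actually delivers
// actualFps frames per second while the class was configured with fps.
double SegmentSeconds(int fps, double actualFps)
{
    assert(actualFps > 0.0);
    return static_cast<double>(PacketsPerSegment(fps)) / actualFps;
}
```

For example, a camera that actually sends 20 fps while fps is left at 25 produces files of 90,000 / 20 = 4,500 s, i.e. 75 minutes instead of one hour.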

ffmpegDeCode.h

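```cpp
#pragma once

#include "stdafx.h"
#include <iostream>
#include "cxcore.h" // IplImage (OpenCV C API)

using namespace std;

extern char *VideoPath; // directory in which recordings are written
extern int CameraIndex; // zero-based camera index; CameraIndex+1 appears in the file name
extern int fps;         // nominal frame rate, used to size the recording files

extern "C"
{
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libswscale/swscale.h"
#include "libavdevice/avdevice.h"
}

class ffmpegDeCode
{
public:
    ffmpegDeCode();
    ~ffmpegDeCode();

    bool DecoderInit(const char *sdpurl); // open the input and the decoder
    void DecodeFrame();                   // read, decode and record one packet
    void DecodeLastFrame();               // declared only; not implemented in this excerpt
    void StopDecodeFrame();               // finish recording and release the decoder
    void GetopencvFrame();                // convert pFrame into pCVFrame

    ////////// decoding //////////
    AVFrame *pFrame;             // decoded frame
    AVFormatContext *pFormatCtx; // input (demuxer) context
    int videoindex;              // index of the video stream
    AVCodecContext *pCodecCtx;   // decoder context: codec type (video, audio, ...) and settings
    AVCodec *pCodec;             // decoder
    long m_framenums;            // number of decoded frames
    SwsContext *img_convert_ctx; // pixel-format conversion context
    AVPacket *packet;            // compressed data from av_read_frame(); for video, one packet
                                 // holds one complete frame (one or more NAL units)
    IplImage *pCVFrame;          // latest decoded frame as an OpenCV image
    char datatime[60];           // date/time string used in the recording file name
    char Recordname[100];        // path of the current recording file

    ////////// recording //////////
    AVStream *i_video_stream;     // input video stream
    AVFormatContext *opFormatCtx; // output (MP4) context
    AVStream *o_video_stream;     // output video stream
    void WriteVideoHead();        // open a new recording file and write its header
    void WriteVideoTail();        // write the trailer and close the current file
    int m_recordframenums;        // packets written to the current file
    int m_Toatlframenums;         // packets per file (fps*3600)
    int64_t last_pts;             // timestamps are 64-bit in FFmpeg
    int64_t last_dts;
    int64_t pts, dts;
    int count;                    // recording file counter
};
```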
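pCVFrame is an 8-bit, 3-channel IplImage, and cvCreateImage() pads every row to a multiple of 4 bytes by default, so its widthStep is not always width*3. A single memcpy of width*height*3 bytes is only correct when the two agree; otherwise rows have to be copied one at a time (or the converter has to be told the padded stride). The standalone sketch below uses plain C++ with hypothetical helper names and no OpenCV: it computes the padded stride and copies a tightly packed BGR buffer into a padded one.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Row stride of an 8-bit image padded to a 4-byte boundary (cvCreateImage's default).
int PaddedStride(int width, int channels)
{
    assert(width > 0 && channels > 0);
    return (width * channels + 3) & ~3;
}

// Copy a tightly packed image (rows of width*channels bytes) into a buffer whose
// rows start dstStride bytes apart; the padding bytes at the end of each row are untouched.
void CopyRows(const unsigned char *src, int width, int height, int channels,
              unsigned char *dst, int dstStride)
{
    const std::size_t rowBytes = static_cast<std::size_t>(width) * channels;
    assert(dstStride >= static_cast<int>(rowBytes));
    for (int y = 0; y < height; ++y)
        std::memcpy(dst + static_cast<std::size_t>(y) * dstStride,
                    src + static_cast<std::size_t>(y) * rowBytes, rowBytes);
}
```

At 320x240, the size used in the RTP test at the end of this post, 320*3 = 960 is already a multiple of 4, which is why a flat memcpy can appear to work; a width such as 318 (954 bytes per row, padded to 956) exposes the problem.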

ffmpegDeCode.cpp

```cpp
#include "stdafx.h"
#include <cstdio>
#include <cstdlib>
#include <cstring>
#include "ffmpegDeCode.h"
//#include "sqlite/sqlite3.h" // not used by the code below
#include <fstream>
#include <windows.h>

SYSTEMTIME sys;   // system time for log entries
char systime[50]; // formatted timestamp
ofstream outfile; // log of errors and status messages

// Append a timestamped message to the log.
static void LogMessage(const char *msg)
{
    // Assumption: outfile is never opened in this excerpt, so open a log file on first use.
    if(!outfile.is_open())
        outfile.open("ffmpegDeCode.log", ios::app);
    GetLocalTime(&sys);
    sprintf(systime, "\n%4d-%02d-%02d-%02d:%02d:%02d.%03d", sys.wYear, sys.wMonth, sys.wDay,
            sys.wHour, sys.wMinute, sys.wSecond, sys.wMilliseconds);
    outfile << systime << "-->" << msg;
    outfile.flush();
}

ffmpegDeCode::ffmpegDeCode()
{
    pFrame = NULL;
    pFormatCtx = NULL;
    videoindex = 0;
    pCodecCtx = NULL;
    pCodec = NULL;
    m_framenums = 0;
    img_convert_ctx = NULL;
    packet = NULL;
    pCVFrame = NULL;
    ////////// recording //////////
    i_video_stream = NULL;
    opFormatCtx = NULL;
    o_video_stream = NULL;
    m_recordframenums = 1;
    last_pts = 0;
    last_dts = 0;
    pts = 0;
    dts = 0;
    count = 1;
    m_Toatlframenums = fps*60*60;          // packets per file: one hour at the nominal rate
    memset(datatime, 0, sizeof(datatime)); // was 100 bytes, overrunning the 60-byte array
    memset(Recordname, 0, sizeof(Recordname));
}

ffmpegDeCode::~ffmpegDeCode()
{
    StopDecodeFrame(); // same cleanup as a manual stop; every step is safe to repeat
}

void ffmpegDeCode::StopDecodeFrame() // manual stop; also finishes the current recording
{
    WriteVideoTail(); // close the MP4 so that it stays playable
    if(pCVFrame)
        cvReleaseImage(&pCVFrame); // also sets pCVFrame to NULL
    if(img_convert_ctx)
    {
        sws_freeContext(img_convert_ctx);
        img_convert_ctx = NULL;
    }
    if(packet)
    {
        av_free_packet(packet);
        av_free(packet);
        packet = NULL;
    }
    if(pCodecCtx)
    {
        avcodec_close(pCodecCtx);
        pCodecCtx = NULL;
    }
    if(pFormatCtx)
        avformat_close_input(&pFormatCtx); // also sets pFormatCtx to NULL
    m_framenums = 0;
}

/* Initialize ffmpeg and open the input */
bool ffmpegDeCode::DecoderInit(const char *sdpurl)
{
    // 1) register all muxers, demuxers and codecs
    av_register_all();
    avformat_network_init();   // required for network streams
    //avdevice_register_all(); // required when opening a camera directly

    pFormatCtx = avformat_alloc_context();

    // 2) open the file, camera or network stream. avformat_open_input() reads the header
    // and stores what it finds in pFormatCtx. The last two arguments force an input format
    // and pass demuxer options; with NULL, libavformat probes the format itself.
    // Newer FFmpeg builds only read RTP described by a local .sdp file if the
    // "protocol_whitelist" option (e.g. "file,udp,rtp") is passed in that options dictionary.
    if(avformat_open_input(&pFormatCtx, sdpurl, NULL, NULL) != 0)
    {
        LogMessage("Couldn't open stream!");
        StopDecodeFrame();
        return false;
    }

    // 3) avformat_open_input() only reads the header; probe the streams for details
    if(avformat_find_stream_info(pFormatCtx, NULL) < 0)
    {
        LogMessage("Couldn't find stream information!");
        StopDecodeFrame();
        return false;
    }

    // find the first video stream
    videoindex = -1;
    for(unsigned int i = 0; i < pFormatCtx->nb_streams; i++)
    {
        if(pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO)
        {
            videoindex = i;
            i_video_stream = pFormatCtx->streams[i];
            break;
        }
    }
    if(videoindex == -1)
    {
        LogMessage("Couldn't find a video stream!");
        StopDecodeFrame();
        return false;
    }
    pCodecCtx = pFormatCtx->streams[videoindex]->codec;

    // 4) find a decoder for the stream's codec
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if(pCodec == NULL)
    {
        LogMessage("Couldn't find codec!");
        StopDecodeFrame();
        return false;
    }

    // 5) open the decoder
    if(avcodec_open2(pCodecCtx, pCodec, NULL) < 0)
    {
        LogMessage("Couldn't open codec!");
        StopDecodeFrame();
        return false;
    }

    // empty packet; av_read_frame() allocates the payload itself
    packet = (AVPacket *)av_malloc(sizeof(AVPacket));
    av_init_packet(packet);
    packet->data = NULL;
    packet->size = 0;

    if(NULL == pCVFrame)
        pCVFrame = cvCreateImage(cvSize(pCodecCtx->width, pCodecCtx->height), 8, 3); // 8-bit BGR
    return true;
}

/* Convert the decoded frame to BGR (OpenCV's channel order) and store it in pCVFrame */
void ffmpegDeCode::GetopencvFrame()
{
    // swscale writes straight into the IplImage. Passing widthStep as the stride honours the
    // row padding of cvCreateImage(), which a flat memcpy of width*height*3 bytes ignores.
    // Converting to BGR24 directly replaces swapping the U and V planes before an RGB24
    // conversion, which only approximates the right colours.
    uint8_t *dst[4] = { (uint8_t *)pCVFrame->imageData, NULL, NULL, NULL };
    int dstStride[4] = { pCVFrame->widthStep, 0, 0, 0 };
    sws_scale(img_convert_ctx, pFrame->data, pFrame->linesize, 0, pCodecCtx->height,
              dst, dstStride);
}

void ffmpegDeCode::DecodeFrame()
{
    int decode_result = 0;
    int got_picture = 0; // non-zero once a complete picture has been decoded

    // For video, av_read_frame() returns one complete frame per AVPacket,
    // together with its pts, dts and flags.
    if(pFormatCtx == NULL || av_read_frame(pFormatCtx, packet) < 0)
    {
        LogMessage("Couldn't read a packet (end of stream or read error)!");
        StopDecodeFrame(); // writes the MP4 trailer before quitting
        exit(1);
    }
    //SavedSensorData(); // parse sensor data (removed from this excerpt)

    pFrame = avcodec_alloc_frame(); // receives the decoded picture
    if(packet->stream_index == videoindex)
    {
        // decode first: av_interleaved_write_frame() below takes over the packet data
        decode_result = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);

        ////////// segmented recording //////////
        // skip the first 30 frames, when the sensor data may still be missing or invalid
        if(m_framenums >= 30)
        {
            if(opFormatCtx == NULL) // no file open: start a new one
            {
                WriteVideoHead();
                // first timestamp of the file, subtracted below so that each file starts at 0
                dts = (packet->dts != AV_NOPTS_VALUE) ? packet->dts : packet->pts;
                if(dts == AV_NOPTS_VALUE)
                    dts = 0;
            }
            if(opFormatCtx != NULL)
            {
                // Keep the packet's own key-frame flag: forcing AV_PKT_FLAG_KEY on every
                // packet would mark every frame in the MP4 as a seek point.
                AVRounding rnd = (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX);
                if(packet->pts != AV_NOPTS_VALUE)
                    packet->pts -= dts;
                if(packet->dts != AV_NOPTS_VALUE)
                    packet->dts -= dts;
                // convert from the input stream's time base to the one the MP4 muxer chose
                packet->pts = av_rescale_q_rnd(packet->pts, i_video_stream->time_base, o_video_stream->time_base, rnd);
                packet->dts = av_rescale_q_rnd(packet->dts, i_video_stream->time_base, o_video_stream->time_base, rnd);
                packet->duration = (int)av_rescale_q(packet->duration, i_video_stream->time_base, o_video_stream->time_base);
                packet->pos = -1;
                packet->stream_index = 0; // the output file has a single stream
                av_interleaved_write_frame(opFormatCtx, packet);
                if(++m_recordframenums > m_Toatlframenums)
                    WriteVideoTail(); // file full; the next packet opens a new one
            }
        }

        if(decode_result < 0)
        {
            LogMessage("Decoding error!");
        }
        else if(got_picture) // a complete frame was decoded
        {
            m_framenums++;
            // BGR24 matches OpenCV's channel order; the cached context is reused across frames
            img_convert_ctx = sws_getCachedContext(img_convert_ctx,
                pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,
                pCodecCtx->width, pCodecCtx->height, PIX_FMT_BGR24,
                SWS_BICUBIC, NULL, NULL, NULL);
            if(img_convert_ctx != NULL)
                GetopencvFrame();
        }
    }
    av_free(pFrame);
    pFrame = NULL;
    av_free_packet(packet);
}

void ffmpegDeCode::WriteVideoHead()
{
    // datatime is not filled anywhere in this excerpt, so every file would get the same
    // name and overwrite the previous one; stamp it with the local start time instead.
    GetLocalTime(&sys);
    sprintf(datatime, "%04d%02d%02d%02d%02d%02d", sys.wYear, sys.wMonth, sys.wDay,
            sys.wHour, sys.wMinute, sys.wSecond);
    sprintf(Recordname, "%sCam%d-%s.mp4", VideoPath, CameraIndex + 1, datatime);

    avformat_alloc_output_context2(&opFormatCtx, NULL, NULL, Recordname); // MP4 guessed from the name
    if(opFormatCtx == NULL)
    {
        LogMessage("Couldn't create the output context!");
        return;
    }

    // One output stream copying the input's codec parameters (no re-encoding).
    // avcodec_copy_context() also copies the extradata (SPS/PPS for H.264) the MP4 header needs.
    o_video_stream = avformat_new_stream(opFormatCtx, NULL);
    avcodec_copy_context(o_video_stream->codec, i_video_stream->codec);
    o_video_stream->codec->codec_tag = 0; // let the MP4 muxer pick its own tag
    o_video_stream->codec->time_base = i_video_stream->time_base;
    if(opFormatCtx->oformat->flags & AVFMT_GLOBALHEADER)
        o_video_stream->codec->flags |= CODEC_FLAG_GLOBAL_HEADER;

    if(avio_open(&opFormatCtx->pb, Recordname, AVIO_FLAG_WRITE) < 0 ||
       avformat_write_header(opFormatCtx, NULL) < 0)
    {
        LogMessage("Couldn't write the video header!");
        avio_close(opFormatCtx->pb);
        avformat_free_context(opFormatCtx);
        opFormatCtx = NULL;
        o_video_stream = NULL;
        return;
    }
    LogMessage("Video header written!");
}

void ffmpegDeCode::WriteVideoTail()
{
    if(opFormatCtx)
    {
        // the trailer holds the MP4 index (moov); without it the file cannot be played
        if(av_write_trailer(opFormatCtx) >= 0)
            LogMessage("Video trailer written!");
        avio_close(opFormatCtx->pb);
        avformat_free_context(opFormatCtx); // also frees the stream and its codec context
        opFormatCtx = NULL;                 // allows repeated calls and the next WriteVideoHead()
        o_video_stream = NULL;
    }
    m_recordframenums = 1;
    dts = 0;
}
```
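When packets are stream-copied into a new file, their timestamps have to be shifted so that each file starts near zero, and then converted from the input stream's time base to the output stream's (the MP4 muxer may choose its own time base in avformat_write_header()). FFmpeg's av_rescale_q() performs that conversion with overflow-safe arithmetic. The standalone sketch below re-creates the idea with plain 64-bit integers and round-to-nearest, purely for illustration: the Rational struct and the function names are hypothetical, and the simplified multiplication can overflow for very large timestamps.

```cpp
#include <cassert>
#include <cstdint>

struct Rational { std::int64_t num, den; }; // hypothetical stand-in for AVRational

// ts * in / out, rounded to nearest with halves away from zero
// (the same rounding av_rescale_q() uses), for moderate values only.
std::int64_t Rescale(std::int64_t ts, Rational in, Rational out)
{
    assert(in.den > 0 && out.num > 0);
    const std::int64_t n = ts * in.num * out.den;
    const std::int64_t d = in.den * out.num;
    return n >= 0 ? (n + d / 2) / d : -((-n + d / 2) / d);
}

// Shift a timestamp by the first timestamp of the current file, then rescale it.
std::int64_t SegmentTimestamp(std::int64_t ts, std::int64_t segmentStart,
                              Rational in, Rational out)
{
    return Rescale(ts - segmentStart, in, out);
}
```

With FFmpeg itself, the rescaling step would be av_rescale_q(ts, i_video_stream->time_base, o_video_stream->time_base) applied to packet->pts and packet->dts before av_interleaved_write_frame(). For example, 2 s of a 90 kHz RTP clock (180,000 ticks after the file's first packet) becomes 25,600 in a 1/12800 time base.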

Test code:

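```cpp
#include "stdafx.h"
#include "ffmpegDeCode.h"
#include "highgui.h"
#include "cv.h"
#include "cxcore.h"
#include <windows.h>

static char VideoDir[] = "D:\\";
char *VideoPath = VideoDir; // recordings go to D:\ (a modifiable array, not a string literal)
int CameraIndex = 3;        // the test data is stored under Cam4_SensorData
int fps = 25;
const char *sdpurl1 = "test1.sdp"; // a local file also works, e.g. D:\\DataSaved\\HC_Record_ch04_20160410145430.mp4
ffmpegDeCode deCode1;

int _tmain(int argc, _TCHAR* argv[])
{
    ////////// initialization //////////
    if(!deCode1.DecoderInit(sdpurl1))
    {
        cout << "Failed to open the stream!" << endl;
        return -1; // without an open input, DecodeFrame() would only exit()
    }
    cvNamedWindow("Decode1");

    ////////// decode and display //////////
    while(1)
    {
        deCode1.DecodeFrame(); // exits the program at the end of the stream
        cout << "-------> stream 1, frame " << deCode1.m_framenums << endl;
        if(deCode1.pCVFrame)
        {
            cvShowImage("Decode1", deCode1.pCVFrame);
            cvWaitKey(5); // 1000/fps would match the nominal frame rate
        }
    }
    return 0;
}
```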
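The cvWaitKey(5) call is annotated with the nominal delay 1000/fps. If the loop should play a local file at roughly its real speed, one option is to subtract the time already spent decoding from that per-frame budget. The sketch below uses a hypothetical helper, WaitMsForFrame, that is not part of the original program and assumes the caller measures the elapsed decode time itself (for example with std::chrono::steady_clock). For a live RTP source the sender already sets the pace, so a short fixed wait like the original 5 ms is arguably the better choice.

```cpp
#include <cassert>

// Milliseconds to pass to cvWaitKey() so that one loop iteration takes about
// 1000/fps ms, given how long decoding and conversion already took.
// cvWaitKey(0) would block until a key is pressed, so the result is at least 1 ms.
int WaitMsForFrame(int fps, int elapsedMs)
{
    assert(fps > 0 && elapsedMs >= 0);
    const int budget = 1000 / fps;
    const int remaining = budget - elapsedMs;
    return remaining > 1 ? remaining : 1;
}
```

At 25 fps the budget is 40 ms, so a frame that took 10 ms to decode waits another 30 ms.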

Test screenshot:

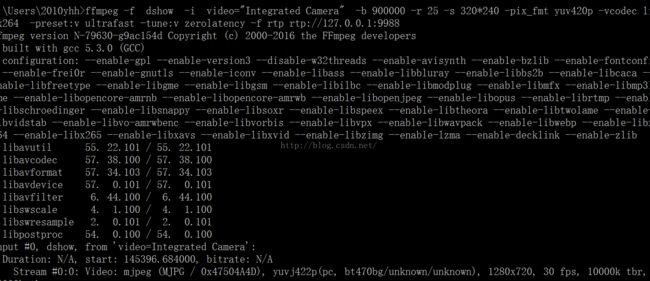

Decoding an RTP stream:

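Here the RTP stream is produced by the laptop's built-in camera for testing; a sender board or another computer can send the RTP stream just as well. The command below captures the camera through DirectShow, encodes it with libx264 at 320x240 and 25 fps, and sends it to rtp://127.0.0.1:9988. The SDP description that ffmpeg prints for the RTP output is redirected into test1.sdp, the file the test program opens. "Integrated Camera" is the DirectShow name of this laptop's camera; `ffmpeg -list_devices true -f dshow -i dummy` lists the device names on other machines.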

```
ffmpeg -f dshow -i video="Integrated Camera" -b:v 1500k -r 25 -s 320x240 -pix_fmt yuv420p -vcodec libx264 -preset:v ultrafast -tune:v zerolatency -f rtp rtp://127.0.0.1:9988 > test1.sdp
```
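test1.sdp is plain text in the Session Description Protocol format (RFC 4566). The line that matters most here is the media description, typically of the form `m=video <port> RTP/AVP <payload type>`, whose port must match the one in the rtp:// URL (9988 above). Before calling DecoderInit() it can be worth checking that the redirect actually produced such a line. The sketch below is a hypothetical helper, not part of the original program, that extracts the video port from SDP text.

```cpp
#include <cassert>
#include <istream>
#include <sstream>
#include <string>

// Returns the port of the first "m=video <port> ..." line, or -1 if there is none.
int FindVideoPort(std::istream &sdp)
{
    std::string line;
    while (std::getline(sdp, line)) {
        if (line.compare(0, 8, "m=video ") != 0)
            continue; // not a video media description
        std::istringstream fields(line.substr(8));
        int port = -1;
        if (fields >> port)
            return port;
    }
    return -1;
}
```

Used with a file, this would be `std::ifstream f("test1.sdp"); int port = FindVideoPort(f);`, with a result of -1 meaning the SDP is missing or incomplete.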