Spark Streaming and the Advanced Data Source Kafka

There are two approaches to integrating Spark Streaming with Kafka.

Approach 1: Receiver-based Approach

Approach 2: Direct Approach (No Receivers)

Example 1----KafkaReceive

----------------------------------------------------------Prerequisites----------------------------------------------------------------------------

Start the ZooKeeper cluster.

Start the Kafka cluster.

-------------------------------------------------------------------------------------------------------------------------------------------------

1. Create a topic named "sparkStreamingOnKafkaReceive" in Kafka:

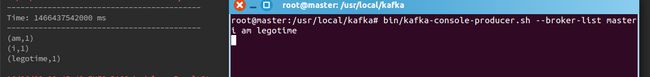

root@master:/usr/local/kafka# bin/kafka-topics.sh --create --zookeeper master:2181,worker1:2181,worker2:2181 --replication-factor 2 --partitions 1 --topic sparkStreamingOnKafkaReceive

2. Start a console producer for this topic:

bin/kafka-console-producer.sh --broker-list master:9092,worker1:9092,worker2:9092 --topic sparkStreamingOnKafkaReceive

3. Run the Spark Streaming program shown below:

import org.apache.spark.streaming.kafka.KafkaUtils
import org.apache.spark.streaming.{Seconds, StreamingContext}
import org.apache.spark.{SparkConf, SparkContext}

object streamingOnKafkaReceive {
  def main(args: Array[String]): Unit = {
    // local[4]: the receiver occupies one thread, the others process the batches
    val conf = new SparkConf().setMaster("local[4]").setAppName("streamingOnKafkaReceive")
    val sc = new SparkContext(conf)
    // 6-second batch interval
    val ssc = new StreamingContext(sc, Seconds(6))
    ssc.checkpoint("/Res")

    // Topic name -> number of consumer threads used by the receiver
    val topic = Map("sparkStreamingOnKafkaReceive" -> 2)
    // Arguments: ZooKeeper quorum, consumer group id, topic map; keep only the message value
    val lines = KafkaUtils.createStream(ssc, "master:2181,worker1:2181,worker2:2181", "MyStreamingGroup", topic).map(_._2)

    // Word count over each batch
    val words = lines.flatMap(_.split(" "))
    val wordCount = words.map(x => (x, 1)).reduceByKey(_ + _)
    wordCount.print()

    ssc.start()
    ssc.awaitTermination()
  }
}

4. Type a few arbitrary strings into the producer console and check the output printed by the running program.
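
To make the expected result concrete, the per-batch computation can be reproduced on plain Scala collections, without Spark or Kafka. This is only a sketch under stated assumptions: the object name `BatchWordCountSketch`, the helper `countWords`, and the two sample input lines are hypothetical and do not come from the program above. It mirrors the `flatMap` / `map` / `reduceByKey` chain for the lines that arrive within a single batch.

```scala
object BatchWordCountSketch {
  // Hypothetical helper: counts words in the lines of one batch,
  // mirroring flatMap(_.split(" ")).map(x => (x, 1)).reduceByKey(_ + _).
  def countWords(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split(" "))                 // split every line into words
      .groupBy(identity)                     // group equal words together
      .map { case (word, occurrences) => (word, occurrences.size) }

  def main(args: Array[String]): Unit = {
    // Assumed sample input: two lines typed into the console producer
    // within the same batch interval.
    val batch = Seq("hello spark", "hello kafka")
    // Prints (hello,2), (kafka,1), (spark,1), one pair per line.
    countWords(batch).toSeq.sortBy(_._1).foreach(println)
  }
}
```

In the streaming program the counts are computed separately for each 6-second batch, so a word typed in two different batches is reported once per batch rather than accumulated.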

Example 2----DirectStream

import kafka.serializer.StringDecoder
import org.apache.spark.streaming._
import org.apache.spark.streaming.kafka._
import org.apache.spark.SparkConf

object DirectKafkaWordCount {
  def main(args: Array[String]): Unit = {
    if (args.length < 2) {
      System.err.println(s"""
        |Usage: DirectKafkaWordCount <brokers> <topics>
        |  <brokers> is a list of one or more Kafka brokers
        |  <topics> is a list of one or more kafka topics to consume from
        |
        """.stripMargin)
      System.exit(1)
    }

    val Array(brokers, topics) = args

    // Create context with 2 second batch interval
    val sparkConf = new SparkConf().setAppName("DirectKafkaWordCount").setMaster("local[2]")
    val ssc = new StreamingContext(sparkConf, Seconds(2))

    // Create direct kafka stream with brokers and topics
    val topicsSet = topics.split(",").toSet
    val kafkaParams = Map[String, String]("metadata.broker.list" -> brokers)
    val messages = KafkaUtils.createDirectStream[String, String, StringDecoder, StringDecoder](
      ssc, kafkaParams, topicsSet)

    // Get the lines, split them into words, count the words and print
    val lines = messages.map(_._2)
    val words = lines.flatMap(_.split(" "))
    val wordCounts = words.map(x => (x, 1L)).reduceByKey(_ + _)
    wordCounts.print()

    // Start the computation
    ssc.start()
    ssc.awaitTermination()
  }
}
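
The program above expects two command-line arguments, and the way they become the inputs of `createDirectStream` can be tried in isolation. The following is a minimal sketch in plain Scala, with no Spark or Kafka dependency. The object name `DirectStreamArgsSketch` and the helper `parse` are hypothetical, and the argument values are an assumption: they reuse the broker list and topic from the producer command in Example 1.

```scala
object DirectStreamArgsSketch {
  // Hypothetical helper: repeats the argument handling of DirectKafkaWordCount
  // and returns the topic set and the Kafka parameter map it would build.
  def parse(args: Array[String]): (Set[String], Map[String, String]) = {
    val Array(brokers, topics) = args
    val topicsSet = topics.split(",").toSet
    val kafkaParams = Map[String, String]("metadata.broker.list" -> brokers)
    (topicsSet, kafkaParams)
  }

  def main(args: Array[String]): Unit = {
    // Assumed values: broker list and topic taken from Example 1.
    val (topicsSet, kafkaParams) = parse(Array(
      "master:9092,worker1:9092,worker2:9092",
      "sparkStreamingOnKafkaReceive"))
    println(topicsSet)
    println(kafkaParams)
  }
}
```

Note that the first argument is the Kafka broker list (port 9092 in this setup), not the ZooKeeper quorum that `createStream` receives in Example 1.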
