SparkML之回归(三)保序回归

在写這篇博客的时候,翻阅了一些互联网上的资料,发现文献[1]写的比较系统。所以推荐大家读读文献[1].但是出现了一些错误,所以我在此简述一些。如果推理不过去了。可以看看我的简述。

------------------------------------前言

背景:

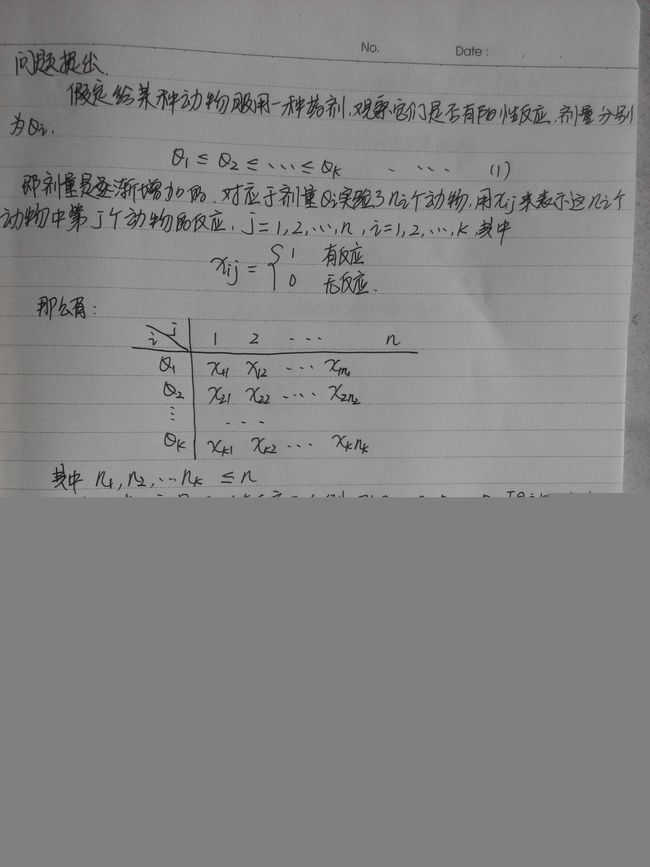

(1)在医学领域药物剂量反应中,随着药物剂量的增加,疗效和副作用会呈现一定趋势。比如剂量越高,疗效越

高,剂量越高,毒性越大等

(2)评估药物在不同剂量水平下的毒性,并且建议一个对病人既安全又有效的剂量称为最大耐受剂量(Maximum Tolerated Dose)简称 MTD。

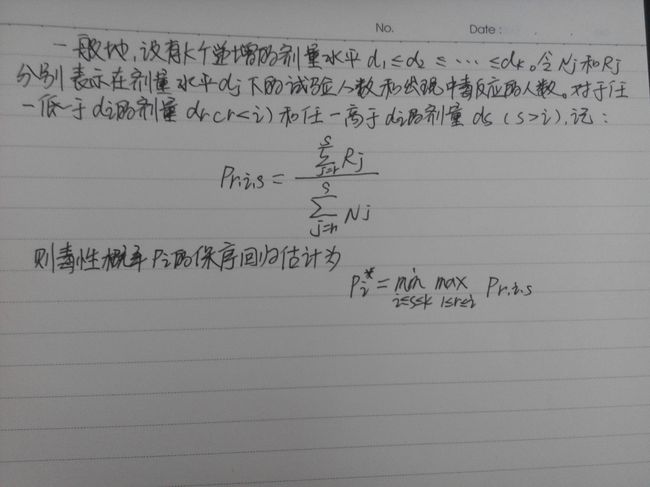

(3)随着药物的增加,药物的毒性是非减的。MTD被定义为毒性概率不超过毒性靶水平的最高剂量水平

(4)基于每个剂量水平下病人的毒性反应的比率估计不同,剂量水平下的毒性概率可能不是剂量水平的非减函

数,于是我们可以采用保序回归的方法

L2保序回归

L2保序回归算法

一些具体的定义和命题查看文献[1]

Spark源码分析(大图见附录)

/**

* 保序回归模型

*

* @param boundaries 用于预测的边界数组,它必须是排好顺序的。(分段函数的分段点数组)

* @param predictions 保序回归的结果,即分段点x对应的预测值

* @param isotonic 升序还是降序(true为升)

*/

@Since("1.3.0")

class IsotonicRegressionModel @Since("1.3.0") (

@Since("1.3.0") val boundaries: Array[Double],

@Since("1.3.0") val predictions: Array[Double],

@Since("1.3.0") val isotonic: Boolean) extends Serializable with Saveable {

private val predictionOrd = if (isotonic) Ordering[Double] else Ordering[Double].reverse

require(boundaries.length == predictions.length)

assertOrdered(boundaries)

assertOrdered(predictions)(predictionOrd)

/**

* A Java-friendly constructor that takes two Iterable parameters and one Boolean parameter.

*/

@Since("1.4.0")

def this(boundaries: java.lang.Iterable[Double],

predictions: java.lang.Iterable[Double],

isotonic: java.lang.Boolean) = {

this(boundaries.asScala.toArray, predictions.asScala.toArray, isotonic)

}

/** 序列顺序的检测 */

private def assertOrdered(xs: Array[Double])(implicit ord: Ordering[Double]): Unit = {

var i = 1

val len = xs.length

while (i < len) {

require(ord.compare(xs(i - 1), xs(i)) <= 0,

s"Elements (${xs(i - 1)}, ${xs(i)}) are not ordered.")

i += 1

}

}

/**

* 利用分段函数的线性函数,输入feature进行预测

*

* @param testData Features to be labeled.

* @return Predicted labels.

*

*/

@Since("1.3.0")

def predict(testData: RDD[Double]): RDD[Double] = {

testData.map(predict)

}

/**

* 利用分段函数的线性函数,输入feature进行预测

*

* @param testData Features to be labeled.

* @return Predicted labels.

*

*/

@Since("1.3.0")

def predict(testData: JavaDoubleRDD): JavaDoubleRDD = {

JavaDoubleRDD.fromRDD(predict(testData.rdd.retag.asInstanceOf[RDD[Double]]))

}

/**

* 利用分段函数的线性函数,输入feature进行预测

*

* @param testData Feature to be labeled.

* @return Predicted label.

* 1) 如果testdata可以精确匹配到一个边界数组,那么就返回对应的数值,如果多个,那么随机返回一个

* 2) 如果testdata 低于或者高于所有的边界数组,那么返回第一个或者最后一个If testData is lower or higher than all boundaries then first or last prediction

* 3) 如果testdat在两个边界数组之间,那么采用分段函数的线性插值方法得到的数值

*

*/

@Since("1.3.0")

def predict(testData: Double): Double = {

def linearInterpolation(x1: Double, y1: Double, x2: Double, y2: Double, x: Double): Double = {

y1 + (y2 - y1) * (x - x1) / (x2 - x1)

}

val foundIndex = binarySearch(boundaries, testData)

val insertIndex = -foundIndex - 1

// Find if the index was lower than all values,

// higher than all values, in between two values or exact match.

if (insertIndex == 0) {

predictions.head

} else if (insertIndex == boundaries.length) {

predictions.last

} else if (foundIndex < 0) {

linearInterpolation(

boundaries(insertIndex - 1),

predictions(insertIndex - 1),

boundaries(insertIndex),

predictions(insertIndex),

testData)

} else {

predictions(foundIndex)

}

}

/** A convenient method for boundaries called by the Python API. */

private[mllib] def boundaryVector: Vector = Vectors.dense(boundaries)

/** A convenient method for boundaries called by the Python API. */

private[mllib] def predictionVector: Vector = Vectors.dense(predictions)

@Since("1.4.0")

override def save(sc: SparkContext, path: String): Unit = {

IsotonicRegressionModel.SaveLoadV1_0.save(sc, path, boundaries, predictions, isotonic)

}

override protected def formatVersion: String = "1.0"

}

@Since("1.4.0")

object IsotonicRegressionModel extends Loader[IsotonicRegressionModel] {

import org.apache.spark.mllib.util.Loader._

private object SaveLoadV1_0 {

def thisFormatVersion: String = "1.0"

/** Hard-code class name string in case it changes in the future */

def thisClassName: String = "org.apache.spark.mllib.regression.IsotonicRegressionModel"

/** Model data for model import/export */

case class Data(boundary: Double, prediction: Double)

def save(

sc: SparkContext,

path: String,

boundaries: Array[Double],

predictions: Array[Double],

isotonic: Boolean): Unit = {

val sqlContext = SQLContext.getOrCreate(sc)

val metadata = compact(render(

("class" -> thisClassName) ~ ("version" -> thisFormatVersion) ~

("isotonic" -> isotonic)))

sc.parallelize(Seq(metadata), 1).saveAsTextFile(metadataPath(path))

sqlContext.createDataFrame(

boundaries.toSeq.zip(predictions).map { case (b, p) => Data(b, p) }

).write.parquet(dataPath(path))

}

def load(sc: SparkContext, path: String): (Array[Double], Array[Double]) = {

val sqlContext = SQLContext.getOrCreate(sc)

val dataRDD = sqlContext.read.parquet(dataPath(path))

checkSchema[Data](dataRDD.schema)

val dataArray = dataRDD.select("boundary", "prediction").collect()

val (boundaries, predictions) = dataArray.map { x =>

(x.getDouble(0), x.getDouble(1))

}.toList.sortBy(_._1).unzip

(boundaries.toArray, predictions.toArray)

}

}

@Since("1.4.0")

override def load(sc: SparkContext, path: String): IsotonicRegressionModel = {

implicit val formats = DefaultFormats

val (loadedClassName, version, metadata) = loadMetadata(sc, path)

val isotonic = (metadata \ "isotonic").extract[Boolean]

val classNameV1_0 = SaveLoadV1_0.thisClassName

(loadedClassName, version) match {

case (className, "1.0") if className == classNameV1_0 =>

val (boundaries, predictions) = SaveLoadV1_0.load(sc, path)

new IsotonicRegressionModel(boundaries, predictions, isotonic)

case _ => throw new Exception(

s"IsotonicRegressionModel.load did not recognize model with (className, format version):" +

s"($loadedClassName, $version). Supported:\n" +

s" ($classNameV1_0, 1.0)"

)

}

}

}

/**

* Isotonic regression.

* Currently implemented using parallelized pool adjacent violators algorithm.

* Only univariate (single feature) algorithm supported.

*

* Sequential PAV implementation based on:

* Tibshirani, Ryan J., Holger Hoefling, and Robert Tibshirani.

* "Nearly-isotonic regression." Technometrics 53.1 (2011): 54-61.

* Available from [[http://www.stat.cmu.edu/~ryantibs/papers/neariso.pdf]]

*

* Sequential PAV parallelization based on:

* Kearsley, Anthony J., Richard A. Tapia, and Michael W. Trosset.

* "An approach to parallelizing isotonic regression."

* Applied Mathematics and Parallel Computing. Physica-Verlag HD, 1996. 141-147.

* Available from [[http://softlib.rice.edu/pub/CRPC-TRs/reports/CRPC-TR96640.pdf]]

*

* @see [[http://en.wikipedia.org/wiki/Isotonic_regression Isotonic regression (Wikipedia)]]

*/

@Since("1.3.0")

class IsotonicRegression private (private var isotonic: Boolean) extends Serializable {

/**

* 构建IsotonicRegression实例的默认参数:isotonic = true

*

* @return New instance of IsotonicRegression.

*/

@Since("1.3.0")

def this() = this(true)

/**

* 设置序列的参数(Sets the isotonic parameter).

*

* @param isotonic 序列是递增的还是递减的

* @return This instance of IsotonicRegression.

*/

@Since("1.3.0")

def setIsotonic(isotonic: Boolean): this.type = {

this.isotonic = isotonic

this

}

/**

* 运行保序回归算法,来构建保序回归模型

* @param input 输入一个 RDD 内部数据形式为 tuples (label, feature, weight) ,其中,label 是对每次计算都会改变

* feature 特征变量 你weight 权重(默认为1)

* @return Isotonic regression model.

*/

@Since("1.3.0")

def run(input: RDD[(Double, Double, Double)]): IsotonicRegressionModel = {

val preprocessedInput = if (isotonic) {

input

} else {

input.map(x => (-x._1, x._2, x._3))

}

val pooled = parallelPoolAdjacentViolators(preprocessedInput)

val predictions = if (isotonic) pooled.map(_._1) else pooled.map(-_._1)

val boundaries = pooled.map(_._2)

new IsotonicRegressionModel(boundaries, predictions, isotonic)

}

/**

* Run pool adjacent violators algorithm to obtain isotonic regression model.

*

* @param input JavaRDD of tuples (label, feature, weight) where label is dependent variable

* for which we calculate isotonic regression, feature is independent variable

* and weight represents number of measures with default 1.

* If multiple labels share the same feature value then they are ordered before

* the algorithm is executed.

* @return Isotonic regression model.

*/

@Since("1.3.0")

def run(input: JavaRDD[(JDouble, JDouble, JDouble)]): IsotonicRegressionModel = {

run(input.rdd.retag.asInstanceOf[RDD[(Double, Double, Double)]])

}

/**

* Performs a pool adjacent violators algorithm (PAV算法).

* @param input 输入的数据 形式为: (label, feature, weight).

* @return 按照保序回归的定义,返回一个有序的序列

*/

private def poolAdjacentViolators(

input: Array[(Double, Double, Double)]): Array[(Double, Double, Double)] = {

if (input.isEmpty) {

return Array.empty

}

// Pools sub array within given bounds assigning weighted average value to all elements.

def pool(input: Array[(Double, Double, Double)], start: Int, end: Int): Unit = {

val poolSubArray = input.slice(start, end + 1)

val weightedSum = poolSubArray.map(lp => lp._1 * lp._3).sum

val weight = poolSubArray.map(_._3).sum

var i = start

while (i <= end) {

input(i) = (weightedSum / weight, input(i)._2, input(i)._3)

i = i + 1

}

}

var i = 0

val len = input.length

while (i < len) {

var j = i

// Find monotonicity violating sequence, if any.

while (j < len - 1 && input(j)._1 > input(j + 1)._1) {

j = j + 1

}

// If monotonicity was not violated, move to next data point.

if (i == j) {

i = i + 1

} else {

// Otherwise pool the violating sequence

// and check if pooling caused monotonicity violation in previously processed points.

while (i >= 0 && input(i)._1 > input(i + 1)._1) {

pool(input, i, j)

i = i - 1

}

i = j

}

}

// For points having the same prediction, we only keep two boundary points.

val compressed = ArrayBuffer.empty[(Double, Double, Double)]

var (curLabel, curFeature, curWeight) = input.head

var rightBound = curFeature

def merge(): Unit = {

compressed += ((curLabel, curFeature, curWeight))

if (rightBound > curFeature) {

compressed += ((curLabel, rightBound, 0.0))

}

}

i = 1

while (i < input.length) {

val (label, feature, weight) = input(i)

if (label == curLabel) {

curWeight += weight

rightBound = feature

} else {

merge()

curLabel = label

curFeature = feature

curWeight = weight

rightBound = curFeature

}

i += 1

}

merge()

compressed.toArray

}

/**

* Performs并行PAV算法实现

* 将pav应用在每个分区,之后再进行合并。

* @param input Input data of tuples (label, feature, weight).

* @return Result tuples (label, feature, weight) where labels were updated

* to form a monotone sequence as per isotonic regression definition.

*/

private def parallelPoolAdjacentViolators(

input: RDD[(Double, Double, Double)]): Array[(Double, Double, Double)] = {

val parallelStepResult = input

.sortBy(x => (x._2, x._1))

.glom()

.flatMap(poolAdjacentViolators)

.collect()

.sortBy(x => (x._2, x._1)) // Sort again because collect() doesn't promise ordering.

poolAdjacentViolators(parallelStepResult)

}

}

spark实验

import org.apache.spark.mllib.regression.{IsotonicRegression, IsotonicRegressionModel} import org.apache.spark.{SparkConf, SparkContext} object IsotonicRegressionExample { def main(args: Array[String]) { val conf = new SparkConf().setAppName("IsotonicRegressionExample").setMaster("local") val sc = new SparkContext(conf) val data = sc.textFile("C:\\Users\\alienware\\IdeaProjects\\sparkCore\\data\\mllib\\sample_isotonic_regression_data.txt") // Create label, feature, weight tuples from input data with weight set to default value 1.0. val parsedData = data.map { line => val parts = line.split(',').map(_.toDouble) (parts(0), parts(1), 1.0) } // Split data into training (60%) and test (40%) sets. val splits = parsedData.randomSplit(Array(0.6, 0.4), seed = 11L) val training = splits(0) val test = splits(1) // Create isotonic regression model from training data. // Isotonic parameter defaults to true so it is only shown for demonstration val model = new IsotonicRegression().setIsotonic(true).run(training) // Create tuples of predicted and real labels. val predictionAndLabel = test.map { point => val predictedLabel = model.predict(point._2) (predictedLabel, point._1) } //predictionAndLabel.foreach(println) /** * (0.16868944399999988,0.31208567) (0.16868944399999988,0.35900051) (0.16868944399999988,0.03926568) (0.16868944399999988,0.12952575) (0.16868944399999988,0.0) (0.16868944399999988,0.01376849) (0.16868944399999988,0.13105558) (0.19545421571428565,0.13717491) (0.19545421571428565,0.19020908) (0.19545421571428565,0.19581846) (0.31718510999999966,0.29576747) (0.5322114566666667,0.4854666) (0.5368859433333334,0.49209587) (0.5602243760000001,0.5017848) (0.5701674724126985,0.58286588) (0.5801105688253968,0.64660887) (0.5900536652380952,0.65782764) (0.5900536652380952,0.63029067) (0.5900536652380952,0.63233044) (0.5900536652380952,0.33299337) (0.5900536652380952,0.36206017) (0.5900536652380952,0.56348802) (0.5900536652380952,0.48393677) (0.5900536652380952,0.46965834) (0.5900536652380952,0.45843957) (0.5900536652380952,0.47118817) (0.5900536652380952,0.51555329) (0.5900536652380952,0.56297807) (0.6881693,0.65119837) (0.7135390099999999,0.66598674) (0.861295255,0.91330954) (0.903875573,0.90719021) (0.9275879659999999,0.93115757) (0.9275879659999999,0.91942886) */ // Calculate mean squared error between predicted and real labels. val meanSquaredError = predictionAndLabel.map { case (p, l) => math.pow((p - l), 2) }.mean() println("Mean Squared Error = " + meanSquaredError) //Mean Squared Error = 0.010049744711808193 // Save and load model model.save(sc, "target/tmp/myIsotonicRegressionModel") val sameModel = IsotonicRegressionModel.load(sc, "target/tmp/myIsotonicRegressionModel") } }

参考文献

1、http://wenku.baidu.com/link?url=rbcbI3L7M83F62Aey_kyGZk7kwuJxr5ZW61EqFH5T45umsdZOCrAbfpl8a1yuMyzObd1_kG-kQ9DPcSTl7wnoX6UyNN_gT5bBYh_p1yMgD7url=rbcbI3L7M83F62Aey_kyGZk7kwuJxr5ZW61EqFH5T45umsdZOCrAbfpl8a1yuMyzObd1_kG-kQ9DPcSTl7wnoX6UyNN_gT5bBYh_p1yMgD7

附录

链接:http://pan.baidu.com/s/1i4DwQs1 密码:moor