Spark Components: Learning SparkR, Part 2: Using spark-submit to Submit the R Script dataframe.R to a Cluster

For more code, see: https://github.com/xubo245/SparkLearning

Environment:

Spark 1.5.2, R 3.2.1

1. Example 1: dataframe.R

1.1 File source: see reference [1]

Running the example as shipped (./bin/spark-submit examples/src/main/r/dataframe.R) fails with the following error:

hadoop@Master:~/cloud/testByXubo/spark/R$ spark-submit dataframe.R

WARNING: ignoring environment value of R_HOME

Loading required package: methods

Attaching package: ‘SparkR’

The following objects are masked from ‘package:stats’:

filter, na.omit

The following objects are masked from ‘package:base’:

intersect, rbind, sample, subset, summary, table, transform

root

|-- name: string (nullable = true)

|-- age: double (nullable = true)

16/04/20 11:14:25 ERROR RBackendHandler: jsonFile on 1 failed

Error in invokeJava(isStatic = FALSE, objId$id, methodName, ...) :

java.io.IOException: No input paths specified in job

at org.apache.hadoop.mapred.FileInputFormat.listStatus(FileInputFormat.java:201)

at org.apache.hadoop.mapred.FileInputFormat.getSplits(FileInputFormat.java:313)

at org.apache.spark.rdd.HadoopRDD.getPartitions(HadoopRDD.scala:207)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:239)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:237)

at scala.Option.getOrElse(Option.scala:120)

at org.apache.spark.rdd.RDD.partitions(RDD.scala:237)

at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:35)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:239)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:237)

at scala.Option.getOrElse(Option.scala:120)

at org.apache.spark.rdd.RDD.partitions(RDD.scala:237)

at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:35)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2

Calls: jsonFile -> callJMethod -> invokeJava

Execution halted
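The stack trace shows the exception coming from `FileInputFormat.listStatus`, which means the JSON reader was not handed a usable input path. The post does not say which path was at fault, so the following is only a pre-flight sketch, assuming the input file lives on the local filesystem. The function name `check_input_path` is hypothetical and not part of Spark.

```shell
# Hypothetical helper (not part of Spark): fail early when the input file
# that the R script will read is missing or the path string is empty.
check_input_path() {
    input="$1"
    if [ -z "$input" ]; then
        echo "empty path"
        return 1
    fi
    if [ ! -e "$input" ]; then
        echo "missing: $input"
        return 1
    fi
    echo "ok: $input"
}
```

It could be chained in front of the submission, for example `check_input_path "$SPARK_HOME/examples/src/main/resources/people.json" && spark-submit dataframe.R`. For a file stored on HDFS, `hdfs dfs -test -e <path>` serves the same purpose.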

1.2 Code:

hadoop@Master:~/cloud/testByXubo/spark/R$ cat dataframe.R

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

library(SparkR)

# Initialize SparkContext and SQLContext

sc <- sparkR.init(appName="SparkR-DataFrame-example")

sqlContext <- sparkRSQL.init(sc)

# Create a simple local data.frame

localDF <- data.frame(name=c("John", "Smith", "Sarah"), age=c(19, 23, 18))

# Convert local data frame to a SparkR DataFrame

df <- createDataFrame(sqlContext, localDF)

# Print its schema

printSchema(df)

# root

# |-- name: string (nullable = true)

# |-- age: double (nullable = true)

# Create a DataFrame from a JSON file

path <- file.path("/examples/src/main/resources/people.json")

peopleDF <- jsonFile(sqlContext, path)

printSchema(peopleDF)

# Register this DataFrame as a table.

registerTempTable(peopleDF, "people")

# SQL statements can be run by using the sql methods provided by sqlContext

teenagers <- sql(sqlContext, "SELECT name FROM people WHERE age >= 13 AND age <= 19")

# Call collect to get a local data.frame

teenagersLocalDF <- collect(teenagers)

# Print the teenagers in our dataset

print(teenagersLocalDF)

# Stop the SparkContext now

sparkR.stop()

1.3 Run commands:

spark-submit --master spark://Master:7077 dataframe.R

or

spark-submit dataframe.R
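The two invocations differ only in whether `--master` is passed. When it is omitted, spark-submit falls back to any `spark.master` set in its configuration and otherwise to local mode. Below is a small sketch of a wrapper that makes the choice explicit. The function name `submit_cmd` is hypothetical, and it only prints the command so that it can be inspected before being run.

```shell
# Hypothetical wrapper: print the spark-submit command for an R script.
#   $1 = path to the R script
#   $2 = optional master URL (e.g. spark://Master:7077)
submit_cmd() {
    script="$1"
    master="${2:-}"
    if [ -n "$master" ]; then
        # Explicit master: the job is submitted to that cluster.
        echo "spark-submit --master $master $script"
    else
        # No master given: spark-submit uses its own default.
        echo "spark-submit $script"
    fi
}
```

For example, `submit_cmd dataframe.R spark://Master:7077` prints the first command above, and `submit_cmd dataframe.R` prints the second.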

1.4 Results:

hadoop@Master:~/cloud/testByXubo/spark/R$ spark-submit --master spark://219.219.220.149:7077 dataframe.R

WARNING: ignoring environment value of R_HOME

Loading required package: methods

Attaching package: ‘SparkR’

The following objects are masked from ‘package:stats’:

filter, na.omit

The following objects are masked from ‘package:base’:

intersect, rbind, sample, subset, summary, table, transform

root

|-- name: string (nullable = true)

|-- age: double (nullable = true)

root

|-- age: long (nullable = true)

|-- name: string (nullable = true)

name

1 Justin

Or with the default master:

hadoop@Master:~/cloud/testByXubo/spark/R$ spark-submit dataframe.R

WARNING: ignoring environment value of R_HOME

Loading required package: methods

Attaching package: ‘SparkR’

The following objects are masked from ‘package:stats’:

filter, na.omit

The following objects are masked from ‘package:base’:

intersect, rbind, sample, subset, summary, table, transform

root

|-- name: string (nullable = true)

|-- age: double (nullable = true)

root

|-- age: long (nullable = true)

|-- name: string (nullable = true)

name

1 Justin

1.5 Analysis

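1.5.1 By default, the job runs locally:

In the application list, the run without `--master` has an App ID beginning with `local-`, whereas the run submitted to the cluster has one beginning with `app-`.

| App ID | App Name | Started | Completed | Duration | Spark User | Last Updated |
| --- | --- | --- | --- | --- | --- | --- |
| local-1461125367768 | SparkR-DataFrame-example | 2016/04/20 12:09:25 | 2016/04/20 12:09:32 | 7 s | hadoop | 2016/04/20 12:09:32 |
| app-20160420111855-0007 | SparkR-DataFrame-example | 2016/04/20 11:18:52 | 2016/04/20 11:19:10 | 17 s | hadoop | 2016/04/20 11:19:10 |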

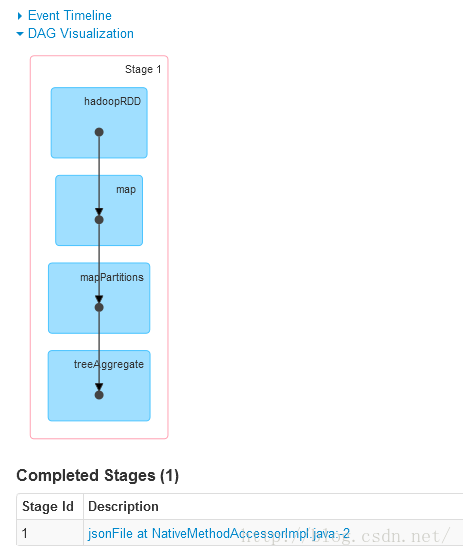

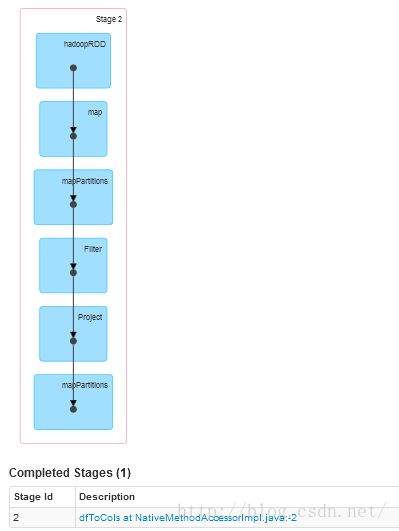
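The App ID prefix in the listing above is a quick way to tell where a run executed. Here is a minimal sketch that classifies an ID by prefix, assuming Spark's usual naming (`local-` for local mode, `app-` for a standalone cluster, `application_` for YARN). The function name `classify_app_id` is hypothetical.

```shell
# Hypothetical helper: guess where a Spark application ran from its App ID.
classify_app_id() {
    case "$1" in
        local-*)       echo "local" ;;       # local mode
        app-*)         echo "standalone" ;;  # standalone cluster master
        application_*) echo "yarn" ;;        # YARN application ID
        *)             echo "unknown" ;;
    esac
}
```

Applied to the two IDs in the table, `classify_app_id local-1461125367768` reports a local run and `classify_app_id app-20160420111855-0007` reports a standalone-cluster run.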

1.5.2 Execution involves 3 stages:

References:

[1] https://github.com/apache/spark/tree/master/R