pyspark特征工程常用方法(一)

本文记录特征工程中常用的五种方法:MinMaxScaler,Normalization,OneHotEncoding,PCA以及QuantileDiscretizer 用于分箱

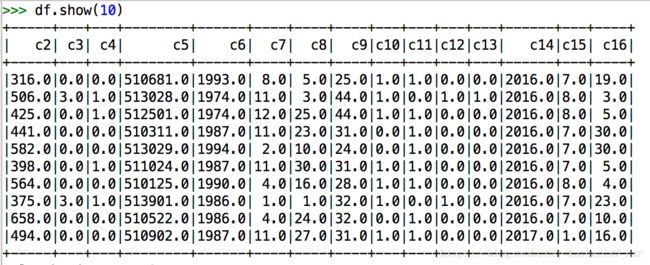

原有数据集如下图:

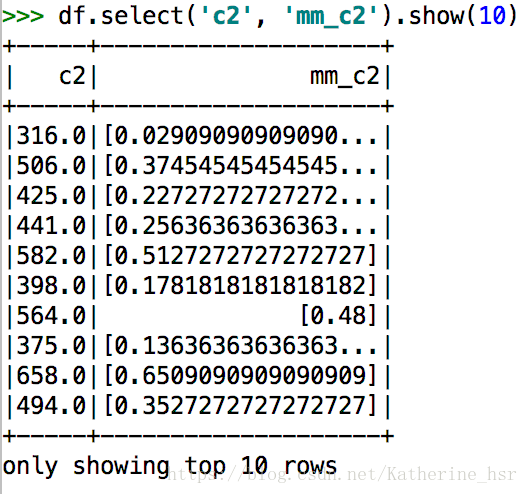

1. MinMaxScaler

from pyspark.ml.feature import MinMaxScaler

# 首先将c2列转换为vector的形式

vecAssembler = VectorAssembler(inputCols=["c2"], outputCol="c2_new")

# minmax tranform

mmScaler = MinMaxScaler(inputCol='c2_new', outputCol='mm_c2')

pipeline = Pipeline(stages=[vecAssembler, mmScaler])

pipeline_fit = pipeline.fit(df)

df = pipeline_fit.transform(df)2. Normalization

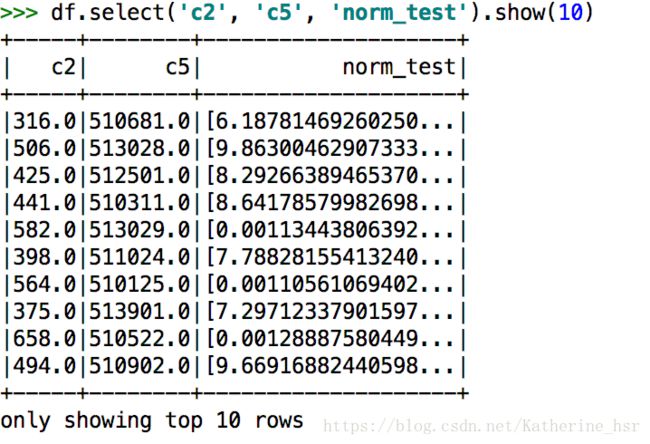

from pyspark.ml.feature import Normalizer

vecAssembler = VectorAssembler(inputCols=['c2', 'c5'], outputCol="norm_features")

normalizer = Normalizer(p=2.0, inputCol="norm_features", outputCol="norm_test")

pipeline = Pipeline(stages=[vecAssembler, normalizer])

pipeline_fit = pipeline.fit(df)

df = pipeline_fit.transform(df)3. OneHotEncoding

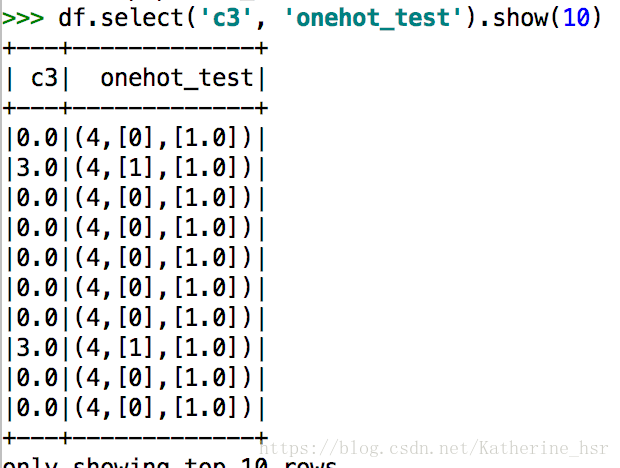

使用OneHotEncoder

from pyspark.ml.feature import OneHotEncoder

from pyspark.ml.feature import StringIndexer

stringindexer = StringIndexer(inputCol='c3', outputCol='onehot_feature')

encoder = OneHotEncoder(dropLast=False, inputCols='onehot_feature', outputCols='onehot_test')

pipeline = Pipeline(stages=[stringindexer, encoder])

pipeline_fit = pipeline.fit(df)

df = pipeline_fit.transform(df)转换结果如图:

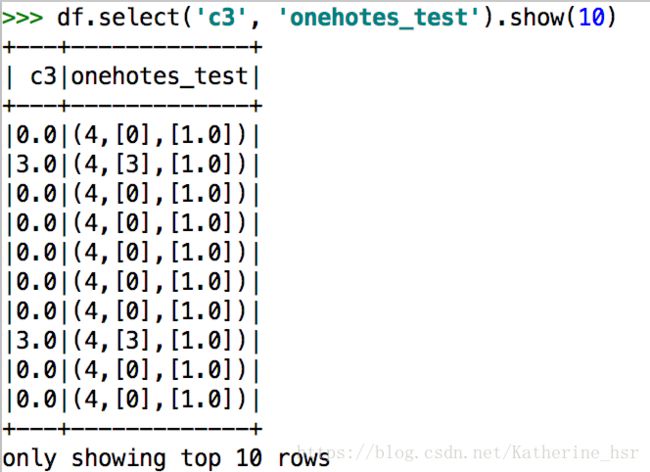

使用OneHotEncoderEstimator

from pyspark.ml.feature import OneHotEncoderEstimator

encoder = OneHotEncoderEstimator(inputCols=['c3'], outputCols=['onehotes_test'], dropLast=False)

pipeline = Pipeline(stages=[vecAssembler, encoder])

pipeline_fit = pipeline.fit(df)

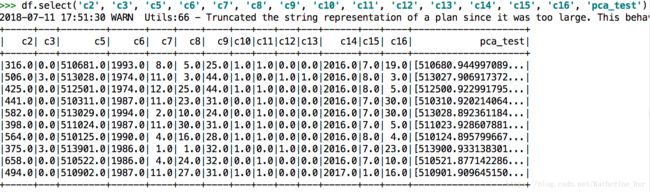

df = pipeline_fit.transform(df)4. PCA

from pyspark.ml.feature import PCA

from pyspark.ml.feature import VectorAssembler

input_col = ['c2', 'c3', 'c5', 'c6', 'c7', 'c8', 'c9', 'c10', 'c11', 'c12', 'c13', 'c14', 'c15', 'c16']

vecAssembler = VectorAssembler(inputCols=input_col, outputCol='features')

pca = PCA(k=5, inputCol = 'features', outputCol='pca_test')

pipeline = Pipeline(stages=[vecAssembler, pca])

pipeline_fit = pipeline.fit(df)

df = pipeline_fit.transform(df)5. QuantileDiscretizer 用于分箱

对于空值的处理 handleInvalid = ‘keep’时,可以将空值单独分到一箱

# (5)QuantileDiscretizer 用于分箱, 对于空值的处理 handleInvalid = 'keep'时,可以将空值单独分到一箱

from pyspark.ml.feature import QuantileDiscretizer

quantileDiscretizer = QuantileDiscretizer(numBuckets=4, inputCol='c2', outputCol='quantile_c2', relativeError=0.01, handleInvalid='error')

quantileDiscretizer_model = quantileDiscretizer.fit(df)

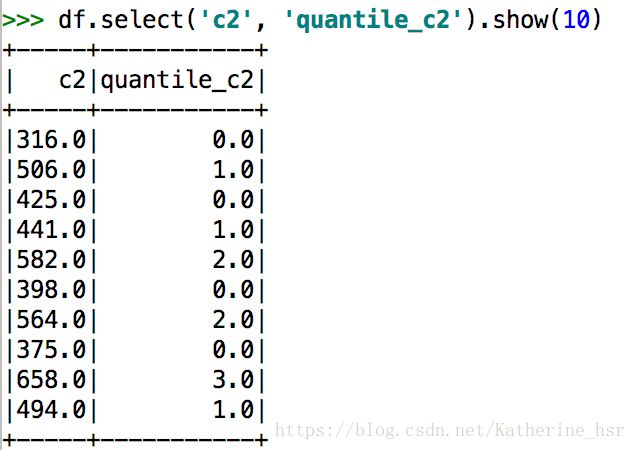

df = quantileDiscretizer_model.transform(df)

df = quantileDiscretizer.setHandleInvalid("keep").fit(df).transform(df) # 保留空值

df = quantileDiscretizer.setHandleInvalid("skip").fit(df).transform(df) # 不要空值

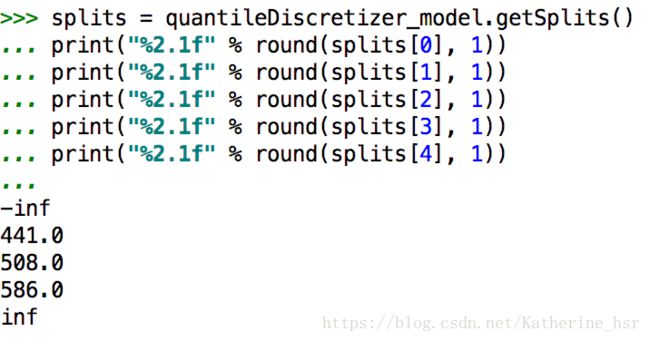

# 查看分箱点

splits = quantileDiscretizer_model.getSplits()

print("%2.1f" % round(splits[0], 1))

print("%2.1f" % round(splits[1], 1))

print("%2.1f" % round(splits[2], 1))

print("%2.1f" % round(splits[3], 1))

print("%2.1f" % round(splits[4], 1))