【部分原创】python实现视频内的face swap(换脸)

1.准备工作,按博主的环境为准

Python 3.5

Opencv 3

Tensorflow 1.3.1

Keras 2

cudnn和CUDA,如果你的GPU足够厉害并且支持的话,可以选择安装

那就先安装起来,有兴趣的朋友给我个暗示,好让我有动力写下去,想实现整套的功能还是有点复杂的

第一部分,数据采集,及视频内人物脸

![]()

import cv2

save_path = 'your save path'

cascade = cv2.CascadeClassifier('haarcascade_frontalface_alt.xml path')

cap = cv2.VideoCapture('your video path')

i = 0

while True:

ret,frame = cap.read()

gray = cv2.cvtColor(frame,cv2.COLOR_BGR2GRAY)

rect = cascade.detectMultiScale(gray,scaleFactor=1.3,minNeighbors=9,minSize=(50,50),flags = cv2.CASCADE_SCALE_IMAGE)

print ("rect",rect)

if not rect is ():

for x,y,z,w in rect:

roiImg = frame[y:y+w,x:x+z]

cv2.imwrite(save_path+str(i)+'.jpg',roiImg)

cv2.rectangle(frame,(x,y),(x+z,y+w),(0,0,255),2)

i +=1

cv2.imshow('frame',frame)

if cv2.waitKey(1) &0xFF == ord('q'):

break

cap.release()

cv2.destroyAllWindows()

![]()

第二部分,国外大神开源代码,用于模型训练

![]()

import cv2

import numpy

from utils import get_image_paths, load_images, stack_images

from training_data import get_training_data

from model import autoencoder_A

from model import autoencoder_B

from model import encoder, decoder_A, decoder_B

try:

encoder .load_weights( "models/encoder.h5" )

decoder_A.load_weights( "models/decoder_A.h5" )

decoder_B.load_weights( "models/decoder_B.h5" )

except:

pass

def save_model_weights():

encoder .save_weights( "models/encoder.h5" )

decoder_A.save_weights( "models/decoder_A.h5" )

decoder_B.save_weights( "models/decoder_B.h5" )

print( "save model weights" )

images_A = get_image_paths( "data/trump" )

images_B = get_image_paths( "data/cage" )

images_A = load_images( images_A ) / 255.0

images_B = load_images( images_B ) / 255.0

images_A += images_B.mean( axis=(0,1,2) ) - images_A.mean( axis=(0,1,2) )

print( "press 'q' to stop training and save model" )

for epoch in range(1000000):

batch_size = 64

warped_A, target_A = get_training_data( images_A, batch_size )

warped_B, target_B = get_training_data( images_B, batch_size )

loss_A = autoencoder_A.train_on_batch( warped_A, target_A )

loss_B = autoencoder_B.train_on_batch( warped_B, target_B )

print( loss_A, loss_B )

if epoch % 100 == 0:

save_model_weights()

test_A = target_A[0:14]

test_B = target_B[0:14]

figure_A = numpy.stack([

test_A,

autoencoder_A.predict( test_A ),

autoencoder_B.predict( test_A ),

], axis=1 )

figure_B = numpy.stack([

test_B,

autoencoder_B.predict( test_B ),

autoencoder_A.predict( test_B ),

], axis=1 )

figure = numpy.concatenate( [ figure_A, figure_B ], axis=0 )

figure = figure.reshape( (4,7) + figure.shape[1:] )

figure = stack_images( figure )

figure = numpy.clip( figure * 255, 0, 255 ).astype('uint8')

cv2.imshow( "", figure )

key = cv2.waitKey(1)

if key == ord('q'):

save_model_weights()

exit()

![]()

第三部分,国外大神开源代码,人脸输出

![]()

import cv2

import numpy

from pathlib import Path

from utils import get_image_paths

from model import autoencoder_A

from model import autoencoder_B

from model import encoder, decoder_A, decoder_B

encoder .load_weights( "models/encoder.h5" )

decoder_A.load_weights( "models/decoder_A.h5" )

decoder_B.load_weights( "models/decoder_B.h5" )

images_A = get_image_paths( "data/trump" )

images_B = get_image_paths( "data/cage" )

def convert_one_image( autoencoder, image ):

assert image.shape == (256,256,3)

crop = slice(48,208)

face = image[crop,crop]

face = cv2.resize( face, (64,64) )

face = numpy.expand_dims( face, 0 )

new_face = autoencoder.predict( face / 255.0 )[0]

new_face = numpy.clip( new_face * 255, 0, 255 ).astype( image.dtype )

new_face = cv2.resize( new_face, (160,160) )

new_image = image.copy()

new_image[crop,crop] = new_face

return new_image

output_dir = Path( 'output' )

output_dir.mkdir( parents=True, exist_ok=True )

for fn in images_A:

image = cv2.imread(fn)

new_image = convert_one_image( autoencoder_B, image )

output_file = output_dir / Path(fn).name

cv2.imwrite( str(output_file), new_image )

![]()

第四部分,人脸替换

![]()

#import necessary libraries

import cv2

import glob as gb

# import numpy

#capture video from the webcam

cap = cv2.VideoCapture('your video path')

fourcc = cv2.VideoWriter_fourcc(*'XVID')

out = cv2.VideoWriter('your output video path', fourcc, 20.0, (1920, 1080))

#load the face finder

face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_alt.xml path')

#load the face that will be swapped in

img_path = gb.glob("your image path")

#start loop

for path in img_path:

face_img = cv2.imread(path)

while True:

ret, img = cap.read() # read image

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

faces = face_cascade.detectMultiScale(gray, 1.3, 3) # find faces

# for all the faces found in the frame

for (x, y, w, h) in faces:

# resize and blend the face to be swapped in

face = cv2.resize(face_img, (h, w), interpolation=cv2.INTER_CUBIC)

face = cv2.addWeighted(img[y:y + h, x:x + w], .5, face, .5, 1)

# swap faces

img[y:y + h, x:x + w] = face

out.write(img)

# show the image

cv2.imshow('img', img)

key = cv2.waitKey(1)

if key == ord('q'):

exit()

cap.release()

cv2.destroyAllWindows()

![]()

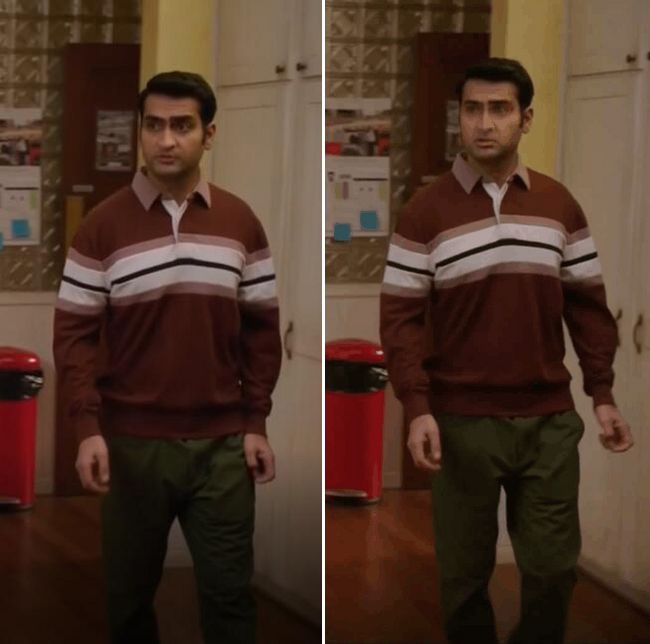

最后放一张训练一小时后的视频截图,用的是尼古拉斯凯奇的脸

面朝大海--\(˙<>˙)/--落霞与孤鹜齐飞