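# encoding=utf-8
# Written for TensorFlow 1.x (tf.placeholder, tf.Session, tensorflow.examples.tutorials);
# these are not available in the TensorFlow 2.x top-level API.

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

# Downloads MNIST into MNIST_data/ if it is not already there; labels are one-hot vectors of length 10.
mnist = input_data.read_data_sets('MNIST_data/', one_hot=True)


def weight_variable(shape):
    # Weights start from a truncated normal distribution (stddev 0.1) to break symmetry.
    initial = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(initial)


def bias_variable(shape):
    # Biases start at a small positive constant so ReLU units are initially active.
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)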
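`tf.truncated_normal` samples from a normal distribution but drops and redraws any value more than two standard deviations from the mean, so every initial weight produced by `weight_variable` lies within ±0.2. The sketch below imitates that rule with the standard library's `random` module. The function name `truncated_normal` and its rejection loop are illustrative assumptions, not TensorFlow's implementation.

```python
import random


def truncated_normal(n, mean=0.0, stddev=0.1, seed=None):
    """Sample n values from N(mean, stddev^2), redrawing any value more than
    2 standard deviations from the mean (the rule tf.truncated_normal applies)."""
    rng = random.Random(seed)
    samples = []
    while len(samples) < n:
        value = rng.gauss(mean, stddev)
        if abs(value - mean) <= 2 * stddev:  # reject and redraw outliers
            samples.append(value)
    return samples


# Flattened 5x5x1x32 kernel, the shape weight_variable receives in conv-layer1.
w = truncated_normal(5 * 5 * 1 * 32, seed=0)
print(len(w), min(w) >= -0.2, max(w) <= 0.2)  # 800 True True
```

By contrast, the second script initializes with `tf.random_normal`, which applies no truncation and defaults to a standard deviation of 1.0.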

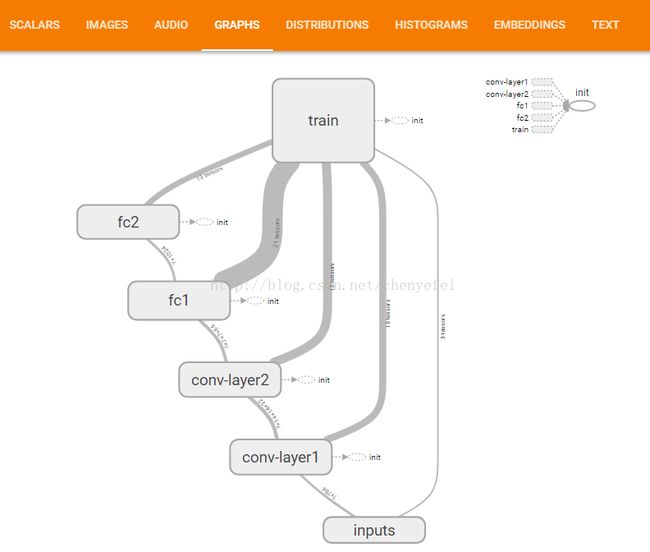

myGraph = tf.Graph()

with myGraph.as_default():
    with tf.name_scope('inputs'):
        # Flattened 28*28 images and one-hot labels; None allows any batch size.
        x_raw = tf.placeholder(tf.float32, shape=[None, 784])
        y = tf.placeholder(tf.float32, shape=[None, 10])

    with tf.name_scope('conv-layer1'):
        # Reshape to NHWC: batch, height 28, width 28, 1 grayscale channel.
        x = tf.reshape(x_raw, shape=[-1, 28, 28, 1])
        W_conv1 = weight_variable([5, 5, 1, 32])
        b_conv1 = bias_variable([32])
        # 5x5 convolution with 32 filters and SAME padding: 28x28x1 -> 28x28x32
        l_conv1 = tf.nn.relu(tf.nn.conv2d(x, W_conv1, strides=[1, 1, 1, 1], padding='SAME') + b_conv1)
        # 2x2 max pooling with stride 2: 28x28x32 -> 14x14x32
        l_pool1 = tf.nn.max_pool(l_conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
        tf.summary.image('x_input', x, max_outputs=10)
        tf.summary.histogram('W_conv1', W_conv1)
        tf.summary.histogram('b_conv1', b_conv1)

    with tf.name_scope('conv-layer2'):
        W_conv2 = weight_variable([5, 5, 32, 64])
        b_conv2 = bias_variable([64])
        # 14x14x32 -> 14x14x64, then 2x2 pooling -> 7x7x64
        l_conv2 = tf.nn.relu(tf.nn.conv2d(l_pool1, W_conv2, strides=[1, 1, 1, 1], padding='SAME') + b_conv2)
        l_pool2 = tf.nn.max_pool(l_conv2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
        tf.summary.histogram('W_conv2', W_conv2)
        tf.summary.histogram('b_conv2', b_conv2)

    with tf.name_scope('fc1'):
        W_fc1 = weight_variable([64*7*7, 1024])
        b_fc1 = bias_variable([1024])
        # Flatten the 7x7x64 feature maps into one 3136-dimensional vector per image.
        l_pool2_flat = tf.reshape(l_pool2, [-1, 64*7*7])
        l_fc1 = tf.nn.relu(tf.matmul(l_pool2_flat, W_fc1) + b_fc1)
        # keep_prob is fed at run time: below 1 while training, 1.0 for evaluation.
        keep_prob = tf.placeholder(tf.float32)
        l_fc1_drop = tf.nn.dropout(l_fc1, keep_prob)
        tf.summary.histogram('W_fc1', W_fc1)
        tf.summary.histogram('b_fc1', b_fc1)

    with tf.name_scope('fc2'):
        W_fc2 = weight_variable([1024, 10])
        b_fc2 = bias_variable([10])
        # Unnormalized logits; the softmax is applied inside the loss.
        y_conv = tf.matmul(l_fc1_drop, W_fc2) + b_fc2
        tf.summary.histogram('W_fc2', W_fc2)
        tf.summary.histogram('b_fc2', b_fc2)

    with tf.name_scope('train'):
        cross_entropy = tf.reduce_mean(
            tf.nn.softmax_cross_entropy_with_logits(logits=y_conv, labels=y))
        train_step = tf.train.AdamOptimizer(learning_rate=1e-4).minimize(cross_entropy)
        # A prediction is correct when the largest logit matches the one-hot label.
        correct_prediction = tf.equal(tf.argmax(y_conv, 1), tf.argmax(y, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
        tf.summary.scalar('loss', cross_entropy)
        tf.summary.scalar('accuracy', accuracy)
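The `train` scope reports two per-batch quantities: the mean softmax cross-entropy between the logits and the one-hot labels, and the fraction of rows whose arg-max logit matches the label's arg-max. Here is a plain-Python sketch of both on a toy batch. It mirrors what `tf.nn.softmax_cross_entropy_with_logits`, `tf.argmax` and `tf.reduce_mean` compute, but the helper names are hypothetical and this is not TensorFlow's kernel.

```python
import math


def softmax_cross_entropy(logits, one_hot):
    """-sum(label * log(softmax(logits))) for one example, via the log-sum-exp trick."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(t * (z - log_sum) for z, t in zip(logits, one_hot))


def argmax(values):
    return max(range(len(values)), key=lambda i: values[i])


def batch_loss_and_accuracy(batch_logits, batch_labels):
    losses = [softmax_cross_entropy(z, t) for z, t in zip(batch_logits, batch_labels)]
    correct = [argmax(z) == argmax(t) for z, t in zip(batch_logits, batch_labels)]
    # Both quantities are averaged over the batch, like tf.reduce_mean.
    return sum(losses) / len(losses), sum(correct) / len(correct)


logits = [[2.0, 0.0, 0.0], [0.5, 0.0, 0.0], [0.0, 3.0, 1.0]]
labels = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
loss, acc = batch_loss_and_accuracy(logits, labels)
print(round(loss, 4), round(acc, 4))
```

Only the first row is classified correctly, so the accuracy is one third, while the loss still rewards or penalizes how confident each prediction is.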

with tf.Session(graph=myGraph) as sess:
    sess.run(tf.global_variables_initializer())
    merged = tf.summary.merge_all()
    # Writes the graph and the merged summaries for TensorBoard under mnist-tensorboard/
    summary_writer = tf.summary.FileWriter('mnist-tensorboard/', graph=sess.graph)

    for i in range(1001):
        batch = mnist.train.next_batch(50)
        sess.run(train_step, feed_dict={x_raw: batch[0], y: batch[1], keep_prob: 0.7})
        if i % 100 == 0:
            # Evaluate on the current batch with dropout disabled.
            result, acc = sess.run([merged, accuracy],
                                   feed_dict={x_raw: batch[0], y: batch[1], keep_prob: 1.})
            print("Iter " + str(i) + ", Training Accuracy= " + "{:.5f}".format(acc))
            summary_writer.add_summary(result, i)

    print("Optimization Finished!")
    summary_writer.close()

    # Evaluate on the first 256 test images only.
    test_accuracy = sess.run(accuracy, feed_dict={x_raw: mnist.test.images[:256],
                                                  y: mnist.test.labels[:256], keep_prob: 1.})
    print("Testing Accuracy:", test_accuracy)
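The `64*7*7` input size of `W_fc1` follows from the padding rule: with `padding='SAME'`, a TensorFlow convolution or pooling op produces `ceil(input_size / stride)` outputs along each spatial axis. The sketch below traces the shapes through both conv/pool blocks in plain Python; `same_output_size` and `mnist_cnn_shapes` are hypothetical helpers for illustration.

```python
import math


def same_output_size(size, stride):
    """Spatial output size of a TensorFlow conv/pool op with padding='SAME'."""
    return math.ceil(size / stride)


def mnist_cnn_shapes(height=28, width=28):
    """Trace (name, height, width, channels) through the two conv/pool blocks."""
    shapes = [("input", height, width, 1)]
    for name, channels in (("conv-layer1", 32), ("conv-layer2", 64)):
        # A 5x5 convolution with stride 1 keeps the spatial size under SAME padding.
        height, width = same_output_size(height, 1), same_output_size(width, 1)
        shapes.append((name + " conv", height, width, channels))
        # 2x2 max pooling with stride 2 halves it, rounding up.
        height, width = same_output_size(height, 2), same_output_size(width, 2)
        shapes.append((name + " pool", height, width, channels))
    return shapes


for name, h, w, c in mnist_cnn_shapes():
    print(f"{name:20s} {h}x{w}x{c}")
last = mnist_cnn_shapes()[-1]
print("flattened size:", last[1] * last[2] * last[3])  # flattened size: 3136
```

Because 28 is divisible by 4, no rounding happens here; a 30x30 input would give 8x8 instead of 7.5x7.5.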

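from __future__ import print_function

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

# Training parameters
learning_rate = 0.001
training_iters = 200000   # total number of training examples to process, not optimizer steps
batch_size = 128
display_step = 10         # log every 10 optimizer steps

# Network parameters
n_input = 784     # MNIST images are 28*28 pixels, flattened
n_classes = 10    # digits 0-9
dropout = 0.75    # probability of keeping a unit in the dropout layer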
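Because `step` starts at 1 and the training loop tests `step * batch_size < training_iters`, `training_iters` counts examples rather than optimizer updates. Replaying that loop condition in plain Python shows how many Adam updates and log lines these settings produce. The helper `training_schedule` is hypothetical and only counts; it trains nothing.

```python
def training_schedule(training_iters=200000, batch_size=128, display_step=10):
    """Replay `while step * batch_size < training_iters` and count
    optimizer steps and the steps at which a log line is printed."""
    step = 1
    steps_run = 0
    logged = []
    while step * batch_size < training_iters:
        steps_run += 1
        if step % display_step == 0:
            logged.append(step)
        step += 1
    return steps_run, logged


steps, logged = training_schedule()
print(steps, steps * 128, len(logged), logged[-1])  # 1562 199936 156 1560
```

So the default settings run 1562 updates over 199,936 examples and print 156 progress lines.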

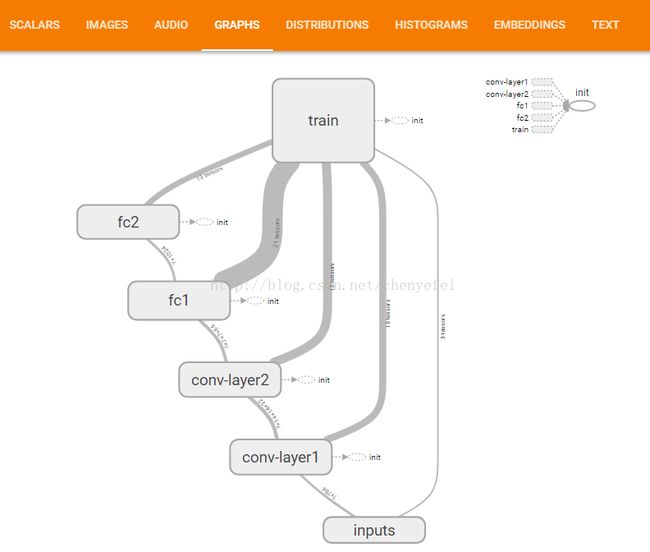

with tf.name_scope('inputs'):
    x = tf.placeholder(tf.float32, [None, n_input])
    y = tf.placeholder(tf.float32, [None, n_classes])
    keep_prob = tf.placeholder(tf.float32)  # dropout keep probability


def myconv2d(x, W, b, strides=1):
    # Convolution with SAME padding, then bias add and ReLU activation.
    x = tf.nn.conv2d(x, W, strides=[1, strides, strides, 1], padding='SAME')
    x = tf.nn.bias_add(x, b)
    return tf.nn.relu(x)
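To make `myconv2d` concrete, here is a single-channel, stride-1 sketch in plain Python. Like `tf.nn.conv2d`, it computes a cross-correlation (the kernel is not flipped), treats positions outside the image as zero padding under SAME, then adds the bias and applies ReLU. The real op also sums over input channels and repeats this for every output channel; the function name `conv2d_same_relu` is hypothetical.

```python
def conv2d_same_relu(image, kernel, bias):
    """Single-channel, stride-1 analogue of myconv2d: cross-correlation with
    SAME zero padding, then bias add and ReLU."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    # SAME padding with stride 1: total padding k-1; any extra row/column goes bottom/right.
    pad_top, pad_left = (kh - 1) // 2, (kw - 1) // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            acc = bias
            for di in range(kh):
                for dj in range(kw):
                    r, c = i + di - pad_top, j + dj - pad_left
                    if 0 <= r < h and 0 <= c < w:  # padded positions contribute zero
                        acc += image[r][c] * kernel[di][dj]
            row.append(max(0.0, acc))  # ReLU
        out.append(row)
    return out


ones = [[1.0] * 3 for _ in range(3)]
print(conv2d_same_relu(ones, [[1.0] * 3 for _ in range(3)], bias=0.0))
# [[4.0, 6.0, 4.0], [6.0, 9.0, 6.0], [4.0, 6.0, 4.0]]
```

The output keeps the 3x3 input size, and the border values are smaller because part of each window falls on zero padding.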

def maxpool2d(x, k=2):
    # k x k max pooling with stride k; with k=2 this halves the height and width.
    return tf.nn.max_pool(x, ksize=[1, k, k, 1], strides=[1, k, k, 1], padding='SAME')
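`maxpool2d` with `k=2` keeps the largest value in each non-overlapping 2x2 window. The sketch below does the same for one channel in plain Python, including the SAME rule for sizes that do not divide evenly: the output has `ceil(size / k)` entries, the padding is split with the smaller half on top/left, and padded positions never win the max. The function name `maxpool2d_same` is hypothetical.

```python
import math


def maxpool2d_same(image, k=2):
    """Single-channel k x k max pooling with stride k and SAME padding."""
    h, w = len(image), len(image[0])
    out_h, out_w = math.ceil(h / k), math.ceil(w / k)
    # Total padding is out*k - size; the smaller half goes on top/left.
    pad_top, pad_left = (out_h * k - h) // 2, (out_w * k - w) // 2
    out = []
    for oi in range(out_h):
        r0 = oi * k - pad_top
        row = []
        for oj in range(out_w):
            c0 = oj * k - pad_left
            # Only in-bounds values are compared, so padding never wins the max.
            window = [image[r][c]
                      for r in range(max(r0, 0), min(r0 + k, h))
                      for c in range(max(c0, 0), min(c0 + k, w))]
            row.append(max(window))
        out.append(row)
    return out


grid = [[1, 2, 5, 6],
        [3, 4, 7, 8],
        [9, 10, 13, 14],
        [11, 12, 15, 16]]
print(maxpool2d_same(grid))  # [[4, 8], [12, 16]]
```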

def conv_net(x, weights, biases, dropout):
    # Reshape the input: -1 means a variable number of images; each image is 28*28 with 1 grayscale channel.
    with tf.name_scope('x'):
        x = tf.reshape(x, shape=[-1, 28, 28, 1])

    ############# First convolutional layer #####################
    with tf.name_scope('conv1'):
        conv1 = myconv2d(x, weights['wc1'], biases['bc1'])
        conv1 = maxpool2d(conv1, k=2)

    ############# Second convolutional layer ####################
    with tf.name_scope('conv2'):
        conv2 = myconv2d(conv1, weights['wc2'], biases['bc2'])
        conv2 = maxpool2d(conv2, k=2)

    ############# Third layer: fully connected ##################
    with tf.name_scope('fc1'):
        # Flatten conv2 to the input size of the fully connected weights (7*7*64).
        fc1 = tf.reshape(conv2, [-1, weights['wd1'].get_shape().as_list()[0]])
        fc1 = tf.add(tf.matmul(fc1, weights['wd1']), biases['bd1'])
        fc1 = tf.nn.relu(fc1)
        # `dropout` is the keep probability passed to tf.nn.dropout.
        fc1 = tf.nn.dropout(fc1, dropout)

    ############# Fourth layer: fully connected (output) ########
    with tf.name_scope('fc2'):
        # Class logits (unnormalized scores)
        out = tf.add(tf.matmul(fc1, weights['out']), biases['out'])
    return out
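In TensorFlow 1.x, `tf.nn.dropout(x, keep_prob)` keeps each element with probability `keep_prob` and scales it by `1 / keep_prob`, otherwise outputs 0, so the expected activation is unchanged. That is why training feeds `keep_prob: dropout` while evaluation feeds `keep_prob: 1.`, which turns the layer into the identity. A minimal standard-library sketch of that rule (the name `dropout_sketch` is hypothetical):

```python
import random


def dropout_sketch(values, keep_prob, seed=None):
    """Inverted dropout: keep each value with probability keep_prob and scale
    it by 1/keep_prob, otherwise output 0.0."""
    rng = random.Random(seed)
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


activations = [1.0] * 10000
dropped = dropout_sketch(activations, keep_prob=0.75, seed=42)
kept = sum(1 for v in dropped if v != 0.0)
print(kept / len(dropped))          # close to 0.75
print(sum(dropped) / len(dropped))  # close to 1.0, the mean before dropout
print(dropout_sketch([0.5, 2.0], keep_prob=1.0) == [0.5, 2.0])  # True: identity
```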

weights = {
    # 5x5 conv, 1 input, 32 outputs; output of the first conv layer after pooling: 14*14*32
    'wc1': tf.Variable(tf.random_normal([5, 5, 1, 32])),
    # 5x5 conv, 32 inputs, 64 outputs; output of the second conv layer after pooling: 7*7*64
    'wc2': tf.Variable(tf.random_normal([5, 5, 32, 64])),
    # fully connected, 7*7*64 inputs, 1024 outputs
    'wd1': tf.Variable(tf.random_normal([7*7*64, 1024])),
    # 1024 inputs, 10 outputs (class prediction)
    'out': tf.Variable(tf.random_normal([1024, n_classes]))
}

biases = {
    # One bias per output channel (conv layers) or output unit (fully connected layers)
    'bc1': tf.Variable(tf.random_normal([32])),
    'bc2': tf.Variable(tf.random_normal([64])),
    'bd1': tf.Variable(tf.random_normal([1024])),
    'out': tf.Variable(tf.random_normal([n_classes]))
}
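Multiplying out the shapes in `weights` and `biases` shows where the parameters live; the first script uses the same layer shapes, so the totals apply to it as well. The `layers` table below copies those shapes by hand and is illustrative only.

```python
from math import prod

n_classes = 10
layers = {
    # layer: (weight shape, bias shape), copied from the weights/biases dicts
    "conv1": ([5, 5, 1, 32], [32]),
    "conv2": ([5, 5, 32, 64], [64]),
    "fc1": ([7 * 7 * 64, 1024], [1024]),
    "out": ([1024, n_classes], [n_classes]),
}
counts = {name: prod(w) + prod(b) for name, (w, b) in layers.items()}
for name, n in counts.items():
    print(f"{name:6s} {n:>9,d}")
print(f"total  {sum(counts.values()):>9,d}")  # 3,274,634 in total
```

The first fully connected layer alone holds 3,212,288 of the 3,274,634 values, roughly 98%, while the two convolutional layers together hold about 52,000.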

predict = conv_net(x, weights, biases, keep_prob)

############# Softmax (logistic regression) classification #############
with tf.name_scope('cost'):
    cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=predict, labels=y))
    optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cost)
    tf.summary.scalar('cost', cost)

############# Prediction #####################
with tf.name_scope('train'):
    correct_pred = tf.equal(tf.argmax(predict, 1), tf.argmax(y, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))
    tf.summary.scalar('accuracy', accuracy)

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    step = 1
    merged = tf.summary.merge_all()
    writer = tf.summary.FileWriter('tmp-logs/', sess.graph)

    ############# Training #####################
    while step * batch_size < training_iters:
        batch_x, batch_y = mnist.train.next_batch(batch_size)
        sess.run(optimizer, feed_dict={x: batch_x, y: batch_y, keep_prob: dropout})
        if step % display_step == 0:
            # Loss and accuracy on the current minibatch, with dropout disabled
            result, loss, acc = sess.run([merged, cost, accuracy],
                                         feed_dict={x: batch_x, y: batch_y, keep_prob: 1.})
            print("Iter " + str(step*batch_size) + ", Minibatch Loss= " +
                  "{:.6f}".format(loss) + ", Training Accuracy= " + "{:.5f}".format(acc))
            writer.add_summary(result, step)
        step += 1

    print("Optimization Finished!")
    writer.close()

    # Evaluate on the first 256 test images only
    test_accuracy = sess.run(accuracy, feed_dict={x: mnist.test.images[:256],
                                                  y: mnist.test.labels[:256], keep_prob: 1.})
    print("Testing Accuracy:", test_accuracy)