基于TensorRT 5.x的网络推理加速(python)

本文目的主要在于如何使用TensorRT 5.x的python api来进行神经网络的推理。因为目前TensorRT只支持ONNX,Caffe和Uff (Universal Framework Format)这三种格式。这里以tensorflow的pb模型为例(可以无缝转换为uff)进行说明。

0. TensoRT介绍

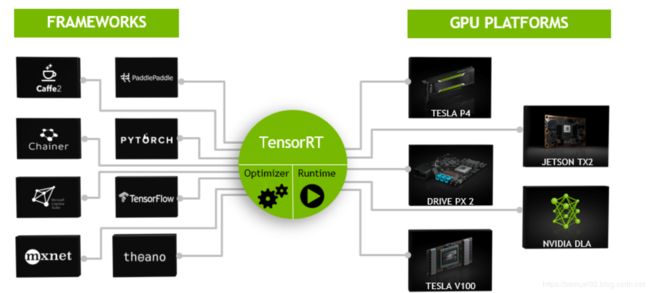

TensorRT是英伟达(NVIDIA)开发的一个可以在NVIDIA旗下的GPU上进行高性能推理的C++库。它的设计目标是与现有的深度学习框架无缝贴合:比如Mxnet, PyTorch, Tensorflow 以及Caffe等。TensorRT只关注推理阶段(inference stage)的优化。

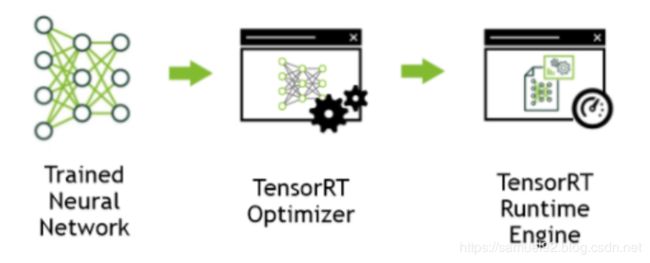

TensorRT的作用是将一个训练好的神经网络(需要部署的)经过TensorRT优化,然后放到TensorRT的Runtime引擎去执行。示例图如下:

下图可以看出,TensorRT支持很多的框架和对其下的GPU平台都做了特定的支持。

总的来说,使用TensorRT进行模型推理加速的工作流(Workflow)可以分为以下几个步骤:

- ① 训练神经网络,得到模型。(以Tensorflow为例,我们得到

***.pb格式的模型文件) - ② 将模型用TensorRT提供的工具进行parsing(解析)。

- ③ 将parsing后的结构通过TensorRT内部的优化选项(optimization options)对计算图结构进行优化。(包括不限于:1. 算子融合 Layer Funsion: 通过将Conv + BN等层的融合降低数据的吞吐量。 2. 精度校准 Precision Calibration : 当用户为了节省计算资源使用INT8进行推理的时候,需要作精度校准,这个操作TensorRT提供了官方的支持。 3. kernel auto-tuning : 根据计算逻辑,自动选择TensorRT实现的更高效的矩阵乘法,卷积运算等逻辑。)

- ④ 通过上述步骤,得到了一个优化后的推理引擎。我们就可以拿这个引擎进行推理了~

1. TensorRT 的关键接口

本部分将介绍TensorRT中的关键接口,这些接口在我们的例子中都有着确实的应用,所以有必要了解设计他们的目的和他们的功能。

Network Definition (高阶用法)

网络定义接口提供方法来指定网络的定义。我们可以指定输入输出的Tensor Name以及增加layer。我们可以通过自定义来扩展TensorRT不支持的层和功能。关于网络定义的API,请查看Network Definition API.

Builder

Builder接口让我们可以从一个网络定义中创建一个优化后的引擎。在这个步骤我们可以选择最大batch,workspace size,精度级别等参数,如果你想了解更多,请查看Builder API.

Engine

引擎接口就允许应用来执行推理了。它支持对引擎输入和输出的绑定进行同步和异步执行、分析、枚举和查询。

It supports synchronous and asynchronous execution, profiling, and

enumeration and querying of the bindings for the engine inputs and

outputs.

此外,引擎接口还允许单个引擎拥有多个执行上下文(execution contexts). 这可以让一个引擎同时对多组数据进行执行推理操作。如想了解更多,请查Execution API.

此外,TensorRT还提供了解析训练模型并创建TensorRT内部支持的模型定义的parser:

- Caffe Parser

This parser can be used to parse a Caffe network created in BVLC Caffe

or NVCaffe 0.16. It also provides the ability to register a plugin

factory for custom layers. For more details on the C++ Caffe Parser,

see NvCaffeParser or the Python Caffe Parser.

- UFF Parser

This parser can be used to parse a network in UFF format. It also

provides the ability to register a plugin factory and pass field

attributes for custom layers. For more details on the C++ UFF Parser,

see NvUffParser or the Python UFF Parser.

- ONNX Parser

This parser can be used to parse an ONNX model. For more details on

the C++ ONNX Parser, see NvONNXParser or the Python ONNX Parser.

Restriction: Since the ONNX format is quickly developing, you may

encounter a version mismatch between the model version and the parser

version. The ONNX Parser shipped with TensorRT 5.1.x supports ONNX IR

(Intermediate Representation) version 0.0.3, opset version 9.

2. TensorRT python api安装

环境准备:

- Ubuntu 18.04 (需要注意的是, TensorRT的python接口不支持windows,在windows上使用只能用C++接口)

- cuda 9.0

- cudnn 7.5.0

- python 3.6

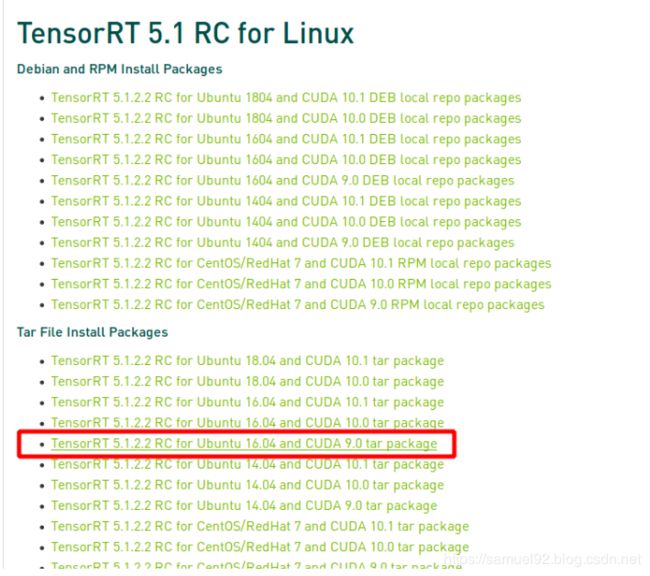

本着用新不用旧的原则,这里还是用TensorRT 5.x版本,下载地址[4]:

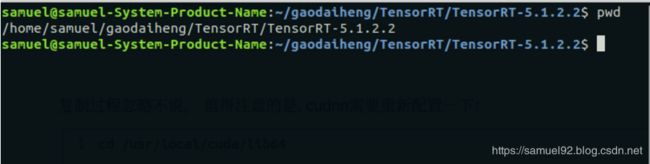

下好之后,我们看到有下面这个文件躺在你设定的下载路径中,将其解压即可:

正式开始:

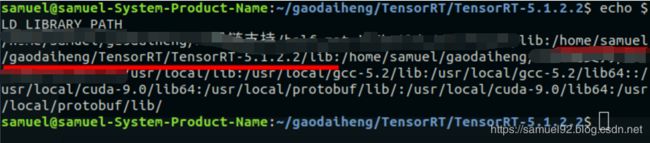

vim ~/.bashrc

...

export LD_LIBRARY_PATH=/home/samuel/gaodaiheng/TensorRT/TensorRT-5.1.2.2/lib:$LD_LIBRARY_PATH

...

source ~/.bashrc

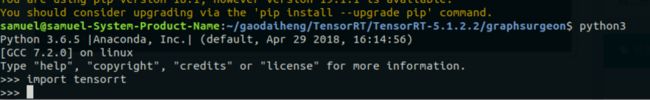

- b. 安装wheel包.

注意:只安装跟你环境一致的wheel包,因为我的python版本是python3.6, 所以这里安装的是cp36.whl)

TensorRT-5.1.2.2/python

TensorRT-5.1.2.2/uff

TensorRT-5.1.2.2/graphsurgeon

- c. 安装pycuda.

直接使用pip3 install pycuda会存在问题,其原因是:“因为我安装的cuda不是安装的debian版本,所以它默认找不到”

所以需要使用tar包编译的方式安装。

安装过程:https://wiki.tiker.net/PyCuda/Installation/Linux/Ubuntu

tar包下载地址: https://pypi.org/project/pycuda/#files

tar包下载完解压后的地址:

![]()

然后我们进行编译(可能根据情况需要改你的--python-exe后面的内容):

./configure.py --python-exe=/usr/bin/python3 --cuda-root=/usr/local/cuda

make -j 4

sudo python setup.py install

sudo pip install .

notice: 如第一步遇

setuptools找不到的时候,用python3 configure.py --python-exe=/usr/bin/python3 --cuda-root=/usr/local/cuda

3. TensorRT python 使用

这里以Tensorflow的pb模型出发(我假设你已经有了一个pb格式的模型),下面的内容将一步一步的阐明如何通过这个pb模型来转换为TensorRT的引擎并执行推理。

3.1 pb转uff*

首先,我们需要把pb格式的模型转换为TensorRT接收的3种模型结构之一: caffe, onnx, uff的uff.

# coding: UTF-8

"""

@func: 将pb模型转换为uff模型.

"""

import tensorflow as tf

import uff

# 需要改成你自己的output_names

output_names = ['output']

frozen_graph_filename = 'xxx.pb'

# 将frozen graph转换为uff格式

uff_model = uff.from_tensorflow_frozen_model(frozen_graph_filename, output_names)

转化成功,在graph_opt.pb的同级目录下,有了一个xxx.uff模型文件.

3.2 开始推理

■ 初始化

...

engine = build_engine() # 这个步骤比较重要, 下面会详细说明.

# engine.get_binding_shape(0) 对应的是输入的shape; 1对应的是输出的shape.

print(engine.get_binding_shape(0))

print(engine.get_binding_shape(1))

# 1. 为输入输出指定host和device的空间. h_input的h表示host, d_input的d表示device:

h_input = cuda.pagelocked_empty(trt.volume(engine.get_binding_shape(0)), dtype=trt.nptype(trt.float32))

h_output = cuda.pagelocked_empty(trt.volume(engine.get_binding_shape(1)), dtype=trt.nptype(trt.float32))

# 2. 分配显存空间.

d_input = cuda.mem_alloc(h_input.nbytes)

d_output = cuda.mem_alloc(h_output.nbytes)

# 3. 创建cuda stream, 其可以host和device之间动态的转换数据(显存到内存,内存到显存), 并执行推理.

stream = cuda.Stream()

context = engine.create_execution_context()

...

■ 构造引擎

...

# TRT_LOGGER是trt.Builder中必须要传的参数!

TRT_LOGGER = trt.Logger(trt.Logger.WARNING)

...

def build_engine():

uff_model = uff.from_tensorflow_frozen_model(ModelData.FROZEN_GRAPH, ModelData.OUTPUT_NAME)

# For more information on TRT basics, refer to the introductory samples.

with trt.Builder(TRT_LOGGER) as builder, builder.create_network() as network, trt.UffParser() as parser:

# 设置batch size和工作区大小.

builder.max_batch_size = 1

# builder.max_workspace_size = 1 ** 30

# Parse the Uff Network

parser.register_input(ModelData.INPUT_NAME, ModelData.INPUT_SHAPE, trt.UffInputOrder.NHWC)

parser.register_output('output')

# parse_buffer是parse一个已经在内存中的buffer.

# https://docs.nvidia.com/deeplearning/sdk/tensorrt-api/python_api/parsers/Uff/pyUff.html

parser.parse_buffer(uff_model, network)

# Build and return an engine.

return builder.build_cuda_engine(network)

...

好,现在引擎和初始化的流程已经完成。我们可以进行模型的推理了,完整代码如下:

# coding: UTF-8

"""

@author: samuel ko

"""

import os

import cv2

import time

import tensorflow as tf

import tensorrt as trt

import pycuda.driver as cuda

import pycuda.autoinit

import uff

import numpy as np

TRT_LOGGER = trt.Logger(trt.Logger.WARNING)

class ModelData(object):

FROZEN_GRAPH = "xxx.pb" # NHWC

INPUT_NAME ="image"

# ref: https://docs.nvidia.com/deeplearning/sdk/tensorrt-api/python_api/infer/FoundationalTypes/Dims.html#dimsnchw

INPUT_SHAPE = [432, 848, 3]

OUTPUT_NAME = ['output']

# 构建引擎.

def build_engine():

uff_model = uff.from_tensorflow_frozen_model(ModelData.FROZEN_GRAPH, ModelData.OUTPUT_NAME)

# For more information on TRT basics, refer to the introductory samples.

with trt.Builder(TRT_LOGGER) as builder, builder.create_network() as network, trt.UffParser() as parser:

# 设置batch size和工作区大小.

builder.max_batch_size = 1

# builder.max_workspace_size = 1 ** 30

# Parse the Uff Network

parser.register_input(ModelData.INPUT_NAME, ModelData.INPUT_SHAPE, trt.UffInputOrder.NHWC)

parser.register_output('Openpose/concat_stage7')

# parse_buffer是parse一个已经在内存中的buffer.

# https://docs.nvidia.com/deeplearning/sdk/tensorrt-api/python_api/parsers/Uff/pyUff.html

parser.parse_buffer(uff_model, network)

# Build and return an engine.

return builder.build_cuda_engine(network)

# 加载数据并将其喂入提供的pagelocked_buffer中.

def load_normalized_data(data_path, pagelocked_buffer, target_size=(848, 432)):

upsample_size = [int(target_size[1] / 8 * 4.0), int(target_size[0] / 8 * 4.0)]

img = cv2.imread(data_path)

img = cv2.resize(img, target_size, interpolation=cv2.INTER_CUBIC)

# 此时img.shape为H * W * C: 432, 848, 3

print("图片shape", img.shape)

# Flatten the image into a 1D array, normalize, and copy to pagelocked memory.

np.copyto(pagelocked_buffer, img.ravel())

return upsample_size

# 初始化(创建引擎,为输入输出开辟&分配显存/内存.)

def init():

engine = build_engine()

print(engine.get_binding_shape(0))

print(engine.get_binding_shape(1))

# 1. Allocate some host and device buffers for inputs and outputs:

h_input = cuda.pagelocked_empty(trt.volume(engine.get_binding_shape(0)), dtype=trt.nptype(trt.float32))

h_output = cuda.pagelocked_empty(trt.volume(engine.get_binding_shape(1)), dtype=trt.nptype(trt.float32))

# Allocate device memory for inputs and outputs.

d_input = cuda.mem_alloc(h_input.nbytes)

d_output = cuda.mem_alloc(h_output.nbytes)

# Create a stream in which to copy inputs/outputs and run inference.

stream = cuda.Stream()

context = engine.create_execution_context()

return context, h_input, h_output, stream, d_input, d_output

# 推理

def inference(data_path):

global context, h_input, h_output, stream, d_input, d_output

upsample = load_normalized_data(data_path, pagelocked_buffer=h_input)

t1 = time.time()

cuda.memcpy_htod_async(d_input, h_input, stream)

# Run inference.

context.execute_async(bindings=[int(d_input), int(d_output)], stream_handle=stream.handle)

# Transfer predictions back from the GPU.

cuda.memcpy_dtoh_async(h_output, d_output, stream)

# Synchronize the stream

stream.synchronize()

# Return the host output.

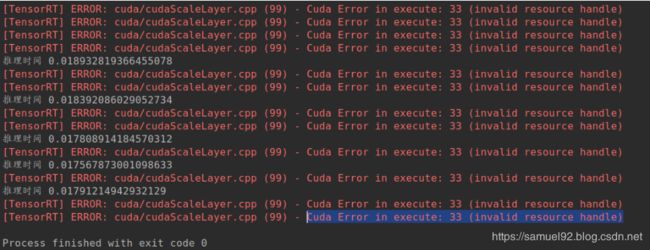

print("推理时间", time.time() - t1)

return h_output, upsample

if __name__ == '__main__':

context, h_input, h_output, stream, d_input, d_output = init()

img_path = "xxx.jpg"

image = cv2.imread(img_path)

for _ in range(10):

output, upsample = inference(data_path=img_path)

print(type(output))

print(output.shape)

···

4. 遇到的问题

- ① 如何使用

NHWC格式的模型进行推理。

之前误以为TensorRT只支持推理NCHWLayout的模型,这一步坑了好久,因为tensorRT不支持transpose算子,所以我将pb模型转成pbtxt,把data format都改成NCHW,还把concat的axis都从3改成1. 结果还是不对。

其实就很简单: 1. 在register_input的时候用trt.UffInputOrder.NHWC

parser.register_input("image", [432, 848, 3], trt.UffInputOrder.NHWC)

5. 总结

到这里,python使用TensorRT 5.x来推理模型的一整套流程就已经完毕了。如果有更高阶的需要,比如自定义层让TensorRT支持等,就需要大家深挖文档,进行魔改。遇到的最大的坑就是误以为TensorRT无法使用NHWC的布局,差点让我以NCHW的layour重新训练模型,所幸找到了解决办法。

参考资料

[1] 3. Working With TensorRT Using The Python API 中文翻译

[2] 3. Working With TensorRT Using The Python API 官方

[3] TensorRT 5.1.5.0 Python文档

[4] TensorRT 5.1.2.2 下载地址