Data Analysis Case Study: A 51job Crawler

This article walks through a Java crawler example that uses jsoup to parse HTML and extract data.

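Introduction

A crawler is one way to collect data. Python crawlers are currently popular, and Python has many frameworks that support crawling. This article builds a simple crawler in Java instead.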

Requirements

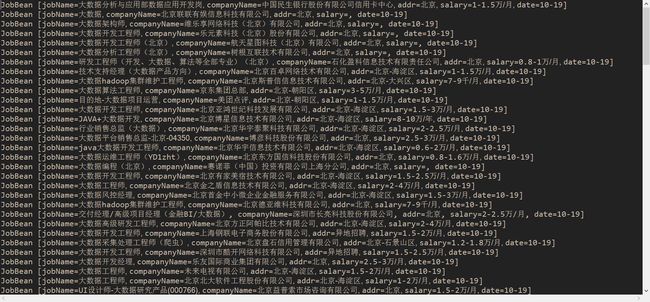

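The goal is to crawl job listings from the 51job website, focusing on big data positions. The fields collected are company name, job title, location, salary, and posting date.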

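Tool: jsoup

jsoup is a Java HTML parser that can parse a URL or a string of HTML directly. It provides a convenient API for extracting and manipulating data through DOM traversal, CSS selectors, and jQuery-like methods.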
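To make the description above concrete, here is a minimal sketch that parses an HTML string and reads values through a CSS selector. The HTML fragment and the class name `JsoupQuickStart` are made up for illustration; only `Jsoup.parse`, `select`, `text`, and `attr` come from jsoup itself, and the jsoup dependency must be on the classpath.

```java
import java.util.ArrayList;
import java.util.List;

import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

public class JsoupQuickStart {

    // Hypothetical HTML fragment, invented for this illustration.
    static final String HTML =
            "<ul id=\"languagelist\">"
          + "<li><a href=\"/en\">English</a></li>"
          + "<li><a href=\"/zh\">Chinese</a></li>"
          + "</ul>";

    // Collects "text -> href" for every link under #languagelist.
    static List<String> linkTexts() {
        // Parse HTML held in a string; no network access is involved.
        Document doc = Jsoup.parse(HTML);
        // CSS selector: id, then descendant tags separated by spaces.
        Elements links = doc.select("#languagelist li a");
        List<String> result = new ArrayList<>();
        for (Element link : links) {
            result.add(link.text() + " -> " + link.attr("href"));
        }
        return result;
    }

    public static void main(String[] args) {
        for (String line : linkTexts()) {
            System.out.println(line);
        }
    }
}
```

Parsing from a string like this is a convenient way to try out selectors before pointing the crawler at a live page.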

Add the dependency to pom.xml

<dependency>
    <groupId>org.jsoup</groupId>
    <artifactId>jsoup</artifactId>
    <version>1.11.3</version>
</dependency>

Implementation

JobBean.java

public class JobBean {

    private String jobName;
    private String companyName;
    private String addr;
    private String salary;
    private String date;

    // Sets all fields in one call.
    public void set(String jobName, String companyName, String addr, String salary, String date) {
        this.jobName = jobName;
        this.companyName = companyName;
        this.addr = addr;
        this.salary = salary;
        this.date = date;
    }

    @Override
    public String toString() {
        return "JobBean [jobName=" + jobName + ", companyName=" + companyName + ", addr=" + addr + ", salary=" + salary
                + ", date=" + date + "]";
    }

    public String getJobName() {
        return jobName;
    }

    public void setJobName(String jobName) {
        this.jobName = jobName;
    }

    public String getCompanyName() {
        return companyName;
    }

    public void setCompanyName(String companyName) {
        this.companyName = companyName;
    }

    public String getAddr() {
        return addr;
    }

    public void setAddr(String addr) {
        this.addr = addr;
    }

    public String getSalary() {
        return salary;
    }

    public void setSalary(String salary) {
        this.salary = salary;
    }

    public String getDate() {
        return date;
    }

    public void setDate(String date) {
        this.date = date;
    }
}

PageBean.java

import org.jsoup.nodes.Document;

/**
 * Encapsulates page information: the parsed document, the URL of the
 * next page, and whether a next page exists.
 *
 * @author Administrator
 */
public class PageBean {

    private Document dom;
    private String url;
    private boolean hasNextPage;

    public Document getDom() {
        return dom;
    }

    public void setDom(Document dom) {
        this.dom = dom;
    }

    public String getUrl() {
        return url;
    }

    public void setUrl(String url) {
        this.url = url;
    }

    public boolean isHasNextPage() {
        return hasNextPage;
    }

    public void setHasNextPage(boolean hasNextPage) {
        this.hasNextPage = hasNextPage;
    }

    @Override
    public String toString() {
        return "PageBean [dom=" + dom + ", url=" + url + ", hasNextPage=" + hasNextPage + "]";
    }

    public PageBean(Document dom, String url, boolean hasNextPage) {
        super();
        this.dom = dom;
        this.url = url;
        this.hasNextPage = hasNextPage;
    }

    public PageBean() {
        super();
    }
}

TestJsoup.java

import java.net.URL;

import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

public class TestJsoup {

    /**
     * Once an element has been selected, read values from it:
     * 1. the text between the tag pair
     * 2. an attribute value
     *
     * @param args
     */
    public static void main(String[] args) {
        Document document = getDom();
        if (document == null) {
            // The page could not be fetched; getDom() already printed the error.
            return;
        }
        Elements select = document.select("#languagelist li a");
        for (Element element : select) {
            // Text between the tag pair
            String text = element.text();
            System.out.println(text);
            // Attribute value
            String attr = element.attr("href");
            System.out.println(attr);
        }
    }

    /**
     * Ways to look up tags: 1. by id 2. by class 3. by tag name, or with a CSS selector via select().
     */
    private void getElement() {
        Document document = getDom();
        // Look up by id (unique)
        Element elementById = document.getElementById("languagelist");
        Elements elementsByTag2 = elementById.getElementsByTag("li");
        Elements elementsByTag = document.getElementsByTag("div");
        // Look up by class
        Elements elementsByClass = document.getElementsByClass("e");
        // CSS selector combining id, class, and tag, separated by spaces
        Elements select = document.select("#languagelist li a");
    }

    /**
     * Fetches the page and returns the whole document.
     *
     * @return the parsed document, or null if the request failed
     */
    private static Document getDom() {
        URL url;
        try {
            url = new URL(
                    "https://search.51job.com/list/010000,000000,0000,00,9,99,%25E5%25A4%25A7%25E6%2595%25B0%25E6%258D%25AE,2,1.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99&degreefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=");
            // Fetch and parse the page with a 4000 ms timeout.
            Document dom = Jsoup.parse(url, 4000);
            //System.out.println(dom);
            return dom;
        } catch (Exception e) {
            e.printStackTrace();
        }
        return null;
    }
}
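The keyword segment of the search URL above, `%25E5%25A4%25A7%25E6%2595%25B0%25E6%258D%25AE`, appears to be the keyword 大数据 percent-encoded as UTF-8 twice. The sketch below reproduces that with the standard library only, which may help when searching for a different keyword. The class and method names are hypothetical, and it is an assumption that the site still accepts this form.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLDecoder;
import java.net.URLEncoder;

public class KeywordEncoding {

    // Percent-encodes the keyword as UTF-8 twice, matching the form seen in the URL.
    static String doubleEncode(String keyword) {
        try {
            String once = URLEncoder.encode(keyword, "UTF-8");
            return URLEncoder.encode(once, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is always available on a conforming JVM.
            throw new IllegalStateException(e);
        }
    }

    // Reverses doubleEncode, which is handy for checking what a URL searches for.
    static String doubleDecode(String encoded) {
        try {
            String once = URLDecoder.decode(encoded, "UTF-8");
            return URLDecoder.decode(once, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // U+5927 U+6570 U+636E is the keyword used in the article's search URL.
        String keyword = "\u5927\u6570\u636e";
        System.out.println(doubleEncode(keyword));
        System.out.println(doubleDecode("%25E5%25A4%25A7%25E6%2595%25B0%25E6%258D%25AE").equals(keyword));
    }
}
```

The first line printed should match the keyword segment of the URL, and the second should be `true`.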

Main program

import java.net.URL;
import java.util.ArrayList;
import java.util.List;

import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

public class TestMain {

    public static void main(String[] args) {
        String url = "https://search.51job.com/list/010000,000000,0000,00,9,99,%25E5%25A4%25A7%25E6%2595%25B0%25E6%258D%25AE,2,1.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99&degreefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=";
        Document document = getDocument(url);
        if (document == null) {
            // The first page could not be fetched, so there is nothing to parse.
            return;
        }
        PageBean page = new PageBean();
        page.setDom(document);
        while (true) {
            // Print every job found on the current page.
            List<JobBean> job = getJob(page);
            for (JobBean jobBean : job) {
                System.out.println(jobBean);
            }
            // Look for the next-page link and stop when there is none.
            getNextPage(page);
            if (!page.isHasNextPage()) {
                break;
            }
            Document document2 = getDocument(page.getUrl());
            if (document2 == null) {
                break;
            }
            page.setDom(document2);
            // Pause for one second between requests.
            try {
                Thread.sleep(1000);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
        }
    }

    /**
     * Checks whether there is a next page and, if so, stores its URL.
     */
    private static void getNextPage(PageBean page) {
        Document dom = page.getDom();
        // The second ".bk" element is treated as the next-page control;
        // it contains a link only when a next page exists.
        Elements bk = dom.select(".bk");
        if (bk.size() < 2) {
            page.setHasNextPage(false);
            return;
        }
        Elements select2 = bk.get(1).select("a");
        if (select2.size() > 0) {
            String url = select2.get(0).attr("href");
            page.setUrl(url);
            page.setHasNextPage(true);
        } else {
            page.setHasNextPage(false);
        }
    }

    /**
     * Extracts the job records from the page's document.
     */
    private static List<JobBean> getJob(PageBean page) {
        List<JobBean> list = new ArrayList<>();
        Document dom = page.getDom();
        Elements select = dom.select("#resultList .el");
        // Skip the first matched row, which is not a job record.
        if (!select.isEmpty()) {
            select.remove(0);
        }
        for (Element element : select) {
            Elements select1 = element.select(".t1 a");
            String jobName = select1.text();
            Elements select2 = element.select(".t2 a");
            String companyName = select2.attr("title");
            String addr = element.select(".t3").text();
            String salary = element.select(".t4").text();
            String date = element.select(".t5").text();
            JobBean jobBean = new JobBean();
            jobBean.set(jobName, companyName, addr, salary, date);
            list.add(jobBean);
        }
        return list;
    }

    /**
     * Fetches and parses the document at the given URL.
     *
     * @param url the page to fetch
     * @return the parsed document, or null if the request failed
     */
    private static Document getDocument(String url) {
        try {
            // Fetch and parse the page with a 4000 ms timeout.
            Document document = Jsoup.parse(new URL(url), 4000);
            return document;
        } catch (Exception e) {
            e.printStackTrace();
        }
        return null;
    }
}
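The getNextPage method above stores the `href` value as-is and later passes it to `new URL(...)`, which only works when the link is absolute. If a page were to return a relative link, one option is to resolve it against the current page's URL first. The sketch below does this with `java.net.URI` from the standard library; the class name and the example.com URLs are hypothetical and not taken from 51job.

```java
import java.net.URI;

public class NextPageUrl {

    // Resolves href against the URL of the page it was found on.
    // An absolute href is returned unchanged.
    static String resolve(String currentPageUrl, String href) {
        return URI.create(currentPageUrl).resolve(href).toString();
    }

    public static void main(String[] args) {
        // Relative link: resolved against the directory of the current page.
        System.out.println(resolve("https://example.com/list/page1.html?lang=c", "page2.html"));
        // Absolute link: passed through as-is.
        System.out.println(resolve("https://example.com/list/page1.html", "https://example.com/list/page3.html"));
    }
}
```

Since the document is parsed from a URL, jsoup's own `element.absUrl("href")` should give a similar result without the extra helper.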