【python】Differences between jieba and PKUSeg word segmentation, and part-of-speech tagging

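Table of Contents

- 【python】Differences between jieba and PKUSeg word segmentation
  - Preface
  - I. Sample of the test text
  - II. Word segmentation
    - 2.1 jieba segmentation
      - 2.1.1 Source code
      - 2.1.2 Results
    - 2.2 PKUSeg segmentation
      - 2.2.1 Source code
      - 2.2.2 Results
  - III. Part-of-speech tagging
    - 3.1 jieba POS tagging
      - 3.1.1 Source code
      - 3.1.2 Results
    - 3.2 HanLP POS tagging
      - 3.2.1 Source code
      - 3.2.2 Results
  - IV. Notes
    - 4.1 Downloading pkuseg models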

【python】Differences between jieba and PKUSeg word segmentation

Preface

Test text: 现代汉语字典 (Modern Chinese Dictionary)

I. Sample of the test text

II. Word segmentation

2.1 jieba segmentation

2.1.1 Source code

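```python
"""
author: jjk
datetime: 2019/3/13
coding: utf-8
project name: Pycharm_workstation
Program function: segment a text file with jieba in accurate mode
"""
# Accurate mode tries to cut each sentence as precisely as possible; suited to text analysis.
import sys

import jieba  # jieba segmentation library

# jieba.cut takes three arguments: the string to segment;
# cut_all controls whether full mode is used;
# HMM controls whether the HMM model is used.

# Example input file: ceshi.txt (passed on the command line)
if __name__ == '__main__':
    param = sys.argv  # command-line arguments
    if len(param) == 2:
        with open(sys.argv[1], 'r', encoding='utf-8') as fp:
            for line in fp:  # read the file line by line
                words = jieba.cut(line.strip(), cut_all=False)  # accurate mode
                print('/ '.join(words))  # print the segmented line
    else:
        print('Invalid arguments: expected exactly one input file path')
        sys.exit(1)
```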
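The comments in the script mention the `cut_all` switch. As jieba describes its design, accurate mode builds a directed acyclic graph (DAG) of every dictionary word found in the sentence and picks the maximum-probability path with dynamic programming over word frequencies, while full mode emits every dictionary word found in that DAG. The stdlib-only sketch below illustrates that idea; the `FREQ` dictionary and its counts are hypothetical (not jieba's real `dict.txt`), and it leaves out the HMM step jieba uses for out-of-vocabulary words.

```python
import math

# Hypothetical toy dictionary (word -> frequency); NOT jieba's real dict.txt.
FREQ = {
    "我": 50, "来到": 20, "来": 30, "到": 30, "北京": 40, "北": 5, "京": 5,
    "清华": 10, "清华大学": 15, "华大": 2, "大学": 25,
    "清": 3, "华": 3, "大": 10, "学": 10,
}
LOG_TOTAL = math.log(sum(FREQ.values()))


def build_dag(sentence):
    """Map each start index i to every end index j such that sentence[i:j+1] is a dictionary word."""
    dag = {}
    for i in range(len(sentence)):
        ends = [j for j in range(i, len(sentence)) if sentence[i:j + 1] in FREQ]
        dag[i] = ends or [i]  # an unknown character stands alone
    return dag


def cut_exact(sentence):
    """Accurate-mode sketch: maximum log-probability path through the DAG, computed right to left."""
    dag, n = build_dag(sentence), len(sentence)
    route = {n: (0.0, 0)}  # route[i] = (best score for sentence[i:], end index of its first word)
    for i in range(n - 1, -1, -1):
        route[i] = max(
            (math.log(FREQ.get(sentence[i:j + 1], 1)) - LOG_TOTAL + route[j + 1][0], j)
            for j in dag[i]
        )
    words, i = [], 0
    while i < n:  # follow the best route from the start of the sentence
        j = route[i][1]
        words.append(sentence[i:j + 1])
        i = j + 1
    return words


def cut_full(sentence):
    """Full-mode sketch: every multi-character dictionary word, plus single characters not yet covered."""
    dag, words, covered = build_dag(sentence), [], -1
    for k, ends in dag.items():
        if len(ends) == 1 and k > covered:
            words.append(sentence[k:ends[0] + 1])
            covered = ends[0]
        else:
            for j in ends:
                if j > k:
                    words.append(sentence[k:j + 1])
                    covered = j
    return words


print("/ ".join(cut_exact("我来到北京清华大学")))  # → 我/ 来到/ 北京/ 清华大学
print("/ ".join(cut_full("我来到北京清华大学")))   # → 我/ 来到/ 北京/ 清华/ 清华大学/ 华大/ 大学
```

Full mode deliberately returns overlapping words (清华, 清华大学, 华大, 大学), which is why its output cannot simply be joined back into the original text, while accurate mode always yields one non-overlapping cut.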

2.1.2 Results

2.2 PKUSeg segmentation

2.2.1 Source code

```python
#!/usr/bin/env python
# encoding: utf-8
"""
author: jjk
datetime: 2019/3/18
project name: Pycharm_workstation
Program function: word segmentation with pkuseg, the open-source segmenter from Peking University
    https://github.com/yishuihanhan/pkuseg-python

Parameters
pkuseg.pkuseg(model_name='msra', user_dict='safe_lexicon')
    model_name  Model path. The default 'msra' is the pkuseg team's pre-trained model (pip installs only).
                You can pass the path of a model you downloaded or trained, e.g. model_name='./models'.
    user_dict   User dictionary. The default 'safe_lexicon' is a Chinese dictionary shipped with pkuseg
                (pip only). You can also pass an iterable of custom words.

pkuseg.test(readFile, outputFile, model_name='msra', user_dict='safe_lexicon', nthread=10)
    readFile    input file path
    outputFile  output file path
    model_name  same as in pkuseg.pkuseg
    user_dict   same as in pkuseg.pkuseg
    nthread     number of processes used for testing

pkuseg.train(trainFile, testFile, savedir, nthread=10)
    trainFile   training file path
    testFile    test file path
    savedir     directory where the trained model is saved
    nthread     number of processes used for training
"""
import sys

import pkuseg


def testFunc():
    """Segment every line of the input file."""
    # Assumes the ctb8 model has already been downloaded into ./ctb8;
    # setting model_name loads that model.
    seg = pkuseg.pkuseg(model_name='ctb8')
    with open(sys.argv[1], 'r', encoding='utf-8') as fp:
        for line in fp:  # read the file line by line
            words = seg.cut(line.strip())
            print('/ '.join(words))


if __name__ == '__main__':
    param = sys.argv  # command-line arguments
    if len(param) == 2:
        testFunc()
    else:
        print('Invalid arguments: expected exactly one input file path')
        sys.exit(1)
```
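`pkuseg.train` and `pkuseg.test`, as described in the docstring above, take file paths rather than Python lists. The helpers below are a minimal sketch for preparing a segmented training file and reading segmented output back, assuming the SIGHAN-bakeoff style format of one sentence per line with words separated by whitespace; check the pkuseg README for the exact format your version expects. The function names and sample sentences are hypothetical.

```python
import os
import tempfile


def write_segmented(path, sentences):
    """Write token lists as one sentence per line, words separated by single spaces."""
    with open(path, "w", encoding="utf-8") as f:
        for tokens in sentences:
            f.write(" ".join(tokens) + "\n")


def read_segmented(path):
    """Read a whitespace-separated segmented file back into token lists, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [line.split() for line in f if line.strip()]


# Hypothetical hand-segmented sentences, for illustration only.
gold = [["我", "来到", "北京", "清华大学"], ["他", "来到", "了", "网易", "杭研", "大厦"]]

with tempfile.TemporaryDirectory() as tmp:
    train_file = os.path.join(tmp, "train.txt")
    write_segmented(train_file, gold)
    round_trip = read_segmented(train_file)
    # With real data, the paths would then be handed to pkuseg as in the docstring, e.g.
    # pkuseg.train(train_file, test_file, savedir)

print(round_trip)
```

`read_segmented` is also a convenient way to load the `outputFile` written by `pkuseg.test`, presumably whitespace-separated in the same way, for the kind of comparison done in the results section.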

2.2.2 Results
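Running both scripts on the same text is meant to show where jieba and pkuseg disagree. One minimal way to quantify that, sketched below, is to turn each token list into character-offset spans: spans present in only one output are the disagreements, and if one output is treated as the reference, word-level precision, recall and F1 follow from the span overlap (the usual scoring for Chinese word segmentation). The token lists are hypothetical, not actual output from either tool.

```python
def to_spans(tokens):
    """Convert a token list into a set of (start, end) character offsets."""
    spans, start = set(), 0
    for tok in tokens:
        spans.add((start, start + len(tok)))
        start += len(tok)
    return spans


def disagreements(a, b):
    """Words produced by only one of the two segmentations of the same text."""
    text = "".join(a)
    sa, sb = to_spans(a), to_spans(b)
    only_a = [text[s:e] for s, e in sorted(sa - sb)]
    only_b = [text[s:e] for s, e in sorted(sb - sa)]
    return only_a, only_b


def seg_prf(pred, gold):
    """Word-level precision, recall and F1 of pred against a reference segmentation."""
    if "".join(pred) != "".join(gold):
        raise ValueError("both segmentations must cover exactly the same text")
    p_spans, g_spans = to_spans(pred), to_spans(gold)
    correct = len(p_spans & g_spans)
    precision = correct / len(p_spans) if p_spans else 0.0
    recall = correct / len(g_spans) if g_spans else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical outputs of the two segmenters for the same line.
jieba_tokens = ["我", "来到", "北京", "清华大学"]
pkuseg_tokens = ["我", "来到", "北京", "清华", "大学"]

print(disagreements(jieba_tokens, pkuseg_tokens))
print(seg_prf(pkuseg_tokens, jieba_tokens))  # treating jieba as the reference
```

Comparing spans rather than word strings matters: the same word can occur twice in a line, and only the offsets tell whether both tools cut it in the same place. Strip whitespace from both outputs first, since the two tools may not treat spaces identically.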

III. Part-of-speech tagging

3.1 jieba POS tagging

3.1.1 Source code

```python
#!/usr/bin/env python
# -*- encoding: utf-8 -*-
'''
@File    : ceshi.py
@Time    : 2019/03/22 14:33:05
@Author  : jjk
@Version : 1.0
@Contact : None
@License : (C)Copyright 2019-2020, Liugroup-NLPR-CASIA
@Desc    : None
'''
import jieba.posseg as pseg

# Quick check on a single sentence:
# for pair in pseg.cut("我爱北京天安门"):
#     print('%s,%s' % (pair.word, pair.flag))  # each pair exposes .word and .flag


def jiebaCiXingBiaoZhu():
    """POS-tag ceshi.txt with jieba, printing one 'word,flag' pair per line."""
    with open('ceshi.txt', 'r', encoding='utf-8') as fp:  # open the test file
        for line in fp:  # read the file line by line
            line = line.strip('\n')
            for pair in pseg.cut(line):
                print('%s,%s' % (pair.word, pair.flag))


if __name__ == '__main__':
    jiebaCiXingBiaoZhu()
```

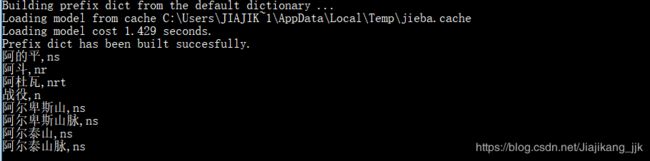

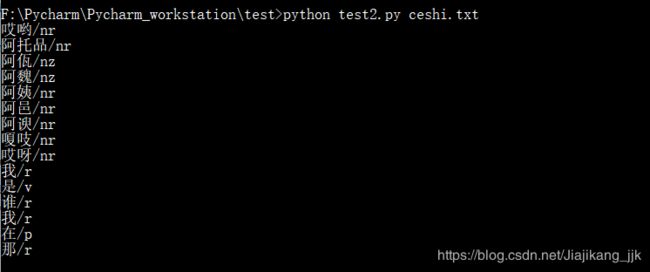
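Printed `word,flag` lines are fine for eyeballing, but comparing taggers is easier on structured data. A minimal sketch, assuming the script's output has been saved one pair per line: split each line on its last comma (so a comma token itself survives) and count the flags. jieba's flags follow an ICTCLAS-compatible tag set (for example `ns` for place names). Inside the script you could instead skip printing and collect `(pair.word, pair.flag)` directly from `pseg.cut(line)`. The helper name and sample lines are hypothetical.

```python
from collections import Counter


def parse_jieba_pos(lines):
    """Turn 'word,flag' lines, as printed by the script above, into (word, flag) tuples."""
    pairs = []
    for line in lines:
        line = line.rstrip("\n")  # keep leading spaces: a space can itself be a token
        if not line:
            continue
        word, _, flag = line.rpartition(",")  # split on the LAST comma
        pairs.append((word, flag))
    return pairs


# Hypothetical sample lines in the script's 'word,flag' format.
sample = ["我,r\n", "爱,v\n", "北京,ns\n", "天安门,ns\n", ",,x\n"]
pairs = parse_jieba_pos(sample)
counts = Counter(flag for _, flag in pairs)
print(counts.most_common())
```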

3.1.2 Results

3.2 HanLP POS tagging

3.2.1 Source code

```python
"""
author: jjk
datetime: 2019/3/19
coding: utf-8
project name: Pycharm_workstation
Program function: part-of-speech tagging
"""
import sys

from pyhanlp import *  # starts the JVM (via jpype) and provides JClass
import pkuseg


def testFunc():
    """Segment with pkuseg, then tag with HanLP's CRF lexical analyzer."""
    # CRF lexical analyzer
    CRFLexicalAnalyzer = JClass("com.hankcs.hanlp.model.crf.CRFLexicalAnalyzer")
    analyzer = CRFLexicalAnalyzer()
    # Assumes the ctb8 model has already been downloaded into ./ctb8 (see 4.1).
    seg = pkuseg.pkuseg(model_name='ctb8')
    with open(sys.argv[1], 'r', encoding='utf-8') as fp:
        for line in fp:  # read the file line by line
            words = seg.cut(line.strip())
            # Each pkuseg word is analyzed on its own, so HanLP tags it
            # without sentence context and may split it further.
            for word in words:
                print(analyzer.analyze(word))


if __name__ == '__main__':
    param = sys.argv  # command-line arguments
    if len(param) == 2:
        testFunc()
    else:
        print('Invalid arguments: expected exactly one input file path')
        sys.exit(1)
```
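The script prints HanLP's analysis results as text. The sketch below turns that text back into `(word, tag)` pairs, assuming the space-separated `word/tag` form, with compound words printed as `[word/tag word/tag]/tag` (this sketch keeps the inner words and drops the compound's own tag). `tag_differences` then lists words that two taggers cut at the same character span but labeled differently; jieba's and HanLP's tag sets overlap without being identical, so a difference is not necessarily an error. Function names and sample strings are hypothetical.

```python
def parse_hanlp(text):
    """Parse 'word/tag word/tag ...' text into (word, tag) tuples."""
    pairs = []
    for token in text.split():
        token = token.lstrip("[")  # start of a compound word
        if "]/" in token:  # end of a compound word: drop the compound's own tag
            token = token.split("]/", 1)[0]
        word, _, tag = token.rpartition("/")  # split on the LAST slash
        pairs.append((word, tag))
    return pairs


def tag_differences(a, b):
    """Return (word, tag_in_a, tag_in_b) for words at identical character spans whose tags differ."""
    def by_span(pairs):
        spans, pos = {}, 0
        for word, tag in pairs:
            spans[(pos, pos + len(word))] = (word, tag)
            pos += len(word)
        return spans

    sa, sb = by_span(a), by_span(b)
    return [
        (sa[k][0], sa[k][1], sb[k][1])
        for k in sorted(sa.keys() & sb.keys())
        if sa[k][1] != sb[k][1]
    ]


# Hypothetical outputs for the same sentence.
jieba_pairs = [("我", "r"), ("爱", "v"), ("北京", "ns"), ("天安门", "ns")]
hanlp_pairs = parse_hanlp("我/rr 爱/v 北京/ns 天安门/ns")
print(tag_differences(jieba_pairs, hanlp_pairs))
```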

3.2.2 Results

IV. Notes

4.1 Downloading pkuseg models

http://www.dataguru.cn/article-14485-1.html