Scraping Novels with Python (Part 4): Code Optimization

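To make the later front-end display easier, this round makes two changes: each chapter is now stored with a chapter number that follows the crawl order, and every piece of functionality is split into its own function.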
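As a rough illustration of why a crawl-order chapter number helps the front end, the sketch below reads chapters back sorted by `chapter_no`. It uses an in-memory database and made-up rows; the helper name `list_chapters` is hypothetical and not part of the script that follows.

```python
import sqlite3

# In-memory database, so this sketch never touches PaChong.db.
conn = sqlite3.connect(':memory:')
conn.execute('''CREATE TABLE book_chapter(
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    book_id int null,
    chapter_no int null,
    chapter_name varchar(128) null,
    chapter_url varchar(512) null,
    chapter_content text null)''')

# Made-up rows, inserted out of order on purpose.
rows = [(2, 'Chapter C'), (0, 'Chapter A'), (1, 'Chapter B')]
conn.executemany(
    'INSERT INTO book_chapter(chapter_no, chapter_name) VALUES (?, ?)', rows)


def list_chapters(conn):
    """Return chapter names sorted by crawl order (chapter_no)."""
    cur = conn.execute(
        'SELECT chapter_name FROM book_chapter ORDER BY chapter_no')
    return [name for (name,) in cur]


ordered = list_chapters(conn)
print(ordered)
```

Because `chapter_no` is declared as an integer column, the sort is numeric, so chapter 10 comes after chapter 9 rather than after chapter 1.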

# -*- coding: utf-8 -*-
import re
import sqlite3
import time
import urllib.request

import bs4

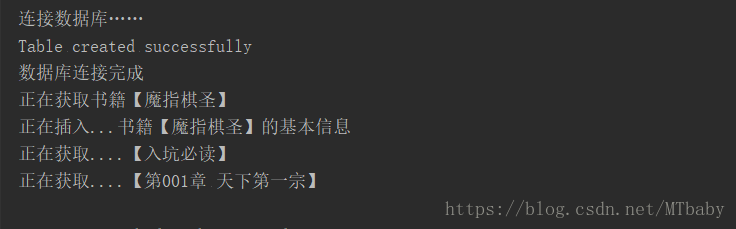

print('Connecting to the database...')
cx = sqlite3.connect('PaChong.db')
# Create the tables in this database (the statements below are commented out)

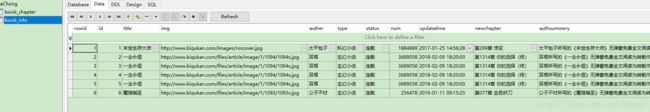

# cx.execute('''CREATE TABLE book_info(
#     id INTEGER PRIMARY KEY AUTOINCREMENT,
#     title varchar(128) not null,
#     img varchar(512) null,
#     auther varchar(64) null,
#     type varchar(128) null,
#     status varchar(64) null,
#     num int null,
#     updatatime datetime null,
#     newchapter varchar(512) null,
#     authsummery varchar(1024) null,
#     summery varchar(1024) null,
#     notipurl varchar(512) null);
# ''')
# cx.execute('''CREATE TABLE book_chapter(
#     id INTEGER PRIMARY KEY AUTOINCREMENT,
#     book_id int null,
#     chapter_no int null,
#     chapter_name varchar(128) null,
#     chapter_url varchar(512) null,
#     chapter_content text null);
# ''')
print("Table created successfully")
print("Database connection ready")

def getHtml(url):
    user_agent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.103 Safari/537.36"
    headers = {"User-Agent": user_agent}
    request = urllib.request.Request(url, headers=headers)
    response = urllib.request.urlopen(request)
    html = response.read()
    return html

# Fetch a whole page and parse it
def parse(url):
    html_doc = getHtml(url)
    sp = bs4.BeautifulSoup(html_doc, 'html.parser', from_encoding="utf-8")
    return sp

# Scrape the book's basic information
def get_book_baseinfo(url):
    # Read the block with class="info"
    info = parse(url).find('div', class_='info')
    book_info = {}  # all basic information about the book
    pre_small = []  # raw text fragments before processing
    aft_small = []  # fragments regrouped into "label:value" entries
    pre_intro = []  # text fragments of the introduction block
    if info:
        # print(info)
        book_info['title'] = ''
        book_info['img'] = ''
        # Title
        book_info['title'] = info.find('h2').string
        # Cover image link
        img = info.find('div', class_='cover')
        for im in img.children:
            # The src is a relative path, so prepend the site root
            book_info['img'] = 'http://www.biqukan.com' + im.attrs['src']
        # Author, category, status, word count, update time, latest chapter
        ifo = info.find('div', class_='small')
        for b in ifo:
            for v in b.children:
                t = v.string
                if t:
                    pre_small.append(''.join(t))
        # Join each label with the text that follows its colon
        cv = ''
        for v in pre_small:
            if v.find(':') >= 0:
                if cv:
                    aft_small.append(cv)
                cv = v
            else:
                cv += v
        aft_small.append(cv)
        # Turn the basic information into a dict
        for element in aft_small:
            its = [v.strip() for v in element.split(':')]
            if len(its) != 2:
                continue
            nm = its[0].lower()  # normalize to lowercase
            vu = its[1]
            book_info[nm] = vu
        # This key clashes with one fetched later, so rename it here
        book_info['auther'] = book_info.pop('作者')
        # Introduction block
        intro = info.find('div', class_='intro')
        for b in intro:
            t = b.string
            if t:
                pre_intro.append(''.join(t))
        ext_info = extract_book_ext_info(''.join(pre_intro))
        # Crude but simple: rename the Chinese keys to the English column names
        book_info['type'] = book_info.pop('分类')
        book_info['status'] = book_info.pop('状态')
        book_info['num'] = book_info.pop('字数')
        book_info['updatatime'] = book_info.pop('更新时间')
        book_info['newchapter'] = book_info.pop('最新章节')
        # Note: the next three values come from extract_book_ext_info
        book_info['authsummery'] = ext_info['作者']
        book_info['summery'] = ext_info['简介']
        book_info['notipurl'] = ext_info['无弹窗推荐地址']
        print("Fetching book [{}]".format(book_info['title']))
    return book_info

# Extract the introduction, the author blurb, and the no-popup URL
def extract_book_ext_info(ext_text):
    tag = ['简介:', '作者:', '无弹窗推荐地址:']
    # Find where each section starts
    pos = []
    cur_pos = 0
    for t in tag:
        tpos = ext_text.find(t, cur_pos)
        pos.append(tpos)
    # Slice the text at those positions
    cur_pos = 0
    items = []
    for i in range(len(tag)):
        items.append(ext_text[cur_pos:pos[i]])
        cur_pos = pos[i]
    items.append(ext_text[pos[-1]:])
    # Split each slice into label and value at its first colon
    ext_info = {}
    for v in items:
        txt = v.strip()
        if not txt:
            continue
        dim_pos = txt.find(':')
        if dim_pos < 0:
            continue
        ext_info[txt[:dim_pos].strip()] = txt[dim_pos+1:].strip()
    return ext_info

# Get the book's table of contents
def get_book_dir(url):
    books_dir = []
    name = parse(url).find('div', class_='listmain')
    if name:
        dd_items = name.find('dl')
        dt_num = 0
        for n in dd_items.children:
            ename = str(n.name).strip()
            if ename == 'dt':
                dt_num += 1
            if ename != 'dd':
                continue
            Catalog_info = {}
            # Only keep the <dd> entries listed under the second <dt>
            if dt_num == 2:
                durls = n.find_all('a')[0]
                Catalog_info['chapter_name'] = durls.get_text()
                Catalog_info['chapter_url'] = 'http://www.biqukan.com' + durls.get('href')
                books_dir.append(Catalog_info)
    # print(books_dir)
    return books_dir

# Get the text of one chapter
def get_charpter_text(curl):
    text = parse(curl).find('div', class_='showtxt')
    if text:
        cont = text.get_text()
        cont = [str(cont).strip().replace('\r \xa0\xa0\xa0\xa0\xa0\xa0\xa0\xa0', '').replace('\u3000\u3000', '')]
        c = " ".join(cont)
        # Keep the text from the start up to and including the first "html"
        ctext = ' '.join(re.findall(r'^.*?html', c))
        return ctext
    else:
        return ''

# Data storage
def SqlExec(conn, sql):
    cur = None  # stays None if anything below fails
    try:
        cur = conn.cursor()
        cur.execute(sql)
        conn.commit()
    except Exception as e:
        print('exec sql error[%s]' % sql)
        print(Exception, e)
        cur = None
    return cur

# Fetch the content of every chapter of a book
def get_book(burl):
    # Table of contents
    book = get_book_dir(burl)
    if not book:
        print('Failed to get the table of contents:', burl)
        return book
    for cno, d in enumerate(book):
        curl = d['chapter_url']
        d['chapter_no'] = str(cno)  # chapter number follows the crawl order
        try:
            print('Fetching.... [{}]'.format(d['chapter_name']))
            ctext = get_charpter_text(curl)
            d['chapter_content'] = ctext
        except Exception as err:
            print('Failed to fetch chapter:', curl, err)
            d['chapter_content'] = 'get failed'
            continue
    return book

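# Insert one chapter into the database
def insert_chapter(dic):
    if not dic:
        print("Failed to get chapter data")
        return dic
    sql = 'insert into book_chapter(' + ','.join(dic.keys()) + ') '
    # Double any single quotes so they cannot end the SQL string literal early
    values = [str(v).replace("'", "''") for v in dic.values()]
    sql += "values('" + "','".join(values) + "');"
    # Call the database helper
    if SqlExec(cx, sql):
        print('Inserting... [{}]'.format(dic['chapter_name']))
    else:
        print(sql)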

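# Store the book's basic information in the database
def insert_baseinfo(burl):
    baseinfo = get_book_baseinfo(burl)
    if not baseinfo:
        print("Failed to get the basic information")
        return baseinfo
    sql = 'insert into book_info(' + ','.join(baseinfo.keys()) + ')'
    # Double any single quotes so they cannot end the SQL string literal early
    values = [str(v).replace("'", "''") for v in baseinfo.values()]
    sql += " values('" + "','".join(values) + "');"
    # Call the database helper
    if SqlExec(cx, sql):
        print('Inserting... basic information for book [{}]'.format(baseinfo['title']))
    else:
        print(sql)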

if __name__ == '__main__':
    url = 'http://www.biqukan.com/1_1093/'
    insert_baseinfo(url)  # fetch the book's basic information and store it
    books = get_book(url)  # fetch the chapter list and each chapter's text
    for i in books:  # insert every chapter
        insert_chapter(i)
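The insert functions above build the SQL text by joining the values into one string. As an alternative, here is a minimal sketch that lets `sqlite3` bind the values through `?` placeholders, so quotes inside a chapter need no special handling. The helper name `insert_row` and the sample row are hypothetical; table and column names are still formatted into the statement, so this assumes they come from the script itself and never from the scraped page.

```python
import sqlite3


def insert_row(conn, table, row):
    """Insert the dict `row` into `table`, binding values with ? placeholders."""
    columns = ','.join(row.keys())
    marks = ','.join('?' for _ in row)
    sql = 'INSERT INTO {}({}) VALUES ({})'.format(table, columns, marks)
    try:
        cur = conn.execute(sql, list(row.values()))
        conn.commit()
        return cur
    except sqlite3.Error as e:
        print('exec sql error[%s]' % sql, e)
        return None


# In-memory database and a made-up chapter containing single quotes.
conn = sqlite3.connect(':memory:')
conn.execute('''CREATE TABLE book_chapter(
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    chapter_no int null,
    chapter_name varchar(128) null,
    chapter_content text null)''')
chapter = {
    'chapter_no': 0,
    'chapter_name': "It's a start",
    'chapter_content': "He said 'hello'.",
}
insert_row(conn, 'book_chapter', chapter)
stored = conn.execute(
    'SELECT chapter_name, chapter_content FROM book_chapter').fetchone()
print(stored)
```

With bound parameters the values can also keep their native types, so `chapter_no` could be passed as an integer instead of a string.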

Likewise, to fetch more books, wrap the same calls in a loop:

if __name__ == '__main__':
    for i in range(1090, 1100):
        url = 'http://www.biqukan.com/1_' + str(i) + '/'
        insert_baseinfo(url)  # fetch the book's basic information and store it
        books = get_book(url)  # fetch the chapter list and each chapter's text
        for per_ch in books:  # insert every chapter
            insert_chapter(per_ch)
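When looping over many book IDs, some of them may not exist, and requesting pages back to back can put load on the site. The sketch below is one possible way to handle both: it pauses between books and records the URLs that failed instead of stopping. The names `crawl_books`, `crawl_one`, and `fake_crawl` are hypothetical, and the one-second default delay is an arbitrary assumption, not a value from the site.

```python
import time


def crawl_books(book_ids, crawl_one, delay=1.0):
    """Call crawl_one(url) for each book id, sleeping `delay` seconds after each.

    Returns the list of URLs for which crawl_one raised an exception.
    """
    failed = []
    for i in book_ids:
        url = 'http://www.biqukan.com/1_' + str(i) + '/'
        try:
            crawl_one(url)
        except Exception as err:
            print('skipping', url, err)
            failed.append(url)
        time.sleep(delay)
    return failed


# Stand-in for the real work, so the sketch runs without network access.
def fake_crawl(url):
    if url.endswith('1_1091/'):
        raise ValueError('no such book')


failed = crawl_books(range(1090, 1093), fake_crawl, delay=0)
print(failed)
```

In the script above, `crawl_one` would be a small function that calls `insert_baseinfo(url)`, then `get_book(url)`, then `insert_chapter` for each chapter.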