Python文本处理工具——TextRank

背景

TextRank是用与从文本中提取关键词的算法,它采用了PageRank算法,原始的论文在这里。Github地址。

这个工具使用POS( part-of-speech tagging : 词性标注 )然后抽取名词,这种方法对于关键词提取独具特色。

注意:

- 先安装NLTK再使用这个工具。

- NLTK版本要求3.2.1以上。

下载github上的文件

ls 驱动器 G 中的卷是 项目&工程

卷的序列号是 E272-EC3D

G:\CSDN_blog\textrank 的目录

2016/06/19 下午 05:01 .

2016/06/19 下午 05:01 ..

2016/06/19 下午 04:30 .ipynb_checkpoints

2016/06/19 下午 05:00 1,406 Python文本处理工具——TextRank.ipynb

2016/06/19 下午 05:01 textrank-master

1 个文件 1,406 字节

4 个目录 69,318,959,104 可用字节

cd textrank-master/G:\CSDN_blog\textrank\textrank-master

ls 驱动器 G 中的卷是 项目&工程

卷的序列号是 E272-EC3D

G:\CSDN_blog\textrank\textrank-master 的目录

2016/06/19 下午 05:01 .

2016/06/19 下午 05:01 ..

2016/06/19 下午 05:01 candidates

2016/06/19 下午 05:01 conferences

2016/04/26 下午 11:23 2,212 README.md

2016/04/26 下午 11:23 8,884 textrank.py

2 个文件 11,096 字节

4 个目录 69,318,959,104 可用字节

在textrank-master文件夹里有两个文件夹,分别是candidates和conferences。candidates是选举的演讲文件集(部分),conferences是nlp会议的论文集(部分)。

TextRank使用

python textrank.py folder

zang@ZANG-PC G:\CSDN_blog\textrank\textrank-master

> python textrank.py conferences

Traceback (most recent call last):

File "textrank.py", line 2, in <module>

import langid

ImportError: No module named langid运行程序的时候发现没有安装Python langid包。这个包的功能是识别语言的工具。

langid.py is a standalone Language Identification (LangID) tool.

pip 安装langid

zang@ZANG-PC G:\CSDN_blog\textrank\textrank-master

> pip install langid

Collecting langid

Downloading langid-1.1.6.tar.gz (1.9MB)

100% |████████████████████████████████| 1.9MB 339kB/s

Requirement already satisfied (use --upgrade to upgrade): numpy in c:\anaconda2\lib\site-packages (from langid)

Building wheels for collected packages: langid

Running setup.py bdist_wheel for langid ... done

Stored in directory: C:\Users\zang\AppData\Local\pip\Cache\wheels\6a\7b\7f\5d73ed7227652857010410aebdb279e46b78a6586493c2de6b

Successfully built langid

Installing collected packages: langid

Successfully installed langid-1.1.6再次运行TextRank

zang@ZANG-PC G:\CSDN_blog\textrank\textrank-master

> python textrank.py conferences

Traceback (most recent call last):

File "textrank.py", line 14, in <module>

tagger = nltk.tag.perceptron.PerceptronTagger()

File "C:\Anaconda2\lib\site-packages\nltk\tag\perceptron.py", line 140, in __init__

AP_MODEL_LOC = str(find('taggers/averaged_perceptron_tagger/'+PICKLE))

File "C:\Anaconda2\lib\site-packages\nltk\data.py", line 641, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource u'taggers/averaged_perceptron_tagger/averaged_perceptro

n_tagger.pickle' not found. Please use the NLTK Downloader to

obtain the resource: >>> nltk.download()

Searched in:

- 'C:\\Users\\zang/nltk_data'

- 'C:\\nltk_data'

- 'D:\\nltk_data'

- 'E:\\nltk_data'

- 'C:\\Anaconda2\\nltk_data'

- 'C:\\Anaconda2\\lib\\nltk_data'

- 'C:\\Users\\zang\\AppData\\Roaming\\nltk_data'

**********************************************************************unfortunately!!! 我本地没有taggers/averaged_perceptron_tagger/averaged_perceptron_tagger.pickle这个文件,打开本地nltk_data,发现还真是,只有下载了。

Resource u’taggers/averaged_perceptron_tagger/averaged_perceptro

n_tagger.pickle’ not found.

nltk下载POS模型文件

zang@ZANG-PC G:\CSDN_blog\textrank\textrank-master

> python

Python 2.7.11 |Anaconda 4.0.0 (64-bit)| (default, Feb 16 2016, 09:58:36) [MSC v.1500 64 bit (AMD64)] on win32

Type "help", "copyright", "credits" or "license" for more information.

Anaconda is brought to you by Continuum Analytics.

Please check out: http://continuum.io/thanks and https://anaconda.org

>>> import nltk

>>> nltk.download()

showing info https://raw.githubusercontent.com/nltk/nltk_data/gh-pages/index.xml

True

>>> quit()下载过程中会有个弹窗,要自己选择下载的文件,在Models里第一个averaged_perceptron_tagger,然后点击下载,如果网络环境比较好的话,很快就可以下载完成了。

再次运行TextRank

zang@ZANG-PC G:\CSDN_blog\textrank\textrank-master

> python textrank.py conferences

Traceback (most recent call last):

File "textrank.py", line 14, in

tagger = nltk.tag.perceptron.PerceptronTagger()

File "C:\Anaconda2\lib\site-packages\nltk\tag\perceptron.py", line 141, in __init__

self.load(AP_MODEL_LOC)

File "C:\Anaconda2\lib\site-packages\nltk\tag\perceptron.py", line 209, in load

self.model.weights, self.tagdict, self.classes = load(loc)

File "C:\Anaconda2\lib\site-packages\nltk\data.py", line 801, in load

opened_resource = _open(resource_url)

File "C:\Anaconda2\lib\site-packages\nltk\data.py", line 924, in _open

return urlopen(resource_url)

File "C:\Anaconda2\lib\urllib2.py", line 154, in urlopen

return opener.open(url, data, timeout)

File "C:\Anaconda2\lib\urllib2.py", line 431, in open

response = self._open(req, data)

File "C:\Anaconda2\lib\urllib2.py", line 454, in _open

'unknown_open', req)

File "C:\Anaconda2\lib\urllib2.py", line 409, in _call_chain

result = func(*args)

File "C:\Anaconda2\lib\urllib2.py", line 1265, in unknown_open

raise URLError('unknown url type: %s' % type)

urllib2.URLError: 又报错了….

仔细看下报错的信息,猜测是nltk版本低了,更新nltk。

zang@ZANG-PC G:\CSDN_blog\textrank\textrank-master

> pip install nltk --upgrade

Collecting nltk

Downloading nltk-3.2.1.tar.gz (1.1MB)

100% |████████████████████████████████| 1.1MB 423kB/s

Building wheels for collected packages: nltk

Running setup.py bdist_wheel for nltk ... done

Stored in directory: C:\Users\zang\AppData\Local\pip\Cache\wheels\55\0b\ce\960dcdaec7c9af5b1f81d471a90c8dae88374386efe6e54a50

Successfully built nltk

Installing collected packages: nltk

Found existing installation: nltk 3.2

Uninstalling nltk-3.2:

Successfully uninstalled nltk-3.2

Successfully installed nltk-3.2.1继续运行TextRank

zang@ZANG-PC G:\CSDN_blog\textrank\textrank-master

> python textrank.py conferences

Reading articles/acl15

Reading articles/acl16short

Reading articles/emnlp15

Reading articles/naacl2016

Reading articles/naacl2016long

Reading articles/naacl2016shortls 驱动器 G 中的卷是 项目&工程

卷的序列号是 E272-EC3D

G:\CSDN_blog\textrank\textrank-master 的目录

2016/06/19 下午 05:13 .

2016/06/19 下午 05:13 ..

2016/06/19 下午 05:01 candidates

2016/06/19 下午 05:01 conferences

2016/06/19 下午 05:14 keywords-conferences-textrank

2016/04/26 下午 11:23 2,212 README.md

2016/04/26 下午 11:23 8,884 textrank.py

2 个文件 11,096 字节

5 个目录 69,318,905,856 可用字节

成功了!!啦啦啦。keywords-conferences-textrank就是运行结果。

cd keywords-conferences-textrankG:\CSDN_blog\textrank\textrank-master\keywords-conferences-textrank

ls 驱动器 G 中的卷是 项目&工程

卷的序列号是 E272-EC3D

G:\CSDN_blog\textrank\textrank-master\keywords-conferences-textrank 的目录

2016/06/19 下午 05:14 .

2016/06/19 下午 05:14 ..

2016/06/19 下午 05:14 10,787 acl15

2016/06/19 下午 05:14 3,101 acl16short

2016/06/19 下午 05:14 10,964 emnlp15

2016/06/19 下午 05:14 6,045 naacl2016

2016/06/19 下午 05:14 2,997 naacl2016long

2016/06/19 下午 05:14 2,225 naacl2016short

6 个文件 36,119 字节

2 个目录 69,318,901,760 可用字节

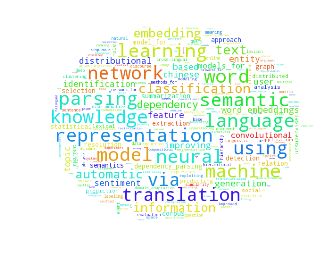

打开acl15:

learning:0.0153

neural:0.0122

word:0.0122

semantic:0.0118

parsing:0.0094

language:0.0093

representation:0.0086

model:0.0082

network:0.0079

via:0.0076

translation:0.0075

…..

对应的是关键词和重要程度打分。

词云可视化结果

cd textrank-master/G:\CSDN_blog\textrank\textrank-master

from os import path

from scipy.misc import imread

import matplotlib.pyplot as plt

from wordcloud import WordCloud, STOPWORDS, ImageColorGenerator

%matplotlib inline

text = open('./keywords-conferences-textrank/acl15')

word_scores_list = []

for line in text:

line = line.strip()

word,score = line.split(":")

word_scores_list.append((word,int(float(score)*10000)))

# read the mask / color image

# taken from http://jirkavinse.deviantart.com/art/quot-Real-Life-quot-Alice-282261010

acl_coloring = imread("acl1.png")

wc = WordCloud(background_color="white",mask=acl_coloring,stopwords=STOPWORDS.add("said"),max_font_size=40, random_state=42)

#wc.generate(text)

wc.generate_from_frequencies(word_scores_list)

image_colors = ImageColorGenerator(acl_coloring)

plt.imshow(wc)

plt.axis("off")

plt.figure()

# recolor wordcloud and show

# we could also give color_func=image_colors directly in the constructor

plt.imshow(wc.recolor(color_func=image_colors))

plt.axis("off")

plt.figure()

plt.imshow(alice_coloring, cmap=plt.cm.gray)

plt.axis("off")

plt.show()

wc.to_file("./keywords-conferences-textrank/acl15.png")