Deploying an ELK Log Analysis Platform

Note: the background sections are copied from https://www.cnblogs.com/kevingrace/p/5919021.html

Introduction to logs:

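Logs mainly consist of system logs, application logs, and security logs. By reading them, operations staff and developers can learn about a server's hardware and software and find configuration errors and their causes. Analyzing logs regularly reveals the server's load, performance, and security, so problems can be corrected promptly.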

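Logs are usually scattered across many devices. If you manage dozens or hundreds of servers and still read logs by logging in to each machine in turn, the work is tedious and inefficient. The first step is centralized log management, for example with the open-source syslog, collecting the logs from every server in one place. Once the logs are centralized, however, searching them and computing statistics becomes the next problem. Linux commands such as grep, awk, and wc handle simple searches and counts, but they struggle with more demanding querying, sorting, and statistics across a large number of machines.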

A complete log data system:

We therefore need centralized log management: collect the log data from every machine and aggregate it in one place.

Complete log data serves several important purposes:

1) Information lookup: search the logs to locate bugs and find solutions.

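2) Service diagnosis: compute statistics on and analyze the logs to understand server load and service status, and find slow requests worth optimizing.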

3) Data analysis: structured logs can be analyzed further, with statistics and aggregations producing meaningful information.

ELK platform deployment:

The open-source, real-time ELK log analysis platform addresses the problems above. ELK consists of three open-source tools, Elasticsearch, Logstash, and Kibana:

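1) Elasticsearch is an open-source distributed search server built on Lucene. Its features include distribution, near-zero configuration, automatic node discovery, automatic index sharding, index replicas, a RESTful interface, and automatic search load balancing across data sources. It provides a distributed, multi-tenant full-text search engine with a RESTful web interface. Elasticsearch is written in Java and released as open source under the Apache License; at the time of writing it was described as the second most popular enterprise search engine. It is designed for cloud environments and offers real-time search; it is stable, reliable, fast, and easy to install and use. In Elasticsearch, all nodes are equal peers.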

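2) Logstash is a fully open-source tool that collects, filters, and parses your logs, supports a wide range of input methods, and stores the results for later use (such as searching). Early Logstash releases bundled a web interface for searching and displaying logs; in the version used here, Kibana fills that role. Logstash typically runs in a client/server arrangement: the client side runs on each host whose logs are collected, and the server side filters and transforms the events received from all nodes before sending them on to Elasticsearch.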

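3) Kibana is a free, open-source, browser-based front end for Elasticsearch. It provides a friendly web interface for analyzing the logs stored by Logstash and Elasticsearch, helping you aggregate, analyze, and search important log data.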

Why use ELK?

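In a typical log analysis scenario, running grep and awk directly on the log files is enough to get the information you want. At larger scale this becomes inefficient and raises new questions: how to archive very large volumes of logs, what to do when text search is too slow, and how to query across several dimensions. This calls for centralized log management that collects the logs from all servers in one place. The usual approach is a centralized log collection system that collects, manages, and provides access to the logs of every node. Large systems are usually distributed, with different service modules deployed on different servers. When a problem occurs, you generally have to use the key information it exposes to locate the specific server and service module, and a centralized log system makes that much faster.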

A complete centralized log system needs the following main capabilities:

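1) Collection: gather log data from many kinds of sources

2) Transport: deliver log data reliably to the central system

3) Storage: store the log data

4) Analysis: support analysis through a UI

5) Alerting: provide error reporting and monitoring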

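ELK provides a complete solution built entirely from open-source components that work together seamlessly and efficiently meet the needs of many scenarios. It is currently one of the mainstream logging stacks.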

Lab environment (every host must be able to resolve the others' hostnames):

server1:master 172.25.38.1

server2:slave 172.25.38.2

server3:slave 172.25.38.3

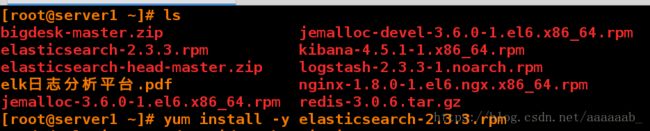

Install Elasticsearch, the open-source distributed search server:

[root@server1 ~]# ls

bigdesk-master.zip jemalloc-devel-3.6.0-1.el6.x86_64.rpm

elasticsearch-2.3.3.rpm kibana-4.5.1-1.x86_64.rpm

elasticsearch-head-master.zip logstash-2.3.3-1.noarch.rpm

elk日志分析平台.pdf nginx-1.8.0-1.el6.ngx.x86_64.rpm

jemalloc-3.6.0-1.el6.x86_64.rpm redis-3.0.6.tar.gz

[root@server1 ~]# yum install -y elasticsearch-2.3.3.rpm

[root@server1 ~]# pwd

/root

[root@server1 ~]# ls

bigdesk-master.zip jemalloc-devel-3.6.0-1.el6.x86_64.rpm

elasticsearch-2.3.3.rpm kibana-4.5.1-1.x86_64.rpm

elasticsearch-head-master.zip logstash-2.3.3-1.noarch.rpm

elk日志分析平台.pdf nginx-1.8.0-1.el6.ngx.x86_64.rpm

jemalloc-3.6.0-1.el6.x86_64.rpm redis-3.0.6.tar.gz

[root@server1 ~]# cd /etc/elasticsearch/

[root@server1 elasticsearch]# ls

elasticsearch.yml logging.yml scripts

[root@server1 elasticsearch]# vim elasticsearch.yml    # edit the main configuration file

[root@server1 elasticsearch]# /etc/init.d/elasticsearch start    # fails: Elasticsearch needs a Java runtime

which: no java in (/sbin:/usr/sbin:/bin:/usr/bin)

Could not find any executable java binary. Please install java in your PATH or set JAVA_HOME

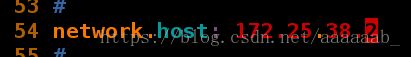

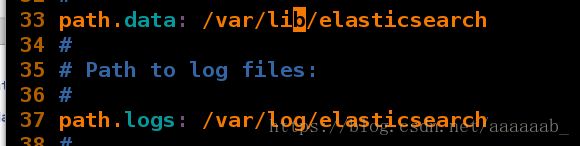

Set the cluster name and the node name:

Set the data and logs paths:

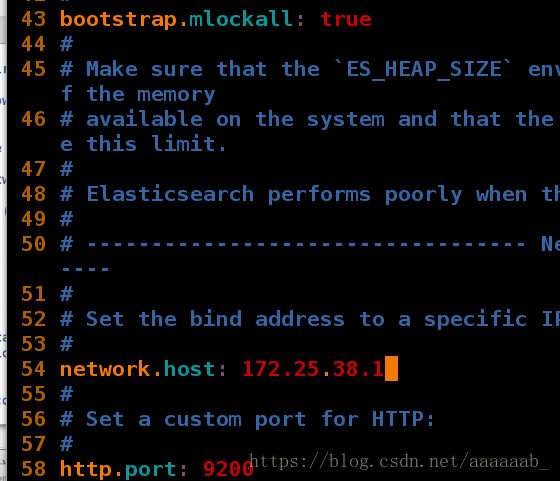

Set the host IP, enable HTTP port 9200, and allow query requests:

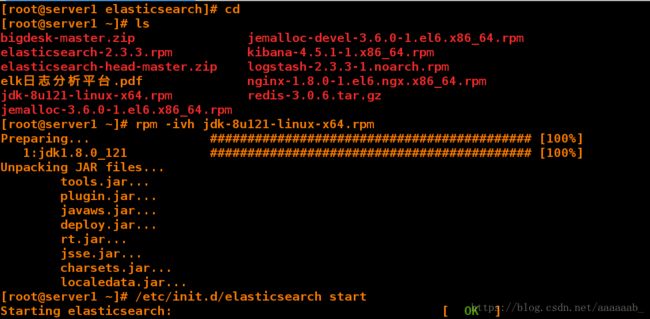

[root@server1 elasticsearch]# cd

[root@server1 ~]# ls

bigdesk-master.zip jemalloc-devel-3.6.0-1.el6.x86_64.rpm

elasticsearch-2.3.3.rpm kibana-4.5.1-1.x86_64.rpm

elasticsearch-head-master.zip logstash-2.3.3-1.noarch.rpm

elk日志分析平台.pdf nginx-1.8.0-1.el6.ngx.x86_64.rpm

jdk-8u121-linux-x64.rpm redis-3.0.6.tar.gz

jemalloc-3.6.0-1.el6.x86_64.rpm

[root@server1 ~]# rpm -ivh jdk-8u121-linux-x64.rpm    # install the JDK

[root@server1 ~]# /etc/init.d/elasticsearch start    # start the service

Starting elasticsearch: [ OK ]

[root@server1 ~]# netstat -antlp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 908/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 984/master

tcp 0 0 172.25.38.1:22 172.25.38.250:45526 ESTABLISHED 1070/sshd

tcp 0 0 ::ffff:172.25.38.1:9200 :::* LISTEN 1356/java

tcp 0 0 ::ffff:172.25.38.1:9300 :::* LISTEN 1356/java

tcp 0 0 :::22 :::* LISTEN 908/sshd

tcp 0 0 ::1:25 :::* LISTEN 984/master

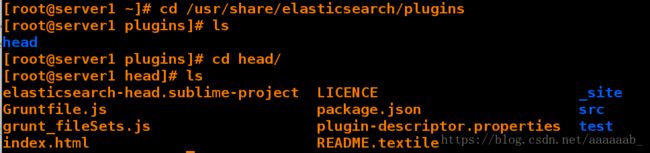

Open http://172.25.38.1:9200 in a browser to see the cluster information:

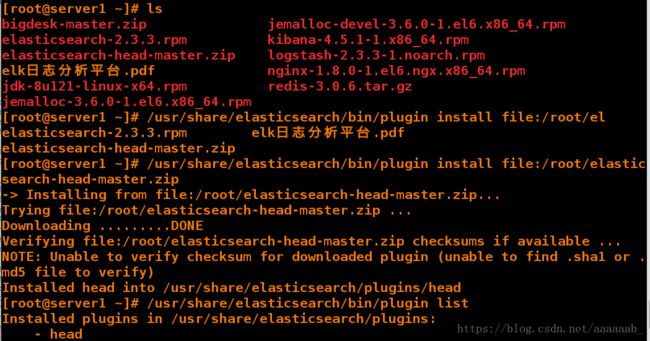

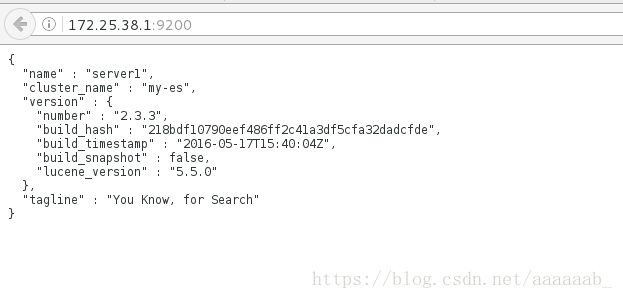

Install the elasticsearch-head plugin with the plugin tool:

[root@server1 ~]# ls

bigdesk-master.zip jemalloc-devel-3.6.0-1.el6.x86_64.rpm

elasticsearch-2.3.3.rpm kibana-4.5.1-1.x86_64.rpm

elasticsearch-head-master.zip logstash-2.3.3-1.noarch.rpm

elk日志分析平台.pdf nginx-1.8.0-1.el6.ngx.x86_64.rpm

jdk-8u121-linux-x64.rpm redis-3.0.6.tar.gz

jemalloc-3.6.0-1.el6.x86_64.rpm


[root@server1 ~]# /usr/share/elasticsearch/bin/plugin install file:/root/elasticsearch-head-master.zip    # install the plugin from the local zip file

[root@server1 ~]# /usr/share/elasticsearch/bin/plugin list    # list the installed plugins

Installed plugins in /usr/share/elasticsearch/plugins:

- head

[root@server1 ~]# cd /usr/share/elasticsearch/plugins

[root@server1 plugins]# ls

head

[root@server1 plugins]# cd head/

[root@server1 head]# ls

elasticsearch-head.sublime-project LICENCE _site

Gruntfile.js package.json src

grunt_fileSets.js plugin-descriptor.properties test

index.html README.textile

The head plugin's web page (http://172.25.38.1:9200/_plugin/head/) shows the node information:

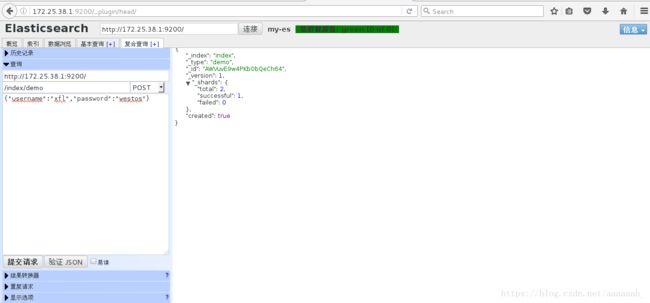

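Requests can be submitted from the compound query (Any Request) tab.

Health colors: green means all primary and replica shards are allocated; yellow means every primary shard is allocated but at least one replica is not; red means at least one primary shard is unallocated.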

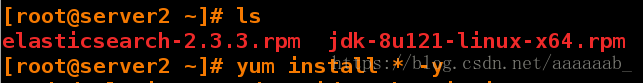
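The color rules above can be modeled in a few lines of Python. This is a simplified sketch, not Elasticsearch's own code, and the split of unassigned shards into primaries and replicas is an assumed input (the health API only reports a combined `unassigned_shards`). It also shows why a single node is usually yellow: Elasticsearch never places a replica on the same node as its primary, so with the default of one replica per shard the replicas stay unassigned until a second data node joins.

```python
def cluster_status(unassigned_primaries: int, unassigned_replicas: int) -> str:
    """Simplified model of the cluster health color."""
    if unassigned_primaries > 0:
        return "red"      # some data is unavailable
    if unassigned_replicas > 0:
        return "yellow"   # all data available, but redundancy is incomplete
    return "green"        # every primary and replica shard is allocated


# One node, 5 primaries with 1 replica each: the 5 replicas cannot be placed.
print(cluster_status(unassigned_primaries=0, unassigned_replicas=5))  # yellow
```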

Install Elasticsearch and the JDK on server2 and server3 as well:

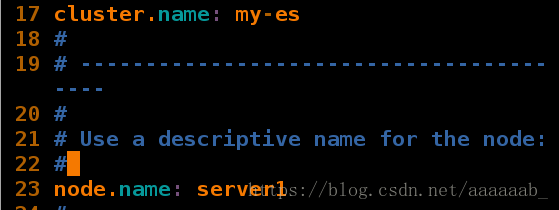

Configure the cluster nodes on server1:

[root@server1 ~]# cd /etc/elasticsearch/

[root@server1 elasticsearch]# ls

elasticsearch.yml logging.yml scripts

[root@server1 elasticsearch]# vim elasticsearch.yml    # configure the cluster nodes

[root@server1 elasticsearch]# scp elasticsearch.yml server2:/etc/elasticsearch/    # copy the file to the other nodes

root@server2's password:

elasticsearch.yml 100% 3197 3.1KB/s 00:00

[root@server1 elasticsearch]# scp elasticsearch.yml server3:/etc/elasticsearch/

root@server3's password:

elasticsearch.yml 100% 3197 3.1KB/s 00:00

[root@server1 elasticsearch]# /etc/init.d/elasticsearch reload    # restart the service after changing the configuration

Stopping elasticsearch: [ OK ]

Starting elasticsearch: [ OK ]

[root@server2 ~]# cd /etc/elasticsearch/

[root@server2 elasticsearch]# ls

elasticsearch.yml logging.yml scripts

[root@server2 elasticsearch]# vim elasticsearch.yml    # change the IP and the node name

[root@server2 elasticsearch]# /etc/init.d/elasticsearch start    # start the service

Starting elasticsearch: [ OK ]

[root@server3 ~]# cd /etc/elasticsearch/

[root@server3 elasticsearch]# ls

elasticsearch.yml logging.yml scripts

[root@server3 elasticsearch]# vim elasticsearch.yml    # change the IP and the node name

[root@server3 elasticsearch]# /etc/init.d/elasticsearch start    # start the service

Starting elasticsearch: [ OK ]


Refresh the web page to see how the nodes are distributed:

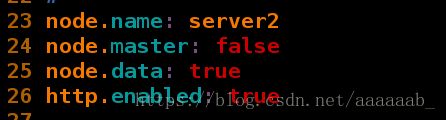

Configure node roles so that the master and the data nodes have separate responsibilities:

[root@server1 elasticsearch]# ls

elasticsearch.yml logging.yml scripts

[root@server1 elasticsearch]# vim elasticsearch.yml    # make the master node store no data

[root@server1 elasticsearch]# /etc/init.d/elasticsearch reload    # restart the service

Stopping elasticsearch: [ OK ]

Starting elasticsearch: [ OK ]

[root@server2 elasticsearch]# ls

elasticsearch.yml logging.yml scripts

[root@server2 elasticsearch]# vim elasticsearch.yml

[root@server2 elasticsearch]# /etc/init.d/elasticsearch reload

Stopping elasticsearch: [ OK ]

Starting elasticsearch: [ OK ]

[root@server3 elasticsearch]# ls

elasticsearch.yml logging.yml scripts

[root@server3 elasticsearch]# vim elasticsearch.yml

[root@server3 elasticsearch]# /etc/init.d/elasticsearch reload

Stopping elasticsearch: [ OK ]

Starting elasticsearch: [ OK ]
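The exact settings are only visible in the screenshots, so the sketch below is an assumption: it renders a per-node elasticsearch.yml using Elasticsearch 2.x key names (`node.master`, `node.data`, `discovery.zen.ping.unicast.hosts`), with server1 as a master that stores no data. The helpers `node_settings` and `render_yml` are hypothetical, and server2/server3 are assumed to use `master=False, data=True`. The health output below (3 nodes, 2 data nodes) is consistent with this layout.

```python
import json

# Hypothetical values mirroring this lab: cluster "my-es", three hosts.
CLUSTER_HOSTS = ["server1", "server2", "server3"]


def node_settings(name: str, ip: str, master: bool, data: bool) -> dict:
    """Per-node elasticsearch.yml settings (Elasticsearch 2.x key names)."""
    return {
        "cluster.name": "my-es",
        "node.name": name,
        "node.master": str(master).lower(),  # may be elected master
        "node.data": str(data).lower(),      # stores shards
        "network.host": ip,
        "http.port": 9200,
        # A JSON list is also valid YAML flow syntax.
        "discovery.zen.ping.unicast.hosts": json.dumps(CLUSTER_HOSTS),
    }


def render_yml(settings: dict) -> str:
    """Render flat dotted settings as YAML lines."""
    return "".join(f"{key}: {value}\n" for key, value in settings.items())


print(render_yml(node_settings("server1", "172.25.38.1", master=True, data=False)))
```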

Refresh the web page to see the master and data roles separated:

Check the cluster health with curl:

[root@server1 elasticsearch]# curl -XGET 'http://172.25.38.1:9200/_cluster/health?pretty=true'

{

"cluster_name" : "my-es",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 3,

"number_of_data_nodes" : 2,

"active_primary_shards" : 5,

"active_shards" : 10,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}
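The same health check can be scripted. The sketch below assumes Python 3 and the lab address; the helper names are hypothetical. `fetch_health` issues the same GET as the curl command, and `replicas_per_primary` works out the shard arithmetic from the output above: 5 primary shards and 10 active shards means each primary has one replica, which the two data nodes can hold on separate nodes, hence green.

```python
import json
import urllib.request

HEALTH_URL = "http://172.25.38.1:9200/_cluster/health"  # master node in this lab


def fetch_health(url: str = HEALTH_URL, timeout: float = 5.0) -> dict:
    """GET the cluster health document (same data as the curl call)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def replicas_per_primary(health: dict) -> float:
    """Average replica copies per primary shard: (active - primary) / primary."""
    primaries = health["active_primary_shards"]
    if primaries == 0:
        return 0.0
    return (health["active_shards"] - primaries) / primaries


# Values copied from the curl output above.
sample = {"status": "green", "active_primary_shards": 5, "active_shards": 10}
print(replicas_per_primary(sample))  # 1.0
```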

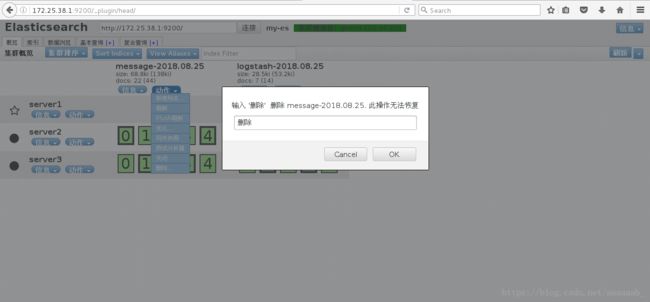

To delete an index in the web UI, click the index and follow the prompts:

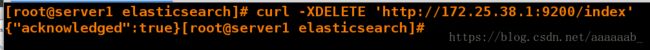

Delete an index from the command line (here the index is named index):

[root@server1 elasticsearch]# curl -XDELETE 'http://172.25.38.1:9200/index'

{"acknowledged":true}

The web UI confirms that the index has been deleted:

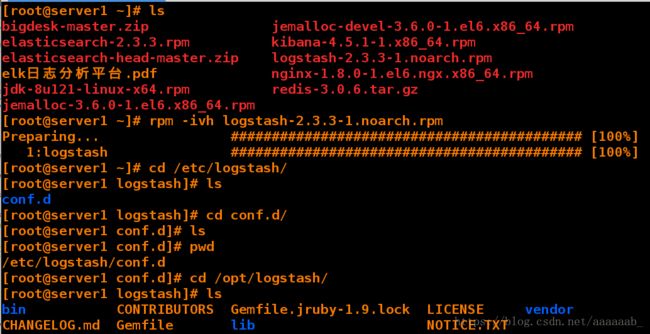

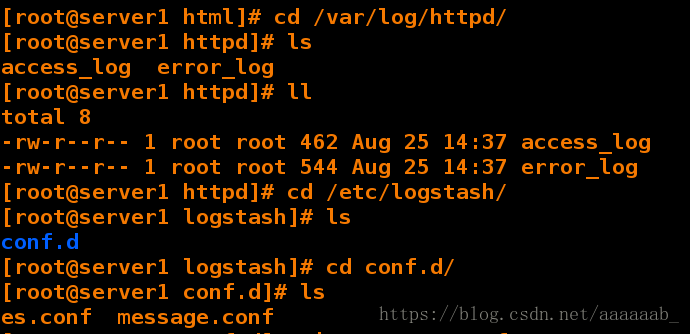
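For scripting, the curl DELETE call can be reproduced with Python's standard library. This is a minimal sketch with hypothetical helper names; `delete_index` needs a reachable cluster, and deleting an index removes its data permanently.

```python
import json
import urllib.request


def delete_index_request(host: str, index: str, port: int = 9200) -> urllib.request.Request:
    """Build the same request as: curl -XDELETE http://host:9200/index"""
    return urllib.request.Request(f"http://{host}:{port}/{index}", method="DELETE")


def delete_index(host: str, index: str, timeout: float = 5.0) -> dict:
    """Send the DELETE and return the parsed JSON reply."""
    req = delete_index_request(host, index)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)  # reply body such as {"acknowledged":true}


req = delete_index_request("172.25.38.1", "index")
print(req.get_method(), req.full_url)  # DELETE http://172.25.38.1:9200/index
```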

Configure Logstash, the log processing tool:

[root@server1 ~]# ls

bigdesk-master.zip jemalloc-devel-3.6.0-1.el6.x86_64.rpm

elasticsearch-2.3.3.rpm kibana-4.5.1-1.x86_64.rpm

elasticsearch-head-master.zip logstash-2.3.3-1.noarch.rpm

elk日志分析平台.pdf nginx-1.8.0-1.el6.ngx.x86_64.rpm

jdk-8u121-linux-x64.rpm redis-3.0.6.tar.gz

jemalloc-3.6.0-1.el6.x86_64.rpm

[root@server1 ~]# rpm -ivh logstash-2.3.3-1.noarch.rpm

Preparing... ########################################### [100%]

1:logstash ########################################### [100%]

[root@server1 ~]# cd /etc/logstash/

[root@server1 logstash]# ls

conf.d

[root@server1 logstash]# cd conf.d/

[root@server1 conf.d]# ls

[root@server1 conf.d]# pwd

/etc/logstash/conf.d

[root@server1 conf.d]# cd /opt/logstash/

[root@server1 logstash]# ls

bin CONTRIBUTORS Gemfile.jruby-1.9.lock LICENSE vendor

CHANGELOG.md Gemfile lib NOTICE.TXT

[root@server1 logstash]# cd bin/

[root@server1 bin]# ls

logstash logstash.lib.sh logstash-plugin.bat plugin.bat rspec.bat

logstash.bat logstash-plugin plugin rspec setup.bat

[root@server1 bin]# /opt/logstash/bin/logstash -e 'input { stdin {} } output{ stdout {} }'    # read events from the terminal and print them back to the terminal

Settings: Default pipeline workers: 1

Pipeline main started

hello

2018-08-25T02:42:39.801Z server1 hello

westos

2018-08-25T02:42:43.917Z server1 westos

xfl

2018-08-25T02:42:47.220Z server1 xfl

^CSIGINT received. Shutting down the agent. {:level=>:warn}    (press Ctrl+C to stop)

stopping pipeline {:id=>"main"}

Received shutdown signal, but pipeline is still waiting for in-flight events

to be processed. Sending another ^C will force quit Logstash, but this may cause

data loss. {:level=>:warn}

^CSIGINT received. Terminating immediately.. {:level=>:fatal}

[root@server1 bin]# /opt/logstash/bin/logstash -e 'input { stdin {} } output{ stdout { codec => rubydebug } }'    # print each event in the structured rubydebug format

Settings: Default pipeline workers: 1

Pipeline main started

hello

{

"message" => "hello",

"@version" => "1",

"@timestamp" => "2018-08-25T02:44:54.249Z",

"host" => "server1"

}

westos

{

"message" => "westos",

"@version" => "1",

"@timestamp" => "2018-08-25T02:44:57.160Z",

"host" => "server1"

}

^CSIGINT received. Shutting down the agent. {:level=>:warn}

stopping pipeline {:id=>"main"}

^CSIGINT received. Terminating immediately.. {:level=>:fatal}

^CSIGINT received. Terminating immediately.. {:level=>:fatal}

[root@server1 bin]# cd /etc/logstash/conf.d/

[root@server1 conf.d]# ls

[root@server1 conf.d]# vim es.conf

[root@server1 conf.d]# cat es.conf

input {

stdin {}

}

output {

elasticsearch {

hosts => ["172.25.38.1"]

index => "logstash-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}
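The `%{+YYYY.MM.dd}` part of the index name is filled in from the event's `@timestamp`, which Logstash stores in UTC. A small Python model of that naming rule (the helper `daily_index` is hypothetical) shows a consequence for this lab, whose clock runs at UTC+8: events logged before 08:00 local time go into the previous day's index.

```python
from datetime import datetime, timedelta, timezone


def daily_index(prefix: str, timestamp: datetime) -> str:
    """Model of index => "prefix-%{+YYYY.MM.dd}": the date is taken in UTC."""
    return f"{prefix}-{timestamp.astimezone(timezone.utc):%Y.%m.%d}"


cst = timezone(timedelta(hours=8))  # the lab's local time zone (UTC+8)
print(daily_index("logstash", datetime(2018, 8, 25, 10, 54, 13, tzinfo=cst)))  # logstash-2018.08.25
print(daily_index("logstash", datetime(2018, 8, 25, 7, 30, tzinfo=cst)))       # logstash-2018.08.24
```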

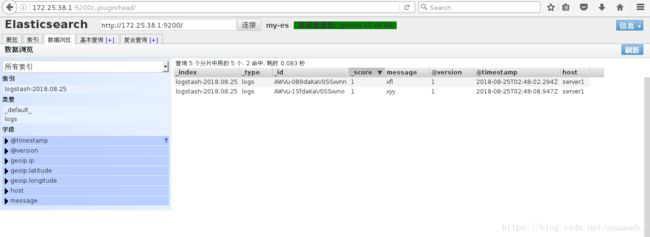

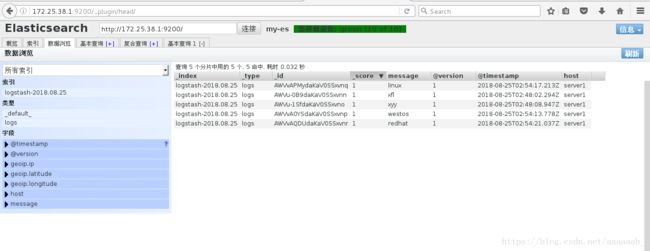

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf    # each line entered is printed in rubydebug format and indexed in Elasticsearch

Settings: Default pipeline workers: 1

Pipeline main started

westos

{

"message" => "westos",

"@version" => "1",

"@timestamp" => "2018-08-25T02:54:13.778Z",

"host" => "server1"

}

linux

{

"message" => "linux",

"@version" => "1",

"@timestamp" => "2018-08-25T02:54:17.213Z",

"host" => "server1"

}

redhat

{

"message" => "redhat",

"@version" => "1",

"@timestamp" => "2018-08-25T02:54:21.037Z",

"host" => "server1"

[root@server1 conf.d]# ls

es.conf

[root@server1 conf.d]# pwd

/etc/logstash/conf.d

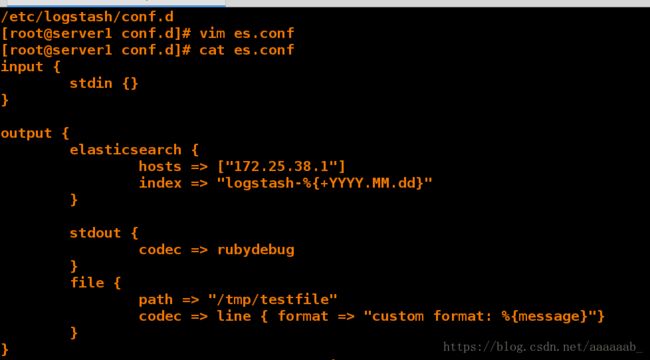

[root@server1 conf.d]# vim es.conf

[root@server1 conf.d]# cat es.conf

input {

stdin {}

}

output {

elasticsearch {

hosts => ["172.25.38.1"]

index => "logstash-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

file {

path => "/tmp/testfile"

codec => line { format => "custom format: %{message}"}

}

}
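The line codec's `format` option substitutes `%{field}` references with values from the event. The sketch below is a simplified model of that substitution, not Logstash's implementation: it handles plain top-level field names only (no `%{+date}` formats or `[nested][field]` references), and it leaves unknown references as literal text, which is how we understand Logstash behaves.

```python
import re

FIELD_REF = re.compile(r"%\{([^}]+)\}")


def sprintf(template: str, event: dict) -> str:
    """Replace each %{field} with the event's value; unknown references stay literal."""
    return FIELD_REF.sub(lambda m: str(event.get(m.group(1), m.group(0))), template)


event = {"message": "xfl", "host": "server1"}
print(sprintf("custom format: %{message}", event))  # custom format: xfl
```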

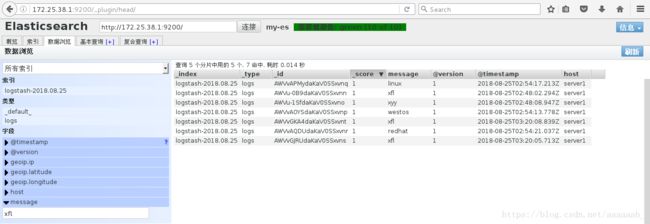

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf

Settings: Default pipeline workers: 1

Pipeline main started

xfl

{

"message" => "xfl",

"@version" => "1",

"@timestamp" => "2018-08-25T03:20:05.713Z",

"host" => "server1"

}

xfl

{

"message" => "xfl",

"@version" => "1",

"@timestamp" => "2018-08-25T03:20:08.839Z",

"host" => "server1"

}

^CSIGINT received. Shutting down the agent. {:level=>:warn}

stopping pipeline {:id=>"main"}

^CSIGINT received. Terminating immediately.. {:level=>:fatal}

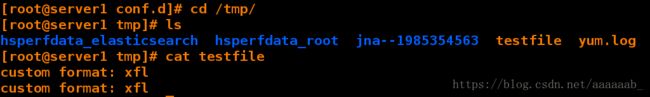

[root@server1 conf.d]# cd /tmp/

[root@server1 tmp]# ls

hsperfdata_elasticsearch hsperfdata_root jna--1985354563 testfile yum.log

[root@server1 tmp]# cat testfile    # the lines entered earlier appear in the file

custom format: xfl

custom format: xfl

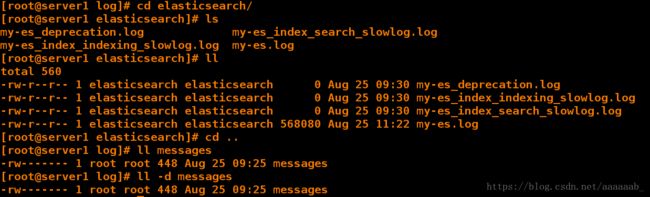

[root@server1 log]# pwd

/var/log

[root@server1 log]# ls

anaconda.ifcfg.log btmp lastlog secure-20180825

anaconda.log btmp-20180825 logstash spooler

anaconda.program.log cron maillog spooler-20180825

anaconda.storage.log cron-20180825 maillog-20180825 tallylog

anaconda.syslog dmesg messages wtmp

anaconda.yum.log dmesg.old messages-20180825 yum.log

audit dracut.log rhsm

boot.log elasticsearch secure

[root@server1 log]# cd elasticsearch/

[root@server1 elasticsearch]# ls

my-es_deprecation.log my-es_index_search_slowlog.log

my-es_index_indexing_slowlog.log my-es.log

[root@server1 elasticsearch]# ll

total 560

-rw-r--r-- 1 elasticsearch elasticsearch 0 Aug 25 09:30 my-es_deprecation.log

-rw-r--r-- 1 elasticsearch elasticsearch 0 Aug 25 09:30 my-es_index_indexing_slowlog.log

-rw-r--r-- 1 elasticsearch elasticsearch 0 Aug 25 09:30 my-es_index_search_slowlog.log

-rw-r--r-- 1 elasticsearch elasticsearch 568080 Aug 25 11:22 my-es.log

[root@server1 elasticsearch]# cd ..

[root@server1 log]# ll messages

-rw------- 1 root root 448 Aug 25 09:25 messages

[root@server1 log]# ll -d messages

-rw------- 1 root root 448 Aug 25 09:25 messages

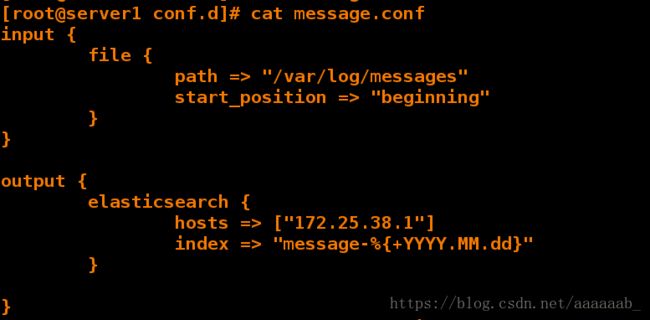

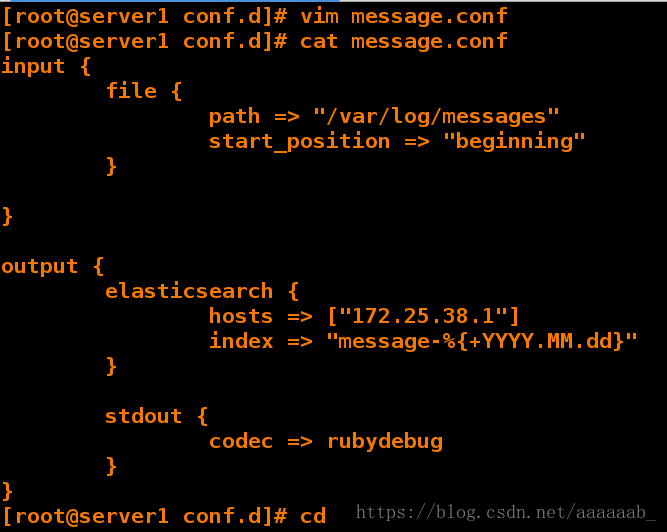

[root@server1 conf.d]# pwd

/etc/logstash/conf.d

[root@server1 conf.d]# ls

es.conf message.conf

[root@server1 conf.d]# vim message.conf

[root@server1 conf.d]# vim es.conf

[root@server1 conf.d]# vim message.conf

[root@server1 conf.d]# cat message.conf

input {

file {

path => "/var/log/messages"

start_position => "beginning"

}

}

output {

elasticsearch {

hosts => ["172.25.38.1"]

index => "message-%{+YYYY.MM.dd}"

}

}

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

Settings: Default pipeline workers: 1

Pipeline main started


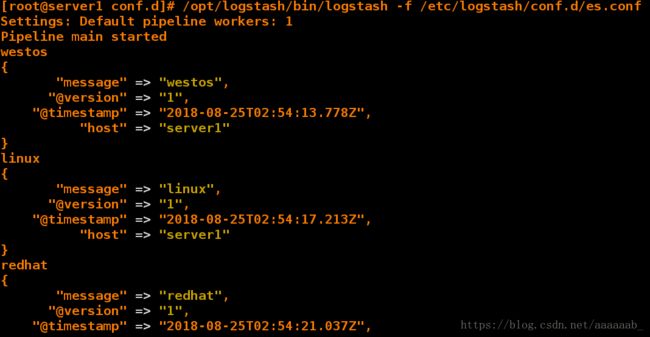

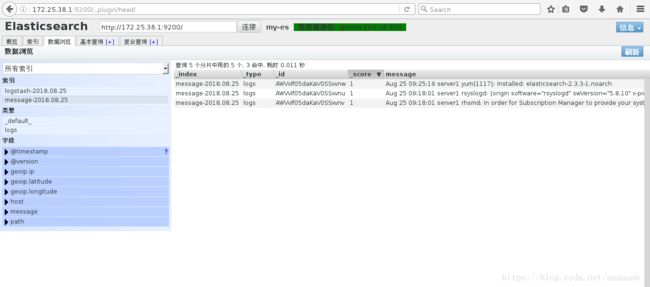

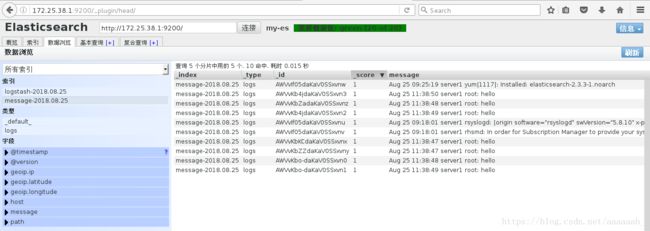

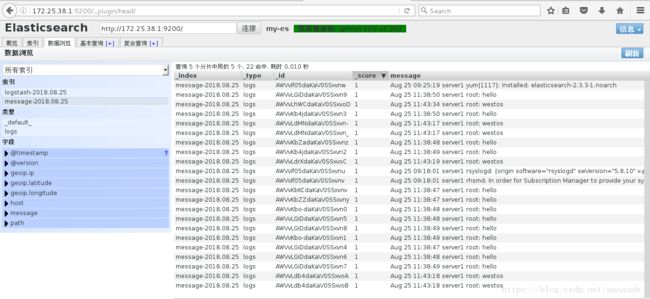

Refresh the web page to see the data shipped by Logstash:

The data browser shows the documents:

Open another terminal and write some log entries:

[root@server1 ~]# logger hello

[root@server1 ~]# logger hello

[root@server1 ~]# logger hello

[root@server1 ~]# logger hello

[root@server1 ~]# logger hello

[root@server1 ~]# logger hello

[root@server1 ~]# logger hello

The data browser in the web UI updates in real time:

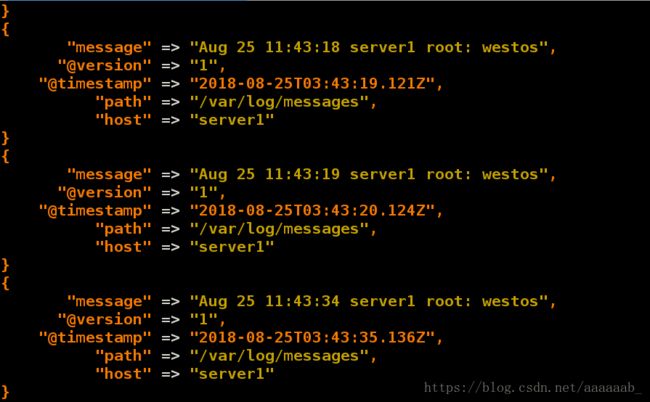

The first terminal shows the submitted events:

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

Settings: Default pipeline workers: 1

Pipeline main started

{

"message" => "Aug 25 11:38:47 server1 root: hello",

"@version" => "1",

"@timestamp" => "2018-08-25T03:41:43.157Z",

"path" => "/var/log/messages",

"host" => "server1"

}

{

"message" => "Aug 25 11:38:48 server1 root: hello",

"@version" => "1",

"@timestamp" => "2018-08-25T03:41:45.004Z",

"path" => "/var/log/messages",

"host" => "server1"

}

{

"message" => "Aug 25 11:38:48 server1 root: hello",

"@version" => "1",

"@timestamp" => "2018-08-25T03:41:45.005Z",

"path" => "/var/log/messages",

"host" => "server1"

}

{

"message" => "Aug 25 11:38:49 server1 root: hello",

"@version" => "1",

"@timestamp" => "2018-08-25T03:41:45.005Z",

"path" => "/var/log/messages",

"host" => "server1"

}

{

"message" => "Aug 25 11:38:49 server1 root: hello",

"@version" => "1",

"@timestamp" => "2018-08-25T03:41:45.005Z",

"path" => "/var/log/messages",

"host" => "server1"

}

{

"message" => "Aug 25 11:38:50 server1 root: hello",

"@version" => "1",

"@timestamp" => "2018-08-25T03:41:45.006Z",

"path" => "/var/log/messages",

"host" => "server1"

}

[root@server1 ~]# l.

. .bash_logout .cshrc .ssh

.. .bash_profile .oracle_jre_usage .tcshrc

.bash_history .bashrc .sincedb_452905a167cf4509fd08acb964fdb20c .viminfo

[root@server1 ~]# cat .sincedb_452905a167cf4509fd08acb964fdb20c

523024 0 64768 774

[root@server1 ~]# cat .sincedb_452905a167cf4509fd08acb964fdb20c    # the sincedb file stores the byte offset already read; only lines appended after it are shipped

523024 0 64768 774

[root@server1 ~]# logger westos    # write a new log entry

[root@server1 ~]# cat .sincedb_452905a167cf4509fd08acb964fdb20c    # the offset has changed

523024 0 64768 922
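Each line of a Logstash 2.x sincedb file has four columns: the inode, the major and minor device numbers of the file system, and the byte offset already read. The Python sketch below parses that format (helper names are hypothetical). Between the two `cat` commands the offset moved from 774 to 922, meaning Logstash consumed 148 more bytes. Note that `start_position => "beginning"` only applies to files that have no sincedb entry yet; to re-read a file from the start, delete the sincedb file, or for testing point the file input's `sincedb_path` option at /dev/null.

```python
from typing import List, NamedTuple


class SincedbEntry(NamedTuple):
    inode: int
    dev_major: int
    dev_minor: int
    offset: int  # bytes of the file already read


def parse_sincedb(text: str) -> List[SincedbEntry]:
    """Parse the four-column sincedb format used by the Logstash 2.x file input."""
    entries = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 4:
            entries.append(SincedbEntry(*(int(f) for f in fields)))
    return entries


def unread_bytes(entry: SincedbEntry, file_size: int) -> int:
    """Bytes appended since Logstash last read the file."""
    return max(file_size - entry.offset, 0)


before = parse_sincedb("523024 0 64768 774")[0]
print(unread_bytes(before, 922))  # 148
```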

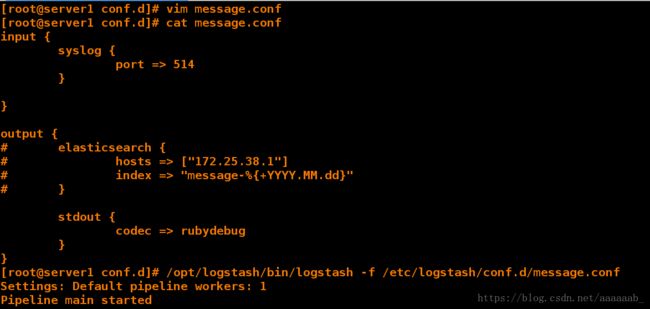

[root@server1 conf.d]# vim message.conf

[root@server1 conf.d]# cat message.conf

input {

syslog {

port => 514

}

}

output {

# elasticsearch {

# hosts => ["172.25.38.1"]

# index => "message-%{+YYYY.MM.dd}"

# }

stdout {

codec => rubydebug

}

}

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

Settings: Default pipeline workers: 1

Pipeline main started

[root@server1 ~]# netstat -antulp | grep :514

tcp 0 0 :::514 :::* LISTEN 2104/java

udp 0 0 :::514 :::* 2104/java

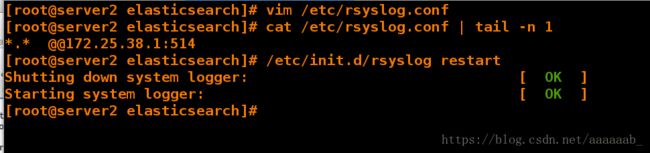

[root@server2 elasticsearch]# vim /etc/rsyslog.conf

[root@server2 elasticsearch]# cat /etc/rsyslog.conf | tail -n 1

*.* @@172.25.38.1:514    (forward all messages to server1; @@ means TCP, a single @ would mean UDP)

[root@server2 elasticsearch]# /etc/init.d/rsyslog restart    # restart the service

Shutting down system logger: [ OK ]

Starting system logger: [ OK ]
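rsyslog now forwards every message to server1. Because the Logstash syslog input listens on both TCP and UDP port 514 (see the netstat output above), a test message can also be built and sent by hand. The sketch below formats a BSD syslog (RFC 3164) line, where PRI = facility * 8 + severity; facility 1 (user) and severity 5 (notice) give 13, the default priority used by `logger`. The helpers are hypothetical, and `send_udp` assumes server1 is reachable.

```python
import socket
from datetime import datetime


def rfc3164(facility: int, severity: int, ts: datetime, host: str, tag: str, msg: str) -> str:
    """Format a BSD syslog line: <PRI>Mmm dd hh:mm:ss host tag: msg"""
    pri = facility * 8 + severity
    stamp = f"{ts:%b} {ts.day:>2} {ts:%H:%M:%S}"  # the day is space-padded
    return f"<{pri}>{stamp} {host} {tag}: {msg}"


def send_udp(line: str, server: str = "172.25.38.1", port: int = 514) -> None:
    """Send one message over UDP to the Logstash syslog input."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(line.encode("utf-8"), (server, port))


line = rfc3164(1, 5, datetime(2018, 8, 25, 11, 50, 24), "server2", "root", "hello world")
print(line)  # <13>Aug 25 11:50:24 server2 root: hello world
```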

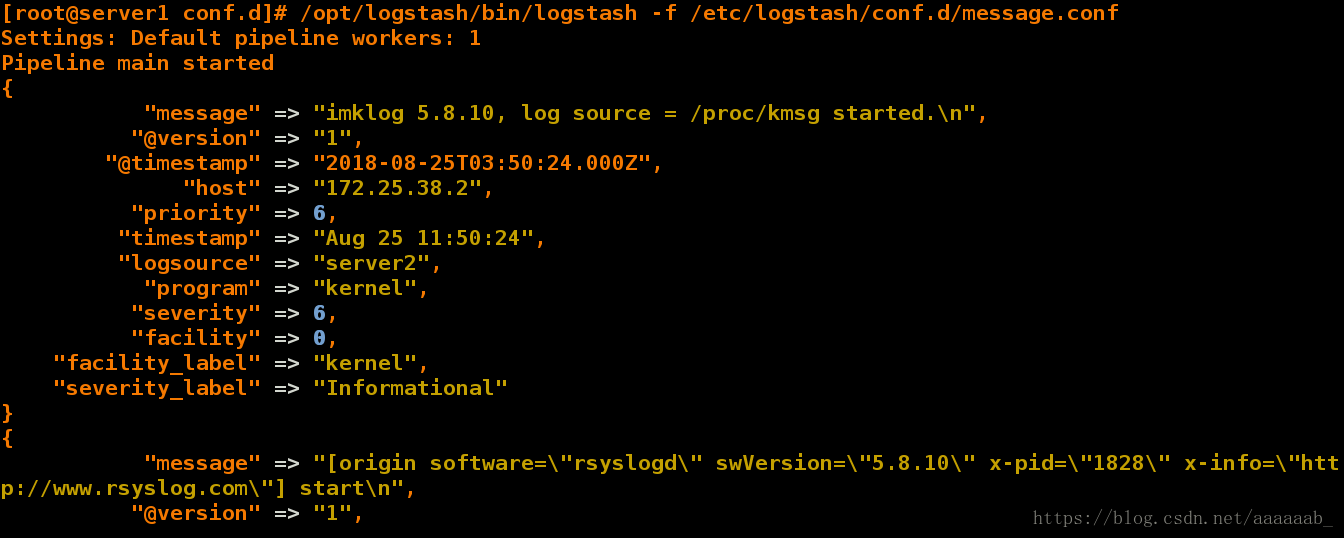

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

Settings: Default pipeline workers: 1

Pipeline main started

{

"message" => "imklog 5.8.10, log source = /proc/kmsg started.\n",

"@version" => "1",

"@timestamp" => "2018-08-25T03:50:24.000Z",

"host" => "172.25.38.2",

"priority" => 6,

"timestamp" => "Aug 25 11:50:24",

"logsource" => "server2",

"program" => "kernel",

"severity" => 6,

"facility" => 0,

"facility_label" => "kernel",

"severity_label" => "Informational"

}

{

"message" => "[origin software=\"rsyslogd\" swVersion=\"5.8.10\" x-pid=\"1828\" x-info=\"http://www.rsyslog.com\"] start\n",

"@version" => "1",

"@timestamp" => "2018-08-25T03:50:24.000Z",

"host" => "172.25.38.2",

"priority" => 46,

"timestamp" => "Aug 25 11:50:24",

"logsource" => "server2",

"program" => "rsyslogd",

"severity" => 6,

"facility" => 5,

"facility_label" => "syslogd",

"severity_label" => "Informational"

}
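The `priority`, `facility`, and `severity` fields above are related by PRI = facility * 8 + severity. A short Python check reproduces the two events: 6 splits into facility 0 (kernel) and severity 6 (Informational), and 46 into facility 5 (syslogd) and severity 6. The facility table is deliberately partial.

```python
SEVERITY_LABELS = ["Emergency", "Alert", "Critical", "Error",
                   "Warning", "Notice", "Informational", "Debug"]
# Partial facility table: only the two facilities seen in the output above.
FACILITY_LABELS = {0: "kernel", 5: "syslogd"}


def decode_pri(priority: int) -> tuple:
    """Split a syslog PRI value into (facility, severity)."""
    return divmod(priority, 8)


for pri in (6, 46):
    facility, severity = decode_pri(pri)
    print(pri, FACILITY_LABELS[facility], SEVERITY_LABELS[severity])
# 6 kernel Informational
# 46 syslogd Informational
```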

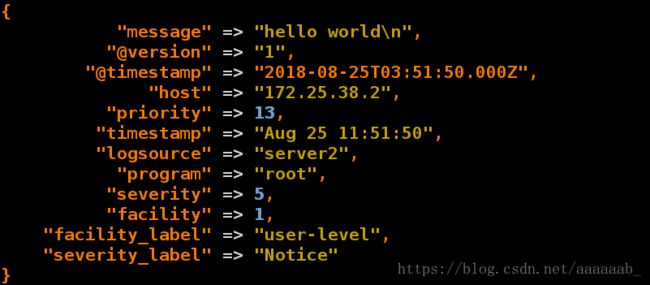

[root@server2 elasticsearch]# logger hello world

server1 receives the data in real time:

The data browser in the web UI shows the entries:

Delete the message index:

How log shipping works:

[root@server1 conf.d]# pwd

/etc/logstash/conf.d

[root@server1 conf.d]# ls

es.conf message.conf

[root@server1 conf.d]# vim message.conf

[root@server1 conf.d]# cat message.conf

input {

file {

path => "/var/log/messages"

start_position => "beginning"

}

}

output {

elasticsearch {

hosts => ["172.25.38.1"]

index => "message-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

[root@server1 conf.d]# cd

[root@server1 ~]# logger helloworld    # append a new line; Logstash resumes from the offset stored in .sincedb_452905a167cf4509fd08acb964fdb20c and ships only the new lines

[root@server1 ~]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

Settings: Default pipeline workers: 1

Pipeline main started

{

"message" => "Aug 25 13:38:02 server1 kernel: Clocksource tsc unstable (delta = -1101891417301 ns)",

"@version" => "1",

"@timestamp" => "2018-08-25T05:59:45.358Z",

"path" => "/var/log/messages",

"host" => "server1"

}

{

"message" => "Aug 25 13:58:16 server1 root: helloworld",

"@version" => "1",

"@timestamp" => "2018-08-25T05:59:51.707Z",

"path" => "/var/log/messages",

"host" => "server1"

}

[root@server1 ~]# cat /var/log/elasticsearch/my-es.log    # multi-line entries such as Java stack traces make this log very messy

[root@server1 ~]# vim /etc/logstash/conf.d/message.conf

[root@server1 ~]# cat /etc/logstash/conf.d/message.conf

input {

file {

path => "/var/log/elasticsearch/my-es.log"    # collect the Elasticsearch log

start_position => "beginning"    # read from the start of the file

}

}

filter {

multiline {

# type => "type"

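pattern => "^\["    # with negate => true and what => "previous", lines that do NOT start with "[" are appended to the previous event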

negate => true

what => "previous"

}

}

output {

elasticsearch {

hosts => ["172.25.38.1"]

index => "es-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}
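With `negate => true` and `what => "previous"`, any line that does not match `^\[` is appended to the event before it, so a stack trace stays attached to the log line that produced it. A minimal Python model of that rule follows; the function and the sample lines are hypothetical.

```python
import re
from typing import Iterable, List


def merge_multiline(lines: Iterable[str], pattern: str = r"^\[", negate: bool = True) -> List[str]:
    """Model of multiline { pattern => ..., negate => ..., what => "previous" }."""
    regex = re.compile(pattern)
    events: List[str] = []
    for line in lines:
        matches = regex.search(line) is not None
        continuation = not matches if negate else matches
        if continuation and events:
            events[-1] += "\n" + line   # glue onto the previous event
        else:
            events.append(line)         # start a new event
    return events


sample = [
    "[2018-08-25 13:38:02,101][WARN ][transport] send failed",
    "java.lang.NullPointerException",
    "\tat org.example.Demo.run(Demo.java:42)",
    "[2018-08-25 13:38:03,202][INFO ][node] started",
]
for event in merge_multiline(sample):
    print(repr(event))
```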

Run it and watch the lines being merged:

[root@server1 ~]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

Settings: Default pipeline workers: 1

Pipeline main started

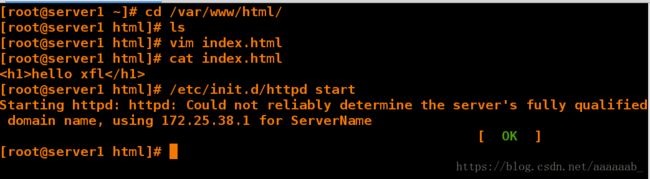

[root@server1 ~]# yum install httpd -y    # install the httpd service

[root@server1 ~]# cd /var/www/html/

[root@server1 html]# ls

[root@server1 html]# vim index.html

[root@server1 html]# cat index.html    # the test page

hello xfl

[root@server1 html]# /etc/init.d/httpd start    # start the service

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.38.1 for ServerName

[ OK ]

[root@server1 html]# cd /var/log/httpd/

[root@server1 httpd]# ls

access_log error_log

[root@server1 httpd]# ll

total 8

-rw-r--r-- 1 root root 462 Aug 25 14:37 access_log

-rw-r--r-- 1 root root 544 Aug 25 14:37 error_log

[root@server1 httpd]# cd /etc/logstash/

[root@server1 logstash]# ls

conf.d

[root@server1 logstash]# cd conf.d/

[root@server1 conf.d]# ls

es.conf message.conf

[root@server1 conf.d]# vim message.conf

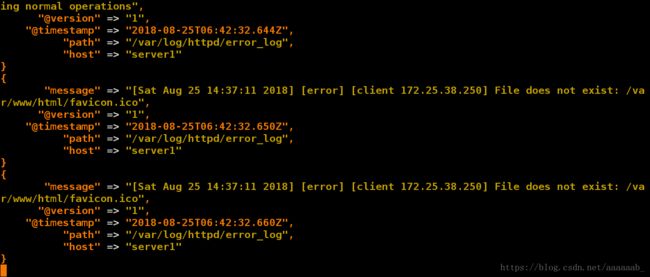

[root@server1 conf.d]# cat message.conf

input {

file {

path => ["/var/log/httpd/access_log","/var/log/httpd/error_log"]

start_position => "beginning"

}

}

#filter {

# multiline {

## type => "type"

# pattern => "^\["

# negate => true

# what => "previous"

# }

#}

output {

elasticsearch {

hosts => ["172.25.38.1"]

index => "apache-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

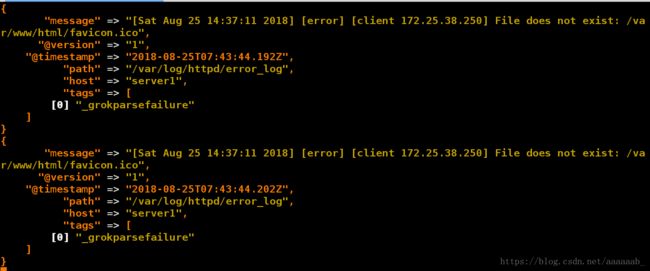

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

Settings: Default pipeline workers: 1

Pipeline main started

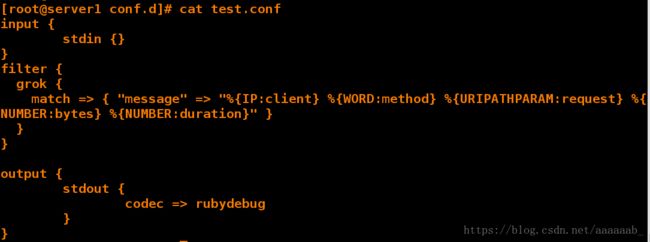

[root@server1 conf.d]# pwd

/etc/logstash/conf.d

[root@server1 conf.d]# ls

es.conf message.conf test.conf

[root@server1 conf.d]# vim test.conf

[root@server1 conf.d]# cat test.conf

input {

stdin {}

}

filter {

grok {

match => { "message" => "%{IP:client} %{WORD:method} %{URIPATHPARAM:request} %{NUMBER:bytes} %{NUMBER:duration}" }    # define the expected line format

}

}

output {

stdout {

codec => rubydebug

}

}

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/test.conf

Settings: Default pipeline workers: 1

Pipeline main started

55.3.244.1 GET /index.html 15824 0.043    (input typed in the expected format)

{

"message" => "55.3.244.1 GET /index.html 15824 0.043",

"@version" => "1",

"@timestamp" => "2018-08-25T07:34:15.257Z",    (the fields below are split out automatically)

"host" => "server1",

"client" => "55.3.244.1",

"method" => "GET",

"request" => "/index.html",

"bytes" => "15824",

"duration" => "0.043"

}
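grok is essentially a library of named regular expressions. The sketch below approximates the test pattern with hand-written regexes; these are simplified stand-ins for grok's IP, WORD, URIPATHPARAM, and NUMBER patterns, not their real definitions. As in the output above, captured values are strings; in grok, a suffix such as `%{NUMBER:bytes:int}` converts them.

```python
import re
from typing import Dict, Optional

# Simplified stand-ins for the grok patterns (the real ones are more thorough).
IP = r"\d{1,3}(?:\.\d{1,3}){3}"
WORD = r"\w+"
URIPATHPARAM = r"/\S*"
NUMBER = r"[+-]?\d+(?:\.\d+)?"

LINE = re.compile(
    rf"(?P<client>{IP}) (?P<method>{WORD}) (?P<request>{URIPATHPARAM}) "
    rf"(?P<bytes>{NUMBER}) (?P<duration>{NUMBER})"
)


def parse(message: str) -> Optional[Dict[str, str]]:
    """Return the named captures, or None when the line does not match."""
    match = LINE.search(message)
    return match.groupdict() if match else None


print(parse("55.3.244.1 GET /index.html 15824 0.043"))
```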

[root@server1 conf.d]# ls

es.conf message.conf test.conf

[root@server1 conf.d]# pwd

/etc/logstash/conf.d

[root@server1 conf.d]# vim message.conf

[root@server1 conf.d]# cat message.conf

input {

file {

path => ["/var/log/httpd/access_log","/var/log/httpd/error_log"]

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{COMBINEDAPACHELOG}" }

}

}

output {

elasticsearch {

hosts => ["172.25.38.1"]

index => "apache-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}
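`%{COMBINEDAPACHELOG}` splits an access-log line into fields such as clientip, verb, request, response, bytes, referrer, and agent. The regex below is a rough approximation written for illustration (the real grok pattern is more permissive), and the sample line is hypothetical. Lines from error_log use a different format and do not match; grok tags such events with `_grokparsefailure`.

```python
import re
from typing import Dict, Optional

# Rough approximation of %{COMBINEDAPACHELOG}; field names follow the grok pattern.
COMBINED = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\w+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'(?P<referrer>"[^"]*") (?P<agent>"[^"]*")'
)


def parse_access_line(line: str) -> Optional[Dict[str, str]]:
    """Return the captured fields, or None for lines in another format."""
    match = COMBINED.search(line)
    return match.groupdict() if match else None


sample = ('172.25.38.250 - - [25/Aug/2018:14:37:01 +0800] '
          '"GET /index.html HTTP/1.1" 200 10 "-" "curl/7.29.0"')
fields = parse_access_line(sample)
print(fields["verb"], fields["request"], fields["response"])  # GET /index.html 200
```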

[root@server1 conf.d]# ls -i /var/log/httpd/error_log

523011 /var/log/httpd/error_log

[root@server1 conf.d]# ls -i /var/log/httpd/access_log

527862 /var/log/httpd/access_log

[root@server1 conf.d]# cd

[root@server1 ~]# cat .sincedb_ef0edb00900aaa8dcb520b280cb2fb7d

527862 0 64768 462

523011 0 64768 544

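[root@server1 ~]# rm -f .sincedb_ef0edb00900aaa8dcb520b280cb2fb7d    # the stored offsets (462, 544) equal the file sizes, so both files count as fully read; delete the sincedb file or append new entries, otherwise nothing is shipped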

[root@server1 ~]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

Settings: Default pipeline workers: 1

Pipeline main started

The httpd log entries now appear in the web UI:

Data view:

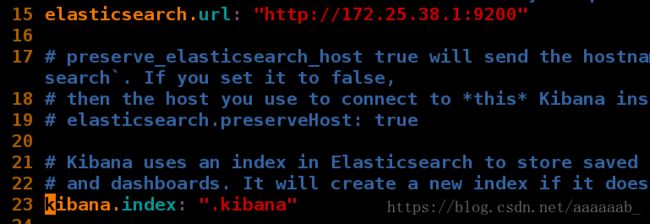

Install Kibana on server3:

[root@server3 ~]# ls

elasticsearch-2.3.3.rpm jdk-8u121-linux-x64.rpm kibana-4.5.1-1.x86_64.rpm

[root@server3 ~]# rpm -ivh kibana-4.5.1-1.x86_64.rpm

Preparing... ########################################### [100%]

1:kibana ########################################### [100%]

[root@server3 ~]# cd /opt/

[root@server3 opt]# ls

kibana

[root@server3 opt]# cd kibana/

[root@server3 kibana]# ls

bin installedPlugins node optimize README.txt webpackShims

config LICENSE.txt node_modules package.json src

[root@server3 kibana]# cd config/

[root@server3 config]# ls

kibana.yml

[root@server3 config]# vim kibana.yml    # point elasticsearch.url at the master's IP and enable the kibana.index setting

[root@server3 config]# /etc/init.d/kibana start    # start the service

kibana started

[root@server3 config]# netstat -antlp | grep :5601    # check that port 5601 is listening

tcp 0 0 0.0.0.0:5601 0.0.0.0:* LISTEN 1921/node

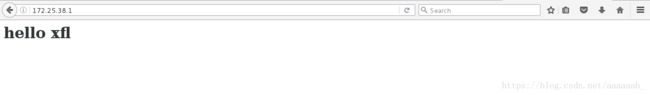

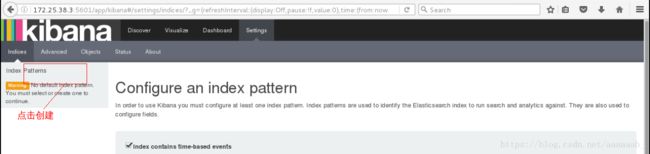

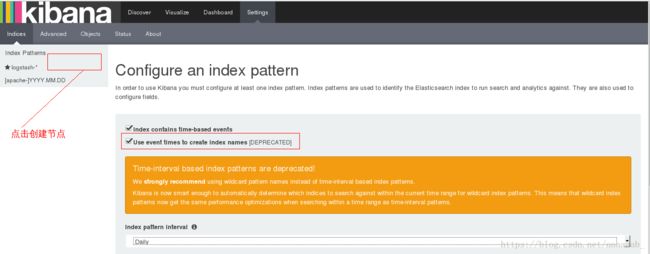

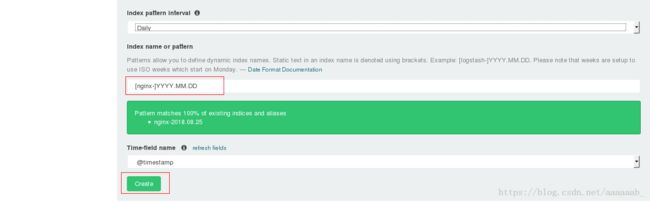

Open 172.25.38.3:5601 in a browser to load Kibana:

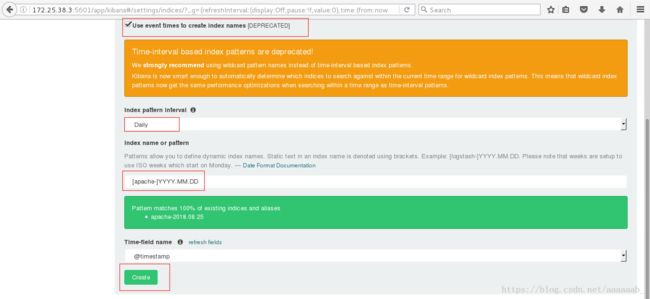

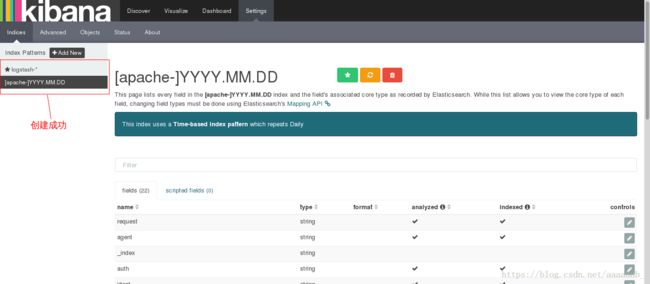

Scroll to the bottom and click Create:

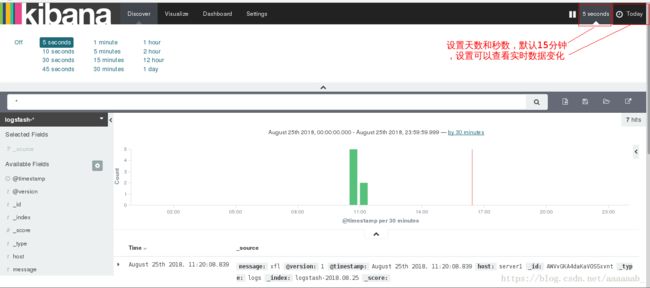
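A Kibana index pattern such as `nginx-*` selects every index whose name matches the wildcard. The sketch below approximates that with Python's `fnmatchcase` (which, unlike Kibana, also treats `?` and `[...]` specially); the index list is illustrative, built from the index names used in this walkthrough.

```python
from fnmatch import fnmatchcase
from typing import List


def matching_indices(pattern: str, indices: List[str]) -> List[str]:
    """Approximate which indices a Kibana index pattern covers."""
    return [name for name in indices if fnmatchcase(name, pattern)]


indices = ["nginx-2018.08.25", "apache-2018.08.25", "es-2018.08.25", ".kibana"]
print(matching_indices("nginx-*", indices))  # ['nginx-2018.08.25']
```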

Set the time range:

Set up a Redis message queue between the components:

[root@server2 ~]# ls

elasticsearch-2.3.3.rpm jdk-8u121-linux-x64.rpm redis-3.0.6.tar.gz

[root@server2 ~]# tar zxf redis-3.0.6.tar.gz

[root@server2 ~]# cd redis-3.0.6

[root@server2 redis-3.0.6]# ls

00-RELEASENOTES COPYING Makefile redis.conf runtest-sentinel tests

BUGS deps MANIFESTO runtest sentinel.conf utils

CONTRIBUTING INSTALL README runtest-cluster src

[root@server2 redis-3.0.6]# yum install -y gcc    # install the gcc compiler

[root@server2 redis-3.0.6]# make && make install    # build and install from source

[root@server2 redis-3.0.6]# ls

00-RELEASENOTES COPYING Makefile redis.conf runtest-sentinel tests

BUGS deps MANIFESTO runtest sentinel.conf utils

CONTRIBUTING INSTALL README runtest-cluster src

[root@server2 redis-3.0.6]# cd utils/

[root@server2 utils]# ./install_server.sh    # run the interactive installer

Welcome to the redis service installer

This script will help you easily set up a running redis server

Please select the redis port for this instance: [6379]

Selecting default: 6379

Please select the redis config file name [/etc/redis/6379.conf]

Selected default - /etc/redis/6379.conf

Please select the redis log file name [/var/log/redis_6379.log]

Selected default - /var/log/redis_6379.log

Please select the data directory for this instance [/var/lib/redis/6379]

Selected default - /var/lib/redis/6379

Please select the redis executable path [/usr/local/bin/redis-server]

Selected config:

Port : 6379

Config file : /etc/redis/6379.conf

Log file : /var/log/redis_6379.log

Data dir : /var/lib/redis/6379

Executable : /usr/local/bin/redis-server

Cli Executable : /usr/local/bin/redis-cli

Is this ok? Then press ENTER to go on or Ctrl-C to abort.

Copied /tmp/6379.conf => /etc/init.d/redis_6379

Installing service...

Successfully added to chkconfig!

Successfully added to runlevels 345!

Starting Redis server...

Installation successful!

[root@server2 utils]# netstat -antlp|grep :6379    # check that Redis is listening

tcp 0 0 0.0.0.0:6379 0.0.0.0:* LISTEN 4860/redis-server *

tcp 0 0 :::6379 :::* LISTEN 4860/redis-server *

[root@server1 ~]# /etc/init.d/httpd stop    # stop Apache so that nginx can use port 80

Stopping httpd: [ OK ]

[root@server1 ~]# rpm -ivh nginx-1.8.0-1.el6.ngx.x86_64.rpm    # install nginx

warning: nginx-1.8.0-1.el6.ngx.x86_64.rpm: Header V4 RSA/SHA1 Signature, key ID 7bd9bf62: NOKEY

Preparing... ########################################### [100%]

1:nginx ########################################### [100%]

----------------------------------------------------------------------

Thanks for using nginx!

Please find the official documentation for nginx here:

* http://nginx.org/en/docs/

Commercial subscriptions for nginx are available on:

* http://nginx.com/products/

----------------------------------------------------------------------

[root@server1 ~]# cd /etc/nginx/

[root@server1 nginx]# ls

conf.d koi-utf mime.types scgi_params win-utf

fastcgi_params koi-win nginx.conf uwsgi_params

[root@server1 nginx]# cd /etc/logstash/conf.d/

[root@server1 conf.d]# ls

es.conf message.conf test.conf

[root@server1 conf.d]# cp message.conf nginx.conf

[root@server1 conf.d]# ls

es.conf message.conf nginx.conf test.conf

[root@server1 conf.d]# cat nginx.conf

input {

file {

path => "/var/log/nginx/access.log"

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{COMBINEDAPACHELOG}" }

}

}

output {

redis {

host => ["172.25.38.2"]    # the Redis server (server2)

port => 6379    # Redis default port

data_type => "list"

key => "logstash:redis"

}

stdout {

codec => rubydebug

}

}
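With `data_type => "list"`, the redis output appends each event, JSON-encoded, to the list named by `key`, and a redis input reading the same key pops events from the head, so the list acts as a FIFO buffer between the shipper (server1) and the indexer (server2). As far as we know the plugins use RPUSH and BLPOP; the class below is only an in-memory stand-in that illustrates the ordering, not a Redis client. On a live server, `redis-cli llen logstash:redis` shows how many events are waiting.

```python
import json
from collections import deque
from typing import Optional


class ListQueue:
    """Hypothetical in-memory stand-in for the Redis list "logstash:redis"."""

    def __init__(self) -> None:
        self._items = deque()

    def rpush(self, value: str) -> int:
        self._items.append(value)  # shipper appends to the tail
        return len(self._items)

    def lpop(self) -> Optional[str]:
        # indexer takes from the head; None when the list is empty
        return self._items.popleft() if self._items else None


log_queue = ListQueue()
for n in range(3):
    log_queue.rpush(json.dumps({"message": f"request {n}"}))
print(json.loads(log_queue.lpop())["message"])  # request 0
```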

Run the pipeline to test it:

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/nginx.conf

Generate load from the physical host:

[kiosk@foundation38 Desktop]$ ab -c 1 -n 10 http://172.25.38.1/index.html    # target the master (server1)

This is ApacheBench, Version 2.3 <$Revision: 1430300 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking 172.25.38.1 (be patient).....done

Server Software: nginx/1.8.0

Server Hostname: 172.25.38.1

Server Port: 80

Document Path: /index.html

Document Length: 612 bytes

Concurrency Level: 1

Time taken for tests: 0.027 seconds

Complete requests: 10

Failed requests: 0

Write errors: 0

Total transferred: 8440 bytes

HTML transferred: 6120 bytes

Requests per second: 363.99 [#/sec] (mean)

Time per request: 2.747 [ms] (mean)

Time per request: 2.747 [ms] (mean, across all concurrent requests)

Transfer rate: 300.01 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 0 0.1 0 1

Processing: 0 2 5.3 1 17

Waiting: 0 2 5.3 0 17

Total: 1 3 5.4 1 18

Percentage of the requests served within a certain time (ms)

50% 1

66% 1

75% 1

80% 2

90% 18

95% 18

98% 18

99% 18

100% 18 (longest request)

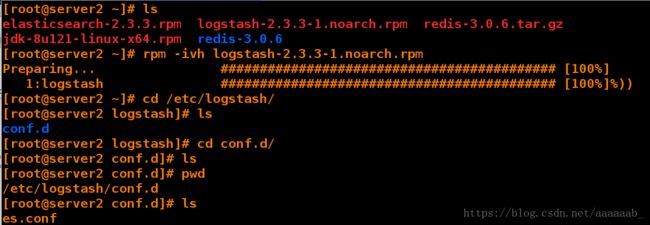

[root@server2 ~]# ls

elasticsearch-2.3.3.rpm logstash-2.3.3-1.noarch.rpm redis-3.0.6.tar.gz

jdk-8u121-linux-x64.rpm redis-3.0.6

[root@server2 ~]# rpm -ivh logstash-2.3.3-1.noarch.rpm

Preparing... ########################################### [100%]

1:logstash ########################################### [100%]

[root@server2 ~]# cd /etc/logstash/

[root@server2 logstash]# ls

conf.d

[root@server2 logstash]# cd conf.d/

[root@server2 conf.d]# ls

[root@server2 conf.d]# pwd

/etc/logstash/conf.d

[root@server2 conf.d]# ls

es.conf

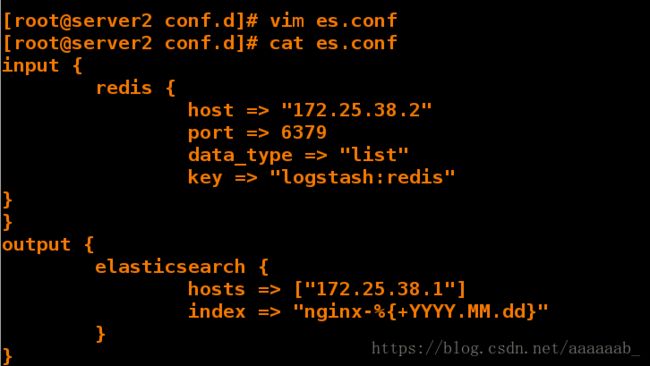

[root@server2 conf.d]# vim es.conf

[root@server2 conf.d]# cat es.conf

input {

redis {

host => "172.25.38.2"    # the Redis server IP

port => 6379    # the Redis port

data_type => "list"

key => "logstash:redis"

}

}

output {

elasticsearch {

hosts => ["172.25.38.1"]

index => "nginx-%{+YYYY.MM.dd}"

}

}

[root@server2 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf

Settings: Default pipeline workers: 1

Pipeline main started

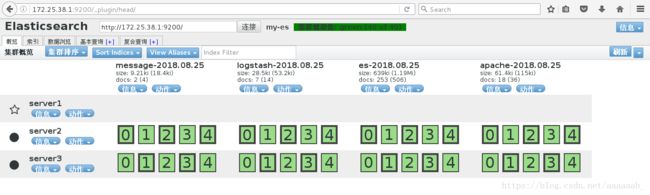

The web UI now shows the nginx and Kibana indices:

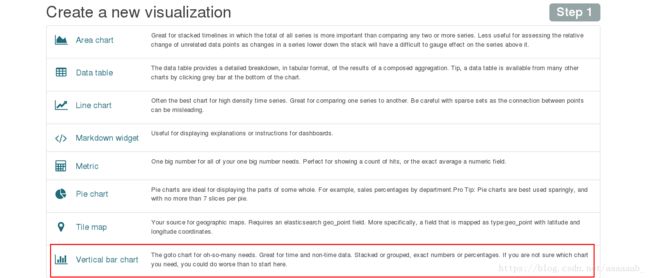

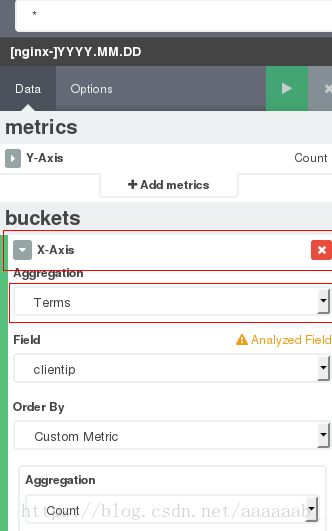

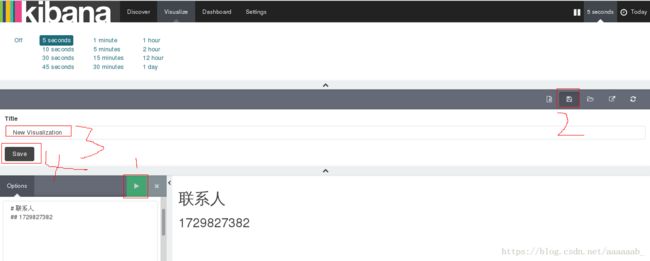

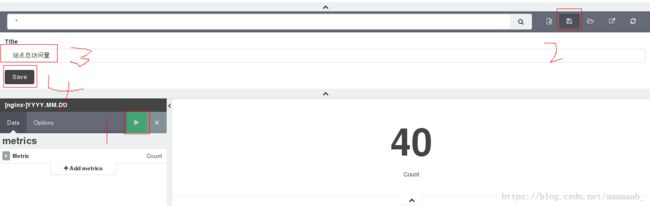

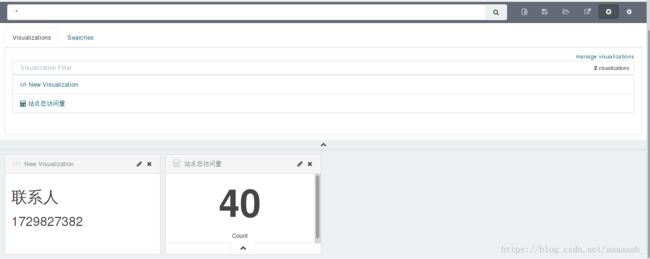

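Build charts to display the log data:

Both charts are created:

The data changes whenever someone visits the site:

Run another load test from the physical host: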

[kiosk@foundation38 Desktop]$ ab -c 1 -n 10 http://172.25.38.1/index.html

This is ApacheBench, Version 2.3 <$Revision: 1430300 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking 172.25.38.1 (be patient).....done

Server Software: nginx/1.8.0

Server Hostname: 172.25.38.1

Server Port: 80

Document Path: /index.html

Document Length: 612 bytes

Concurrency Level: 1

Time taken for tests: 0.013 seconds

Complete requests: 10

Failed requests: 0

Write errors: 0

Total transferred: 8440 bytes

HTML transferred: 6120 bytes

Requests per second: 769.53 [#/sec] (mean)

Time per request: 1.299 [ms] (mean)

Time per request: 1.299 [ms] (mean, across all concurrent requests)

Transfer rate: 634.26 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 1 0.3 1 1

Processing: 1 1 0.2 1 1

Waiting: 0 1 0.2 0 1

Total: 1 1 0.3 1 2

ERROR: The median and mean for the waiting time are more than twice the standard

deviation apart. These results are NOT reliable.

Percentage of the requests served within a certain time (ms)

50% 1

66% 1

75% 1

80% 1

90% 2

95% 2

98% 2

99% 2

100% 2 (longest request)