TensorFlow 2.0 Notes 2: Implementing Linear Regression with NumPy

| Hands-on linear regression with NumPy |

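Contents

- 1. Approach

- 1.1 Step 1: Compute the loss function

- 1.2 Step 2: Gradient of the loss function

- 1.3 Step 3: Set w = w' and loop

- 2. Full code

- 2.1 Program output

- 2.2 Dataset used in the experiment

- 2.3 Visualization with matplotlib

- 2.4 Understanding linear regression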

1. Approach

- Continuous-value prediction (regression)

- Find the partial derivatives of the loss with respect to $w$ and $b$.
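For reference, the quantities used in the three steps can be written out explicitly. This is a sketch of the standard mean-squared-error setup that the code in this article implements. The symbols $L$ (loss), $N$ (number of data points) and $\eta$ (learning rate) are notation chosen here for illustration, not taken from the original.

$$
L(w, b) = \frac{1}{N} \sum_{i=1}^{N} \bigl(y_i - (w x_i + b)\bigr)^2
$$

$$
\frac{\partial L}{\partial b} = \frac{2}{N} \sum_{i=1}^{N} \bigl((w x_i + b) - y_i\bigr),
\qquad
\frac{\partial L}{\partial w} = \frac{2}{N} \sum_{i=1}^{N} \bigl((w x_i + b) - y_i\bigr)\, x_i
$$

$$
b' = b - \eta \, \frac{\partial L}{\partial b},
\qquad
w' = w - \eta \, \frac{\partial L}{\partial w}
$$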

1.1 Step 1: Compute the loss function

# Compute the loss for the line y = w*x + b
def computer_error_for_line_given_points(b, w, points):
    totalError = 0
    for i in range(0, len(points)):
        x = points[i, 0]  # NumPy indexing, same as points[i][0]: row i of column 0
        y = points[i, 1]
        # accumulate the squared error of this point
        totalError += (y - (w * x + b)) ** 2
    # average loss over all points (mean squared error)
    return totalError / float(len(points))
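The explicit loop can also be written with NumPy array operations. The following is a minimal sketch, not the article's own code: the function name `mse_vectorized` and the array `demo_points` are hypothetical and use hand-made values rather than the dataset from section 2.2.

```python
import numpy as np

def mse_vectorized(b, w, points):
    """Mean squared error of y = w*x + b over an (N, 2) array of (x, y) rows."""
    x = points[:, 0]             # first column: inputs
    y = points[:, 1]             # second column: targets
    residuals = y - (w * x + b)  # one residual per data point
    return float(np.mean(residuals ** 2))

# Hand-made points lying exactly on y = 2x (hypothetical values)
demo_points = np.array([[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]])
print(mse_vectorized(0.0, 2.0, demo_points))  # perfect fit, so the loss is 0.0
print(mse_vectorized(0.0, 0.0, demo_points))  # predicting 0 everywhere gives a larger loss
```

Both versions compute the same quantity. The vectorized form avoids the Python-level loop, which tends to matter more as the number of points grows.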

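1.2 Step 2: Gradient of the loss function

# Compute the gradients and take one update step for b and w
def step_gradient(b_current, w_current, points, learningRate):
    b_gradient = 0
    w_gradient = 0
    N = float(len(points))
    for i in range(0, len(points)):
        x = points[i, 0]
        y = points[i, 1]
        # grad_b = 2(wx+b-y), averaged over the N points
        b_gradient += (2 / N) * ((w_current * x + b_current) - y)
        # grad_w = 2(wx+b-y)*x, averaged over the N points
        w_gradient += (2 / N) * ((w_current * x + b_current) - y) * x
    # update step: b' = b - lr * grad_b, w' = w - lr * grad_w
    new_b = b_current - learningRate * b_gradient
    new_w = w_current - learningRate * w_gradient
    return [new_b, new_w]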
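One way to gain confidence in the gradient formulas is to compare them against finite differences of the loss. The sketch below does that on a few hand-made points. The helper names (`mse`, `gradients`, `numeric_gradients`), the step size `eps`, and the data are all assumptions made for this illustration.

```python
import numpy as np

def mse(b, w, points):
    x, y = points[:, 0], points[:, 1]
    return float(np.mean((y - (w * x + b)) ** 2))

def gradients(b, w, points):
    """Analytic gradients (dL/db, dL/dw) of the mean squared error."""
    x, y = points[:, 0], points[:, 1]
    err = (w * x + b) - y  # prediction minus target
    return 2.0 * np.mean(err), 2.0 * np.mean(err * x)

def numeric_gradients(b, w, points, eps=1e-6):
    """Central finite differences, used only to sanity-check the formulas."""
    db = (mse(b + eps, w, points) - mse(b - eps, w, points)) / (2 * eps)
    dw = (mse(b, w + eps, points) - mse(b, w - eps, points)) / (2 * eps)
    return db, dw

# Hand-made points (hypothetical values)
demo_points = np.array([[1.0, 3.0], [2.0, 5.0], [3.0, 7.0], [4.0, 9.5]])
analytic = gradients(0.5, 1.0, demo_points)
numeric = numeric_gradients(0.5, 1.0, demo_points)
print(analytic, numeric)  # the two pairs should agree closely
```

If the two pairs disagree by more than rounding error, the usual suspects are a sign error or a missing factor of `2 / N` in the analytic version.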

1.3 Step 3: Set w = w' and loop

import numpy as np

# Iterate the update to obtain the final w and b
def gradient_descent_runner(points, starting_b, starting_w, learning_rate, num_iterations):
    b = starting_b
    w = starting_w
    # repeat the update step num_iterations times
    for i in range(num_iterations):
        b, w = step_gradient(b, w, np.array(points), learning_rate)
    return [b, w]
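To see the loop converge, it can help to record the loss at every iteration. The following sketch does this on synthetic, noise-free data generated from y = 2x + 1. The function names, the learning rate of 0.1, and the data are assumptions for illustration only and differ from the settings used later in this article.

```python
import numpy as np

def step(b, w, x, y, lr):
    """One gradient-descent update for the mean squared error."""
    err = (w * x + b) - y
    return b - lr * 2.0 * np.mean(err), w - lr * 2.0 * np.mean(err * x)

def run_with_history(x, y, lr, num_iterations, b=0.0, w=0.0):
    """Repeat the update and record the loss before each step."""
    history = []
    for _ in range(num_iterations):
        history.append(float(np.mean((y - (w * x + b)) ** 2)))
        b, w = step(b, w, x, y, lr)
    return b, w, history

# Synthetic data on the line y = 2x + 1 (hypothetical values)
x = np.linspace(0.0, 1.0, 20)
y = 2.0 * x + 1.0
b, w, history = run_with_history(x, y, lr=0.1, num_iterations=2000)
print(b, w)                      # should approach b = 1, w = 2
print(history[0], history[-1])   # loss at the start and at the end
```

Plotting `history` against the iteration index is a quick way to judge whether the learning rate is too small (slow decrease) or too large (the loss grows).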

2. Full code

import numpy as np

# Loss function for the line y = w*x + b
def computer_error_for_line_given_points(b, w, points):
    totalError = 0
    for i in range(0, len(points)):
        x = points[i, 0]  # NumPy indexing, same as points[i][0]: row i of column 0
        y = points[i, 1]
        # accumulate the squared error of this point
        totalError += (y - (w * x + b)) ** 2
    # average loss over all points (mean squared error)
    return totalError / float(len(points))

# Gradient of the loss function, followed by one update step
def step_gradient(b_current, w_current, points, learningRate):
    b_gradient = 0
    w_gradient = 0
    N = float(len(points))
    for i in range(0, len(points)):
        x = points[i, 0]  # same as points[i][0]
        y = points[i, 1]  # same as points[i][1]
        # grad_b = 2(wx+b-y); dividing by N averages over the points
        b_gradient += (2 / N) * ((w_current * x + b_current) - y)
        # grad_w = 2(wx+b-y)*x
        w_gradient += (2 / N) * ((w_current * x + b_current) - y) * x
    # update step: b' = b - lr * grad_b, w' = w - lr * grad_w
    new_b = b_current - learningRate * b_gradient
    new_w = w_current - learningRate * w_gradient
    return [new_b, new_w]

# Iterate the update to obtain the final w and b
def gradient_descent_runner(points, starting_b, starting_w, learning_rate, num_iterations):
    b = starting_b
    w = starting_w
    # repeat the update step num_iterations times
    for i in range(num_iterations):
        b, w = step_gradient(b, w, np.array(points), learning_rate)
    return [b, w]

# Load the data, initialize the parameters, and run the training loop
def run():
    # load the dataset; the two columns are separated by a comma
    points = np.genfromtxt("C:\\Users\\Devinzhang\\Desktop\\data.csv", delimiter=",")
    learning_rate = 0.0001  # learning rate
    initial_b = 0  # initial value of b
    initial_w = 0  # initial value of w
    num_iterations = 1000  # number of iterations
    # report the initial error, using '{0}', '{1}' style placeholders
    print("Starting gradient descent at b={0},w={1},error={2}"
          .format(initial_b, initial_w, computer_error_for_line_given_points(initial_b, initial_w, points)))
    # run the iterations
    print("Running...")
    [b, w] = gradient_descent_runner(points, initial_b, initial_w, learning_rate, num_iterations)
    print("after {0} iterations b={1},w={2},error={3}"
          .format(num_iterations, b, w, computer_error_for_line_given_points(b, w, points)))

if __name__ == '__main__':
    run()
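As a cross-check on the gradient-descent result, a closed-form least-squares fit can be computed with `np.polyfit`. Note that this is a different technique from the one used in this article, shown only for comparison. The function name `least_squares_line` is hypothetical, and `sample` holds just the first five rows of the dataset from section 2.2 to keep the sketch short.

```python
import numpy as np

def least_squares_line(points):
    """Closed-form least-squares fit of y = w*x + b; returns (b, w)."""
    # np.polyfit returns coefficients from the highest degree down: [w, b]
    w, b = np.polyfit(points[:, 0], points[:, 1], deg=1)
    return float(b), float(w)

# First five rows of the article's dataset, used here only as a small sample
sample = np.array([
    [32.50234527, 31.70700585],
    [53.42680403, 68.77759598],
    [61.53035803, 62.5623823],
    [47.47563963, 71.54663223],
    [59.81320787, 87.23092513],
])
b_ls, w_ls = least_squares_line(sample)
print("least squares: b={0}, w={1}".format(b_ls, w_ls))
```

With a small learning rate and a fixed number of iterations, gradient descent may not have fully converged, so its `b` and `w` need not match the closed-form values closely. Comparing the two losses is usually more informative than comparing the parameters.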

2.1 Program output

2.2 Dataset used in the experiment


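- The data is listed below, one x y pair per line. To reuse it, create your own data.csv and paste the values in, separating the two columns with a comma, because the code loads the file with delimiter=",".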

32.50234527 31.70700585

53.42680403 68.77759598

61.53035803 62.5623823

47.47563963 71.54663223

59.81320787 87.23092513

55.14218841 78.21151827

52.21179669 79.64197305

39.29956669 59.17148932

48.10504169 75.3312423

52.55001444 71.30087989

45.41973014 55.16567715

54.35163488 82.47884676

44.1640495 62.00892325

58.16847072 75.39287043

56.72720806 81.43619216

48.95588857 60.72360244

44.68719623 82.89250373

60.29732685 97.37989686

45.61864377 48.84715332

38.81681754 56.87721319

66.18981661 83.87856466

65.41605175 118.5912173

47.48120861 57.25181946

41.57564262 51.39174408

51.84518691 75.38065167

59.37082201 74.76556403

57.31000344 95.45505292

63.61556125 95.22936602

46.73761941 79.05240617

50.55676015 83.43207142

52.22399609 63.35879032

35.56783005 41.4128853

42.43647694 76.61734128

58.16454011 96.76956643

57.50444762 74.08413012

45.44053073 66.58814441

61.89622268 77.76848242

33.09383174 50.71958891

36.43600951 62.12457082

37.67565486 60.81024665

44.55560838 52.68298337

43.31828263 58.56982472

50.07314563 82.90598149

43.87061265 61.4247098

62.99748075 115.2441528

32.66904376 45.57058882

40.16689901 54.0840548

53.57507753 87.99445276

33.86421497 52.72549438

64.70713867 93.57611869

38.11982403 80.16627545

44.50253806 65.10171157

40.59953838 65.56230126

41.72067636 65.28088692

51.08863468 73.43464155

55.0780959 71.13972786

41.37772653 79.10282968

62.49469743 86.52053844

49.20388754 84.74269781

41.10268519 59.35885025

41.18201611 61.68403752

50.18638949 69.84760416

52.37844622 86.09829121

50.13548549 59.10883927

33.64470601 69.89968164

39.55790122 44.86249071

56.13038882 85.49806778

57.36205213 95.53668685

60.26921439 70.25193442

35.67809389 52.72173496

31.588117 50.39267014

53.66093226 63.64239878

46.68222865 72.24725107

43.10782022 57.81251298

70.34607562 104.2571016

44.49285588 86.64202032

57.5045333 91.486778

36.93007661 55.23166089

55.80573336 79.55043668

38.95476907 44.84712424

56.9012147 80.20752314

56.86890066 83.14274979

34.3331247 55.72348926

59.04974121 77.63418251

57.78822399 99.05141484

54.28232871 79.12064627

51.0887199 69.58889785

50.28283635 69.51050331

44.21174175 73.68756432

38.00548801 61.36690454

32.94047994 67.17065577

53.69163957 85.66820315

68.76573427 114.8538712

46.2309665 90.12357207

68.31936082 97.91982104

50.03017434 81.53699078

49.23976534 72.11183247

50.03957594 85.23200734

48.14985889 66.22495789

25.12848465 53.45439421

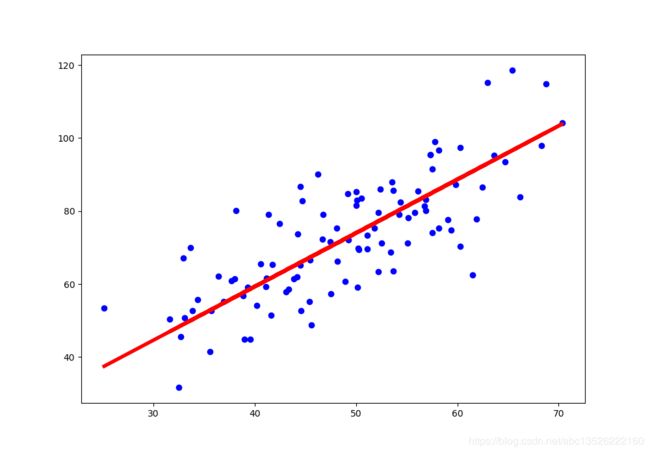
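Before training, it is worth confirming that the file parses into a two-column array. The sketch below uses three rows from the listing above, written with a comma between the columns. `io.StringIO` stands in for the on-disk data.csv, and the variable name `csv_text` is an assumption made for this example.

```python
import io
import numpy as np

# Three rows from the dataset, comma-separated as the loader expects
csv_text = """32.50234527,31.70700585
53.42680403,68.77759598
61.53035803,62.5623823
"""

# io.StringIO stands in for an on-disk data.csv in this sketch
points = np.genfromtxt(io.StringIO(csv_text), delimiter=",")
print(points.shape)                # (rows, columns)
print(points[0, 0], points[0, 1])  # x and y of the first data point
```

If the shape is not `(rows, 2)`, or the array contains `nan`, the delimiter in the file most likely does not match the `delimiter` argument.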

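2.3 Visualization with matplotlib

import matplotlib.pyplot as plt

# points, w and b are assumed to be available from the training code above
plt.figure(num=3, figsize=(8, 5))
plt.scatter(points[:, 0], points[:, 1], c="blue")  # raw data points
plt.plot(points[:, 0], w * points[:, 0] + b)  # fitted line y = w*x + b
plt.show()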

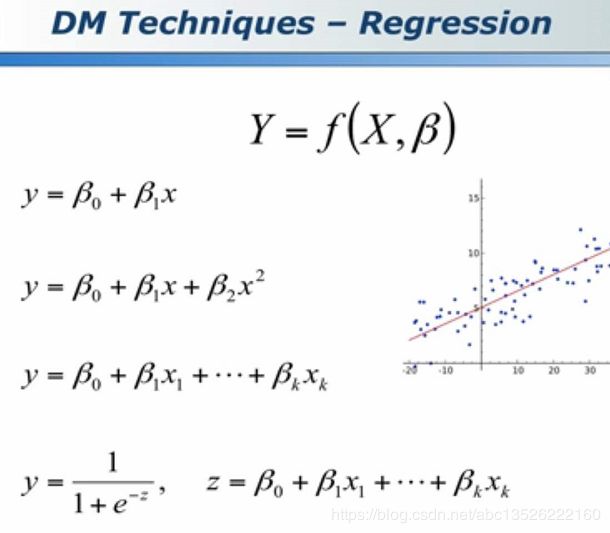

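2.4 Understanding linear regression

Note: do not assume that linear regression always fits a straight line; that is a misconception. The models below are all linear regression. For example, the second one, a polynomial fit, produces a curve and is still linear regression. The "linear" in linear regression refers to the relationship between the parameters and the variables: each term has the form $\beta \times x$, which is linear in the parameter $\beta$.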
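A short example may make this concrete. The sketch below fits a quadratic curve with ordinary least squares: the model is non-linear in x but linear in the coefficients, so a linear solver is enough. The data is synthetic, and the coefficient values 1.0, 0.5 and -3.0 are hypothetical choices for this illustration.

```python
import numpy as np

# Synthetic data from a known quadratic (hypothetical coefficients)
x = np.linspace(-2.0, 2.0, 21)
y = 1.0 + 0.5 * x - 3.0 * x ** 2

# Design matrix with columns [1, x, x^2]: non-linear in x, linear in beta
X = np.column_stack([np.ones_like(x), x, x ** 2])

# Ordinary least squares solves for beta in X @ beta ≈ y
beta, residuals, rank, singular_values = np.linalg.lstsq(X, y, rcond=None)
print(beta)  # should recover the coefficients used to generate y
```

The fitted curve is a parabola, yet the prediction `X @ beta` is a plain linear combination of the parameters, which is exactly the sense of "linear" described above.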