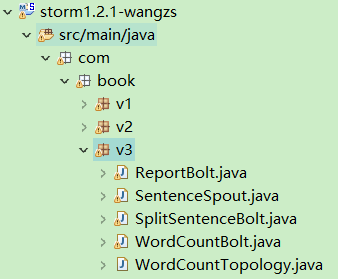

storm1.2.1-wordcount: reliable word count, part 2

Source code download:

https://download.csdn.net/download/adam_zs/10344447

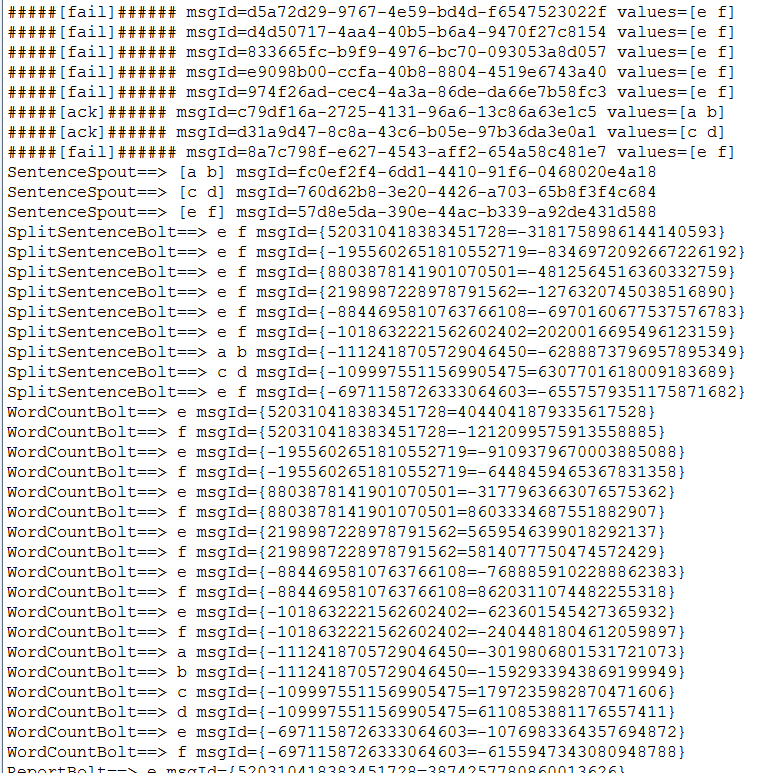

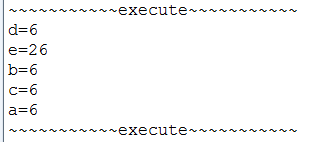

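In ReportBolt.java, when word.equals("f") the bolt calls fail() on the tuple instead of ack(). This is done deliberately, to test reliable message processing.

Because the words "e" and "f" are both anchored to the same sentence tuple, failing "f" fails the whole "e f" tuple tree, and SentenceSpout.fail() re-emits the sentence. Each replay makes WordCountBolt count "e" again, and since "f" fails on every attempt the sentence keeps being replayed. As a result, the count for e in the statistics is noticeably higher than the counts for the other words.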
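The replay effect described above can be reproduced without a cluster. The sketch below is a hypothetical, single-threaded model (`ReplaySimulation` is not a Storm class and uses no Storm API): each round first replays the sentences that failed earlier, under their original message IDs, then emits the three sentences again. In real Storm, fail() arrives asynchronously through the acker, so the actual ratio between e and the other words depends on timing; only the trend is meant to match.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Hypothetical single-threaded model of the topology; it does not use any Storm classes.
class ReplaySimulation {
    private final String[] sentences = { "a b", "c d", "e f" };
    private final Map<Integer, String> pending = new HashMap<>(); // plays the role of SentenceSpout.pending
    private final Map<String, Integer> counts = new HashMap<>();  // plays the role of WordCountBolt.counts
    private int nextMsgId = 0;

    // Split + count + report for one sentence; returns false if the tuple tree failed.
    private boolean process(String sentence) {
        boolean acked = true;
        for (String word : sentence.split(" ")) {
            counts.merge(word, 1, Integer::sum); // WordCountBolt counts every attempt, replays included
            if (word.equals("f")) {
                acked = false;                   // ReportBolt fails "f", so the whole sentence fails
            }
        }
        return acked;
    }

    // Ack removes the sentence from pending; fail queues it for replay with the same msgId.
    private void handle(int msgId, Deque<Integer> failed) {
        if (process(pending.get(msgId))) {
            pending.remove(msgId);
        } else {
            failed.add(msgId);
        }
    }

    /** Each round replays earlier failures, then emits the three sentences once. */
    Map<String, Integer> run(int rounds) {
        Deque<Integer> failed = new ArrayDeque<>();
        for (int r = 0; r < rounds; r++) {
            int toReplay = failed.size();
            for (int i = 0; i < toReplay; i++) {
                handle(failed.poll(), failed);
            }
            for (String sentence : sentences) {
                int msgId = nextMsgId++;
                pending.put(msgId, sentence);
                handle(msgId, failed);
            }
        }
        return counts;
    }

    int pendingCount() {
        return pending.size();
    }
}
```

After three rounds, a, b, c and d are each counted 3 times while e is counted 6 times, and the three "e f" sentences are still pending because they can never be acked.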

Run results:

```java
package com.book.v3;

import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

import org.apache.storm.spout.SpoutOutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.base.BaseRichSpout;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Values;
import org.apache.storm.utils.Utils;

/**
 * @title: Data source (spout)
 * @author: wangzs
 * @date: 2018-03-18
 */
public class SentenceSpout extends BaseRichSpout {

    // Tuples that have been emitted but not yet acked, keyed by message ID, so they can be replayed
    private ConcurrentHashMap<UUID, Values> pending;
    private SpoutOutputCollector spoutOutputCollector;
    private String[] sentences = { "a b", "c d", "e f" };

    @Override
    public void open(Map map, TopologyContext topologycontext, SpoutOutputCollector spoutoutputcollector) {
        this.spoutOutputCollector = spoutoutputcollector;
        this.pending = new ConcurrentHashMap<UUID, Values>();
    }

    @Override
    public void nextTuple() {
        for (String sentence : sentences) {
            Values values = new Values(sentence);
            UUID msgId = UUID.randomUUID();
            // Emitting with a message ID makes Storm track this tuple and call ack()/fail() for it
            this.spoutOutputCollector.emit(values, msgId);
            this.pending.put(msgId, values);
            System.out.println("SentenceSpout==> " + values + " msgId=" + msgId);
        }
        Utils.sleep(1000);
    }

    @Override
    public void declareOutputFields(OutputFieldsDeclarer outputfieldsdeclarer) {
        outputfieldsdeclarer.declare(new Fields("sentence"));
    }

    @Override
    public void ack(Object msgId) {
        System.out.println("#####[ack]###### msgId=" + msgId + " values=" + this.pending.get(msgId));
        // The whole tuple tree was processed, so the sentence no longer needs to be kept
        this.pending.remove(msgId);
    }

    @Override
    public void fail(Object msgId) {
        System.out.println("#####[fail]###### msgId=" + msgId + " values=" + this.pending.get(msgId));
        // Replay the failed sentence with the same message ID
        this.spoutOutputCollector.emit(this.pending.get(msgId), msgId);
    }
}
```
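SentenceSpout.fail() re-emits unconditionally, and because ReportBolt fails every "f", the "e f" sentence is replayed indefinitely. A minimal sketch of capping the number of replays is shown below, assuming a hypothetical helper `RetryTracker` and a hypothetical limit `maxRetries`; neither is part of Storm or of the original code.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper (not a Storm class): counts how many times each msgId has failed.
class RetryTracker {
    private final int maxRetries;
    private final Map<Object, Integer> failures = new HashMap<>();

    RetryTracker(int maxRetries) {
        this.maxRetries = maxRetries;
    }

    /** Records a failure; returns true if the tuple should be re-emitted, false if it should be dropped. */
    boolean shouldReplay(Object msgId) {
        int n = failures.merge(msgId, 1, Integer::sum);
        if (n > maxRetries) {
            failures.remove(msgId); // give up and forget this msgId
            return false;
        }
        return true;
    }

    /** Forgets a msgId once its tuple tree has been fully acked. */
    void acked(Object msgId) {
        failures.remove(msgId);
    }
}
```

In the spout, fail() would re-emit from pending only when shouldReplay(msgId) returns true and otherwise remove the entry from pending, while ack() would also call acked(msgId). Storm calls a spout's nextTuple, ack and fail on the same thread, so a plain HashMap is enough here.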

```java
package com.book.v3;

import java.util.Map;

import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.base.BaseRichBolt;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Tuple;
import org.apache.storm.tuple.Values;

/**
 * @title: Split sentences into words
 * @author: wangzs
 * @date: 2018-03-18
 */
public class SplitSentenceBolt extends BaseRichBolt {

    private OutputCollector outputCollector;

    @Override
    public void execute(Tuple tuple) {
        String sentence = tuple.getStringByField("sentence");
        String[] words = sentence.split(" ");
        for (String word : words) {
            // Anchor each word to the input sentence so it joins the sentence's tuple tree
            this.outputCollector.emit(tuple, new Values(word));
        }
        this.outputCollector.ack(tuple);
        System.out.println("SplitSentenceBolt==> " + sentence + " msgId=" + tuple.getMessageId());
    }

    @Override
    public void prepare(Map map, TopologyContext topologycontext, OutputCollector outputcollector) {
        this.outputCollector = outputcollector;
    }

    @Override
    public void declareOutputFields(OutputFieldsDeclarer outputfieldsdeclarer) {
        outputfieldsdeclarer.declare(new Fields("word"));
    }
}
```
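Anchoring (emit(tuple, ...)) is what ties "e" and "f" to the same sentence, so failing one word fails the whole sentence. According to Storm's "Guaranteeing Message Processing" documentation, the acker tracks each tuple tree with a 64-bit value: every tuple ID is XORed in once when the tuple is created and once when it is acked, and the tree is complete when the value returns to zero. The toy model below (`AckerModel` is hypothetical, not Storm's acker) replays the "e f" tree from this topology.

```java
import java.util.Random;

// Toy model of the acker's XOR checksum for one tuple tree; not Storm's actual implementation.
class AckerModel {
    private long ackVal = 0;
    private final Random random = new Random(42);

    /** A tuple is emitted (by the spout, or anchored by a bolt): its random 64-bit id enters the checksum. */
    long emit() {
        long id = random.nextLong();
        ackVal ^= id;
        return id;
    }

    /** A tuple is acked: XORing its id a second time cancels it out. */
    void ack(long id) {
        ackVal ^= id;
    }

    /** Every emitted tuple has been acked exactly once. */
    boolean isComplete() {
        return ackVal == 0;
    }
}
```

As long as the ("f", n) tuple is never acked, the value stays non-zero and the sentence cannot complete; in real Storm an explicit fail() additionally makes the acker report the failure to the spout right away.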

```java
package com.book.v3;

import java.util.HashMap;
import java.util.Map;

import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.base.BaseRichBolt;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Tuple;
import org.apache.storm.tuple.Values;

/**
 * @title: Word counting
 * @author: wangzs
 * @date: 2018-03-18
 */
public class WordCountBolt extends BaseRichBolt {

    private OutputCollector outputCollector;
    // Running count per word, kept in memory by this task
    private HashMap<String, Integer> counts = null;

    @Override
    public void prepare(Map map, TopologyContext topologycontext, OutputCollector outputcollector) {
        this.outputCollector = outputcollector;
        this.counts = new HashMap<String, Integer>();
    }

    @Override
    public void execute(Tuple tuple) {
        String word = tuple.getStringByField("word");
        Integer count = counts.get(word);
        if (count == null) {
            count = 0;
        }
        count++;
        this.counts.put(word, count);
        // Anchored emit: the (word, count) tuple stays in the original sentence's tuple tree
        this.outputCollector.emit(tuple, new Values(word, count));
        this.outputCollector.ack(tuple);
        System.out.println("WordCountBolt==> " + word + " msgId=" + tuple.getMessageId());
    }

    @Override
    public void declareOutputFields(OutputFieldsDeclarer outputfieldsdeclarer) {
        outputfieldsdeclarer.declare(new Fields("word", "count"));
    }
}
```
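WordCountBolt keeps its counts in a task-local HashMap, which is only correct because the topology uses fieldsGrouping on "word": every occurrence of a word is routed to the same task. Here the bolt runs with the default parallelism of one, so this only matters once a parallelism hint is added. The sketch below illustrates the idea with hash-mod partitioning; `FieldsGroupingSketch` and its `chooseTask` formula are hypothetical and do not reproduce Storm's exact hashing code.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration of partitioning by key, in the spirit of fieldsGrouping.
class FieldsGroupingSketch {
    /** Same word always maps to the same task index. */
    static int chooseTask(String word, int numTasks) {
        return Math.floorMod(word.hashCode(), numTasks);
    }

    /** Routes each word to one of numTasks local count maps, like parallel WordCountBolt tasks. */
    static List<Map<String, Integer>> countByTask(List<String> words, int numTasks) {
        List<Map<String, Integer>> tasks = new ArrayList<>();
        for (int i = 0; i < numTasks; i++) {
            tasks.add(new HashMap<>());
        }
        for (String word : words) {
            tasks.get(chooseTask(word, numTasks)).merge(word, 1, Integer::sum);
        }
        return tasks;
    }
}
```

With a shuffle grouping instead, the same word could land on several tasks and each task would hold only a partial count.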

```java
package com.book.v3;

import java.util.HashMap;
import java.util.Map;
import java.util.Set;

import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.base.BaseRichBolt;
import org.apache.storm.tuple.Tuple;

/**
 * @title: Reporting bolt
 * @author: wangzs
 * @date: 2018-03-18
 */
public class ReportBolt extends BaseRichBolt {

    private OutputCollector outputcollector;
    // Latest count received for each word
    private HashMap<String, Integer> reportCounts = null;

    @Override
    public void prepare(Map map, TopologyContext topologycontext, OutputCollector outputcollector) {
        this.reportCounts = new HashMap<String, Integer>();
        this.outputcollector = outputcollector;
    }

    @Override
    public void execute(Tuple tuple) {
        String word = tuple.getStringByField("word");
        int count = tuple.getIntegerByField("count");
        if (word.equals("f")) {
            // Deliberately fail "f" to test reliability: the whole "e f" tuple tree fails
            // and SentenceSpout.fail() replays the sentence
            this.outputcollector.fail(tuple);
        } else {
            this.reportCounts.put(word, count);
            this.outputcollector.ack(tuple);
            System.out.println("ReportBolt==> " + word + " msgId=" + tuple.getMessageId());
        }
        System.out.println("~~~~~~~~~~~execute~~~~~~~~~~~");
        Set<Map.Entry<String, Integer>> entrySet = reportCounts.entrySet();
        for (Map.Entry<String, Integer> entry : entrySet) {
            System.out.println(entry);
        }
    }

    @Override
    public void declareOutputFields(OutputFieldsDeclarer outputfieldsdeclarer) {
        // Terminal bolt: emits no tuples
    }

    /**
     * Called before the bolt shuts down (Storm only guarantees this when the topology is killed in local mode)
     */
    @Override
    public void cleanup() {
        Set<Map.Entry<String, Integer>> entrySet = reportCounts.entrySet();
        System.out.println("---------- FINAL COUNTS -----------");
        for (Map.Entry<String, Integer> entry : entrySet) {
            System.out.println(entry);
        }
        System.out.println("-----------------------------------");
    }
}
```

```java
package com.book.v3;

import org.apache.storm.Config;
import org.apache.storm.LocalCluster;
import org.apache.storm.StormSubmitter;
import org.apache.storm.generated.AlreadyAliveException;
import org.apache.storm.generated.AuthorizationException;
import org.apache.storm.generated.InvalidTopologyException;
import org.apache.storm.generated.StormTopology;
import org.apache.storm.topology.TopologyBuilder;
import org.apache.storm.tuple.Fields;
import org.apache.storm.utils.Utils;

/**
 * @title: Reliable word count
 * @author: wangzs
 * @date: 2018-03-18
 */
public class WordCountTopology {

    public static void main(String[] args) throws AlreadyAliveException, InvalidTopologyException, AuthorizationException {
        SentenceSpout sentenceSpout = new SentenceSpout();
        SplitSentenceBolt splitSentenceBolt = new SplitSentenceBolt();
        WordCountBolt wordCountBolt = new WordCountBolt();
        ReportBolt reportBolt = new ReportBolt();

        TopologyBuilder builder = new TopologyBuilder();
        builder.setSpout("sentenceSpout-1", sentenceSpout);
        builder.setBolt("splitSentenceBolt-1", splitSentenceBolt).shuffleGrouping("sentenceSpout-1");
        // fieldsGrouping: the same word always goes to the same WordCountBolt task
        builder.setBolt("wordCountBolt-1", wordCountBolt).fieldsGrouping("splitSentenceBolt-1", new Fields("word"));
        // globalGrouping: all counts go to a single ReportBolt task
        builder.setBolt("reportBolt-1", reportBolt).globalGrouping("wordCountBolt-1");

        Config conf = new Config();
        conf.setDebug(false);

        if (args != null && args.length > 0) {
            // Cluster mode: the topology name is taken from the first argument
            conf.setNumWorkers(1);
            StormSubmitter.submitTopologyWithProgressBar(args[0], conf, builder.createTopology());
        } else {
            // Local mode: run for 40 seconds, then kill the topology and shut the cluster down
            LocalCluster cluster = new LocalCluster();
            StormTopology topology = builder.createTopology();
            cluster.submitTopology("wordCountTopology-1", conf, topology);
            Utils.sleep(40000);
            cluster.killTopology("wordCountTopology-1");
            cluster.shutdown();
        }
    }
}
```