In Inception networks, is the "accumulation" at the final join handled the same way for the ResNet-style block and for concat?

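I was asked the following question yesterday. I am writing it down here so that it sticks this time:

In Inception networks, is the "accumulation" at the final join handled the same way for the ResNet-style block and for concat?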

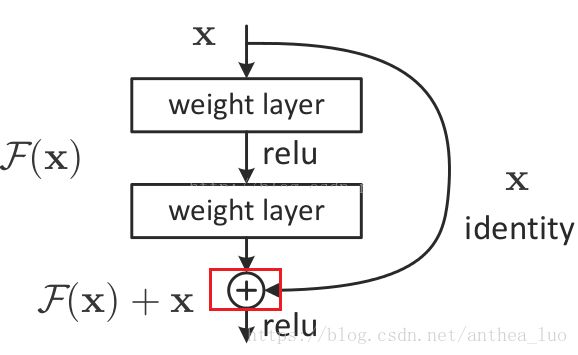

The joins in question are the parts marked in red in the figure.

Afterwards I went through the code. Part of it is shown below:

    import tensorflow as tf
    import tensorflow.contrib.slim as slim

    # Inception-Resnet-C
    def block8(net, scale=1.0, activation_fn=tf.nn.relu, scope=None, reuse=None):
        """Builds the 8x8 resnet block."""
        with tf.variable_scope(scope, 'Block8', [net], reuse=reuse):
            with tf.variable_scope('Branch_0'):
                tower_conv = slim.conv2d(net, 192, 1, scope='Conv2d_1x1')
            with tf.variable_scope('Branch_1'):
                tower_conv1_0 = slim.conv2d(net, 192, 1, scope='Conv2d_0a_1x1')
                tower_conv1_1 = slim.conv2d(tower_conv1_0, 192, [1, 3],
                                            scope='Conv2d_0b_1x3')
                tower_conv1_2 = slim.conv2d(tower_conv1_1, 192, [3, 1],
                                            scope='Conv2d_0c_3x1')
            # Concat: stack both branches along the channel axis (axis 3).
            mixed = tf.concat([tower_conv, tower_conv1_2], 3)
            # 1x1 conv: project back to the channel count of the input.
            up = slim.conv2d(mixed, net.get_shape()[3], 1, normalizer_fn=None,
                             activation_fn=None, scope='Conv2d_1x1')
            # Residual add: element-wise sum, the shape does not change.
            net += scale * up
            if activation_fn:
                net = activation_fn(net)
        return net

    def reduction_a(net, k, l, m, n):
        with tf.variable_scope('Branch_0'):
            tower_conv = slim.conv2d(net, n, 3, stride=2, padding='VALID',
                                     scope='Conv2d_1a_3x3')
        with tf.variable_scope('Branch_1'):
            tower_conv1_0 = slim.conv2d(net, k, 1, scope='Conv2d_0a_1x1')
            tower_conv1_1 = slim.conv2d(tower_conv1_0, l, 3,
                                        scope='Conv2d_0b_3x3')
            tower_conv1_2 = slim.conv2d(tower_conv1_1, m, 3,
                                        stride=2, padding='VALID',
                                        scope='Conv2d_1a_3x3')
        with tf.variable_scope('Branch_2'):
            tower_pool = slim.max_pool2d(net, 3, stride=2, padding='VALID',
                                         scope='MaxPool_1a_3x3')
        # Concat only: the three branches are stacked along the channel axis.
        net = tf.concat([tower_conv, tower_conv1_2, tower_pool], 3)
        return net

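So the two are not handled the same way. concat stacks the branch outputs along the last dimension (the channels), so the result has as many channels as all of its inputs combined. + adds the tensors element by element, so the shape stays the same and both operands must already have matching shapes. That is why block8 first projects mixed back to the channel count of net with a 1x1 convolution before adding.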
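To make the shape difference concrete, here is a minimal NumPy sketch that mirrors the merge at the end of block8. NumPy stands in for TensorFlow here. The batch size, spatial size, input channel count (1792), and scale value are illustrative assumptions, not values taken from the code above, and the 1x1 convolution is modeled as a per-pixel matrix multiply over channels with random weights.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed NHWC input: batch 2, 8x8 spatial, 1792 channels (illustrative only).
net = rng.standard_normal((2, 8, 8, 1792))

# Stand-ins for the two branch outputs, 192 channels each as in block8.
branch_0 = rng.standard_normal((2, 8, 8, 192))
branch_1 = rng.standard_normal((2, 8, 8, 192))

# Concat on the last axis: channel counts add up, 192 + 192 = 384.
mixed = np.concatenate([branch_0, branch_1], axis=3)

# A 1x1 convolution is a matrix multiply over the channel axis;
# here it projects 384 channels back to the input's channel count.
w_1x1 = rng.standard_normal((mixed.shape[3], net.shape[3])) * 0.01
up = mixed @ w_1x1

# Residual add: element-wise, so the result has the same shape as net.
scale = 0.2  # hypothetical scale value
out = net + scale * up

print(mixed.shape)  # (2, 8, 8, 384)
print(up.shape)     # (2, 8, 8, 1792)
print(out.shape)    # (2, 8, 8, 1792)
```

Adding mixed to net directly would fail, because 384 channels cannot be added element-wise to 1792 channels. The projection step is what makes the residual add possible.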

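I also found one of my old notes:

"Concatenate is more useful when features from different dimensions need to be combined into a new concept, while sum is better suited to judgments that hold side by side."

It comes from an article I recommended before:

https://zhuanlan.zhihu.com/p/27642620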
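One possible way to read this note: when the merged features feed a linear layer, summing is a special case of concatenating. The NumPy sketch below, with made-up sizes and random weights as assumptions, checks that applying a weight matrix W to a + b gives the same result as applying W stacked twice to the concatenation of a and b. This is my own illustration, not something taken from the linked article.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two feature vectors with the same number of channels (sizes are illustrative).
a = rng.standard_normal(4)
b = rng.standard_normal(4)

# A linear layer mapping 4 channels to 3 outputs.
W = rng.standard_normal((4, 3))

# Sum, then linear layer.
y_sum = (a + b) @ W

# Concat, then a linear layer whose weights are W stacked twice (tied weights).
W_tied = np.concatenate([W, W], axis=0)      # shape (8, 3)
y_concat = np.concatenate([a, b]) @ W_tied   # shape (3,)

print(np.allclose(y_sum, y_concat))  # True
```

With concat, the following layer is free to learn different weights for each input's channels. With sum, the inputs are forced to share the same weights, which fits inputs that describe the same thing.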

As a refresher, here is a comparison of + and tf.concat:

    import tensorflow as tf

    t1 = tf.constant([[1, 1, 1], [2, 2, 2]], dtype=tf.int32)
    t2 = tf.constant([[3, 3, 3], [4, 4, 4]], dtype=tf.int32)

    t3 = t1 + t2                  # element-wise sum, shape stays (2, 3)
    t4 = tf.concat([t1, t2], 0)   # stack along axis 0, shape (4, 3)
    t5 = tf.concat([t1, t2], 1)   # stack along axis 1, shape (2, 6)

    with tf.Session() as sess:
        # There are no variables here, so this initializer is a no-op.
        sess.run(tf.global_variables_initializer())
        for temp in [t1, t2, t3, t4, t5]:
            print('\n', sess.run(temp))

The results are as follows:

     [[1 1 1]
     [2 2 2]]

     [[3 3 3]
     [4 4 4]]

     [[4 4 4]
     [6 6 6]]

     [[1 1 1]
     [2 2 2]
     [3 3 3]
     [4 4 4]]

     [[1 1 1 3 3 3]
     [2 2 2 4 4 4]]
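The shapes of these results follow two simple rules, sketched below in plain Python. The helper names add_shape and concat_shape are hypothetical, and the sketch does not model broadcasting, which + also supports.

```python
def add_shape(s1, s2):
    """Shape of s1 + s2 for equal shapes (broadcasting is not modeled)."""
    if tuple(s1) != tuple(s2):
        raise ValueError("element-wise add needs equal shapes: %s vs %s" % (s1, s2))
    return tuple(s1)


def concat_shape(shapes, axis):
    """Shape after concatenating tensors with the given shapes along axis."""
    first = tuple(shapes[0])
    for s in shapes[1:]:
        # Every dimension except the concat axis must match.
        if len(s) != len(first) or any(
            d1 != d2 for i, (d1, d2) in enumerate(zip(first, s)) if i != axis
        ):
            raise ValueError("shapes differ outside the concat axis")
    out = list(first)
    out[axis] = sum(s[axis] for s in shapes)
    return tuple(out)


shape = (2, 3)  # shape of t1 and t2 above
print(add_shape(shape, shape))          # (2, 3)
print(concat_shape([shape, shape], 0))  # (4, 3)
print(concat_shape([shape, shape], 1))  # (2, 6)
```

The same rules applied to 4-D feature maps give the behavior seen in the network code: concat on axis 3 grows the channel count, while + leaves the shape untouched.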