Python-QQ聊天记录分析-jieba+wordcloud

QQ聊天记录简单分析

0. Description

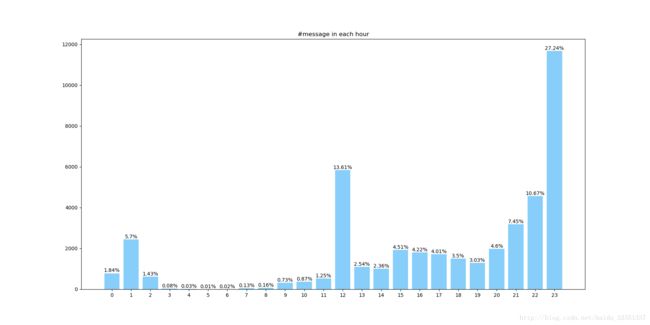

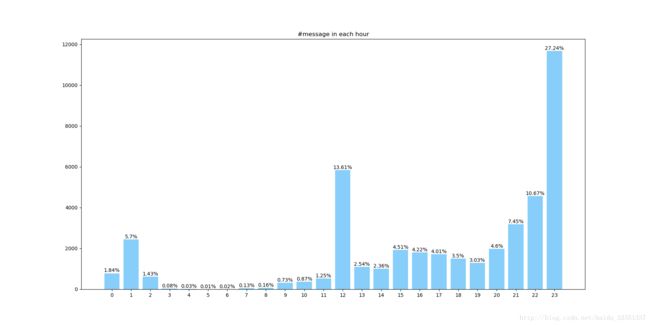

从QQ导出了和好友从2016-08-25到2017-11-18的消息记录,85874行,也算不少。于是就有了大致分析、可视化一下。步骤大致如下:

- 消息记录文件预处理

- 使用jieba分词

- 使用wordcloud生成词云

- 生成简单图表

结果大致如下:

|

|

1. Preprocessing

导出的文件大概格式如下:(已去掉多余空行)

2016-08-26 11:02:56 PM 少平

这……

2016-08-26 11:03:02 PM 少平

这bug都被你发现了

2016-08-26 11:03:04 PM C

反驳呀

2016-08-26 11:03:25 PM C

too young

2016-08-26 11:04:43 PM C

我去刷鞋子

2016-08-26 11:04:58 PM 少平

嗯嗯

好的

Observation&Notice:

- 每条消息上都有对应发送时间和发送者

- 列表内容

- 一条消息内可能有换行

由此,

- 可以依照发送者对消息分开为聊天双方。

- 将各自的内容分别放在文件中,便于后续分词和制作词云。

- 将所有聊天时间抽取出来,可以对聊天时段进行分析和图表绘制。

Arguments:

infile⇒ 原始导出消息记录文件

outfile1⇒ 对话一方的消息记录文件名

outfile2⇒ 对话另一方的消息记录文件名

Outputs:

预处理后的分别储存的消息记录文件(其中只包含一方聊天内容)以及一个消息时间文件

# -*- coding: utf-8 -*-

""" Spilt the original file into different types in good form. """

import re

import codecs

IN_FILE = './data.txt'

OUT_CONTENT_FILE_1 = './her_words.txt'

OUT_CONTENT_FILE_2 = './my_words.txt'

OUT_TIME_FILE = './time.txt'

UTF8='utf-8'

MY_NAME_PATTERN = u'少平'

TIME_PATTERN = r'\d{4,4}-\d\d-\d\d \d{1,2}:\d\d:\d\d [AP]M'

TEST_TPYE_LINE = u'2017-10-14 1:13:49 AM 少平'

def split(infile, outfile1, outfile2):

"""Spilt the original file into different types in good form."""

out_content_file_1 = codecs.open(outfile1, 'a', encoding=UTF8)

out_content_file_2 = codecs.open(outfile2, 'a', encoding=UTF8)

out_time_file = codecs.open(OUT_TIME_FILE, 'a', encoding=UTF8)

try:

with codecs.open(infile, 'r', encoding=UTF8) as infile:

line = infile.readline().strip()

while line:

if re.search(TIME_PATTERN, line) is not None: # type lines

time = re.search(TIME_PATTERN, line).group()

out_time_file.write(u'{}\n'.format(time))

content_line = infile.readline()

flag = 0 # stands for my words

if re.search(MY_NAME_PATTERN, line):

flag = 0

else:

flag = 1

while content_line and re.search(TIME_PATTERN, content_line) is None:

if flag == 1:

out_content_file_1.write(content_line)

else:

out_content_file_2.write(content_line)

content_line = infile.readline()

line = content_line

except OSError:

print 'error occured here.'

out_time_file.close()

out_content_file_1.close()

out_content_file_2.close()

if __name__ == '__main__':

split(IN_FILE, OUT_CONTENT_FILE_1, OUT_CONTENT_FILE_2)

2. Get word segmentations using jieba

使用jieba分词对聊天记录进行分词。

import codecs

import jieba

IN_FILE_NAME = ('./her_words.txt', './my_words.txt')

OUT_FILE_NAME = ('./her_words_out.txt', './my_words_out.txt')

def split(in_files, out_files):

"""Cut the lines into segmentations and save to files"""

for in_file, out_file in zip(in_files, out_files):

outf = codecs.open(out_file, 'a', encoding=UTF8)

with codecs.open(in_file, 'r', encoding=UTF8) as inf:

line = inf.readline()

while line:

line = line.strip()

seg_list = jieba.cut(line, cut_all=True, HMM=True)

for word in seg_list:

outf.write(word+'\n')

line = inf.readline()

outf.close()

if __name__ == '__main__':

split(IN_FILE_NAME, OUT_FILE_NAME)3. Make wordclouds using wordcloud

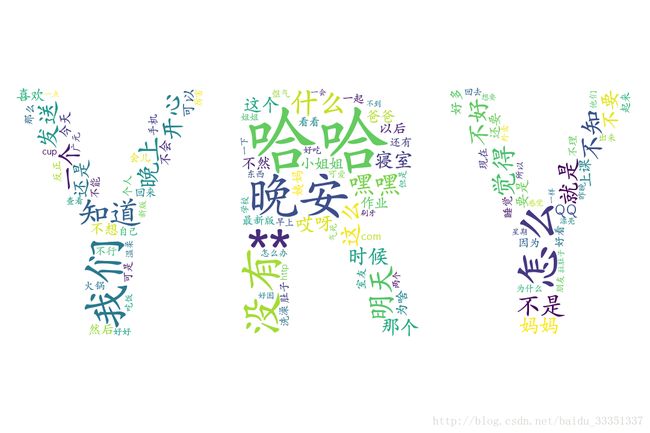

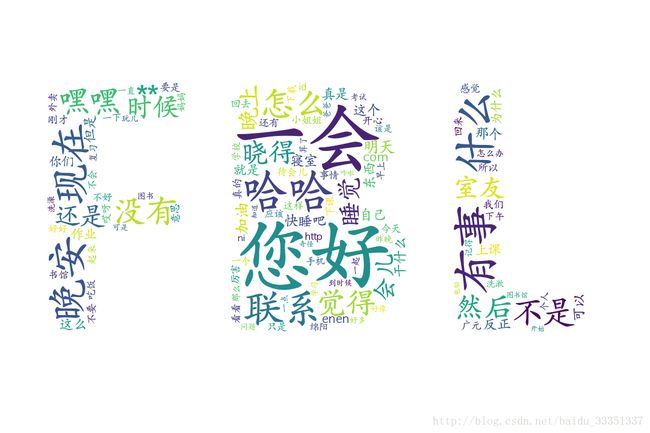

抽取分词结果中出现频率最高的120个词,使用wordcloud进行词云生成。并且,屏蔽部分词语(STOP_WORDS),替换部分词语(ALTER_WORDS)。

STOP_WORDS = [u'图片', u'表情', u'窗口', u'抖动', u'我要', u'小姐', u'哈哈哈', u'哈哈哈哈', u'啊啊啊', u'嘿嘿嘿']

ALTER_WORDS = {u'被替换词1':u'替换词1',u'被替换词2':u'替换词2'}Arguments:

in_files⇒ 分词产生的结果文件

out_files⇒ 保存词云的目标地址

shape_files⇒ 词云形状的图片文件

Output:

对话双方各自内容的词云

import jieba.analyse

import numpy as np

from PIL import Image

from wordcloud import WordCloud

OUT_FILE_NAME = ('./her_words_out.txt', './my_words_out.txt')

OUT_IMG_NAME = ('./her_wordcloud.png', './my_wordcloud.png')

SHAPE_IMG_NAME = ('./YRY.png', './FBL.png')

def make_wordcould(in_files, out_files, shape_files):

"""make wordcould"""

for in_file, out_file, shape_file in zip(in_files, out_files, shape_files):

shape = np.array(Image.open(shape_file))

content = codecs.open(in_file, 'r', encoding=UTF8).read()

tags = jieba.analyse.extract_tags(content, topK=120, withWeight=True)

text = {}

for word, freq in tags:

if word not in STOP_WORDS:

if word in ALTER_WORDS:

word = ALTER_WORDS[word]

text[word] = freq

wordcloud = WordCloud(background_color='white', font_path='./font.ttf', mask=shape, width=1080, height=720).generate_from_frequencies(text)

wordcloud.to_file(out_file)

if __name__ == '__main__':

make_wordcould(OUT_FILE_NAME, OUT_IMG_NAME, SHAPE_IMG_NAME)以下是指定的词云形状(对应WordCloud()中的mask参数):

|

|

以下是生成的词云:

|

|

4. Generate a simple bar plot about time

根据预处理中产生的时间文件制作简单柱状图。

#-*- coding: utf-8 -*-

""" make a simple bar plot """

import codecs

import matplotlib.pyplot as plt

FILE = 'time.txt'

def make_bar_plot(file_name):

"""make a simple bar plot"""

time_list = {}

message_cnt = 1

with codecs.open(file_name, 'r', encoding='utf-8') as infile:

line = infile.readline()

while line:

line = line.strip()

time_in_12, apm = line.split()[1:2]

time_in_24 = time_format(time_in_12, apm)

if time_in_24 in time_list:

time_list[time_in_24] = time_list[time_in_24] + 1

else:

time_list[time_in_24] = 1

line = infile.readline()

message_cnt = message_cnt + 1

plt.figure(figsize=(18, 9))

plt.bar(time_list.keys(), time_list.values(), width=.8,

facecolor='lightskyblue', edgecolor='white')

plt.xticks(range(len(time_list)), time_list.keys())

for x_axies in time_list:

y_axies = time_list[x_axies]

label = '{}%'.format(round(y_axies*1.0/message_cnt*100, 2))

plt.text(x_axies, y_axies+0.05, label, ha='center', va='bottom')

plt.title('#message in each hour')

plt.savefig('time.png')

def time_format(time_in_12, apm):

"""docstring"""

hour = time_in_12.split(':')[0]

hour = int(hour)

if apm == 'PM':

hour = hour + 12

time_in_24 = hour % 24

return time_in_24

if __name__ == '__main__':

make_bar_plot(FILE)