Building a Fully Distributed Hadoop HA Cluster (Manual Failover)

Environment

namenode1: 192.168.103.4

namenode2: 192.168.103.8

datanode1: 192.168.103.15

datanode2: 192.168.103.5

datanode3: 192.168.103.3

Operating system: ubuntu-16.04-x64

Hadoop version: apache-hadoop-2.6.5

JDK version: 1.8

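Installation steps

1. Install the JDK

JDK installation is not covered here; if you are unfamiliar with it, consult any of the guides available online.

2. Configure host mappings and passwordless SSH login

To keep the configuration easy to maintain, the Hadoop configuration files identify each machine by hostname, so every node in the cluster needs a hostname-to-IP mapping.

Edit /etc/hosts on every host in the cluster and add the following entries.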

192.168.103.4 namenode1

192.168.103.8 namenode2

192.168.103.15 datanode1

192.168.103.5 datanode2

192.168.103.3 datanode3
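Because the same five entries have to be added on every host, it can help to script the step so that re-running it does not create duplicates. The following is a minimal sketch, not part of Hadoop: `add_host_entry` and `add_cluster_hosts` are hypothetical helper names, and the optional file argument exists only so the function can be tried on a scratch file before touching /etc/hosts.

```shell
#!/bin/sh
# Append "IP NAME" to a hosts file only if NAME is not already mapped.
# add_host_entry is a hypothetical helper, not part of Hadoop or Ubuntu.
add_host_entry() {
    ip="$1"; name="$2"; file="${3:-/etc/hosts}"
    # Look for NAME as a whole field (column 2 or later) on a non-comment line.
    if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file" 2>/dev/null; then
        echo "skip: $name already in $file"
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
        echo "added: $ip $name"
    fi
}

# Add all five cluster nodes from this article to the given hosts file.
add_cluster_hosts() {
    target="${1:-/etc/hosts}"
    add_host_entry 192.168.103.4  namenode1 "$target"
    add_host_entry 192.168.103.8  namenode2 "$target"
    add_host_entry 192.168.103.15 datanode1 "$target"
    add_host_entry 192.168.103.5  datanode2 "$target"
    add_host_entry 192.168.103.3  datanode3 "$target"
}
```

Calling `add_cluster_hosts /tmp/hosts.test` first and inspecting the result is a cheap way to check the script before running `add_cluster_hosts` as root against the real file.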

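During startup the cluster logs in to other hosts over SSH. To avoid typing each host's password every time, configure passwordless login from namenode1 and namenode2.

Generate a key pair on namenode1.

ssh-keygen

Press Enter at every prompt to accept the defaults and generate the key pair.

Copy namenode1's public key to namenode2, datanode1, datanode2, and datanode3.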

ssh-copy-id -i ~/.ssh/id_rsa.pub root@namenode2

ssh-copy-id -i ~/.ssh/id_rsa.pub root@datanode1

ssh-copy-id -i ~/.ssh/id_rsa.pub root@datanode2

ssh-copy-id -i ~/.ssh/id_rsa.pub root@datanode3

Then generate a key pair on namenode2 and copy its public key to namenode1, datanode1, datanode2, and datanode3, using the same commands as on namenode1.
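The key distribution is the same on both NameNodes apart from which host is skipped, so it can be written as one loop. This is a sketch under assumptions: `copy_key_to_peers` is a hypothetical helper, and the `DRY_RUN` variable is introduced here so the commands can be printed and reviewed before anything is executed.

```shell
#!/bin/sh
# Print (dry run, the default) or execute ssh-copy-id for every other node.
# copy_key_to_peers is a hypothetical helper; set DRY_RUN=0 to really run it.
copy_key_to_peers() {
    self="$1"
    for host in namenode1 namenode2 datanode1 datanode2 datanode3; do
        # Skip the host we are running on.
        [ "$host" = "$self" ] && continue
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "ssh-copy-id -i ~/.ssh/id_rsa.pub root@$host"
        else
            ssh-copy-id -i ~/.ssh/id_rsa.pub "root@$host"
        fi
    done
}
```

On namenode2, for example, `copy_key_to_peers namenode2` prints the four commands to run, and `DRY_RUN=0 copy_key_to_peers namenode2` executes them, prompting once for each host's password.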

3. Configure Hadoop on namenode1 and copy it to the other nodes

Download the Hadoop installation package, hadoop-2.6.5.

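Unpack the archive:

tar xf hadoop-2.6.5.tar.gz

Edit etc/hadoop/hadoop-env.sh:

export JAVA_HOME=/opt/jdk1.8.0_91

Edit etc/hadoop/core-site.xml:

    <configuration>
      <property><name>fs.defaultFS</name><value>hdfs://mycluster</value></property>
      <property><name>hadoop.tmp.dir</name><value>file:/opt/hadoop-2.6.5/tmp</value></property>
    </configuration>

fs.defaultFS does not name a specific NameNode here; hdfs://mycluster is a logical name that covers both namenode1 and namenode2. The details of mycluster are configured in hdfs-site.xml.

Edit etc/hadoop/hdfs-site.xml:

    <configuration>
      <property><name>dfs.namenode.name.dir</name><value>file:/opt/hadoop-2.6.5/tmp/dfs/name</value></property>
      <property><name>dfs.datanode.data.dir</name><value>file:/opt/hadoop-2.6.5/tmp/dfs/data</value></property>
      <property><name>dfs.replication</name><value>3</value></property>
      <property><name>dfs.nameservices</name><value>mycluster</value></property>
      <property><name>dfs.ha.namenodes.mycluster</name><value>nn1,nn2</value></property>
      <property><name>dfs.namenode.rpc-address.mycluster.nn1</name><value>namenode1:9000</value></property>
      <property><name>dfs.namenode.http-address.mycluster.nn1</name><value>namenode1:50070</value></property>
      <property><name>dfs.namenode.rpc-address.mycluster.nn2</name><value>namenode2:9000</value></property>
      <property><name>dfs.namenode.http-address.mycluster.nn2</name><value>namenode2:50070</value></property>
      <property><name>dfs.ha.automatic-failover.enabled</name><value>false</value></property>
      <property><name>dfs.namenode.shared.edits.dir</name><value>qjournal://datanode1:8485;datanode2:8485;datanode3:8485/mycluster</value></property>
      <property><name>dfs.client.failover.proxy.provider.mycluster</name><value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value></property>
      <property><name>dfs.ha.fencing.methods</name><value>sshfence</value></property>
      <property><name>dfs.ha.fencing.ssh.private-key-files</name><value>/root/.ssh/id_rsa</value></property>
      <property><name>dfs.ha.fencing.ssh.connect-timeout</name><value>30000</value></property>
      <property><name>dfs.journalnode.edits.dir</name><value>/opt/hadoop-2.6.5/tmp/journal</value></property>
    </configuration>

Edit etc/hadoop/slaves:

datanode1

datanode2

datanode3

Copy the configured Hadoop directory to namenode2 and to the datanodes:

scp -r hadoop-2.6.5 root@namenode2:/opt

scp -r hadoop-2.6.5 root@datanode1:/opt

scp -r hadoop-2.6.5 root@datanode2:/opt

scp -r hadoop-2.6.5 root@datanode3:/opt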
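Before starting any daemons, it may be worth confirming on each node that the copied configuration is actually being picked up. The sketch below uses `hdfs getconf -confKey`, which prints the effective value of a configuration key; `print_ha_config` and the `HDFS_BIN` variable are names assumed here for illustration, and the list of keys is only a sample of the HA settings above.

```shell
#!/bin/sh
# HDFS_BIN is an assumed variable pointing at the hdfs launcher script;
# the default matches running from the Hadoop install root.
HDFS_BIN="${HDFS_BIN:-./bin/hdfs}"

# Print the effective value of a few HA-related keys from this article.
# print_ha_config is a hypothetical helper, not a Hadoop command.
print_ha_config() {
    for key in fs.defaultFS dfs.nameservices dfs.ha.namenodes.mycluster dfs.namenode.shared.edits.dir; do
        value=$("$HDFS_BIN" getconf -confKey "$key" 2>/dev/null) || value="<not set>"
        echo "$key = $value"
    done
}
```

If a key comes back as `<not set>` or with an unexpected value on one node, the most likely cause is that the directory was not copied to that node or that a different configuration directory is in effect there.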

4. Start the cluster

Start the JournalNode cluster. In this example the JournalNodes run on the datanodes, so run the following on datanode1, datanode2, and datanode3:

root# ./sbin/hadoop-daemon.sh start journalnode

root# jps

552 JournalNode

Format the NameNode. On namenode1, run:

root# ./bin/hdfs namenode -format

Start the DataNodes. On datanode1, datanode2, and datanode3, run:

root# ./sbin/hadoop-daemon.sh start datanode

root# jps

552 JournalNode

27724 Jps

783 DataNode

Start the NameNodes. On namenode1:

root# ./sbin/hadoop-daemon.sh start namenode

On namenode2, first copy the formatted metadata over from namenode1, then start the NameNode:

root# ./bin/hdfs namenode -bootstrapStandby

root# ./sbin/hadoop-daemon.sh start namenode

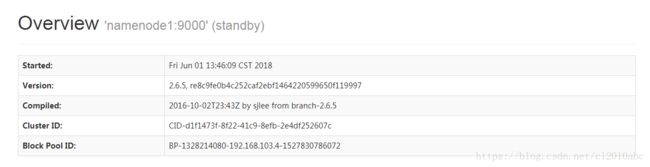

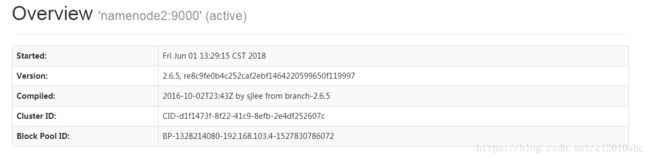

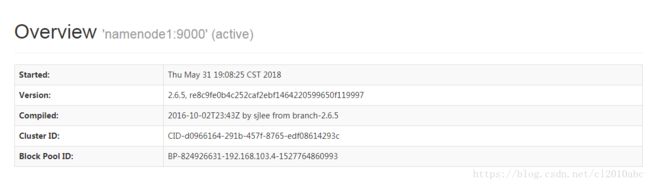

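At this point namenode1 and namenode2 are both in standby state (check each NameNode's web UI), so the cluster cannot serve requests yet. One of the nodes must be switched to active.

- Switch namenode1 to active

On namenode1:

./bin/hdfs haadmin -failover --forceactive nn2 nn1

Here nn2 and nn1 are the NameNode IDs, which must match the values configured in hdfs-site.xml. Then check the state of namenode1 and namenode2 again.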
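Besides the web UI, the state of each NameNode can be queried from the command line with `hdfs haadmin -getServiceState`. The following is a small sketch for checking both IDs in one go; `show_ha_states` and `HDFS_BIN` are hypothetical names, and reporting "unreachable" when the command fails is a choice made here, not Hadoop behaviour.

```shell
#!/bin/sh
# HDFS_BIN is an assumed variable pointing at the hdfs launcher script.
HDFS_BIN="${HDFS_BIN:-./bin/hdfs}"

# Print the HA state of each NameNode ID listed in dfs.ha.namenodes.mycluster.
# show_ha_states is a hypothetical helper, not a Hadoop command.
show_ha_states() {
    for nn in nn1 nn2; do
        # getServiceState prints "active" or "standby"; a non-zero exit
        # usually means the NameNode could not be contacted.
        state=$("$HDFS_BIN" haadmin -getServiceState "$nn" 2>/dev/null) || state="unreachable"
        echo "$nn: $state"
    done
}
```

After the failover command above, the expectation is that nn1 reports active and nn2 reports standby.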

5. Verify functionality

root# ./bin/hdfs dfs -mkdir /hello

root# ./bin/hdfs dfs -ls /

Found 1 items

drwxr-xr-x - root supergroup 0 2018-06-01 13:49 /hello

root# ./bin/hdfs dfs -put /root/a.txt /hello

root# ./bin/hdfs dfs -cat /hello/a.txt

hello world

Manual HA failover

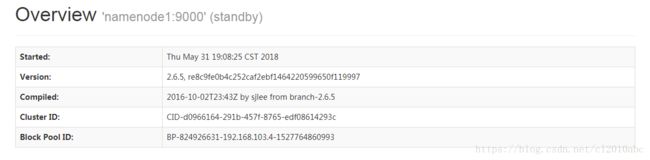

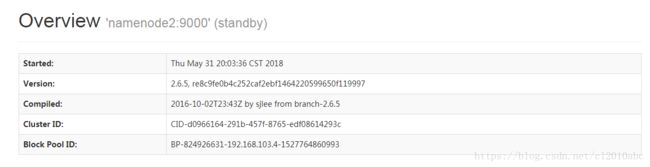

namenode1 is now active and namenode2 is standby. We simulate a crash of the NameNode service on namenode1 and manually fail over to namenode2.

On namenode1:

root# jps

7715 NameNode

6525 Jps

Simulate a crash of the NameNode service:

root# kill -9 7715

Make namenode2 the active node:

./bin/hdfs haadmin -failover --forceactive nn1 nn2

The log shows the following errors:

18/06/01 13:33:12 INFO ipc.Client: Retrying connect to server: namenode1/192.168.103.4:9000. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=1, sleepTime=1000 MILLISECONDS)

18/06/01 13:33:12 WARN ha.FailoverController: Unable to gracefully make NameNode at namenode1/192.168.103.4:9000 standby (unable to connect)

java.net.ConnectException: Call From namenode1/192.168.103.4 to namenode1:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:791)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:731)

at org.apache.hadoop.ipc.Client.call(Client.java:1474)

at org.apache.hadoop.ipc.Client.call(Client.java:1401)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232)

at com.sun.proxy.$Proxy8.transitionToStandby(Unknown Source)

at org.apache.hadoop.ha.protocolPB.HAServiceProtocolClientSideTranslatorPB.transitionToStandby(HAServiceProtocolClientSideTranslatorPB.java:112)

at org.apache.hadoop.ha.FailoverController.tryGracefulFence(FailoverController.java:172)

at org.apache.hadoop.ha.FailoverController.failover(FailoverController.java:210)

at org.apache.hadoop.ha.HAAdmin.failover(HAAdmin.java:295)

at org.apache.hadoop.ha.HAAdmin.runCmd(HAAdmin.java:455)

at org.apache.hadoop.hdfs.tools.DFSHAAdmin.runCmd(DFSHAAdmin.java:120)

at org.apache.hadoop.ha.HAAdmin.run(HAAdmin.java:384)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:84)

at org.apache.hadoop.hdfs.tools.DFSHAAdmin.main(DFSHAAdmin.java:132)

Caused by: java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:530)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:494)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:609)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:707)

at org.apache.hadoop.ipc.Client$Connection.access$2800(Client.java:370)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1523)

at org.apache.hadoop.ipc.Client.call(Client.java:1440)

... 13 more

18/06/01 13:33:12 INFO ha.NodeFencer: ====== Beginning Service Fencing Process... ======

18/06/01 13:33:12 INFO ha.NodeFencer: Trying method 1/1: org.apache.hadoop.ha.SshFenceByTcpPort(null)

18/06/01 13:33:12 INFO ha.SshFenceByTcpPort: Connecting to namenode1...

18/06/01 13:33:12 INFO SshFenceByTcpPort.jsch: Connecting to namenode1 port 22

18/06/01 13:33:12 INFO SshFenceByTcpPort.jsch: Connection established

18/06/01 13:33:12 INFO SshFenceByTcpPort.jsch: Remote version string: SSH-2.0-OpenSSH_7.2p2 Ubuntu-4ubuntu2.1

18/06/01 13:33:12 INFO SshFenceByTcpPort.jsch: Local version string: SSH-2.0-JSCH-0.1.42

18/06/01 13:33:12 INFO SshFenceByTcpPort.jsch: CheckCiphers: aes256-ctr,aes192-ctr,aes128-ctr,aes256-cbc,aes192-cbc,aes128-cbc,3des-ctr,arcfour,arcfour128,arcfour256

18/06/01 13:33:12 INFO SshFenceByTcpPort.jsch: aes256-ctr is not available.

18/06/01 13:33:12 INFO SshFenceByTcpPort.jsch: aes192-ctr is not available.

18/06/01 13:33:12 INFO SshFenceByTcpPort.jsch: aes256-cbc is not available.

18/06/01 13:33:12 INFO SshFenceByTcpPort.jsch: aes192-cbc is not available.

18/06/01 13:33:12 INFO SshFenceByTcpPort.jsch: arcfour256 is not available.

18/06/01 13:33:12 INFO SshFenceByTcpPort.jsch: SSH_MSG_KEXINIT sent

18/06/01 13:33:12 INFO SshFenceByTcpPort.jsch: SSH_MSG_KEXINIT received

18/06/01 13:33:12 INFO SshFenceByTcpPort.jsch: Disconnecting from namenode1 port 22

18/06/01 13:33:12 WARN ha.SshFenceByTcpPort: Unable to connect to namenode1 as user root

com.jcraft.jsch.JSchException: Algorithm negotiation fail

at com.jcraft.jsch.Session.receive_kexinit(Session.java:520)

at com.jcraft.jsch.Session.connect(Session.java:286)

at org.apache.hadoop.ha.SshFenceByTcpPort.tryFence(SshFenceByTcpPort.java:100)

at org.apache.hadoop.ha.NodeFencer.fence(NodeFencer.java:97)

at org.apache.hadoop.ha.FailoverController.failover(FailoverController.java:216)

at org.apache.hadoop.ha.HAAdmin.failover(HAAdmin.java:295)

at org.apache.hadoop.ha.HAAdmin.runCmd(HAAdmin.java:455)

at org.apache.hadoop.hdfs.tools.DFSHAAdmin.runCmd(DFSHAAdmin.java:120)

at org.apache.hadoop.ha.HAAdmin.run(HAAdmin.java:384)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:84)

at org.apache.hadoop.hdfs.tools.DFSHAAdmin.main(DFSHAAdmin.java:132)

18/06/01 13:33:12 WARN ha.NodeFencer: Fencing method org.apache.hadoop.ha.SshFenceByTcpPort(null) was unsuccessful.

18/06/01 13:33:12 ERROR ha.NodeFencer: Unable to fence service by any configured method.

Failover failed: Unable to fence NameNode at namenode1/192.168.103.4:9000. Fencing failed.

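The log shows that SSH algorithm negotiation failed. A search shows that the cause is an incompatibility between the bundled jsch jar and the SSH server on the host, so the jsch-0.1.42.jar shipped with Hadoop needs to be replaced. Here we upgrade it to version 0.1.54.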

root@namenode1:# rm share/hadoop/common/lib/jsch-0.1.42.jar

root@namenode1:# mv jsch-0.1.54.jar share/hadoop/common/lib/
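After swapping the jar, a quick check that exactly one jsch version remains in the lib directory can save a confusing retry. This is a minimal sketch; `list_jsch_versions` is a hypothetical helper, and the default path assumes the command is run from the Hadoop install root as in the commands above.

```shell
#!/bin/sh
# Report which jsch jar versions are present in a Hadoop lib directory.
# list_jsch_versions is a hypothetical helper, not part of Hadoop.
list_jsch_versions() {
    libdir="${1:-share/hadoop/common/lib}"
    for jar in "$libdir"/jsch-*.jar; do
        # If nothing matched, the glob stays literal; skip it.
        [ -e "$jar" ] || continue
        base=${jar##*/}      # strip the directory part
        ver=${base#jsch-}    # strip the "jsch-" prefix
        echo "${ver%.jar}"   # strip the ".jar" suffix
    done
}
```

If the function prints both 0.1.42 and 0.1.54, the old jar is still on the classpath and should be removed before retrying the failover.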

Re-run the failover command:

root@namenode1:# ./bin/hdfs haadmin -failover --forceactive nn1 nn2

18/06/01 13:44:25 INFO ipc.Client: Retrying connect to server: namenode1/192.168.103.4:9000. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=1, sleepTime=1000 MILLISECONDS)

18/06/01 13:44:25 WARN ha.FailoverController: Unable to gracefully make NameNode at namenode1/192.168.103.4:9000 standby (unable to connect)

java.net.ConnectException: Call From namenode1/192.168.103.4 to namenode1:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:791)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:731)

at org.apache.hadoop.ipc.Client.call(Client.java:1474)

at org.apache.hadoop.ipc.Client.call(Client.java:1401)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232)

at com.sun.proxy.$Proxy8.transitionToStandby(Unknown Source)

at org.apache.hadoop.ha.protocolPB.HAServiceProtocolClientSideTranslatorPB.transitionToStandby(HAServiceProtocolClientSideTranslatorPB.java:112)

at org.apache.hadoop.ha.FailoverController.tryGracefulFence(FailoverController.java:172)

at org.apache.hadoop.ha.FailoverController.failover(FailoverController.java:210)

at org.apache.hadoop.ha.HAAdmin.failover(HAAdmin.java:295)

at org.apache.hadoop.ha.HAAdmin.runCmd(HAAdmin.java:455)

at org.apache.hadoop.hdfs.tools.DFSHAAdmin.runCmd(DFSHAAdmin.java:120)

at org.apache.hadoop.ha.HAAdmin.run(HAAdmin.java:384)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:84)

at org.apache.hadoop.hdfs.tools.DFSHAAdmin.main(DFSHAAdmin.java:132)

Caused by: java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:530)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:494)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:609)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:707)

at org.apache.hadoop.ipc.Client$Connection.access$2800(Client.java:370)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1523)

at org.apache.hadoop.ipc.Client.call(Client.java:1440)

... 13 more

18/06/01 13:44:25 INFO ha.NodeFencer: ====== Beginning Service Fencing Process... ======

18/06/01 13:44:25 INFO ha.NodeFencer: Trying method 1/1: org.apache.hadoop.ha.SshFenceByTcpPort(null)

18/06/01 13:44:25 INFO ha.SshFenceByTcpPort: Connecting to namenode1...

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: Connecting to namenode1 port 22

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: Connection established

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: Remote version string: SSH-2.0-OpenSSH_7.2p2 Ubuntu-4ubuntu2.1

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: Local version string: SSH-2.0-JSCH-0.1.54

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: CheckCiphers: aes256-ctr,aes192-ctr,aes128-ctr,aes256-cbc,aes192-cbc,aes128-cbc,3des-ctr,arcfour,arcfour128,arcfour256

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: aes256-ctr is not available.

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: aes192-ctr is not available.

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: aes256-cbc is not available.

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: aes192-cbc is not available.

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: CheckKexes: diffie-hellman-group14-sha1,ecdh-sha2-nistp256,ecdh-sha2-nistp384,ecdh-sha2-nistp521

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: CheckSignatures: ecdsa-sha2-nistp256,ecdsa-sha2-nistp384,ecdsa-sha2-nistp521

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: SSH_MSG_KEXINIT sent

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: SSH_MSG_KEXINIT received

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: server: curve25519-sha256@libssh.org,ecdh-sha2-nistp256,ecdh-sha2-nistp384,ecdh-sha2-nistp521,diffie-hellman-group-exchange-sha256,diffie-hellman-group14-sha1

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: server: ssh-rsa,rsa-sha2-512,rsa-sha2-256,ecdsa-sha2-nistp256,ssh-ed25519

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: server: chacha20-poly1305@openssh.com,aes128-ctr,aes192-ctr,aes256-ctr,aes128-gcm@openssh.com,aes256-gcm@openssh.com

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: server: chacha20-poly1305@openssh.com,aes128-ctr,aes192-ctr,aes256-ctr,aes128-gcm@openssh.com,aes256-gcm@openssh.com

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: server: umac-64-etm@openssh.com,umac-128-etm@openssh.com,hmac-sha2-256-etm@openssh.com,hmac-sha2-512-etm@openssh.com,hmac-sha1-etm@openssh.com,umac-64@openssh.com,umac-128@openssh.com,hmac-sha2-256,hmac-sha2-512,hmac-sha1

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: server: umac-64-etm@openssh.com,umac-128-etm@openssh.com,hmac-sha2-256-etm@openssh.com,hmac-sha2-512-etm@openssh.com,hmac-sha1-etm@openssh.com,umac-64@openssh.com,umac-128@openssh.com,hmac-sha2-256,hmac-sha2-512,hmac-sha1

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: server: none,zlib@openssh.com

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: server: none,zlib@openssh.com

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: server:

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: server:

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: client: ecdh-sha2-nistp256,ecdh-sha2-nistp384,ecdh-sha2-nistp521,diffie-hellman-group14-sha1,diffie-hellman-group-exchange-sha256,diffie-hellman-group-exchange-sha1,diffie-hellman-group1-sha1

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: client: ssh-rsa,ssh-dss,ecdsa-sha2-nistp256,ecdsa-sha2-nistp384,ecdsa-sha2-nistp521

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: client: aes128-ctr,aes128-cbc,3des-ctr,3des-cbc,blowfish-cbc

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: client: aes128-ctr,aes128-cbc,3des-ctr,3des-cbc,blowfish-cbc

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: client: hmac-md5,hmac-sha1,hmac-sha2-256,hmac-sha1-96,hmac-md5-96

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: client: hmac-md5,hmac-sha1,hmac-sha2-256,hmac-sha1-96,hmac-md5-96

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: client: none

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: client: none

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: client:

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: client:

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: server->client aes128-ctr hmac-sha1 none

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: kex: client->server aes128-ctr hmac-sha1 none

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: SSH_MSG_KEX_ECDH_INIT sent

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: expecting SSH_MSG_KEX_ECDH_REPLY

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: ssh_rsa_verify: signature true

18/06/01 13:44:25 WARN SshFenceByTcpPort.jsch: Permanently added 'namenode1' (RSA) to the list of known hosts.

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: SSH_MSG_NEWKEYS sent

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: SSH_MSG_NEWKEYS received

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: SSH_MSG_SERVICE_REQUEST sent

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: SSH_MSG_SERVICE_ACCEPT received

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: Authentications that can continue: publickey,keyboard-interactive,password

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: Next authentication method: publickey

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: Authentication succeeded (publickey).

18/06/01 13:44:25 INFO ha.SshFenceByTcpPort: Connected to namenode1

18/06/01 13:44:25 INFO ha.SshFenceByTcpPort: Looking for process running on port 9000

18/06/01 13:44:25 INFO ha.SshFenceByTcpPort: Indeterminate response from trying to kill service. Verifying whether it is running using nc...

18/06/01 13:44:25 INFO ha.SshFenceByTcpPort: Verified that the service is down.

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: Disconnecting from namenode1 port 22

18/06/01 13:44:25 INFO ha.NodeFencer: ====== Fencing successful by method org.apache.hadoop.ha.SshFenceByTcpPort(null) ======

18/06/01 13:44:25 INFO SshFenceByTcpPort.jsch: Caught an exception, leaving main loop due to Socket closed

Failover from nn1 to nn2 successful

The failover succeeded, and namenode2 is now in the active state.
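The last few log lines ("Looking for process running on port 9000", "Indeterminate response from trying to kill service", "Verified that the service is down") reflect how the sshfence method works: it logs in to the old active node over SSH, tries to kill whatever is listening on the NameNode RPC port, and, if nothing was killed, checks that the port is really closed. The sketch below reproduces that logic by hand for debugging. It is a simplified approximation rather than Hadoop's actual implementation; `manual_port_fence` and the `SSH` variable are names assumed here.

```shell
#!/bin/sh
# SSH is an assumed variable so the ssh client can be swapped out when testing.
SSH="${SSH:-ssh}"

# Simplified, hand-run approximation of what sshfence does.
# manual_port_fence is a hypothetical helper, not a Hadoop command.
manual_port_fence() {
    host="$1"; port="$2"
    # fuser -k exits 0 when it found and signalled a process on the port.
    if "$SSH" "root@$host" "PATH=\$PATH:/sbin:/usr/sbin fuser -v -k -n tcp $port"; then
        echo "killed process listening on $host:$port"
        return 0
    fi
    # Nothing was killed: confirm from the target host that the port is closed.
    if "$SSH" "root@$host" "nc -z $host $port"; then
        echo "service on $host:$port is still reachable; fencing failed"
        return 1
    fi
    echo "verified that $host:$port is down"
}
```

In the run above the NameNode had already been killed with `kill -9`, so there was nothing left for fencing to kill and the port check is what confirmed that namenode1 was safe to replace. Running `manual_port_fence namenode1 9000` in that situation would take the same second branch.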