DQN(1)

DQN(1)

- DQN(1)

- 资料

- 为什么需要DQN

- 伪代码

- 需要

- 复现莫烦PYTHON的核心代码

- 效果

- 下一步任务

资料

莫烦PYTHON

DeepMind

《强化学习精要》

Deep Reinforcement Learning 基础知识(DQN方面)

用Tensorflow基于Deep Q Learning DQN 玩Flappy Bird

Human-level control through deep reinforcement learning

为什么需要DQN

q-learning需要一张q table来表示q value,如果state和action数量巨大,那么这张q table就会很大,导致存储查找都极其耗费时间和空间。解决的思路就是,用一个神经网络来代替这张q table。可以将这个神经网络想象成一个函数,即 q_values,a=f(state,action) q _ v a l u e s , a = f ( s t a t e , a c t i o n ) ,或者更近一步,我们只需要输入state,因为q learning在选择action的时候比较激进,直接找最大的q value对应的action。给网络输入state,网络输出所有的action对应的q value,我们自己再在这个输出的tensor中

找到q value最大的对应的action,完成action的选择。

那么怎么更新q value的值呢?怎么训练网络。一个问题是监督学习需要大量数据,一个问题是强化学习的数据是有顺序的序列,而神经网络需要的是独立同分布的数据。

解决办法是,设置一个样本回放缓冲区replay buffer,也就是存储bot跟环境交互产生的(s, a, r, s_)。其容量很大,当replay buffer满了以后会用新数据覆盖老数据。每次训练的时候都从replay buffer中随机的抽取一批数据。这样一来,打乱了数据的相关性,向独立同分布靠近。同时也提高了数据的使用效率。

另外一个要解决的问题是不稳定的问题。在q-learning中,本次更新由上次的q-value和q target决定。

那么如果用一个神经网络来完成更新的时候,由于样本之间的差异会造成一定的波动性,数据本身的这种不稳定性,在迭代的时候可能会有波动,如果有波动,会随着迭代传递下去。我们无法得到一个稳定的模型。

为了增加模型的稳定性,要将更新的两部分拆分开,解耦。

那么增加一个一模一样的网络target network。target network和behavior network模型一模一样,初始化参数也相同。但是behavior network和环境交互去获取(s, a, r, s_),同时决定action的产生。target network仅仅一个作用,就是替代上边公式中的一部分。

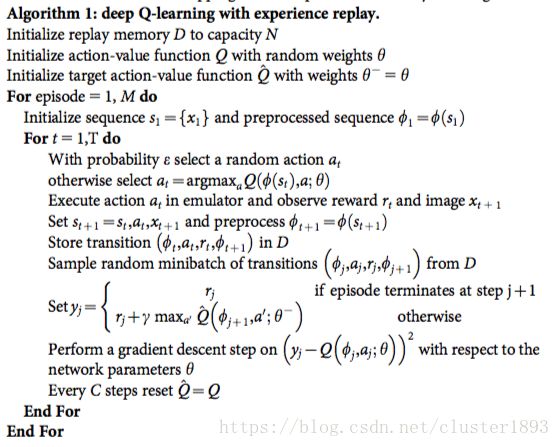

伪代码

depp Q-learning with experience replay:

Initialize replay memory D D to capacity N N .

Initialize action-value function Q Q with random weights θ θ

Initialize target action-value function Q̂ Q ^ with weights θ−=θ θ − = θ

For episode=1,M e p i s o d e = 1 , M do:

- Initialize sequense s1={x1} s 1 = { x 1 } and preprocessed sequence ϕ1=ϕ(s1) ϕ 1 = ϕ ( s 1 )

- For t=1,T t = 1 , T do:

- With probability ϵ ϵ select a random aciton at a t , otherwise select at=argmaxaQ(ϕt,a;θ) a t = a r g m a x a Q ( ϕ t , a ; θ )

- Execute action at a t in emulator and observe reward rt r t and image xt+1 x t + 1

- Set st+1=xt+1, s t + 1 = x t + 1 , and preprecess ϕt+1=ϕ(st+1) ϕ t + 1 = ϕ ( s t + 1 )

- Store transition (ϕt,at,rt,ϕt+1) ( ϕ t , a t , r t , ϕ t + 1 ) in D

- Sample random minibatch of transitions (ϕj,aj,rj,ϕj+1) ( ϕ j , a j , r j , ϕ j + 1 )

- Set:

- Perform a gradient descent step on (yj−Q(ϕj,aj;θ))2 ( y j − Q ( ϕ j , a j ; θ ) ) 2 with respect to the network parameters \theta

- Set st=st+1 s t = s t + 1

- Every C steps reset Q̂ =Q Q ^ = Q

- End for

- End for

改正了论文中的错误。论文中的算法如下:

需要

一个容量为N的容器D,存储大量的( s, a,r,s’ ),同时设置从容器抽取样本的数量batch_size;

两个相同结构的网络,Q、Q‘,并且初始化的参数相同。

复现莫烦PYTHON的核心代码

# coding:utf-8

import os

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

class DeepQNet:

def __init__(

self,

n_features,

n_actions,

learning_rate=0.01,

reward_decay=0.9,

e_greedy_max=0.9,

e_greedy_increment=None,

replace_target_iter=300,

memory_pool_size=500,

batch_size=32,

output_graph=False,

):

# 强化学习需要的部分

self.gamma = reward_decay # 折扣因子

self.e_greedy_max = e_greedy_max # =0.9,90%利用,10%探索 e 即: epsilon

self.e_greedy_increment = e_greedy_increment # epsilon greedy的增量

self.e_greedy = 0 if e_greedy_increment is not None else e_greedy_max # 即有增量就从100%探索开始到100%利用,无就固定一个值

# 神经网络需要的部分

self.lr = learning_rate # 学习率alpha

self.n_features = n_features # state,state_的特征数量

self.n_actions = n_actions # action的数量

self.replace_target_iter = replace_target_iter # 每隔replace_target_iter个action以后更新一次q target网络的参数,更新q target的步数

self.learn_step_counter = 0 # 记录学习的步数,便于进行更新q target的参数更新

# 记忆池

self.memory_pool_size = memory_pool_size # 记忆池的容量,一般比较大,比如100万

self.memory_pool_counter = 0

self.memory_pool = np.zeros((memory_pool_size, n_features * 2 + 2)) # 全零初始化记忆池

self.batch_size = batch_size # 每次从记忆池取出数据的数量

self._build_net() # 建立网络q target net, q evaluate net

target_params = tf.get_collection('target_net_params') # 从collection中提取q target的参数

eval_params = tf.get_collection('eval_net_params') # 提取q eval的参数

self.replace_q_target_op_params = [tf.assign(t, e) for t, e in zip(target_params, eval_params)]

self.cost_history = [] # cost的更改数据,用来监测网络学习的结果

self.sess = tf.Session()

if output_graph:

# 需要从根目录开始写完整路径# FIXME 可改进仅写一次,不要每次运行都生成一个图,if图存在,则不生成

# tensorboard --logdir=name1:/Users/tu/PycharmProjects/myFirstPythonDir/DQN/logs

tf.summary.FileWriter('logs/', self.sess.graph)

self.sess.run(tf.global_variables_initializer())

# 建立神经网络

def _build_net(self):

self.state = tf.placeholder(tf.float32, [None, self.n_features], name='state')

self.state_ = tf.placeholder(tf.float32, [None, self.n_features], name='state_')

self.q_target = tf.placeholder(tf.float32, [None, self.n_actions], name='Q_target') # target net 的输出值

w_initializer, b_initializer = tf.random_normal_initializer(0., 0.3), tf.constant_initializer(0.1)

with tf.variable_scope('eval_net'):

my_collections = ['eval_net_params', tf.GraphKeys.GLOBAL_VARIABLES]

with tf.variable_scope('l1'):

w1 = tf.get_variable('w1', [self.n_features, 20], initializer=w_initializer, collections=my_collections)

b1 = tf.get_variable('b1', [1, 20], initializer=b_initializer, collections=my_collections)

l1 = tf.nn.relu(tf.matmul(self.state, w1) + b1)

with tf.variable_scope('l2'):

w2 = tf.get_variable('w2', [20, self.n_actions], initializer=w_initializer, collections=my_collections)

b2 = tf.get_variable('b2', [1, self.n_actions], initializer=w_initializer, collections=my_collections)

self.q_eval = tf.matmul(l1, w2) + b2 # 输出某一个state的actions value

with tf.variable_scope('loss'):

self.loss = tf.reduce_mean(tf.squared_difference(self.q_target, self.q_eval))

with tf.variable_scope('train'):

self.train_op = tf.train.RMSPropOptimizer(self.lr).minimize(self.loss)

with tf.variable_scope('target_net'): # fixme 初始化的时候两个网络的参数不同,目标是相同

my_collections = ['target_net_params', tf.GraphKeys.GLOBAL_VARIABLES]

with tf.variable_scope('l1'):

w1 = tf.get_variable('w1', [self.n_features, 20], initializer=w_initializer, collections=my_collections)

b1 = tf.get_variable('b1', [1, 20], initializer=b_initializer, collections=my_collections)

l1 = tf.nn.relu(tf.matmul(self.state_, w1) + b1)

with tf.variable_scope('l2'):

w2 = tf.get_variable('w2', [20, self.n_actions], initializer=w_initializer, collections=my_collections)

b2 = tf.get_variable('b2', [1, self.n_actions], initializer=b_initializer, collections=my_collections)

self.q_next = tf.matmul(l1, w2) + b2

# 存储记忆

def store_memory(self, state, action, reward, state_):

transition = np.hstack((state, action, reward, state_))

index = self.memory_pool_counter % self.memory_pool_size

self.memory_pool[index, :] = transition

self.memory_pool_counter += 1

# 选择行为

def choose_action(self, observation):

observation = observation[np.newaxis, :]

if np.random.uniform() < self.e_greedy:

actions_value = self.sess.run(self.q_eval, feed_dict={self.state: observation})

action = np.argmax(actions_value)

else:

action = np.random.randint(0, self.n_actions)

return action

# 更新q网络

def learn(self):

# 从memory poll 中随机获取一批数据

if self.memory_pool_counter >= self.memory_pool_size:

sample_index = np.random.choice(self.memory_pool_size, self.batch_size)

else:

sample_index = np.random.choice(self.memory_pool_counter, self.batch_size)

batch_memory = self.memory_pool[sample_index, :]

# 计算出实际值

q_eval, q_next = self.sess.run([self.q_eval, self.q_next],

feed_dict={self.state: batch_memory[:, :self.n_features],

self.state_: batch_memory[:, -self.n_features:]})

q_target = q_eval.copy()

batch_index = np.arange(self.batch_size, dtype=np.int32)

eval_action_index = batch_memory[:, self.n_features].astype(int)

reward = batch_memory[:, self.n_features + 1]

q_target[batch_index, eval_action_index] = reward + self.gamma * np.max(q_next, axis=1) # axis = 1才为行向

# 实际值与预测值构成lose,更新q eval参数

_, cost = self.sess.run([self.train_op, self.loss], feed_dict={self.state: batch_memory[:, :self.n_features],

self.q_target: q_target})

# 到达一定局数,更新q target网络

if (self.learn_step_counter % self.replace_target_iter) == 0:

self.sess.run(self.replace_q_target_op_params)

print('q target net has updated')

# 添加loss

self.cost_history.append(cost)

# epsilon

self.e_greedy = self.e_greedy + self.e_greedy_increment \

if self.e_greedy < self.e_greedy_max else self.e_greedy_max

self.learn_step_counter += 1

# 代价函数下降线

def plot_cost(self):

plt.plot(np.arange(len(self.cost_history)), self.cost_history)

plt.xlabel('my training steps')

plt.ylabel('my cost')

plt.show()效果

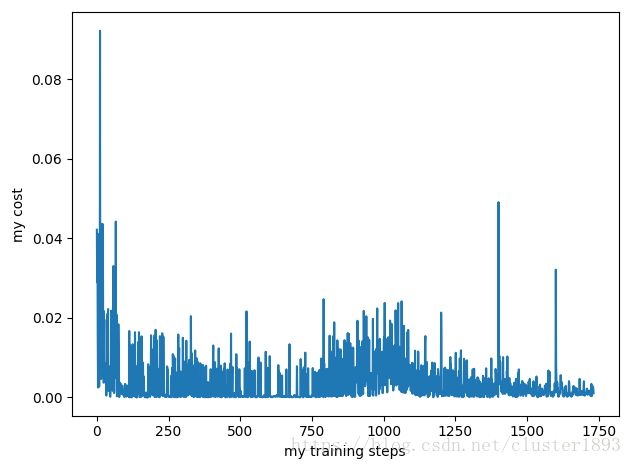

代价函数的曲线:

可以看到确实是有波动性的。

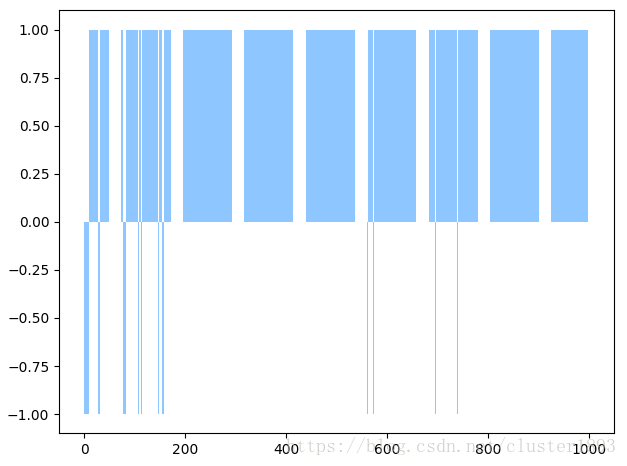

胜利和失败情况:

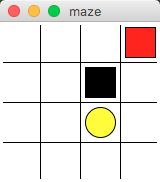

玩的游戏比较简单:

下一步任务

flappy bird