1. Linear Regression

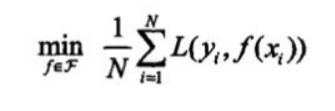

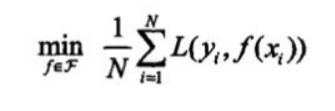

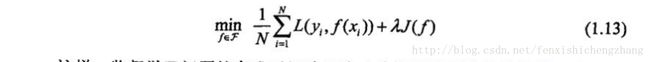

LinearRegression: fits by empirical risk minimization, where the objective is the loss function alone (squared loss).

>>> from sklearn import linear_model

>>> reg = linear_model.LinearRegression()

>>> reg.fit([[0, 0], [1, 1], [2, 2]], [0, 1, 2])

LinearRegression(copy_X=True, fit_intercept=True, n_jobs=1, normalize=False)

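normalize: whether to normalize the features before fitting (off by default). The parameter belongs to older scikit-learn releases; it was deprecated in version 1.0 and removed in 1.2.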

>>> reg.coef_

array([ 0.5, 0.5])

>>> reg.intercept_

1.1102230246251565e-16

>>> reg.predict([[2,5]])

array([ 3.5])

>>> reg.normalize

False

>>> reg.get_params

<bound method BaseEstimator.get_params of LinearRegression(copy_X=True, fit_intercept=True, n_jobs=1, normalize=False)>
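As a rough illustration of what "empirical risk with squared loss" means, the sketch below evaluates the mean squared error of a linear model in plain Python. The helper names `predict` and `empirical_risk` are hypothetical and not part of scikit-learn; the weights are the ones printed by `reg.coef_` in the session above, and the second candidate is an arbitrary choice for comparison.

```python
def predict(w, b, x):
    """Linear model: dot(w, x) + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def empirical_risk(w, b, X, y):
    """Mean squared loss over the training set."""
    return sum((predict(w, b, xi) - yi) ** 2 for xi, yi in zip(X, y)) / len(y)


X = [[0, 0], [1, 1], [2, 2]]
y = [0, 1, 2]

# Weights reported by reg.coef_ (intercept treated as 0 for simplicity).
fitted_risk = empirical_risk([0.5, 0.5], 0.0, X, y)
# An arbitrary alternative that fits the data worse.
other_risk = empirical_risk([1.0, 1.0], 0.0, X, y)

print(fitted_risk, other_risk)
print(predict([0.5, 0.5], 0.0, [2, 5]))  # same input as reg.predict([[2, 5]])
```

The fitted weights reproduce every training target, so their empirical risk is zero, while the alternative candidate has a strictly larger risk.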

2. Ridge Regression

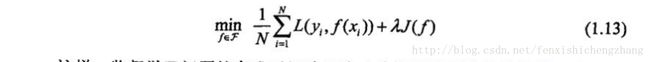

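Ridge/RidgeCV: fit by structural risk minimization, where the objective is the loss function (squared loss) plus a regularization term (L2 norm).

Ridge: fix alpha and solve for the optimal w. A larger alpha shrinks the norm of w (the relationship is monotone, not strict inverse proportionality).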

>>> from sklearn import linear_model

>>> reg = linear_model.Ridge(alpha=.5)

>>> reg.fit([[0, 0], [0, 0], [1, 1]], [0, .1, 1])

Ridge(alpha=0.5, copy_X=True, fit_intercept=True, max_iter=None,

normalize=False, random_state=None, solver='auto', tol=0.001)

>>> reg.coef_

array([ 0.34545455, 0.34545455])

>>> reg.intercept_

0.13636...

>>> reg.set_params(alpha=0.6)

Ridge(alpha=0.6, copy_X=True, fit_intercept=True, max_iter=None,

normalize=False, random_state=None, solver='auto', tol=0.001)

>>> reg.fit([[0, 0], [0, 0], [1, 1]], [0, .1, 1])

Ridge(alpha=0.6, copy_X=True, fit_intercept=True, max_iter=None,

normalize=False, random_state=None, solver='auto', tol=0.001)

>>> reg.coef_

array([ 0.32758621, 0.32758621])
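To make the link between alpha and the norm of w concrete, here is a minimal plain-Python sketch of ridge regression that solves the regularized normal equations on mean-centered data. The helpers `solve` and `ridge_fit` are hypothetical names introduced for illustration, and this is only one way to compute the solution; the routine scikit-learn picks under `solver='auto'` may differ. The value alpha=5.0 is an extra, assumed setting added to show the trend.

```python
def solve(A, b):
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def ridge_fit(X, y, alpha):
    """Return (w, b) minimizing ||y - Xw - b||^2 + alpha * ||w||^2."""
    n, d = len(X), len(X[0])
    x_mean = [sum(row[j] for row in X) / n for j in range(d)]
    y_mean = sum(y) / n
    Xc = [[row[j] - x_mean[j] for j in range(d)] for row in X]  # centered features
    yc = [v - y_mean for v in y]                                 # centered targets
    # Regularized normal equations: (Xc^T Xc + alpha * I) w = Xc^T yc
    A = [[sum(r[j] * r[k] for r in Xc) + (alpha if j == k else 0.0)
          for k in range(d)] for j in range(d)]
    rhs = [sum(r[j] * t for r, t in zip(Xc, yc)) for j in range(d)]
    w = solve(A, rhs)
    b = y_mean - sum(m * wj for m, wj in zip(x_mean, w))  # intercept is not penalized
    return w, b


X = [[0, 0], [0, 0], [1, 1]]
y = [0, 0.1, 1]
norms = []
for alpha in (0.5, 0.6, 5.0):
    w, b = ridge_fit(X, y, alpha)
    norms.append(sum(v * v for v in w) ** 0.5)
    print(alpha, w, b, norms[-1])
```

For alpha=0.5 and alpha=0.6 the sketch should agree with the `coef_` values shown in the session, and the printed norm of w decreases as alpha increases.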

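RidgeCV: given several candidate alphas, fit the optimal w for each one, then use cross-validation to select the best w together with its alpha.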

>>> from sklearn import linear_model

>>> reg = linear_model.RidgeCV(alphas=[0.1, 1.0, 10.0])

>>> reg.fit([[0, 0], [0, 0], [1, 1]], [0, .1, 1])

RidgeCV(alphas=[0.1, 1.0, 10.0], cv=None, fit_intercept=True, scoring=None, normalize=False)

>>> reg.alpha_

0.1
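The selection step can be sketched as an explicit leave-one-out loop: fit on all samples but one, score the held-out sample, and keep the alpha with the lowest average squared error. This is a plain-Python illustration under assumptions; `solve`, `ridge_fit`, and `loo_error` are hypothetical helpers, and with `cv=None` scikit-learn uses an efficient form of leave-one-out rather than refitting per sample as done here.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def ridge_fit(X, y, alpha):
    """Ridge with an unpenalized intercept, via normal equations on centered data."""
    n, d = len(X), len(X[0])
    x_mean = [sum(row[j] for row in X) / n for j in range(d)]
    y_mean = sum(y) / n
    Xc = [[row[j] - x_mean[j] for j in range(d)] for row in X]
    yc = [v - y_mean for v in y]
    A = [[sum(r[j] * r[k] for r in Xc) + (alpha if j == k else 0.0)
          for k in range(d)] for j in range(d)]
    rhs = [sum(r[j] * t for r, t in zip(Xc, yc)) for j in range(d)]
    w = solve(A, rhs)
    return w, y_mean - sum(m * wj for m, wj in zip(x_mean, w))


def loo_error(X, y, alpha):
    """Mean squared error over leave-one-out folds for a given alpha."""
    total = 0.0
    for i in range(len(X)):
        X_train, y_train = X[:i] + X[i + 1:], y[:i] + y[i + 1:]
        w, b = ridge_fit(X_train, y_train, alpha)
        prediction = sum(wj * xj for wj, xj in zip(w, X[i])) + b
        total += (prediction - y[i]) ** 2
    return total / len(X)


X = [[0, 0], [0, 0], [1, 1]]
y = [0, 0.1, 1]
alphas = [0.1, 1.0, 10.0]
errors = {alpha: loo_error(X, y, alpha) for alpha in alphas}
best_alpha = min(alphas, key=errors.get)
print(errors, best_alpha)
```

On this toy data the smallest candidate has the lowest leave-one-out error, consistent with the `reg.alpha_` value in the session.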

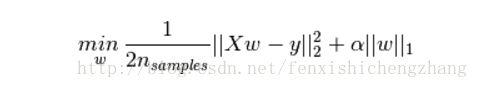

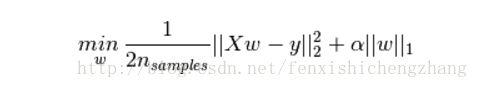

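3. Lasso (suited to sparse data sets)

Lasso: fits by structural risk minimization, where the objective is the loss function (squared loss) plus a regularization term (L1 norm).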

>>> from sklearn import linear_model  # import the linear_model module

>>> reg = linear_model.Lasso(alpha=0.1)  # create the model with alpha=0.1

>>> reg.fit([[0, 0], [1, 1]], [0, 1])  # fit on the training data

Lasso(alpha=0.1, copy_X=True, fit_intercept=True, max_iter=1000, normalize=False, positive=False, precompute=False, random_state=None, selection='cyclic', tol=0.0001, warm_start=False)

# max_iter: maximum number of iterations; tol: convergence tolerance

>>> reg.predict([[1, 1]])  # predict with the fitted model

array([ 0.8])
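The roles of `max_iter` and `tol` are easier to see in a small coordinate-descent sketch, the family of algorithm scikit-learn's Lasso is based on. The code below minimizes (1 / (2n)) * ||y - Xw - b||^2 + alpha * ||w||_1 in plain Python. The names `soft_threshold` and `lasso_fit` are hypothetical, and stopping when the largest coefficient change falls below `tol` is a simplifying assumption; scikit-learn's actual convergence check differs in detail.

```python
def soft_threshold(value, threshold):
    """Shrink value toward zero by threshold; values inside the band become 0."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def lasso_fit(X, y, alpha, max_iter=1000, tol=1e-4):
    """Cyclic coordinate descent for Lasso with an unpenalized intercept."""
    n, d = len(X), len(X[0])
    x_mean = [sum(row[j] for row in X) / n for j in range(d)]
    y_mean = sum(y) / n
    Xc = [[row[j] - x_mean[j] for j in range(d)] for row in X]
    yc = [v - y_mean for v in y]
    w = [0.0] * d
    for _ in range(max_iter):                      # at most max_iter sweeps
        max_change = 0.0
        for j in range(d):
            # Correlation of feature j with the residual that ignores feature j.
            rho = sum(
                Xc[i][j] * (yc[i] - sum(Xc[i][k] * w[k] for k in range(d) if k != j))
                for i in range(n)
            ) / n
            scale = sum(Xc[i][j] ** 2 for i in range(n)) / n
            new_wj = soft_threshold(rho, alpha) / scale if scale > 0 else 0.0
            max_change = max(max_change, abs(new_wj - w[j]))
            w[j] = new_wj
        if max_change < tol:                       # simplified convergence test
            break
    b = y_mean - sum(m * wj for m, wj in zip(x_mean, w))
    return w, b


w, b = lasso_fit([[0, 0], [1, 1]], [0, 1], alpha=0.1)
prediction = sum(wj * xj for wj, xj in zip(w, [1, 1])) + b
print(w, b, prediction)
```

On this data the two features are identical, so the L1 penalty keeps one weight and drives the other to (numerically) zero, and the prediction for `[1, 1]` should match the session's value of about 0.8.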