Analysis of the Cluster Split-Brain Problem

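1. What is cluster split brain

Split brain in a cluster usually occurs when some of the nodes become unreachable from the others (or when a node is under such heavy request load that the heartbeat checks other nodes run against it fail). When this happens, each of the resulting smaller clusters elects its own master node independently, so the original cluster ends up with several master nodes at the same time.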
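To make the definition concrete, here is a minimal sketch of isolated groups electing their own masters. The election rule (the node whose name sorts first wins, with no quorum) and the node names are assumptions chosen for illustration, not how any particular system elects a master.

```python
def elect_masters(partitions):
    """Return {master: members} for each isolated group of nodes.

    Toy rule (an assumption for illustration): every group elects the
    node whose name sorts first, and no quorum is required.
    """
    return {min(group): sorted(group) for group in partitions if group}


# One healthy cluster: every node can reach every other node.
healthy = elect_masters([{"node-a", "node-b", "node-c"}])

# The same nodes after node-c loses contact with the other two.
split = elect_masters([{"node-a", "node-b"}, {"node-c"}])

print(len(healthy))   # 1
print(sorted(split))  # ['node-a', 'node-c']
```

Each group behaves correctly from its own point of view; the problem only appears when the groups are looked at together.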

2. Split brain in an elasticsearch cluster

Assume elasticsearch is already installed as a cluster on three machines:

192.168.31.88 hadoop-master

192.168.31.234 hadoop-slave01

192.168.31.186 hadoop-slave02

The configuration of one of the elasticsearch nodes, hadoop-slave02, is:

# ======================== Elasticsearch Configuration =========================

#

# NOTE: Elasticsearch comes with reasonable defaults for most settings.

# Before you set out to tweak and tune the configuration, make sure you

# understand what are you trying to accomplish and the consequences.

#

# The primary way of configuring a node is via this file. This template lists

# the most important settings you may want to configure for a production cluster.

#

# Please consult the documentation for further information on configuration options:

# https://www.elastic.co/guide/en/elasticsearch/reference/index.html

#

# ---------------------------------- Cluster -----------------------------------

#

# Use a descriptive name for your cluster:

#

cluster.name: elasticsearch-cluster

#

# ------------------------------------ Node ------------------------------------

#

# Use a descriptive name for the node:

#

node.name: node-slave02

#

# Add custom attributes to the node:

#

#node.attr.rack: r1

#

# ----------------------------------- Paths ------------------------------------

#

# Path to directory where to store the data (separate multiple locations by comma):

#

path.data: /home/hadoop/workspace/elasticsearch/log/data

#

# Path to log files:

#

path.logs: /home/hadoop/workspace/elasticsearch/log/logs

#

# ----------------------------------- Memory -----------------------------------

#

# Lock the memory on startup:

#

#bootstrap.memory_lock: true

#

# Make sure that the heap size is set to about half the memory available

# on the system and that the owner of the process is allowed to use this

# limit.

#

# Elasticsearch performs poorly when the system is swapping the memory.

#

# ---------------------------------- Network -----------------------------------

#

# Set the bind address to a specific IP (IPv4 or IPv6):

#

network.host: 0.0.0.0

#

# Set a custom port for HTTP:

#

http.port: 9200

#

# For more information, consult the network module documentation.

#

# --------------------------------- Discovery ----------------------------------

#

# Pass an initial list of hosts to perform discovery when new node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#

#discovery.zen.ping.unicast.hosts: ["172.18.157.45"]

discovery.zen.ping.unicast.hosts: ["192.168.31.88"]

#

# Prevent the "split brain" by configuring the majority of nodes (total number of master-eligible nodes / 2 + 1):

#

#discovery.zen.minimum_master_nodes: 3

#

# For more information, consult the zen discovery module documentation.

#

# ---------------------------------- Gateway -----------------------------------

#

# Block initial recovery after a full cluster restart until N nodes are started:

#

#gateway.recover_after_nodes: 3

#

# For more information, consult the gateway module documentation.

#

# ---------------------------------- Various -----------------------------------

#

# Require explicit names when deleting indices:

#

#action.destructive_requires_name: true

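index.analysis.analyzer.ik.type : "ik"

Note that the other nodes use the same settings except for node.name. Also, because node.master and node.data are not set (both default to true), this node can both store index data and be elected as the actual master of the cluster. The hosts a node contacts for discovery and master election are set by this line of the configuration file:

discovery.zen.ping.unicast.hosts: ["192.168.31.88"]

This value is deliberately wrong so that split brain can be reproduced in the test. The correct setting is:

discovery.zen.ping.unicast.hosts: ["192.168.31.88","192.168.31.234","192.168.31.186"]

It lists every node that may become master, which avoids the split brain caused by a restart.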

2.1 Changes in the elasticsearch indices

First, let's look at how the indices in the elasticsearch cluster change:

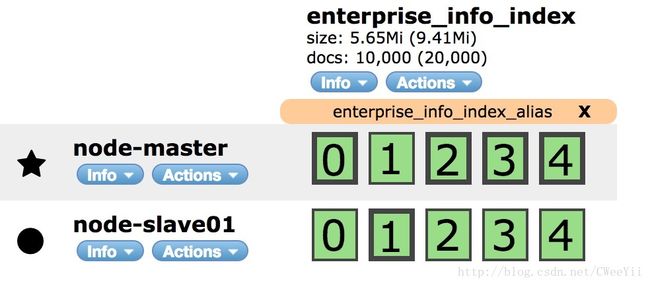

Index state when only the master node is running:

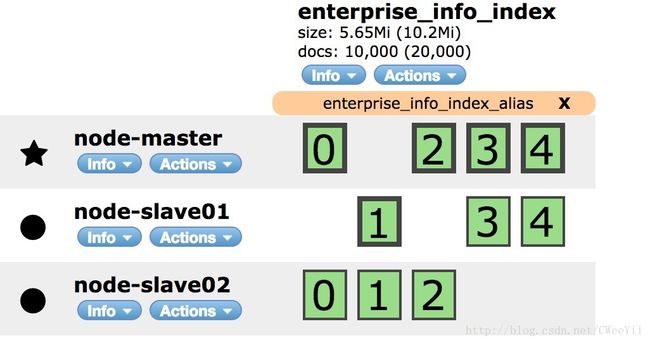

Index state after starting the slave01 node:

Index state after starting the slave02 node:

2.2 Analysis of elasticsearch split brain

Kill the slave01 node in the elasticsearch cluster:

hadoop@hadoop-slave01:~/workspace/elasticsearch$ jps

2357 Elasticsearch

2413 Jps

hadoop@hadoop-slave01:~/workspace/elasticsearch$ kill -9 2357

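hadoop@hadoop-slave01:~/workspace/elasticsearch$

After one machine in the cluster is killed, this is the stable state once the indices have been rebuilt:

Restart the elasticsearch service on the slave01 node: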

hadoop@hadoop-slave01:~/workspace/elasticsearch$ bin/elasticsearch -d

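hadoop@hadoop-slave01:~/workspace/elasticsearch$

After the elasticsearch service on slave01 is restarted, the state of the three-node cluster is:

All three machines below show the same cluster view, so no split brain has occurred: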

http://hadoop-master:9200/_plugin/head/

http://hadoop-slave02:9200/_plugin/head/

http://hadoop-slave01:9200/_plugin/head/

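In essence this works because of the discovery.zen.ping.unicast.hosts setting: slave01 contacts 192.168.31.88 (hadoop-master), which is still running, and rejoins the existing cluster.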
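Comparing the three head pages by eye can also be done with a short script. The sketch below asks each node for its local view of the elected master through the cluster state API (`/_cluster/state/master_node` with `local=true`) and reports whether the views disagree. The function names are made up for this example, and the host names and port are the ones used in this article; a node that has no master, or an Elasticsearch version that answers differently, would need extra handling.

```python
import json
from urllib.request import urlopen

# Host names taken from this article's three-machine setup.
NODES = ["hadoop-master", "hadoop-slave01", "hadoop-slave02"]


def local_master(host, port=9200, timeout=5):
    """Ask one node which master it currently follows (its local view)."""
    url = "http://%s:%d/_cluster/state/master_node?local=true" % (host, port)
    with urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8")).get("master_node")


def distinct_masters(views):
    """Return the set of distinct master ids in a {host: master_id} map."""
    return {m for m in views.values() if m is not None}


def check(hosts=NODES, fetch=local_master):
    """Collect every host's view; the second value is True on disagreement."""
    views = {h: fetch(h) for h in hosts}
    return views, len(distinct_masters(views)) > 1


# Offline demonstration with made-up node ids, so no network is needed.
sample = {"hadoop-master": "idA", "hadoop-slave01": "idB", "hadoop-slave02": "idB"}
views, split = check(sample.keys(), fetch=sample.get)
print(split)  # True
```

Calling `check()` with its defaults queries the real nodes instead of the sample data.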

Cluster state after the master node is killed:

Once the master node has been killed, the cluster holds a new election and slave02 is elected master. slave01 and slave02 both show the same view.

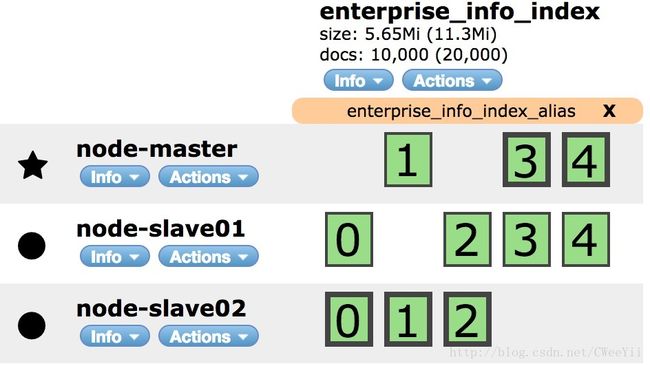

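After restarting the master node, let's first look at the state of the elasticsearch cluster:

The views of node-slave01 and node-slave02 have not changed: the restarted node-master has not been added to their cluster.

Now let's look at the cluster view from node-master:

As shown, the same cluster is now split: across node-master, node-slave01 and node-slave02 there are two master nodes at once, node-master and node-slave02. The consequence of the split is that index data updated through node-master cannot be accessed through node-slave01 or node-slave02.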
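Why the restarted node-master ends up alone can be traced with a simplified model of seed-based discovery. This is not Elasticsearch's actual implementation, only an assumed rule: a starting node contacts the hosts in its seed list, joins the master of the first running seed it finds, and elects itself if it finds none. `discover_master` and its arguments are hypothetical names.

```python
def discover_master(node, seed_hosts, running):
    """Return the master a starting node ends up with (toy model).

    running maps each node that is already up to the master it follows.
    The starting node only contacts its seed hosts, and no quorum is set.
    """
    for seed in seed_hosts:
        if seed != node and seed in running:
            return running[seed]  # join the cluster that seed belongs to
    return node                   # nobody found: elect itself


# State after hadoop-master was killed: both slaves follow hadoop-slave02.
running = {
    "192.168.31.234": "192.168.31.186",
    "192.168.31.186": "192.168.31.186",
}
restarted = "192.168.31.88"

# Seed list from the faulty configuration: the node only finds itself.
print(discover_master(restarted, ["192.168.31.88"], running))
# 192.168.31.88

# Seed list with all three machines: the node finds the running cluster.
all_hosts = ["192.168.31.88", "192.168.31.234", "192.168.31.186"]
print(discover_master(restarted, all_hosts, running))
# 192.168.31.186
```

The same model covers the earlier slave01 restart: its only seed, 192.168.31.88, was running at the time, so it rejoined the cluster.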

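2.3 Fixing split brain in elasticsearch

To fix the split brain seen here, list every node that may become master in the discovery setting of elasticsearch:

discovery.zen.ping.unicast.hosts: ["192.168.31.88","192.168.31.234","192.168.31.186"]

Cluster state at startup:

Cluster state after the node-master node is killed and then restarted:

After node-master fails and is restarted, the cluster has re-elected node-slave01 as master. node-master rejoins the cluster with node-slave01 as its master, and the indices are reallocated.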
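The configuration template shown earlier also contains a commented-out discovery.zen.minimum_master_nodes line, with the formula "total number of master-eligible nodes / 2 + 1" in its comment. The test in this article does not enable it. The sketch below works that formula through for the three-node cluster, as a complement to the seed list fix. The helper names are hypothetical, and the election check is a simplification of what a quorum achieves.

```python
def minimum_master_nodes(master_eligible):
    """Quorum from the template comment: master-eligible nodes / 2 + 1."""
    if master_eligible < 1:
        raise ValueError("need at least one master-eligible node")
    return master_eligible // 2 + 1


def can_elect(visible_master_eligible, quorum):
    """A group may elect a master only if it can see a quorum of nodes."""
    return visible_master_eligible >= quorum


# All three nodes in this article are master-eligible (node.master defaults to true).
quorum = minimum_master_nodes(3)
print("discovery.zen.minimum_master_nodes: %d" % quorum)
# discovery.zen.minimum_master_nodes: 2

print(can_elect(1, quorum))  # False: a lone restarted node cannot elect itself
print(can_elect(2, quorum))  # True: the two remaining nodes can still elect
```

With a quorum of 2, the restarted node-master in section 2.2 would see only itself and would not be allowed to become a second master.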