Deep Learning: Semantic Segmentation

The previous chapter introduced the FCN technique. This chapter walks through an implementation of the FCN model in TensorFlow, with code.

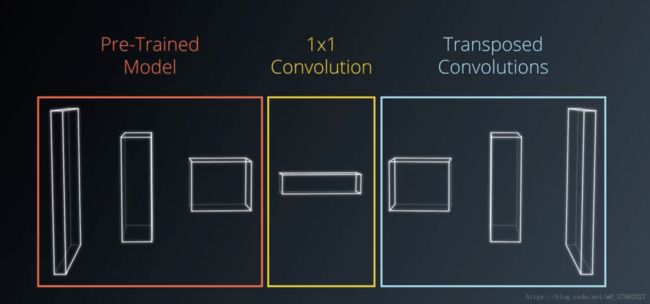

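An FCN model consists of an encoder and a decoder. The encoder is a convolutional neural network of the kind used for image classification, and several well-performing networks already exist for that task, such as AlexNet, VGG16, and GoogLeNet. When building an FCN we can therefore load a pretrained model through the interfaces TensorFlow provides, replace its fully connected layers with 1x1 convolutions, and combine upsampling with skip connections to perform semantic segmentation of the image.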
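To make the 1x1 convolution idea concrete, here is a minimal pure-Python sketch. The function name `conv1x1` and the toy numbers are illustrative assumptions, not part of the original code. A 1x1 convolution applies the same weight matrix to the channel vector at every pixel, so it behaves like a fully connected layer that keeps the spatial layout.

```python
def conv1x1(feature_map, weights, bias):
    """Apply a 1x1 convolution to a feature map stored as nested lists.

    feature_map: H x W x C_in
    weights:     C_in x C_out
    bias:        C_out
    returns:     H x W x C_out
    """
    out = []
    for row in feature_map:
        out_row = []
        for pixel in row:
            # The same dense layer is applied at every pixel:
            # out[k] = sum_c pixel[c] * weights[c][k] + bias[k]
            out_row.append([
                sum(pixel[c] * weights[c][k] for c in range(len(pixel))) + bias[k]
                for k in range(len(bias))
            ])
        out.append(out_row)
    return out


# A 2 x 2 feature map with 3 channels, projected to 2 class scores per pixel.
features = [[[1, 0, 2], [0, 1, 0]],
            [[3, 1, 1], [0, 0, 0]]]
w = [[1, 0], [0, 1], [1, 1]]   # 3 input channels -> 2 output channels
b = [0, 1]

scores = conv1x1(features, w, b)
print(scores)  # [[[3, 3], [0, 2]], [[4, 3], [0, 1]]]
```

The output keeps the 2 x 2 spatial grid, which is what lets the decoder turn per-pixel scores back into an image-sized prediction.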

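Building the model

Loading VGG16

Use tf.saved_model.loader.load to load the VGG16 model, then extract the output of the layer-7 fully connected layer and the outputs of the layer-3 and layer-4 pooling layers.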

    import os

    import tensorflow as tf  # the code in this chapter uses the TensorFlow 1.x API


    def load_vgg(sess, vgg_path):
        """
        Load Pretrained VGG Model into TensorFlow.
        :param sess: TensorFlow Session
        :param vgg_path: Path to vgg folder, containing "variables/" and "saved_model.pb"
        :return: Tuple of Tensors from VGG model (image_input, keep_prob, layer3_out, layer4_out, layer7_out)
        """
        # Use tf.saved_model.loader.load to load the model and weights
        vgg_tag = 'vgg16'
        vgg_input_tensor_name = 'image_input:0'
        vgg_keep_prob_tensor_name = 'keep_prob:0'
        vgg_layer3_out_tensor_name = 'layer3_out:0'
        vgg_layer4_out_tensor_name = 'layer4_out:0'
        vgg_layer7_out_tensor_name = 'layer7_out:0'

        tf.saved_model.loader.load(sess, [vgg_tag], vgg_path)

        # Look up the tensors we need by name in the loaded graph
        graph = tf.get_default_graph()
        image_input = graph.get_tensor_by_name(vgg_input_tensor_name)
        keep_prob = graph.get_tensor_by_name(vgg_keep_prob_tensor_name)
        layer3_out = graph.get_tensor_by_name(vgg_layer3_out_tensor_name)
        layer4_out = graph.get_tensor_by_name(vgg_layer4_out_tensor_name)
        layer7_out = graph.get_tensor_by_name(vgg_layer7_out_tensor_name)

        return image_input, keep_prob, layer3_out, layer4_out, layer7_out

Building the decoder

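- Replace the fully connected layer with a 1x1 convolution to produce layer7_conv;

- Apply a transposed convolution (kernel size 4, stride 2) to layer7_conv to get the higher-resolution layer7_trans; this is the upsampling step;

- Apply a 1x1 convolution to vgg_layer4_out, loaded in the previous step, to get layer4_conv with the same dimensions as layer7_trans;

- Add layer4_conv and layer7_trans; this is the skip connection, and the result is layer4_out;

- Apply a 1x1 convolution to vgg_layer3_out, upsample layer4_out with a transposed convolution (kernel size 4, stride 2), and add the two to get layer3_out;

- Upsample layer3_out with a transposed convolution (kernel size 16, stride 8) to get an output image with the same resolution as the original image.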
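The steps above only line up if the spatial sizes match at each addition. The sketch below checks that arithmetic in plain Python. It assumes that the encoder outputs at layers 3, 4, and 7 are 1/8, 1/16, and 1/32 of the input resolution (the usual VGG16 pooling layout) and that every transposed convolution uses 'SAME' padding, in which case the output size is the input size times the stride. The helper names are hypothetical.

```python
import math


def downsample(size, factor):
    """Spatial size after pooling with 'SAME' padding: ceil(size / factor)."""
    return tuple(math.ceil(s / factor) for s in size)


def upsample(size, stride):
    """Spatial size after a transposed convolution with 'SAME' padding."""
    return tuple(s * stride for s in size)


image_shape = (160, 576)          # input height and width used in run()

layer3 = downsample(image_shape, 8)
layer4 = downsample(image_shape, 16)
layer7 = downsample(image_shape, 32)

layer7_trans = upsample(layer7, 2)
assert layer7_trans == layer4     # first skip connection is shape-compatible

layer4_trans = upsample(layer7_trans, 2)
assert layer4_trans == layer3     # second skip connection is shape-compatible

output = upsample(layer4_trans, 8)
print(layer7, layer4, layer3, output)  # (5, 18) (10, 36) (20, 72) (160, 576)
```

Under these assumptions, an input whose height or width is not a multiple of 32 would produce mismatched sizes at the additions, which is one reason an input shape such as 160 x 576 is convenient.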

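Note that the convolution and transposed-convolution kernels should be regularized, otherwise the model tends to overfit the training images.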
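As an illustration of what a regularization term looks like, the sketch below computes an L2 penalty in plain Python. It assumes the convention `scale * sum(w ** 2) / 2`, the same halved sum of squares that `tf.nn.l2_loss` uses. The function and variable names are made up for the example.

```python
def l2_penalty(weights, scale):
    """L2 penalty: scale * sum(w^2) / 2 over all weights of one kernel."""
    return scale * sum(w * w for w in weights) / 2


def regularized_loss(data_loss, kernels, scale=1e-3):
    """Add one L2 penalty per kernel to the data loss."""
    return data_loss + sum(l2_penalty(k, scale) for k in kernels)


small_kernel = [0.1, -0.2, 0.05]
large_kernel = [3.0, -4.0, 5.0]

# Large weights are penalized more, which pushes training toward smaller weights.
print(regularized_loss(0.7, [small_kernel]))
print(regularized_loss(0.7, [large_kernel]))
```

In TensorFlow 1.x, `kernel_regularizer` only records such penalty terms in a collection; they influence training only once they are added to the loss that the optimizer minimizes.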

    def layers(vgg_layer3_out, vgg_layer4_out, vgg_layer7_out, num_classes):
        """
        Create the layers for a fully convolutional network. Build skip-layers using the vgg layers.
        :param vgg_layer7_out: TF Tensor for VGG Layer 7 output
        :param vgg_layer4_out: TF Tensor for VGG Layer 4 output
        :param vgg_layer3_out: TF Tensor for VGG Layer 3 output
        :param num_classes: Number of classes to classify
        :return: The Tensor for the last layer of output
        """
        # 1x1 convolution in place of the fully connected layer
        layer7_conv = tf.layers.conv2d(vgg_layer7_out, num_classes, 1,
                                       padding='SAME',
                                       kernel_regularizer=tf.contrib.layers.l2_regularizer(1e-3))
        # Upsample by 2 (kernel size 4, stride 2)
        layer7_trans = tf.layers.conv2d_transpose(layer7_conv, num_classes, 4, 2,
                                                  padding='SAME',
                                                  kernel_regularizer=tf.contrib.layers.l2_regularizer(1e-3))
        # First skip connection: 1x1 convolution on layer 4, then add
        layer4_conv = tf.layers.conv2d(vgg_layer4_out, num_classes, 1,
                                       padding='SAME',
                                       kernel_regularizer=tf.contrib.layers.l2_regularizer(1e-3))
        layer4_out = tf.add(layer7_trans, layer4_conv)
        # Upsample by 2 again (kernel size 4, stride 2)
        layer4_trans = tf.layers.conv2d_transpose(layer4_out, num_classes, 4, 2,
                                                  padding='SAME',
                                                  kernel_regularizer=tf.contrib.layers.l2_regularizer(1e-3))
        # Second skip connection: 1x1 convolution on layer 3, then add
        layer3_conv = tf.layers.conv2d(vgg_layer3_out, num_classes, 1,
                                       padding='SAME',
                                       kernel_regularizer=tf.contrib.layers.l2_regularizer(1e-3))
        layer3_out = tf.add(layer3_conv, layer4_trans)
        # Final upsampling by 8 (kernel size 16, stride 8) back to the input resolution
        last_layer = tf.layers.conv2d_transpose(layer3_out, num_classes, 16, 8,
                                                padding='SAME',
                                                kernel_regularizer=tf.contrib.layers.l2_regularizer(1e-3))
        return last_layer

Defining the optimization function

    def optimize(nn_last_layer, correct_label, learning_rate, num_classes):
        """
        Build the TensorFlow loss and optimizer operations.
        :param nn_last_layer: TF Tensor of the last layer in the neural network
        :param correct_label: TF Placeholder for the correct label image
        :param learning_rate: TF Placeholder for the learning rate
        :param num_classes: Number of classes to classify
        :return: Tuple of (logits, train_op, cross_entropy_loss)
        """
        # Flatten to 2D so that each row is one pixel and each column one class
        logits = tf.reshape(nn_last_layer, (-1, num_classes))
        correct_label = tf.reshape(correct_label, (-1, num_classes))
        cross_entropy_loss = tf.reduce_mean(
            tf.nn.softmax_cross_entropy_with_logits(logits=logits, labels=correct_label))
        # kernel_regularizer only collects the L2 terms, so add them to the
        # objective explicitly; otherwise the regularization has no effect
        total_loss = cross_entropy_loss + tf.losses.get_regularization_loss()
        optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)
        train_op = optimizer.minimize(total_loss)
        return logits, train_op, cross_entropy_loss
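To see what the reshape and the loss compute, here is a small plain-Python sketch of softmax cross-entropy averaged over pixels. Each row of `logits` stands for one pixel after the reshape to `(-1, num_classes)`, and each row of `labels` is the matching one-hot label. The numbers and function names are invented for illustration.

```python
import math


def softmax(row):
    """Numerically stable softmax of one row of logits."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def mean_cross_entropy(logits, labels):
    """Mean over pixels of -sum_k label_k * log(softmax(logits)_k)."""
    losses = []
    for logit_row, label_row in zip(logits, labels):
        probs = softmax(logit_row)
        losses.append(-sum(y * math.log(p) for y, p in zip(label_row, probs)))
    return sum(losses) / len(losses)


# Three pixels, two classes (for example: not road, road).
logits = [[2.0, 0.0], [0.0, 2.0], [0.0, 0.0]]
labels = [[1, 0], [0, 1], [1, 0]]

loss = mean_cross_entropy(logits, labels)
print(round(loss, 4))
```

The first two pixels are classified confidently and correctly, so they contribute little; the third pixel has equal scores for both classes and contributes log(2).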

Defining the training function

    def train_nn(sess, epochs, batch_size, get_batches_fn, train_op, cross_entropy_loss, input_image,
                 correct_label, keep_prob, learning_rate):
        """
        Train neural network and print out the loss during training.
        :param sess: TF Session
        :param epochs: Number of epochs
        :param batch_size: Batch size
        :param get_batches_fn: Function to get batches of training data. Call using get_batches_fn(batch_size)
        :param train_op: TF Operation to train the neural network
        :param cross_entropy_loss: TF Tensor for the amount of loss
        :param input_image: TF Placeholder for input images
        :param correct_label: TF Placeholder for label images
        :param keep_prob: TF Placeholder for dropout keep probability
        :param learning_rate: TF Placeholder for learning rate
        """
        sess.run(tf.global_variables_initializer())

        print("Training...")
        print()
        for i in range(epochs):
            print("EPOCH {} ...".format(i + 1))
            for image, label in get_batches_fn(batch_size):
                # One optimization step; dropout keep probability and learning rate are fixed here
                _, loss = sess.run([train_op, cross_entropy_loss],
                                   feed_dict={input_image: image, correct_label: label,
                                              keep_prob: 0.5, learning_rate: 0.0009})
                print("Loss: {:.3f}".format(loss))
            print()
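`train_nn` only requires that `get_batches_fn(batch_size)` yields `(image, label)` batches. In the project this function comes from `helper.gen_batch_function`, which is given the training data folder. The stand-in below is a hypothetical in-memory version that only illustrates the calling convention; it is not the project's implementation.

```python
import random


def make_batch_function(samples, seed=0):
    """Return a get_batches_fn(batch_size) over an in-memory list of (image, label) pairs."""
    rng = random.Random(seed)

    def get_batches_fn(batch_size):
        order = list(range(len(samples)))
        rng.shuffle(order)                      # reshuffle on every call, i.e. every epoch
        for start in range(0, len(order), batch_size):
            chunk = [samples[i] for i in order[start:start + batch_size]]
            images = [image for image, _ in chunk]
            labels = [label for _, label in chunk]
            yield images, labels

    return get_batches_fn


# Ten dummy samples; strings stand in for image and label arrays.
samples = [("image_{}".format(i), "label_{}".format(i)) for i in range(10)]
get_batches_fn = make_batch_function(samples)

batch_sizes = [len(images) for images, _ in get_batches_fn(4)]
print(batch_sizes)  # [4, 4, 2]
```

Each call walks through the whole data set once, so one call corresponds to one epoch, and the last batch may be smaller than `batch_size`.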

Training the model

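Several hyperparameters can be tuned: batch_size, epochs, and learning_rate. batch_size is the number of training samples used in each training step; when the training images are high resolution, memory can hold only part of the training set at a time, so a limited batch_size prevents running out of memory. epochs is the number of passes over the whole training set. If the model is not regularized, the prediction error on unseen data follows a U shape as the number of epochs grows: too few epochs lead to underfitting, and too many lead to overfitting. learning_rate controls how much the weights are increased or decreased at each update. If it is too large, the updates overshoot and the model cannot settle at the optimum; if it is too small, training slows down. Once the model architecture is fixed, the hyperparameters therefore need careful tuning to get the best performance.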
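The effect of the learning rate can be seen on a toy problem. The sketch below runs plain gradient descent on f(w) = w^2, a function chosen purely for illustration; it is not the FCN loss, and the training code in this chapter uses the Adam optimizer rather than plain gradient descent.

```python
def gradient_descent(learning_rate, steps=20, w=1.0):
    """Minimize f(w) = w**2 with plain gradient descent and return the final w."""
    for _ in range(steps):
        grad = 2 * w              # derivative of w**2
        w = w - learning_rate * grad
    return w


too_small = gradient_descent(0.001)   # barely moves toward the minimum at 0
moderate = gradient_descent(0.1)      # converges steadily
too_large = gradient_descent(1.1)     # overshoots further on every step

print(abs(too_small), abs(moderate), abs(too_large))
```

After the same number of steps, the moderate rate ends closest to the minimum, the small rate has hardly moved, and the large rate has moved away from it.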

    def run():
        # helper and tests are modules from the accompanying project code
        num_classes = 2
        image_shape = (160, 576)
        data_dir = 'data'
        runs_dir = './runs'
        tests.test_for_kitti_dataset(data_dir)

        # Download pretrained vgg model
        helper.maybe_download_pretrained_vgg(data_dir)

        with tf.Session() as sess:
            # Path to vgg model
            vgg_path = os.path.join(data_dir, 'vgg')
            # Create function to get batches
            get_batches_fn = helper.gen_batch_function(os.path.join(data_dir, 'data_road/training'), image_shape)

            epochs = 50
            batch_size = 16

            # TF placeholders
            correct_label = tf.placeholder(tf.int32, [None, None, None, num_classes], name='correct_label')
            learning_rate = tf.placeholder(tf.float32, name='learning_rate')

            # Build the network, the loss, and the training operation
            input_image, keep_prob, vgg_layer3_out, vgg_layer4_out, vgg_layer7_out = load_vgg(sess, vgg_path)
            nn_last_layer = layers(vgg_layer3_out, vgg_layer4_out, vgg_layer7_out, num_classes)
            logits, train_op, cross_entropy_loss = optimize(nn_last_layer, correct_label, learning_rate, num_classes)

            train_nn(sess, epochs, batch_size, get_batches_fn, train_op, cross_entropy_loss, input_image,
                     correct_label, keep_prob, learning_rate)

            # Run inference and save the sample outputs under runs_dir
            helper.save_inference_samples(runs_dir, data_dir, sess, image_shape, logits, keep_prob, input_image)

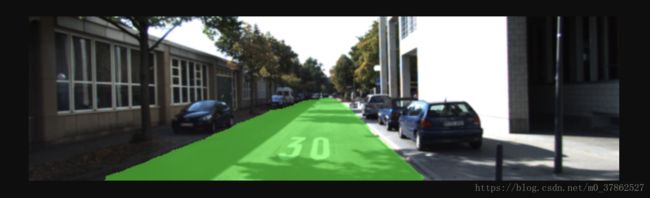

Result of semantic segmentation of a road using FCN

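This chapter walked through implementing an FCN model in TensorFlow. We first loaded a pretrained VGG16 convolutional network, then extracted its fully connected layer and the layer-3 and layer-4 pooling layers, and used 1x1 convolutions, upsampling, and skip connections to produce an output layer with the same resolution as the input. We then defined the optimization function, trained the model, and showed the result of using the FCN to segment a road. The complete code is available here.

The next chapter covers methods for dealing with overfitting and underfitting in trained models.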