Open a browser and go to news.sina.com.cn.

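Here I chose the International News (国际新闻) section, then right-clicked and chose Inspect. Looking through the page's requests, you can see that the news data is stored in the JS file shown in the figure below.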

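By clicking the relevant entries in the figure above, we can easily find the information we need. After analyzing the data layer by layer, we can write the crawler.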
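The layer-by-layer analysis can be sketched with the standard library alone. This is a minimal illustration, not part of the original crawler: `query_params` and `extract_urls` are hypothetical helpers, and `sample` is an invented response body that only mimics the `result` → `data` → `url` nesting that `parser_url` reads. Splitting the API URL with `urllib.parse` also shows that the odd-looking `level==1||=2` parameter parses as the key `level` with the literal value `=1||=2`.

```python
import json
from urllib.parse import parse_qs, urlsplit

# Template copied from get_html in the crawler; {} is the page number.
API_URL = ('http://api.roll.news.sina.com.cn/zt_list?channel=news&cat_1=gjxw'
           '&level==1||=2&show_ext=1&show_all=1&show_num=22&tag=1'
           '&format=json&page={}')


def query_params(page):
    """Hypothetical helper: split the API URL for one page into its query parameters."""
    query = urlsplit(API_URL.format(page)).query
    return parse_qs(query)


def extract_urls(text):
    """Hypothetical helper: walk result -> data -> url; return [] if any layer is missing."""
    if not text:
        return []
    try:
        items = json.loads(text)['result']['data']
    except (ValueError, KeyError, TypeError):
        return []
    return [item['url'] for item in items if 'url' in item]


# Invented response body, shaped like the layers the crawler reads.
sample = json.dumps({'result': {'data': [
    {'url': 'https://example.com/news-1.shtml', 'title': 'A'},
    {'url': 'https://example.com/news-2.shtml', 'title': 'B'},
]}})

print(query_params(2)['page'])   # ['2']
print(query_params(1)['level'])  # ['=1||=2']
print(extract_urls(sample))
print(extract_urls(None))        # []
```

Unlike `parser_url`, `extract_urls` returns an empty list instead of raising when the body is missing or not shaped as expected, which is one way to keep a single bad page from stopping the whole crawl.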

The code is as follows:

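    import json

    import requests
    from bs4 import BeautifulSoup  # the 'lxml' parser used below also needs: pip install lxml
    from pymongo import MongoClient
    from pymongo.errors import PyMongoError

    # Libraries used: json, requests, BeautifulSoup (bs4).
    # Scraped articles are stored in MongoDB through pymongo.

    # International-news (cat_1=gjxw) list API; {} is filled with the page number.
    API_URL = ('http://api.roll.news.sina.com.cn/zt_list?channel=news&cat_1=gjxw'
               '&level==1||=2&show_ext=1&show_all=1&show_num=22&tag=1'
               '&format=json&page={}')

    # One client for the whole run instead of a new connection per article.
    client = MongoClient('mongodb://localhost:27017/')
    db = client.mongodb_news


    def get_html(page):
        """Fetch one page of the news list API; return None on failure."""
        try:
            res = requests.get(API_URL.format(page), timeout=10)
            if res.status_code == 200:
                return res.text
        except requests.RequestException:  # requests does not raise the built-in TimeoutError
            print('Request failed!')
        return None


    def parser_url(html):
        """Collect the article links from the list API's JSON response."""
        urls = []
        jd = json.loads(html)['result']['data']
        for data in jd:
            urls.append(data['url'])
        return urls


    def parse_content(urls):
        """Parse each article page with CSS selectors and save it to MongoDB.

        :param urls: list of article URLs
        :return: list of the parsed article dicts
        """
        results = []
        for url in urls:
            srcs = []
            article = []
            try:
                res = requests.get(url, timeout=10)
            except requests.RequestException:
                print('Request failed:', url)
                continue
            res.encoding = 'utf-8'
            soup = BeautifulSoup(res.text, 'lxml')
            try:
                # [5:] drops the 5-character label in front of the editor's name
                show_author = soup.select('.show_author')[0].text[5:]
                date = soup.select('.date')[0].text
                title = soup.select('.main-title')[0].text
                source = soup.select('.source')[0].text
            except IndexError:
                # Pages with a different layout lack these elements; skip them
                print('Skipping page with an unexpected layout:', url)
                continue
            print(title)
            for p in soup.select('.article p'):
                print(p.text)
                article.append(p.text)
            print(show_author)
            print(source)
            print(date)
            for img in soup.select('.img_wrapper img'):
                srcs.append(img.get('src'))
                print(img.get('src'))
            information = {
                'title': title,
                'article': article,
                'show_author': show_author,
                'source': source,
                'date': date,
                'src': srcs,
            }
            save_mongodb(information)
            results.append(information)
        return results


    def save_mongodb(information):
        """Insert one article document into the mongodb_news.news collection."""
        try:
            # insert_one replaces Collection.insert, which PyMongo 4 removed
            db['news'].insert_one(information)
            print('Inserted into MongoDB successfully!')
        except PyMongoError as exc:
            print('Failed to insert into MongoDB:', exc)


    def start():
        # Crawl list pages 1 to 9
        for page in range(1, 10):
            html = get_html(page)
            if html is None:
                continue  # the request failed; skip this page
            urls = parser_url(html)
            parse_content(urls)


    if __name__ == '__main__':
        start()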

Running it produces output like the following:

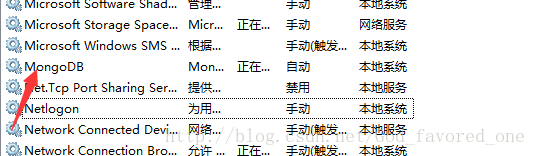

Database connection

Tip: before running the program, make sure the MongoDB server is running and accepting connections.
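The tip above can be turned into a quick pre-flight check. This is a minimal sketch, assuming MongoDB listens on the default `localhost:27017` that the crawler connects to; `mongodb_reachable` is a hypothetical helper that only tests whether the TCP port accepts connections, so it cannot tell whether the listener is really MongoDB. A stricter check with PyMongo is to create `MongoClient(..., serverSelectionTimeoutMS=2000)` and run `client.admin.command('ping')`, which raises if no server can be reached.

```python
import socket


def mongodb_reachable(host='localhost', port=27017, timeout=2.0):
    """Hypothetical helper: return True if something accepts TCP connections at host:port.

    This only proves the port is open; it does not authenticate or
    confirm that the listener is actually MongoDB.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or host not resolvable
        return False


if __name__ == '__main__':
    if mongodb_reachable():
        print('MongoDB port is open, starting the crawler')
    else:
        print('Cannot reach localhost:27017, start mongod first')
```

Calling this at the top of `start()` would stop the crawl early instead of failing on the first insert.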