TF-Slim Study Notes

import tensorflow.contrib.slim as slim

TF-Slim is a library that makes defining, training and evaluating neural networks simple:

1. It lets the user define models much more compactly by eliminating boilerplate code. This is accomplished through argument scoping and numerous high-level layers and variables. These tools increase readability and maintainability, reduce the likelihood of errors from copy-and-pasting hyperparameter values, and simplify hyperparameter tuning.

2. It makes developing models simple by providing commonly used regularizers.

3. Several widely used computer vision models (e.g., VGG, AlexNet) have been developed in Slim and are available to users. These can be used as black boxes or extended in various ways, e.g., by adding "multiple heads" to different internal layers.

4. Slim makes it easy to extend complex models and to warm-start training algorithms by using pieces of pre-existing model checkpoints.

TF-Slim is composed of several independent components:

- arg_scope: provides a new scope named arg_scope that allows a user to define default arguments for specific operations within that scope.

- data: contains TF-slim’s dataset definition, data providers, parallel_reader, and decoding utilities.

- evaluation: contains routines for evaluating models.

- layers: contains high level layers for building models using tensorflow.

- learning: contains routines for training models.

- losses: contains commonly used loss functions.

- metrics: contains popular evaluation metrics.

- nets: contains popular network definitions such as VGG and AlexNet models.

- queues: provides a context manager for easily and safely starting and closing QueueRunners.

- regularizers: contains weight regularizers.

- variables: provides convenience wrappers for variable creation and manipulation.

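Defining Models

Models can be defined succinctly by combining variables, layers and scopes.

Variables: TF-Slim provides a set of thin wrapper functions in variables.py that let callers define variables concisely.

For example, to create a weights variable, initialize it from a truncated normal distribution, regularize it with an L2 loss and place it on the CPU: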

weights = slim.variable('weights',

                        shape=[10, 10, 3, 3],

                        initializer=tf.truncated_normal_initializer(stddev=0.1),

                        regularizer=slim.l2_regularizer(0.05),

                        device='/CPU:0')

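Native TensorFlow has two kinds of variables: regular variables, which once created can be saved to disk with a saver, and local variables, which exist only for the duration of a session and are not saved to disk.

TF-Slim adds model variables, which represent the parameters of a model. Model variables are trained or fine-tuned during learning and are loaded from a checkpoint during evaluation or inference. Non-model variables are all other variables that are used during learning or evaluation but are not needed for inference.

Both model variables and regular variables can be easily created and retrieved via TF-Slim: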

# Model Variables

weights = slim.model_variable('weights',

                              shape=[10, 10, 3, 3],

                              initializer=tf.truncated_normal_initializer(stddev=0.1),

                              regularizer=slim.l2_regularizer(0.05),

                              device='/CPU:0')

model_variables = slim.get_model_variables()

# Regular variables

my_var = slim.variable('my_var',

                       shape=[20, 1],

                       initializer=tf.zeros_initializer())

regular_variables_and_model_variables = slim.get_variables()

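When a model variable is created via slim.model_variable or via TF-Slim's layers, TF-Slim adds it to the tf.GraphKeys.MODEL_VARIABLES collection.

If you have your own custom layers or variable-creation routines but still want TF-Slim to manage the model variables, TF-Slim provides a convenience function for adding them to the collection: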

my_model_variable = CreateViaCustomCode()

# Letting TF-Slim know about the additional variable.

slim.add_model_variable(my_model_variable)

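Layers: implementing the layers of a neural network with TensorFlow's basic operations quickly becomes verbose. To simplify this, TF-Slim provides a number of operations defined at the more abstract level of neural network layers. For example, a convolutional layer is created in Slim with a single call:

input = ...

net = slim.conv2d(input, 128, [3, 3], scope='conv1_1')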

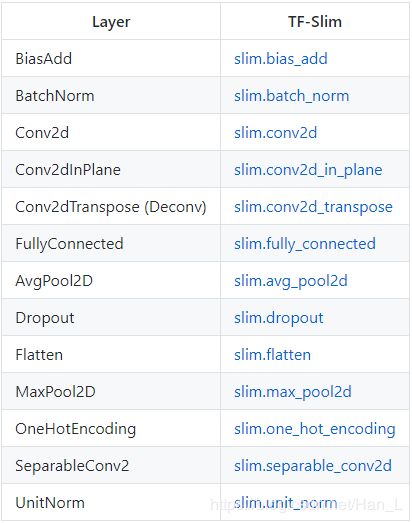

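TF-Slim provides standard implementations for many of the components used to build neural networks, including the slim.conv2d, slim.fully_connected and slim.max_pool2d layers used throughout these notes.

TF-Slim also provides two meta-operations, repeat and stack, that let users apply the same operation repeatedly. Consider the following snippet: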

net = ...

net = slim.conv2d(net, 256, [3, 3], scope='conv3_1')

net = slim.conv2d(net, 256, [3, 3], scope='conv3_2')

net = slim.conv2d(net, 256, [3, 3], scope='conv3_3')

net = slim.max_pool2d(net, [2, 2], scope='pool2')

One way to reduce this duplication is a for loop:

net = ...

for i in range(3):

    net = slim.conv2d(net, 256, [3, 3], scope='conv3_%d' % (i+1))

net = slim.max_pool2d(net, [2, 2], scope='pool2')

This can be made even cleaner with TF-Slim's repeat operation:

net = slim.repeat(net, 3, slim.conv2d, 256, [3, 3], scope='conv3')

net = slim.max_pool2d(net, [2, 2], scope='pool2')

slim.repeat also unrolls the scope for each call: in this example the scopes are named 'conv3/conv3_1', 'conv3/conv3_2' and 'conv3/conv3_3'.
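The scope unrolling can be illustrated without TensorFlow. The sketch below is a pure-Python stand-in, not TF-Slim's implementation: `repeat` and `fake_layer` are hypothetical helpers that only record which scope name each repeated call would receive, following the naming pattern described above.

```python
# Pure-Python stand-in for the scope naming described above (not TF-Slim code).
recorded_scopes = []


def fake_layer(inputs, num_outputs, kernel_size, scope=None):
    """Hypothetical layer: records its scope and passes the input through."""
    recorded_scopes.append(scope)
    return inputs


def repeat(inputs, repetitions, layer, *args, **kwargs):
    """Apply `layer` `repetitions` times, numbering the scope for each call."""
    scope = kwargs.pop('scope')
    outputs = inputs
    for i in range(repetitions):
        call_scope = '%s/%s_%d' % (scope, scope, i + 1)
        outputs = layer(outputs, *args, scope=call_scope, **kwargs)
    return outputs


net = repeat('net', 3, fake_layer, 256, [3, 3], scope='conv3')
print(recorded_scopes)
```

The same positional arguments (256 and [3, 3]) are forwarded to every call; only the scope changes.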

slim.stack lets a caller apply the same operation repeatedly with different arguments. It also creates a new tf.variable_scope for each operation. For example:

# Verbose way:

x = slim.fully_connected(x, 32, scope='fc/fc_1')

x = slim.fully_connected(x, 64, scope='fc/fc_2')

x = slim.fully_connected(x, 128, scope='fc/fc_3')

# Equivalent, TF-Slim way using slim.stack:

x = slim.stack(x, slim.fully_connected, [32, 64, 128], scope='fc')

# Verbose way:

x = slim.conv2d(x, 32, [3, 3], scope='core/core_1')

x = slim.conv2d(x, 32, [1, 1], scope='core/core_2')

x = slim.conv2d(x, 64, [3, 3], scope='core/core_3')

x = slim.conv2d(x, 64, [1, 1], scope='core/core_4')

# Using stack:

x = slim.stack(x, slim.conv2d, [(32, [3, 3]), (32, [1, 1]), (64, [3, 3]), (64, [1, 1])], scope='core')
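To make the unrolling concrete, here is a minimal pure-Python sketch of a stack-style helper. It is an illustration under assumptions, not TF-Slim's code: `stack` and `fake_layer` are hypothetical, and the layer only records the scope and arguments it was called with.

```python
# Pure-Python sketch of how a stack-style helper could unroll its arguments.
calls = []


def fake_layer(inputs, *layer_args, scope=None):
    """Hypothetical layer: records (scope, args) and passes the input through."""
    calls.append((scope, layer_args))
    return inputs


def stack(inputs, layer, stack_args, scope):
    """Call `layer` once per entry of `stack_args`, chaining the outputs."""
    outputs = inputs
    for i, layer_args in enumerate(stack_args, start=1):
        # A bare value such as 32 is treated as a single positional argument.
        if not isinstance(layer_args, (list, tuple)):
            layer_args = (layer_args,)
        outputs = layer(outputs, *layer_args, scope='%s/%s_%d' % (scope, scope, i))
    return outputs


stack('x', fake_layer, [32, 64, 128], scope='fc')
stack('x', fake_layer, [(32, [3, 3]), (32, [1, 1])], scope='core')
for scope_name, layer_args in calls:
    print(scope_name, layer_args)
```

Each entry of the argument list becomes one layer call with its own numbered scope, which is what the verbose and compact versions above have in common.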

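Scopes: in addition to TensorFlow's own scope mechanisms (name_scope and variable_scope), TF-Slim adds a scoping mechanism called arg_scope. It lets a user specify one or more operations together with a set of arguments that will be passed to each of those operations inside the arg_scope.

Consider the following snippet: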

net = slim.conv2d(inputs, 64, [11, 11], 4, padding='SAME',

                  weights_initializer=tf.truncated_normal_initializer(stddev=0.01),

                  weights_regularizer=slim.l2_regularizer(0.0005), scope='conv1')

net = slim.conv2d(net, 128, [11, 11], padding='VALID',

                  weights_initializer=tf.truncated_normal_initializer(stddev=0.01),

                  weights_regularizer=slim.l2_regularizer(0.0005), scope='conv2')

net = slim.conv2d(net, 256, [11, 11], padding='SAME',

                  weights_initializer=tf.truncated_normal_initializer(stddev=0.01),

                  weights_regularizer=slim.l2_regularizer(0.0005), scope='conv3')

One way to simplify this is to hold the default values in variables:

padding = 'SAME'

initializer = tf.truncated_normal_initializer(stddev=0.01)

regularizer = slim.l2_regularizer(0.0005)

net = slim.conv2d(inputs, 64, [11, 11], 4,

                  padding=padding,

                  weights_initializer=initializer,

                  weights_regularizer=regularizer,

                  scope='conv1')

net = slim.conv2d(net, 128, [11, 11],

                  padding='VALID',

                  weights_initializer=initializer,

                  weights_regularizer=regularizer,

                  scope='conv2')

net = slim.conv2d(net, 256, [11, 11],

                  padding=padding,

                  weights_initializer=initializer,

                  weights_regularizer=regularizer,

                  scope='conv3')

Using an arg_scope guarantees that every layer gets the same parameter values and simplifies the code:

with slim.arg_scope([slim.conv2d], padding='SAME',

                    weights_initializer=tf.truncated_normal_initializer(stddev=0.01),

                    weights_regularizer=slim.l2_regularizer(0.0005)):

    net = slim.conv2d(inputs, 64, [11, 11], scope='conv1')

    net = slim.conv2d(net, 128, [11, 11], padding='VALID', scope='conv2')

    net = slim.conv2d(net, 256, [11, 11], scope='conv3')

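This makes the code cleaner and easier to maintain. Values set in an arg_scope are only defaults and can be overridden locally: above, the second convolution overrides the default padding of 'SAME' with 'VALID'.

arg_scopes can also be nested: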

with slim.arg_scope([slim.conv2d, slim.fully_connected],

                    activation_fn=tf.nn.relu,

                    weights_initializer=tf.truncated_normal_initializer(stddev=0.01),

                    weights_regularizer=slim.l2_regularizer(0.0005)):

    with slim.arg_scope([slim.conv2d], stride=1, padding='SAME'):

        # stride is passed by keyword so that it overrides the scoped default stride=1.

        net = slim.conv2d(inputs, 64, [11, 11], stride=4, padding='VALID', scope='conv1')

        net = slim.conv2d(net, 256, [5, 5],

                          weights_initializer=tf.truncated_normal_initializer(stddev=0.03),

                          scope='conv2')

        net = slim.fully_connected(net, 1000, activation_fn=None, scope='fc')

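The first arg_scope applies the same weights_initializer and weights_regularizer arguments to the conv2d and fully_connected layers in its scope. The second arg_scope specifies additional default arguments for conv2d only.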

Training Models

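Training a model requires a model, a loss function, the gradient computation, and a training routine that repeatedly computes the gradients of the loss with respect to the weights and updates the weights accordingly. TF-Slim provides common loss functions and a set of helper functions for running training.

Losses: a loss function defines a quantity to be minimized. The appropriate loss differs between classification and regression problems. TF-Slim's losses module provides an easy-to-use mechanism for defining and keeping track of loss functions.

For example: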

import tensorflow as tf

import tensorflow.contrib.slim.nets as nets

vgg = nets.vgg

# Load the images and labels.

images, labels = ...

# Create the model.

predictions, _ = vgg.vgg_16(images)

# Define the loss functions and get the total loss.

loss = slim.losses.softmax_cross_entropy(predictions, labels)

The same mechanism also works for a multi-task model:

# Load the images and labels.

images, scene_labels, depth_labels = ...

# Create the model.

scene_predictions, depth_predictions = CreateMultiTaskModel(images)

# Define the loss functions and get the total loss.

classification_loss = slim.losses.softmax_cross_entropy(scene_predictions, scene_labels)

sum_of_squares_loss = slim.losses.sum_of_squares(depth_predictions, depth_labels)

# The following two lines have the same effect:

total_loss = classification_loss + sum_of_squares_loss

total_loss = slim.losses.get_total_loss(add_regularization_losses=False)

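In this example the total loss can be obtained either by adding the two losses directly or by calling slim.losses.get_total_loss(). When a loss is created through TF-Slim, it is added to a special TensorFlow collection of loss functions, so the losses can be managed either by TF-Slim or by hand.

A custom loss function can also be added to TF-Slim's loss collection: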

# Load the images and labels.

images, scene_labels, depth_labels, pose_labels = ...

# Create the model.

scene_predictions, depth_predictions, pose_predictions = CreateMultiTaskModel(images)

# Define the loss functions and get the total loss.

classification_loss = slim.losses.softmax_cross_entropy(scene_predictions, scene_labels)

sum_of_squares_loss = slim.losses.sum_of_squares(depth_predictions, depth_labels)

pose_loss = MyCustomLossFunction(pose_predictions, pose_labels)

slim.losses.add_loss(pose_loss) # Letting TF-Slim know about the additional loss.

# The following two ways to compute the total loss are equivalent:

regularization_loss = tf.add_n(slim.losses.get_regularization_losses())

total_loss1 = classification_loss + sum_of_squares_loss + pose_loss + regularization_loss

# (Regularization Loss is included in the total loss by default).

total_loss2 = slim.losses.get_total_loss()
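The equivalence of the two ways of computing the total loss follows from the collection idea. The sketch below is a plain-Python analogy with made-up numbers, not TF-Slim code: `add_loss` and `get_total_loss` are hypothetical helpers operating on ordinary lists of floats instead of tensors in a graph collection.

```python
# Plain-Python analogy for a loss collection (floats stand in for tensors).
_losses = []
_regularization_losses = []


def add_loss(loss):
    """Register a loss in the collection and return it unchanged."""
    _losses.append(loss)
    return loss


def get_total_loss(add_regularization_losses=True):
    """Sum every registered loss, optionally including regularization terms."""
    total = sum(_losses)
    if add_regularization_losses:
        total += sum(_regularization_losses)
    return total


# Illustrative values only.
classification_loss = add_loss(0.5)
sum_of_squares_loss = add_loss(0.25)
pose_loss = add_loss(0.125)            # the custom loss, registered by hand
_regularization_losses.append(0.0625)  # e.g. contributed by a weight regularizer

regularization_loss = sum(_regularization_losses)
total_loss1 = classification_loss + sum_of_squares_loss + pose_loss + regularization_loss
total_loss2 = get_total_loss()
print(total_loss1, total_loss2)
```

Because every loss is registered when it is created, summing the collection gives the same number as adding the individual terms by hand.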

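Training Loop: TF-Slim provides a set of useful tools for training models in learning.py. These include a train function that repeatedly measures the loss, computes gradients and saves the model to disk, as well as several functions for manipulating gradients. Once the model, the loss function and the optimization scheme have been specified, training is run by calling slim.learning.create_train_op and slim.learning.train: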

g = tf.Graph()

# Create the model and specify the losses...

...

total_loss = slim.losses.get_total_loss()

optimizer = tf.train.GradientDescentOptimizer(learning_rate)

# create_train_op ensures that each time we ask for the loss, the update_ops

# are run and the gradients being computed are applied too.

train_op = slim.learning.create_train_op(total_loss, optimizer)

logdir = ... # Where checkpoints are stored.

slim.learning.train(

    train_op,

    logdir,

    number_of_steps=1000,

    save_summaries_secs=300,

    save_interval_secs=600)

In this example, slim.learning.train is given the train_op, which is used to (a) compute the loss and (b) apply the gradient step. logdir specifies the directory where the checkpoints and event files are stored. The number of gradient steps can be limited to any number; here we ask for 1000 steps. Finally, save_summaries_secs=300 means summaries are computed every 5 minutes, and save_interval_secs=600 means a model checkpoint is saved every 10 minutes.
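The division of labor between the train op and the training loop can be sketched on a toy problem. The code below is a pure-Python illustration under stated assumptions, not TF-Slim's implementation: `create_train_op` and `train` are hypothetical stand-ins, the "model" is a single weight `w`, the loss is (w - 3)^2, and the time-based summary and checkpoint saving are left out.

```python
def create_train_op(state, learning_rate):
    """Return a callable that computes the loss and applies one gradient step."""
    def train_op():
        error = state['w'] - 3.0
        loss = error ** 2                      # (a) compute the loss
        gradient = 2.0 * error
        state['w'] -= learning_rate * gradient  # (b) apply the gradient step
        state['step'] += 1
        return loss
    return train_op


def train(train_op, number_of_steps):
    """Run the train op a fixed number of times and return the last loss."""
    loss = None
    for _ in range(number_of_steps):
        loss = train_op()
    return loss


state = {'w': 0.0, 'step': 0}
train_op = create_train_op(state, learning_rate=0.1)
final_loss = train(train_op, number_of_steps=1000)
print(state['step'], state['w'], final_loss)
```

The loop itself knows nothing about the model; everything it needs is wrapped in the train op, which mirrors the description above.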

Another example:

import tensorflow as tf

import tensorflow.contrib.slim.nets as nets

slim = tf.contrib.slim

vgg = nets.vgg

...

train_log_dir = ...

if not tf.gfile.Exists(train_log_dir):

    tf.gfile.MakeDirs(train_log_dir)

with tf.Graph().as_default():

    # Set up the data loading:

    images, labels = ...

    # Define the model (vgg_16 returns the logits and a dict of end points):

    predictions, _ = vgg.vgg_16(images, is_training=True)

    # Specify the loss function:

    slim.losses.softmax_cross_entropy(predictions, labels)

    total_loss = slim.losses.get_total_loss()

    tf.summary.scalar('losses/total_loss', total_loss)

    # Specify the optimization scheme:

    optimizer = tf.train.GradientDescentOptimizer(learning_rate=.001)

    # create_train_op ensures that when we evaluate it to get the loss,

    # the update_ops are run and the gradient updates are applied.

    train_tensor = slim.learning.create_train_op(total_loss, optimizer)

    # Actually runs training.

    slim.learning.train(train_tensor, train_log_dir)

Fine-Tuning Existing Models

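Once a model has been trained, its variables can be restored from a given checkpoint with tf.train.Saver(). In many cases tf.train.Saver() provides a simple mechanism for restoring all or just some of the variables.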

# Create some variables.

v1 = tf.Variable(..., name="v1")

v2 = tf.Variable(..., name="v2")

...

# Add ops to restore all the variables.

restorer = tf.train.Saver()

# Add ops to restore some variables.

restorer = tf.train.Saver([v1, v2])

# Later, launch the model, use the saver to restore variables from disk, and

# do some work with the model.

with tf.Session() as sess:

    # Restore variables from disk.

    restorer.restore(sess, "/tmp/model.ckpt")

    print("Model restored.")

    # Do some work with the model

    ...

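It is often desirable to fine-tune a pre-trained model on a new dataset or a new task. In that case TF-Slim's helper functions can be used to select a subset of variables to restore: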

# Create some variables.

v1 = slim.variable(name="v1", ...)

v2 = slim.variable(name="nested/v2", ...)

...

# Get list of variables to restore (which contains only 'v2'). These are all

# equivalent methods:

variables_to_restore = slim.get_variables_by_name("v2")

# or

variables_to_restore = slim.get_variables_by_suffix("2")

# or

variables_to_restore = slim.get_variables(scope="nested")

# or

variables_to_restore = slim.get_variables_to_restore(include=["nested"])

# or

variables_to_restore = slim.get_variables_to_restore(exclude=["v1"])

# Create the saver which will be used to restore the variables.

restorer = tf.train.Saver(variables_to_restore)

with tf.Session() as sess:

    # Restore variables from disk.

    restorer.restore(sess, "/tmp/model.ckpt")

    print("Model restored.")

    # Do some work with the model

    ...

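When variables are restored from a checkpoint, the Saver locates the variable names in the checkpoint file and maps them to the variables in the current graph. By default, the name of each variable in the checkpoint is taken from its var.op.name. When the variable names in the checkpoint differ from those in the current graph, the Saver must be given a dictionary that maps each name in the checkpoint to the corresponding graph variable: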

# Assuming that 'conv1/weights' should be restored from 'vgg16/conv1/weights'

def name_in_checkpoint(var):

    return 'vgg16/' + var.op.name

# Assuming that 'conv1/weights' and 'conv1/bias' should be restored from 'conv1/params1' and 'conv1/params2'

def name_in_checkpoint(var):

    if "weights" in var.op.name:

        return var.op.name.replace("weights", "params1")

    if "bias" in var.op.name:

        return var.op.name.replace("bias", "params2")

variables_to_restore = slim.get_model_variables()

variables_to_restore = {name_in_checkpoint(var): var for var in variables_to_restore}

restorer = tf.train.Saver(variables_to_restore)

with tf.Session() as sess:

    # Restore variables from disk.

    restorer.restore(sess, "/tmp/model.ckpt")
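The dictionary handed to the Saver is easiest to see with plain strings. The sketch below is only an illustration: the graph variables are replaced by hypothetical string stand-ins ('W1', 'b1'), and `name_in_checkpoint` takes a name rather than a variable so that the example runs without TensorFlow.

```python
def name_in_checkpoint(graph_name):
    """Map a name used in the current graph to the name stored in the checkpoint."""
    return 'vgg16/' + graph_name


# Hypothetical stand-ins: graph variable name -> variable object.
graph_variables = {'conv1/weights': 'W1', 'conv1/biases': 'b1'}

# Keys are names in the checkpoint, values are the variables to fill in.
variables_to_restore = {
    name_in_checkpoint(name): var for name, var in graph_variables.items()
}
print(variables_to_restore)
```

The direction of the mapping matters: the keys are the names as they appear in the checkpoint, and the values are the variables of the current graph.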

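Consider the following case: we have a VGG16 model pre-trained on the ImageNet dataset, which has 1000 classes, and we want to apply it to the Pascal VOC dataset, which has only 20 classes. To do so, we can initialize the new model with the values of the pre-trained model, excluding its final layers: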

# Load the Pascal VOC data

image, label = MyPascalVocDataLoader(...)

images, labels = tf.train.batch([image, label], batch_size=32)

# Create the model

predictions, _ = vgg.vgg_16(images)

train_op = slim.learning.create_train_op(...)

# Specify where the Model, trained on ImageNet, was saved.

model_path = '/path/to/pre_trained_on_imagenet.checkpoint'

# Specify where the new model will live:

log_dir = '/path/to/my_pascal_model_dir/'

# Restore only the convolutional layers:

variables_to_restore = slim.get_variables_to_restore(exclude=['fc6', 'fc7', 'fc8'])

init_fn = slim.assign_from_checkpoint_fn(model_path, variables_to_restore)

# Start training.

slim.learning.train(train_op, log_dir, init_fn=init_fn)
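The effect of the exclude list can be sketched with plain names. This is an illustration under assumptions, not TF-Slim's selection logic: `select_variables_to_restore` is a hypothetical helper, the variable names are invented, and exclusion is assumed to work by matching the start of each name.

```python
def select_variables_to_restore(variable_names, exclude):
    """Keep every name that does not start with one of the excluded prefixes."""
    return [
        name for name in variable_names
        if not any(name.startswith(prefix) for prefix in exclude)
    ]


# Invented variable names for illustration.
all_names = ['conv1/weights', 'conv1/biases', 'fc6/weights', 'fc7/weights', 'fc8/weights']
restored = select_variables_to_restore(all_names, exclude=['fc6', 'fc7', 'fc8'])
print(restored)
```

Only the convolutional variables are taken from the checkpoint; the excluded layers keep their fresh initialization and are learned on the new dataset.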

Evaluating Models

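Once a model has been trained (or even while it is still training), we want to see how well it performs in practice. This is done by picking a set of evaluation metrics that grade the model's performance. The evaluation code loads the data, performs inference, compares the results to the ground truth and records the evaluation scores. This step may be performed once or repeated periodically.

Metrics: a metric is a performance measure that is not a loss function but is still of interest when evaluating the model.

TF-Slim provides a set of metric operations that make evaluating models easy. Computing the value of a metric is divided into three parts:

1. Initialization: initialize the variables used to compute the metric.

2. Aggregation: perform the operations used to accumulate the metric.

3. Finalization: perform any final operations needed to compute the metric value.

For example, to compute mean_absolute_error, two variables (count and total) are first initialized to zero. During aggregation, the absolute differences between predictions and labels are computed and added to total, and count is incremented each time a value is added. Finally, during finalization, total is divided by count to obtain the mean.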
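The three phases map naturally onto a small class. The sketch below is a pure-Python analogy for the mean_absolute_error walk-through above, not TF-Slim's metric code; the class name `StreamingMeanAbsoluteError` and its methods are hypothetical.

```python
class StreamingMeanAbsoluteError:
    """Accumulates the mean absolute error over successive batches."""

    def __init__(self):
        # Initialization: both accumulators start at zero.
        self.total = 0.0
        self.count = 0

    def update(self, predictions, labels):
        # Aggregation: add the absolute differences and count the examples.
        for prediction, label in zip(predictions, labels):
            self.total += abs(prediction - label)
            self.count += 1
        return self.value()

    def value(self):
        # Finalization: divide the running total by the running count.
        return self.total / self.count if self.count else 0.0


mae = StreamingMeanAbsoluteError()
after_first_batch = mae.update([1.0, 2.0], [0.0, 4.0])
after_second_batch = mae.update([3.0], [3.0])
print(after_first_batch, after_second_batch, mae.value())
```

Calling `value()` repeatedly does not change the state, while every `update()` folds one more batch into the running mean.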

The following example shows how to declare metrics:

images, labels = LoadTestData(...)

predictions = MyModel(images)

mae_value_op, mae_update_op = slim.metrics.streaming_mean_absolute_error(predictions, labels)

mre_value_op, mre_update_op = slim.metrics.streaming_mean_relative_error(predictions, labels)

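# mean_relative_errors is assumed to be a tensor of per-example relative errors computed elsewhere.

pl_value_op, pl_update_op = slim.metrics.streaming_percentage_less(mean_relative_errors, 0.3)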

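As the example shows, creating a metric returns two values: a value_op and an update_op. The value_op returns the current value of the metric. The update_op performs the aggregation step described above and also returns the value of the metric.

Keeping track of every value_op and update_op by hand can be laborious, so TF-Slim provides two convenience functions: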

# Aggregates the value and update ops in two lists:

value_ops, update_ops = slim.metrics.aggregate_metrics(

    slim.metrics.streaming_mean_absolute_error(predictions, labels),

    slim.metrics.streaming_mean_squared_error(predictions, labels))

# Aggregates the value and update ops in two dictionaries:

names_to_values, names_to_updates = slim.metrics.aggregate_metric_map({

    "eval/mean_absolute_error": slim.metrics.streaming_mean_absolute_error(predictions, labels),

    "eval/mean_squared_error": slim.metrics.streaming_mean_squared_error(predictions, labels),

})
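What the dictionary variant does can be sketched in a few lines of plain Python. This is an illustration of the idea only, not TF-Slim's implementation: `aggregate_metric_map` below is a hypothetical re-creation, and strings stand in for the value and update ops.

```python
def aggregate_metric_map(names_to_tuples):
    """Split {name: (value_op, update_op)} into two dictionaries keyed by name."""
    names_to_values = {name: pair[0] for name, pair in names_to_tuples.items()}
    names_to_updates = {name: pair[1] for name, pair in names_to_tuples.items()}
    return names_to_values, names_to_updates


# Strings stand in for the ops returned by each metric.
names_to_values, names_to_updates = aggregate_metric_map({
    'eval/mean_absolute_error': ('mae_value_op', 'mae_update_op'),
    'eval/mean_squared_error': ('mse_value_op', 'mse_update_op'),
})
print(names_to_values)
print(names_to_updates)
```

Keeping both dictionaries keyed by the same names makes it easy to run all updates in one call and to label each value afterwards.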

Here is an example of tracking multiple metrics:

import tensorflow as tf

import tensorflow.contrib.slim.nets as nets

slim = tf.contrib.slim

vgg = nets.vgg

# Load the data

images, labels = load_data(...)

# Define the network

predictions, _ = vgg.vgg_16(images)

# Choose the metrics to compute:

names_to_values, names_to_updates = slim.metrics.aggregate_metric_map({

    "eval/mean_absolute_error": slim.metrics.streaming_mean_absolute_error(predictions, labels),

    "eval/mean_squared_error": slim.metrics.streaming_mean_squared_error(predictions, labels),

})

# Evaluate the model using 1000 batches of data:

num_batches = 1000

with tf.Session() as sess:

    sess.run(tf.global_variables_initializer())

    sess.run(tf.local_variables_initializer())

    for batch_id in range(num_batches):

        # list(...) is needed on Python 3, where dict.values() returns a view.

        sess.run(list(names_to_updates.values()))

    metric_values = sess.run(list(names_to_values.values()))

    for metric, value in zip(names_to_values.keys(), metric_values):

        print('Metric %s has value: %f' % (metric, value))

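Evaluation Loop: TF-Slim provides an evaluation module (evaluation.py) with helper functions for evaluating models. These include a function that periodically runs evaluations, evaluates the metrics over batches of data, and prints and summarizes the metric results.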

For example:

import math

import tensorflow as tf

slim = tf.contrib.slim

# Load the data

images, labels = load_data(...)

# Define the network

predictions = MyModel(images)

# Choose the metrics to compute (each streaming metric returns a value op and an update op):

names_to_values, names_to_updates = slim.metrics.aggregate_metric_map({

    'accuracy': slim.metrics.streaming_accuracy(predictions, labels),

    'precision': slim.metrics.streaming_precision(predictions, labels),

    'recall': slim.metrics.streaming_recall(predictions, labels),

})

# Create the summary ops such that they also print out to std output:

summary_ops = []

for metric_name, metric_value in names_to_values.items():

    op = tf.summary.scalar(metric_name, metric_value)

    op = tf.Print(op, [metric_value], metric_name)

    summary_ops.append(op)

num_examples = 10000

batch_size = 32

num_batches = int(math.ceil(num_examples / float(batch_size)))

# Setup the global step.

slim.get_or_create_global_step()

checkpoint_dir = ... # Where the model checkpoints are read from.

log_dir = ... # Where the summaries are stored.

eval_interval_secs = ... # How often to run the evaluation.

slim.evaluation.evaluation_loop(

    'local',

    checkpoint_dir,

    log_dir,

    num_evals=num_batches,

    eval_op=list(names_to_updates.values()),

    summary_op=tf.summary.merge(summary_ops),

    eval_interval_secs=eval_interval_secs)
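The number of evaluation batches in the example is a small piece of arithmetic worth checking by hand. The sketch below reuses the example's num_examples and batch_size; `last_batch_size` is an extra name introduced here for illustration.

```python
import math

num_examples = 10000
batch_size = 32

# Round up so that the final, partially filled batch is also evaluated.
num_batches = int(math.ceil(num_examples / float(batch_size)))

# Examples left over for the last batch after all the full batches.
last_batch_size = num_examples - (num_batches - 1) * batch_size

print(num_batches, last_batch_size)
```

Rounding down instead would silently drop the examples in the final partial batch from the evaluation.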

TF-Slim on GitHub