Deep learning practice: a quick transfer learning implementation of a cat/dog classifier (Keras)

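This is a boring cat/dog classifier!

This is a simple first try at transfer learning!

Plenty of people have already explained the theory, so I won't repeat it. I read these:

(link)

(link)

For the classifier I used the ResNet50 that ships with Keras; its source is here:

(link)

"Quick" here means the code is under 100 lines, which feels great.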

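preprocess_image.py handles the image preprocessing. I used the data from this dataset:

(link)

My computer can't handle that much data, so I just picked 3500 images for training and 500 for testing.

Each image is resized to (224,224,3), so the training set ends up with shape (3500,224,224,3) and the test set with shape (500,224,224,3).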
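To make those shapes concrete, here is a minimal NumPy-only sketch of stacking resized images into one array and holding out the last few samples for testing. The helper name `stack_and_split` and the tiny stand-in data are illustrative assumptions, not part of the original script.

```python
import numpy as np

def stack_and_split(images, labels, n_test):
    """Stack per-image arrays into one batch and hold out the last n_test samples."""
    x = np.stack(images)    # (N, H, W, 3)
    y = np.asarray(labels)  # (N, 2) one-hot labels
    if not 0 < n_test < len(x):
        raise ValueError("n_test must be between 1 and N-1")
    return (x[:-n_test], y[:-n_test]), (x[-n_test:], y[-n_test:])

# Tiny stand-in data: 8 blank "images" instead of 4000 real ones.
images = [np.zeros((224, 224, 3), dtype=np.uint8) for _ in range(8)]
labels = [[1, 0], [0, 1]] * 4
(train_x, train_y), (test_x, test_y) = stack_and_split(images, labels, n_test=2)
print(train_x.shape, test_x.shape)  # (6, 224, 224, 3) (2, 224, 224, 3)
```

With 4000 real images and `n_test=500`, the same call would give the (3500,224,224,3) and (500,224,224,3) arrays described above.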

import cv2  # working with, mainly resizing, images
import numpy as np  # dealing with arrays
import os  # dealing with directories
from random import shuffle  # mixing up our currently ordered data that might lead our network astray in training.
from tqdm import tqdm  # a nice pretty percentage bar for tasks. Thanks to viewer Daniel Bühler for this suggestion

TRAIN_DIR = 'D:/Documents/GitHub/deep-learning-models/dataset/train_wu'
TEST_DIR = 'D:/Documents/GitHub/deep-learning-models/dataset/test'
IMG_SIZE = 224

def label_img(img):
    # file names look like "cat.0.jpg", so the class name is the third field from the end
    word_label = img.split('.')[-3]
    # conversion to one-hot array [cat,dog]
    # [much cat, no dog]
    if word_label == 'cat': return [1,0]
    # [no cat, very doggo]
    elif word_label == 'dog': return [0,1]

def create_train_data():
    training_data = []
    for img in tqdm(os.listdir(TRAIN_DIR)):
        label = label_img(img)
        path = os.path.join(TRAIN_DIR,img)
        img = cv2.imread(path,cv2.IMREAD_COLOR)  # note: OpenCV returns BGR channel order
        img = cv2.resize(img, (IMG_SIZE,IMG_SIZE))
        training_data.append([np.array(img),np.array(label)])
    shuffle(training_data)
    # dtype=object: each entry pairs an image with a label of a different shape
    np.save('train_data.npy', np.array(training_data, dtype=object))
    return training_data

def process_test_data():
    testing_data = []
    for img in tqdm(os.listdir(TEST_DIR)):
        path = os.path.join(TEST_DIR,img)
        img_num = img.split('.')[0]
        img = cv2.imread(path,cv2.IMREAD_COLOR)
        img = cv2.resize(img, (IMG_SIZE,IMG_SIZE))
        testing_data.append([np.array(img), img_num])
    shuffle(testing_data)
    np.save('test_data.npy', np.array(testing_data, dtype=object))
    return testing_data

train_data = create_train_data()
# If you have already created the dataset
# (object arrays need allow_pickle=True on recent NumPy versions):
#train_data = np.load('train_data.npy', allow_pickle=True)
#test_data = process_test_data()
#test_data = np.load('test_data.npy', allow_pickle=True)

# hold out the last 500 shuffled samples as the test set
train = train_data[:-500]
test = train_data[-500:]

X = np.array([i[0] for i in train]).reshape(-1,IMG_SIZE,IMG_SIZE,3)
Y = np.array([i[1] for i in train])

test_x = np.array([i[0] for i in test]).reshape(-1,IMG_SIZE,IMG_SIZE,3)
test_y = np.array([i[1] for i in test])
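One detail of the labelling step: a file whose name does not start with `cat` or `dog` silently gets a `None` label. A small sketch of a stricter variant is below. It assumes file names of the form `cat.0.jpg` (which is what `split('.')[-3]` implies), and the names `CLASSES` and `one_hot_label` are made up for illustration.

```python
CLASSES = ("cat", "dog")  # index 0 = cat, index 1 = dog, matching [cat, dog]

def one_hot_label(filename):
    """Return [1, 0] for cat files and [0, 1] for dog files; fail loudly otherwise."""
    parts = filename.split(".")
    if len(parts) < 3 or parts[-3] not in CLASSES:
        raise ValueError(f"cannot derive a label from {filename!r}")
    return [int(parts[-3] == name) for name in CLASSES]

print(one_hot_label("cat.0.jpg"))    # [1, 0]
print(one_hot_label("dog.123.jpg"))  # [0, 1]
```

Raising early keeps a stray file from turning the label array into a mix of lists and `None`, which is harder to debug once training starts.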

CAT.py

# -*- coding: utf-8 -*-
"""
Created on Fri Dec 1 16:22:52 2017

@author: Administrator
"""

from keras.applications.resnet50 import ResNet50
from keras.preprocessing import image
from keras.models import Model
from keras.layers import Dense, GlobalAveragePooling2D
from keras import backend as k
from matplotlib.pyplot import imshow
from keras.applications.imagenet_utils import preprocess_input
import imageio
from IPython.display import SVG
from keras.utils import plot_model
from keras.utils.vis_utils import model_to_dot
from keras.models import load_model
import numpy as np

# X, Y, test_x and test_y are the arrays built by preprocess_image.py
# (assumed to be available in the same session)

# parameters have been trained with the 'imagenet' dataset (1000 classes)
base_model = ResNet50(weights='imagenet',include_top=False)
x = base_model.output

# add top layers which we will learn from our target dataset
x = GlobalAveragePooling2D()(x)
x = Dense(1024, activation='relu')(x)
predictions = Dense(2, activation='softmax')(x)

# new model!
model = Model(inputs=base_model.input, outputs=predictions)

# lock the base model, so we only learn the parameters of the top layers
for layer in base_model.layers:
    layer.trainable = False

model.compile(optimizer='rmsprop', loss='categorical_crossentropy',metrics=['accuracy'])

# step 1: train on the training set (3500 samples; 500 of the 4000 images are held out)
model.fit(X,Y,epochs=3,batch_size=64)

# step 2: evaluate on the held-out test set
preds = model.evaluate(test_x, test_y)
print("Loss = " + str(preds[0]))
print("Test Accuracy = " + str(preds[1]))

# uncomment on the first run to save the trained model
#model.save('catdog_4000.h5')
# reload the previously saved model
model = load_model('catdog_4000.h5')

# step 3: test with my own image
img_path = 'cat2.jpg'
img = image.load_img(img_path, target_size=(224, 224))
x = image.img_to_array(img)
x = np.expand_dims(x, axis=0)
# note: preprocess_input is applied here but not to the training arrays above
x = preprocess_input(x)

my_image = imageio.imread(img_path)
imshow(my_image)

result = model.predict(x)
# output order is [cat, dog], matching the one-hot labels
if result[0][0]>result[0][1]:
    print("this is a cat!")
else:
    print("this is a dog!")

#model.summary()
#plot_model(model, to_file='model.png')
#SVG(model_to_dot(model).create(prog='dot', format='svg'))
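One thing worth checking in the code above: the training arrays come straight from `cv2.imread` (BGR, values 0 to 255), while the single test image goes through `preprocess_input`. As far as I know, the default mode of that function flips RGB to BGR and subtracts the ImageNet channel means, so the two paths may not see the same kind of input. The NumPy sketch below mirrors that zero-centering for images that are already BGR. The function names are assumptions for illustration, and this is not what the original training run did.

```python
import numpy as np

# ImageNet per-channel means in BGR order, as used by Keras' "caffe"-style preprocessing
IMAGENET_MEAN_BGR = np.array([103.939, 116.779, 123.68], dtype=np.float32)

def preprocess_bgr_batch(batch_bgr):
    """Zero-center a (N, H, W, 3) BGR batch by subtracting the per-channel means."""
    return batch_bgr.astype(np.float32) - IMAGENET_MEAN_BGR

def rgb_to_bgr(batch_rgb):
    """Reverse the channel axis, for images that were loaded as RGB."""
    return batch_rgb[..., ::-1]

demo = np.full((1, 2, 2, 3), 128, dtype=np.uint8)  # stand-in for real images
out = preprocess_bgr_batch(demo)
print(out.shape, out.dtype)  # (1, 2, 2, 3) float32
```

Applying the same transform to `X`, `test_x` and any single image before `fit`, `evaluate` and `predict` would keep training and inference consistent; the accuracy figures quoted below come from the code as originally written.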

I only trained for 3 epochs (it is too slow), and the training accuracy was around 96%. Let's look at the accuracy on the test set:

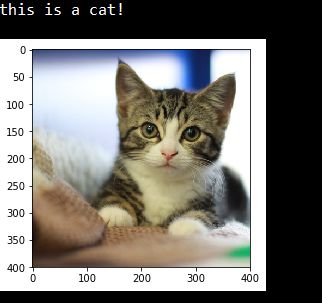

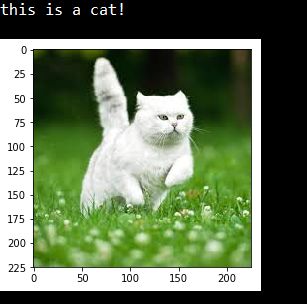

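It works really well. Then I tested it with my own image:

Postscript: if you have more data, you can unfreeze and train a few more layers, i.e. do fine-tuning (I think that understanding is right). Reference: (link)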
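As a rough sketch of what "training a few more layers" means in code: freeze everything, then re-enable only the last few layers. The helper `unfreeze_last` is a hypothetical name, and the stand-in objects below replace real Keras layers so the snippet runs on its own.

```python
from types import SimpleNamespace

def unfreeze_last(layers, n):
    """Freeze every layer, then mark only the last n as trainable."""
    if n < 0:
        raise ValueError("n must be non-negative")
    for layer in layers:
        layer.trainable = False
    for layer in layers[max(len(layers) - n, 0):]:
        layer.trainable = True
    return [layer.trainable for layer in layers]

# Stand-in objects so the sketch runs without Keras; with Keras, pass model.layers
# and call model.compile(...) again afterwards so the change takes effect.
fake_layers = [SimpleNamespace(name=f"layer{i}", trainable=True) for i in range(5)]
print(unfreeze_last(fake_layers, 2))  # [False, False, False, True, True]
```

A common recommendation is to fine-tune with a small learning rate, after the new top layers have already been trained, so the pretrained weights are not disturbed too much.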

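Comments and corrections are welcome!

I have uploaded the images and my trained model to CSDN; download them if you like:

Images: (link)

Trained classifier, ready to load and use: (link)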