Web Crawler Practice

#Spider: spider

    import scrapy

    from kgc_demo.items import KeItem


    class KgcKe(scrapy.Spider):
        name = 'ke'
        allowed_domains = ['kgc.cn']
        start_urls = ['http://www.kgc.cn/list/230-1-6-9-9-0.shtml']

        def parse(self, response):
            # Each course on the listing page is an <li class="course_detail">.
            for i in response.css("li.course_detail"):
                name = i.css('a.course-title-a::text').extract_first()
                print(name)
                price = i.css('span.view0-price::text').extract_first()
                print(price)
                count = i.css('span.course-pepo::text').extract_first()
                print(count)

                # Pack the three fields into an item and hand it to the pipeline.
                item = KeItem()
                item["name"] = name
                item["price"] = price
                item["count"] = count
                yield item

            # Follow the "next page" link, if there is one, and parse it the same way.
            next_page = response.css("li.next a::attr('href')").extract_first()
            if next_page is not None:
                yield response.follow(next_page, self.parse)
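The spider passes the raw `href` straight to `response.follow`, which works because relative links are resolved against the URL of the current page. A minimal sketch of that resolution using only the standard library; the relative link below is a made-up example, not one taken from the site:

```python
from urllib.parse import urljoin

# The page currently being parsed (the spider's start URL).
current_page = 'http://www.kgc.cn/list/230-1-6-9-9-0.shtml'

# Hypothetical href as it might appear in the "next" link.
next_href = '/list/230-2-6-9-9-0.shtml'

# Relative links are joined onto the current page URL to get an absolute one.
next_url = urljoin(current_page, next_href)
print(next_url)
```

With `response.urljoin` plus `scrapy.Request` the same step would be written out by hand; `response.follow` just saves that line.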

#Pipeline: pipelines

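    import pymysql


    class DBPipeline(object):
        def open_spider(self, spider):
            # Called when the spider starts: open the database connection.
            self.db = pymysql.connect(host='localhost', port=3306, user='root',
                                      passwd='123456', db='kgc', charset='utf8')

        def close_spider(self, spider):
            # Called when the spider stops: close the database connection.
            print('Closing the database')
            self.db.close()

        def process_item(self, item, spider):
            # Called for every item the spider yields: write it to the tb_kegc table.
            try:
                cursor = self.db.cursor()
                price = None
                if item['price'] is not None:
                    # Drop the leading currency symbol before converting.
                    price = float(item['price'][1:])
                    print(price)
                else:
                    price = 0
                cursor.execute(
                    "insert into tb_kegc(name,price,t_count) values (%s,%s,%s)",
                    (item["name"], price, int(item["count"])))
                self.db.commit()
                print('Inserted one row!')
            except Exception as e:
                # Undo the failed insert and show what went wrong.
                self.db.rollback()
                print('error......', e)
            # Return the item so any later pipeline still receives it.
            return item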
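The conversion in `process_item` relies on the price text always starting with exactly one currency character and on the count being a plain number; anything else lands in the `except` branch and the row is lost. A more forgiving version could pull the number out with a regular expression. This is only a sketch: `parse_price` and `parse_count` are hypothetical helper names, and the sample strings are assumed formats, not values captured from the site.

```python
import re


def parse_price(raw):
    """Return the first number in the text as a float, or 0.0 if there is none."""
    if raw is None:
        return 0.0
    match = re.search(r"\d+(?:\.\d+)?", raw)
    return float(match.group()) if match else 0.0


def parse_count(raw):
    """Return the first integer in the text, or 0 if there is none."""
    if raw is None:
        return 0
    match = re.search(r"\d+", raw)
    return int(match.group()) if match else 0


# Assumed sample inputs, for illustration only.
print(parse_price("￥99.00"))
print(parse_price(None))
print(parse_count(" 1234 "))
```

Inside the pipeline, `price = parse_price(item['price'])` and `parse_count(item['count'])` would then replace the manual slicing and the bare `int(...)` call.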

#Data storage: items

    import scrapy


    class KgcDemoItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        pass


    class FirstDemoItem(scrapy.Item):
        name = scrapy.Field()
        job = scrapy.Field()


    class KeItem(scrapy.Item):
        name = scrapy.Field()
        price = scrapy.Field()
        count = scrapy.Field()

#start: launch the spider

    import scrapy.cmdline as cmdline

    if __name__ == '__main__':
        # spiderName = input('Enter the spider name: ')
        # cmdline.execute("scrapy crawl {spiderName}".format(spiderName=spiderName).split())
        cmdline.execute('scrapy crawl ke'.split())

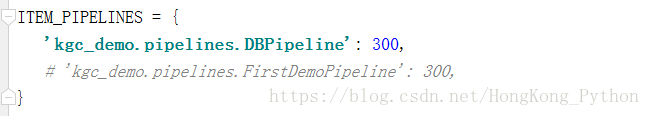

settings: configuration file
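For `DBPipeline` to receive items at all, it has to be enabled in the project's settings.py through `ITEM_PIPELINES`. A minimal sketch, assuming the pipeline class lives in `kgc_demo/pipelines.py` (the package name is taken from the `kgc_demo.items` import; the module path itself is an assumption) and using 300 as an arbitrary priority:

```python
# settings.py (fragment)

# Map each pipeline class path to a priority in the 0-1000 range;
# pipelines with lower numbers run first.
# 'kgc_demo.pipelines.DBPipeline' is an assumed path, adjust to the real module.
ITEM_PIPELINES = {
    'kgc_demo.pipelines.DBPipeline': 300,
}
```

Without this entry the spider still crawls and prints, but nothing reaches the database.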