Getting Started with TensorFlow (2): Forward Propagation

Environment: Ubuntu 16.04, Python 3, TensorFlow-GPU v1.9.0, CUDA 9.0

1. Steps for implementing a neural network

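- Prepare the dataset, extract features, and feed them to the neural network (NN) as input.

- Build the NN structure from input to output (first build the computation graph, then execute it in a session). That is, use the NN forward propagation algorithm to compute the output.

- Feed large amounts of feature data to the NN and iteratively optimize the parameters. That is, use the NN backpropagation algorithm to optimize the parameters.

- Use the trained model for prediction, classification, and similar tasks.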

2. Parameters

The parameters of a neural network are the weights w on the connections between neurons, such as w1 and w2 in the figure below:

The usual practice is to initialize the parameters randomly:

w = tf.Variable(tf.random_normal([2, 3], stddev=2, mean=0, seed=1))

where:

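- tf.random_normal(): draws values from a normal distribution. Alternatives include tf.truncated_normal(), a normal distribution with outliers removed (any value more than two standard deviations from the mean is redrawn), and tf.random_uniform(), a uniform distribution

- [2, 3]: generates a matrix with 2 rows and 3 columns

- stddev: the standard deviation

- mean: the mean

- seed: the random seed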
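The rejection rule behind tf.truncated_normal() (redraw any value more than two standard deviations from the mean) can be sketched in plain Python. This is a minimal illustration assuming nested lists stand in for tensors; truncated_normal here is a hypothetical helper, not TensorFlow's implementation, and it will not reproduce TensorFlow's random values:

```python
import random

def truncated_normal(shape, mean=0.0, stddev=1.0, seed=None):
    """Hypothetical sketch: build a rows x cols matrix of normal samples,
    redrawing any sample more than two standard deviations from the mean."""
    rng = random.Random(seed)  # a seeded generator makes the result repeatable
    rows, cols = shape
    result = []
    for _ in range(rows):
        row = []
        for _ in range(cols):
            value = rng.gauss(mean, stddev)
            # Reject and redraw values outside [mean - 2*stddev, mean + 2*stddev]
            while abs(value - mean) > 2 * stddev:
                value = rng.gauss(mean, stddev)
            row.append(value)
        result.append(row)
    return result

w = truncated_normal([2, 3], mean=0, stddev=2, seed=1)
print(len(w), len(w[0]))  # 2 3
```

With stddev=2 and mean=0, every entry of w lies in [-4, 4], whereas plain normal sampling would occasionally produce larger magnitudes.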

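In practice the last three arguments (stddev, mean, seed) can usually be omitted; they default to stddev=1.0, mean=0.0 and no fixed seed. There are also generators for specific values:

- tf.zeros(): creates an array of all zeros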

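- tf.ones(): creates an array of all ones

- tf.fill(): creates an array filled with a given value

- tf.constant(): creates a tensor from explicitly given values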

Examples:

tf.zeros([3, 2], tf.int32)  # creates [[0, 0], [0, 0], [0, 0]]
tf.ones([3, 2], tf.int32)   # creates [[1, 1], [1, 1], [1, 1]]
tf.fill([3, 2], 4)          # creates [[4, 4], [4, 4], [4, 4]]
tf.constant([1, 2, 3])      # creates [1, 2, 3]
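For 2-D shapes, the outputs of tf.zeros(), tf.ones() and tf.fill() in these examples can be mimicked with nested Python lists, which makes the [rows, columns] order of the shape argument explicit. zeros, ones and fill below are hypothetical helpers for illustration only, not TensorFlow functions:

```python
def fill(shape, value):
    """Hypothetical nested-list analogue of tf.fill for a 2-D [rows, cols] shape."""
    rows, cols = shape
    # A fresh list per row, so rows never share the same list object
    return [[value] * cols for _ in range(rows)]

def zeros(shape):
    return fill(shape, 0)

def ones(shape):
    return fill(shape, 1)

print(zeros([3, 2]))    # [[0, 0], [0, 0], [0, 0]]
print(ones([3, 2]))     # [[1, 1], [1, 1], [1, 1]]
print(fill([3, 2], 4))  # [[4, 4], [4, 4], [4, 4]]
```

Building each row inside the comprehension avoids the aliasing trap of [[value] * cols] * rows, where every row would be the same list and changing one entry would change a whole column.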

3. Forward propagation

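Forward propagation is the process of building the model and performing inference. The following example builds a fully connected network.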

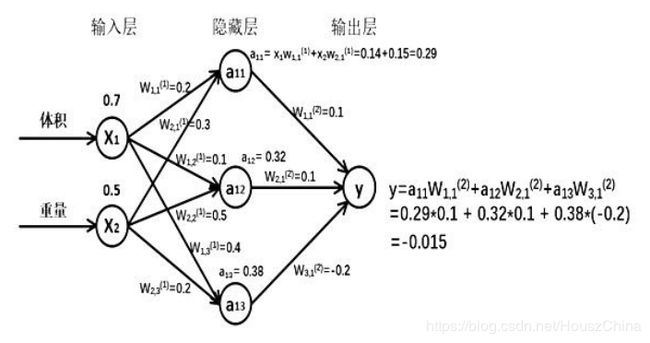

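Suppose we manufacture a batch of parts and feed each part's volume $X_1$ and weight $X_2$ into the NN as features; the NN then outputs a single value. The figure uses $X_1 = 0.7$, $X_2 = 0.5$ as an example: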

In this network, the hidden-layer node $a_{11} = X_1 w_{11} + X_2 w_{21} = 0.29$. $a_{12}$ and $a_{13}$ are computed in the same way, and from them $y$ is obtained, which completes forward propagation.
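Written out term by term with the layer-indexed weights $w_{i,j}^{(l)}$ used in the TensorFlow description (so $w_{11}$ and $w_{21}$ here are $w_{1,1}^{(1)}$ and $w_{2,1}^{(1)}$), the whole forward pass is:

$$
\begin{aligned}
a_{11} &= X_1 w_{1,1}^{(1)} + X_2 w_{2,1}^{(1)} \\
a_{12} &= X_1 w_{1,2}^{(1)} + X_2 w_{2,2}^{(1)} \\
a_{13} &= X_1 w_{1,3}^{(1)} + X_2 w_{2,3}^{(1)} \\
y &= a_{11} w_{1,1}^{(2)} + a_{12} w_{2,1}^{(2)} + a_{13} w_{3,1}^{(2)}
\end{aligned}
$$

Each line is one entry of a matrix product: the first three are the entries of $XW^{(1)}$, and the last is $a_1 W^{(2)}$.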

Described in TensorFlow terms:

Layer 1:

- $X$ is a 1×2 matrix representing one set of input features, made up of two components: volume and weight;

- $W^{(1)}$ is the parameter matrix to be optimized. The 2×3 matrix $W^{(1)}=\begin{bmatrix} w_{1,1}^{(1)} & w_{1,2}^{(1)} & w_{1,3}^{(1)} \\ w_{2,1}^{(1)} & w_{2,2}^{(1)} & w_{2,3}^{(1)} \end{bmatrix}$ indicates that there are two nodes before $W^{(1)}$ and three nodes after it;

- Layers in a neural network are counted as computation layers, so the input $x$ in the figure does not count as a layer. The output of layer 1, $a_1$, can be written as $a_1 = \begin{bmatrix} a_{11} & a_{12} & a_{13} \end{bmatrix} = XW^{(1)}$

Layer 2:

- $W^{(2)}$ has three nodes before it and one node after it, so it is a 3×1 matrix: $W^{(2)} = \begin{bmatrix} w_{1,1}^{(2)} \\ w_{2,1}^{(2)} \\ w_{3,1}^{(2)} \end{bmatrix}$

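Multiply each layer's input by the weights $w$ on its connections; chaining the matrix products gives $y = a_1 W^{(2)} = XW^{(1)}W^{(2)}$: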

a = tf.matmul(X, W1)

y = tf.matmul(a, W2)
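Because forward propagation here is just two matrix products, the arithmetic can be checked without TensorFlow. The sketch below uses plain Python lists and a hand-written matmul (a hypothetical helper, not tf.matmul), with W1 and W2 set to the w1 and w2 values printed at the end of this post:

```python
def matmul(a, b):
    """Multiply matrices stored as nested lists: entry (i, j) is row i of a dotted with column j of b."""
    assert len(a[0]) == len(b), "inner dimensions must match"
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

# Weights copied from the w1/w2 printout at the end of this post (seed=1)
W1 = [[-0.8113182, 1.4845988, 0.06532937],
      [-2.4427042, 0.0992484, 0.5912243]]
W2 = [[-0.8113182], [1.4845988], [0.06532937]]

X = [[0.7, 0.5]]   # 1x2: one part's volume and weight
a = matmul(X, W1)  # 1x3: hidden layer a11, a12, a13
y = matmul(a, W2)  # 1x1: network output
print(y)           # close to [[3.0904665]]
```

The result agrees with the [[3.0904665]] that TensorFlow prints for the same input, up to float32 rounding; the shape check in matmul mirrors why $W^{(1)}$ must be 2×3 and $W^{(2)}$ 3×1.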

When writing the program, note the following:

- Both variable initialization and the evaluation of graph nodes must be run inside a session (a with block):

with tf.Session() as sess:
    sess.run()

- Variable initialization: create the initializer op with tf.global_variables_initializer() and run it with sess.run():

init_op = tf.global_variables_initializer()
sess.run(init_op)

- Evaluating graph nodes: pass the node to be computed to sess.run():

sess.run(y)

- Use tf.placeholder() to reserve a slot for the input, and feed data through feed_dict in sess.run():

# Feed one example
x = tf.placeholder(tf.float32, shape=(1, 2))  # 1 example with 2 features (components)
sess.run(y, feed_dict={x: [[0.7, 0.5]]})

# Feed multiple examples
x = tf.placeholder(tf.float32, shape=(None, 2))  # None: any number of examples, each with 2 features (components)
sess.run(y, feed_dict={x: [[0.1, 0.2], [0.2, 0.3], [0.3, 0.4]]})
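The placeholder's shape argument decides which feeds are accepted: with shape=(1, 2), feeding three rows is rejected by TensorFlow with a shape-mismatch error, while None in the first dimension accepts any number of rows. The rule can be sketched in plain Python; check_feed_shape is a hypothetical helper for illustration, not a TensorFlow API, and it only handles 2-D data:

```python
def check_feed_shape(placeholder_shape, data):
    """Hypothetical helper: return True if 2-D nested-list data fits placeholder_shape.
    None in placeholder_shape means "any size" along that dimension."""
    actual_shape = (len(data), len(data[0]))
    for expected, actual in zip(placeholder_shape, actual_shape):
        if expected is not None and expected != actual:
            return False
    return True

batch = [[0.1, 0.2], [0.2, 0.3], [0.3, 0.4]]
print(check_feed_shape((1, 2), batch))     # False: 3 rows, but the shape fixes 1
print(check_feed_shape((None, 2), batch))  # True: None accepts any number of rows
```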

Forward propagation examples

- Forward propagation code for a simple two-layer fully connected neural network:

#coding:utf-8
import tensorflow as tf

# Define the input and parameters
x = tf.constant([[0.7, 0.5]])
w1 = tf.Variable(tf.random_normal([2, 3], stddev=1, seed=1))
w2 = tf.Variable(tf.random_normal([3, 1], stddev=1, seed=1))

# Define the forward propagation process
a = tf.matmul(x, w1)
y = tf.matmul(a, w2)

# Compute the result in a session
with tf.Session() as sess:
    init_op = tf.global_variables_initializer()
    sess.run(init_op)
    print(sess.run(y))

The result is [[3.0904665]]

- Feeding one example through a placeholder

#coding:utf-8
import tensorflow as tf

# Clear any previously built graph (useful when re-running in the same interpreter)
tf.reset_default_graph()

# Define the input and parameters
x = tf.placeholder(tf.float32, shape=(1, 2))  # define the input with a placeholder
w1 = tf.Variable(tf.random_normal([2, 3], stddev=1, seed=1))
w2 = tf.Variable(tf.random_normal([3, 1], stddev=1, seed=1))

# Define the forward propagation process
a = tf.matmul(x, w1)
y = tf.matmul(a, w2)

# Compute the result in a session
with tf.Session() as sess:
    init_op = tf.global_variables_initializer()
    sess.run(init_op)
    print(sess.run(y, feed_dict={x: [[0.7, 0.5]]}))  # feed one example x

The result is [[3.0904665]]

- Feeding multiple examples through a placeholder

#coding:utf-8
import tensorflow as tf

# Define the input and parameters
x = tf.placeholder(tf.float32, shape=(None, 2))  # None: accept any number of examples
w1 = tf.Variable(tf.random_normal([2, 3], stddev=1, seed=1))
w2 = tf.Variable(tf.random_normal([3, 1], stddev=1, seed=1))

# Define the forward propagation process
a = tf.matmul(x, w1)
y = tf.matmul(a, w2)

# Compute the result in a session
with tf.Session() as sess:
    init_op = tf.global_variables_initializer()
    sess.run(init_op)
    print("y:\n", sess.run(y, feed_dict={x: [[0.7, 0.5], [0.2, 0.3], [0.3, 0.4], [0.4, 0.5], [0.5, 0.6]]}))  # feed multiple examples x
    print("w1:\n", sess.run(w1))
    print("w2:\n", sess.run(w2))

The result is:

y:
 [[3.0904665]
 [1.2236414]
 [1.7270732]
 [2.2305048]
 [2.7339368]]
w1:
 [[-0.8113182 1.4845988 0.06532937]
 [-2.4427042 0.0992484 0.5912243 ]]
w2:
 [[-0.8113182 ]
 [ 1.4845988 ]
 [ 0.06532937]]
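In this output, w2 repeats the first three values of w1 (-0.8113182, 1.4845988, 0.06532937). Both Variables were created with seed=1 and no graph-level seed is set, so in this run the two ops appear to have drawn from the same random sequence; giving them different seeds would avoid this. The underlying idea, that a fixed seed replays the same sequence from the start, can be sketched with Python's standard random module (an analogy only; it does not reproduce TensorFlow's numbers, and normal_values is a hypothetical helper):

```python
import random

def normal_values(n, stddev=1.0, seed=None):
    """Hypothetical helper: draw n normal samples from a generator seeded with `seed`."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, stddev) for _ in range(n)]

w1_flat = normal_values(6, seed=1)  # like a 2x3 matrix, flattened row by row
w2_flat = normal_values(3, seed=1)  # like a 3x1 matrix
print(w2_flat == w1_flat[:3])       # True: same seed, same starting sequence
```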