AutoEncoder Study Notes

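Table of Contents

- I. Autoencoder demo: exercise 1
  - 1. The autoencoder function
  - 2. Data processing: resizing 28×28 to 32×32
  - 3. Building the computation graph
  - 4. Loading data and training
- II. Autoencoder: exercise 2
  - Building the network: separate encoder and decoder models
  - Data preparation
  - Training
  - Applying the model
  - Does the split decoder give the same predictions on new data as the autoencoder trained as a whole?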

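Understanding autoencoders

- Training: forward propagation plus backpropagation of the error.
- Activation functions: ReLU in the hidden layers, sigmoid in the output layer.
- Objective function: the reconstruction error between the input X and its reconstruction X’.
- Algorithm: solved with gradient descent.
- Difficulty during training: vanishing gradients. One mitigation is pretraining, proposed by Hinton et al.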

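- Main uses: data compression (results on images are poor) and data denoising. Specific applications:
  - Anomaly detection
  - Denoising (images, audio)
  - Image restoration
  - Information retrieval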

- Common variants:
  - Denoising AutoEncoder
  - Sparse AutoEncoder
  - Contractive AutoEncoder
  - Variational AutoEncoder

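- Timeline: 1986, 1989, 1994
- Characteristics:
  - Data-specific: an autoencoder only compresses well the kind of data it was trained on.
  - Lossy: the reconstruction is degraded relative to the original input.
  - Learned automatically: the encoding is learned from data rather than designed by hand.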

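- Model structure:
  - encoder: X → F
  - decoder: F → X’
  - objective: argmin L2(X - X’), that is, minimize the L2 distance between the input X and its reconstruction X’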
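The encoder, decoder, and L2 objective listed above can be sketched in a few lines of NumPy. This is only an illustrative forward pass under assumed sizes: the helper names (`encode`, `decode`, `l2_loss`), the layer widths, and the random weights are hypothetical and are not taken from the exercises below. The activations follow the notes (ReLU in the hidden layer, sigmoid at the output).

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def encode(x, w_enc, b_enc):
    """encoder: X -> F"""
    return relu(x @ w_enc + b_enc)

def decode(f, w_dec, b_dec):
    """decoder: F -> X'"""
    return sigmoid(f @ w_dec + b_dec)

def l2_loss(x, x_rec):
    """Mean squared reconstruction error between X and X'."""
    return float(np.mean((x - x_rec) ** 2))

rng = np.random.default_rng(0)
n_features, n_code = 8, 3            # hypothetical sizes, for illustration only
x = rng.random((5, n_features))      # 5 fake samples with values in [0, 1)
w_enc = rng.normal(scale=0.1, size=(n_features, n_code))
b_enc = np.zeros(n_code)
w_dec = rng.normal(scale=0.1, size=(n_code, n_features))
b_dec = np.zeros(n_features)

f = encode(x, w_enc, b_enc)          # compressed representation F
x_rec = decode(f, w_dec, b_dec)      # reconstruction X'
print(f.shape, x_rec.shape, l2_loss(x, x_rec))
```

Training would adjust the weights to reduce `l2_loss`; the sketch stops at the forward pass.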

I. Autoencoder demo: exercise 1

http://machinelearninguru.com/deep_learning/tensorflow/neural_networks/autoencoder/autoencoder.html

# To support both python 2 and python 3

from __future__ import division, print_function, unicode_literals

# Common imports

import numpy as np

import os

import sys

# To make this notebook's output stable across runs
import tensorflow as tf  # needed by reset_graph; the TF 1.x API is used throughout

def reset_graph(seed=42):
    tf.reset_default_graph()
    tf.set_random_seed(seed)
    np.random.seed(seed)

# To plot pretty figures

get_ipython().run_line_magic('matplotlib', 'inline')

import matplotlib

import matplotlib.pyplot as plt

plt.rcParams['axes.labelsize'] = 14

plt.rcParams['xtick.labelsize'] = 12

plt.rcParams['ytick.labelsize'] = 12

# Where to save the figures

PROJECT_ROOT_DIR = "."

CHAPTER_ID = "autoencoders"

# Suppress warnings

import warnings

warnings.filterwarnings("ignore")

1. The autoencoder function

import tensorflow.contrib.layers as lays  # tf.contrib exists only in TensorFlow 1.x
import tensorflow as tf

def autoencoder(inputs):
    # encoder: 32x32x1 -> 16x16x32 -> 8x8x16 -> 2x2x8
    net = lays.conv2d(inputs, 32, [5,5], stride=2, padding='SAME')
    net = lays.conv2d(net, 16, [5,5], stride=2, padding='SAME')
    net = lays.conv2d(net, 8, [5,5], stride=4, padding='SAME')
    # decoder: 2x2x8 -> 8x8x16 -> 16x16x32 -> 32x32x1
    net = lays.conv2d_transpose(net, 16, [5,5], stride=4, padding='SAME')
    net = lays.conv2d_transpose(net, 32, [5,5], stride=2, padding='SAME')
    net = lays.conv2d_transpose(net, 1, [5,5], stride=2, padding='SAME')
    return net
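The strides in `autoencoder` determine the spatial size at every layer, and this is why the next step resizes MNIST from 28×28 to 32×32. The sketch below traces the sizes using the usual `'SAME'` padding rules (a strided convolution gives `ceil(size / stride)`, a strided transposed convolution gives `size * stride`). The helper names are made up for illustration.

```python
import math

def conv_same_out(size, stride):
    """Spatial output size of a strided convolution with 'SAME' padding."""
    return math.ceil(size / stride)

def conv_transpose_same_out(size, stride):
    """Spatial output size of a strided transposed convolution with 'SAME' padding."""
    return size * stride

def trace_sizes(size, encoder_strides, decoder_strides):
    """Return the spatial size after the input and after every layer."""
    sizes = [size]
    for s in encoder_strides:
        size = conv_same_out(size, s)
        sizes.append(size)
    for s in decoder_strides:
        size = conv_transpose_same_out(size, s)
        sizes.append(size)
    return sizes

# Strides taken from the autoencoder function above
print(trace_sizes(32, [2, 2, 4], [4, 2, 2]))  # [32, 16, 8, 2, 8, 16, 32]
print(trace_sizes(28, [2, 2, 4], [4, 2, 2]))  # [28, 14, 7, 2, 8, 16, 32]
```

With a 32×32 input the output is 32×32 again. With a 28×28 input it would come out as 32×32, so the reconstruction could not be subtracted from the input in the loss.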

2. Data processing: resizing 28×28 to 32×32

# Resize MNIST images from 28*28 to 32*32
import numpy as np
from skimage import transform

def resize_batch(imgs):
    imgs = imgs.reshape((-1, 28, 28, 1))
    resized_imgs = np.zeros((imgs.shape[0], 32, 32, 1))
    for i in range(imgs.shape[0]):
        resized_imgs[i, ..., 0] = transform.resize(imgs[i, ..., 0], (32, 32))
    return resized_imgs
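`resize_batch` interpolates each image up to 32×32. A different way to reach the same shape, shown here only as an alternative and not as what the notebook does, is to zero-pad two pixels on every side with `np.pad`. The function name `pad_batch` and the random test batch are hypothetical.

```python
import numpy as np

def pad_batch(imgs):
    """Zero-pad flattened 28x28 images to 32x32 (2 pixels on every side)."""
    imgs = imgs.reshape((-1, 28, 28, 1))
    # Pad only the two spatial axes; leave batch and channel axes untouched
    return np.pad(imgs, ((0, 0), (2, 2), (2, 2), (0, 0)), mode='constant')

fake = np.random.default_rng(0).random((4, 784)).astype(np.float32)
padded = pad_batch(fake)
print(padded.shape)  # (4, 32, 32, 1)
```

Padding keeps the original pixel values unchanged and avoids the per-image loop, whereas interpolation rescales the digit to fill the larger frame.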

3. Building the computation graph

batch_size = 500

epoch_num = 5

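lr = 0.1  # large for Adam; the log below stays near 0.10 and spikes once, so a smaller value such as 0.001 may train better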

ae_inputs = tf.placeholder(tf.float32, (None, 32, 32, 1))

ae_outputs = autoencoder(ae_inputs)

loss = tf.reduce_mean(tf.square(ae_outputs - ae_inputs))

train_op = tf.train.AdamOptimizer(learning_rate=lr).minimize(loss)

# initialize the network

init = tf.global_variables_initializer()
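The graph above minimizes the loss with `learning_rate=0.1`. How strongly the step size affects convergence can be seen on a toy problem. The sketch below uses plain gradient descent on f(w) = w², not Adam and not the network above, so it only illustrates the general sensitivity to step size. The function name and values are chosen for illustration.

```python
def gradient_descent(lr, w0=1.0, steps=20):
    """Minimize f(w) = w**2 with plain gradient descent; returns the final w."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * w       # derivative of w**2
        w = w - lr * grad    # gradient descent update
    return w

for lr in (0.01, 0.1, 1.1):
    print(lr, gradient_descent(lr))
```

Each update multiplies `w` by `(1 - 2 * lr)`, so small steps shrink `w` toward the minimum at 0, while `lr = 1.1` makes its magnitude grow on every step.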

4. Loading data and training

from tensorflow.examples.tutorials.mnist import input_data

# Load MNIST with the TF 1.x helper; the path is specific to the author's machine
mnist = input_data.read_data_sets(r'C:\Users\Administrator\Documents\MNIST', one_hot=True)

# Number of batches in one full pass over the training set
batch_per_ep = mnist.train.num_examples//batch_size

with tf.Session() as sess:
    sess.run(init)
    for ep in range(epoch_num):
        # Only 5 batches are run per epoch here; use range(batch_per_ep) for a full pass
        for batch_n in range(5):
            batch_img, batch_label = mnist.train.next_batch(batch_size)
            batch_img = batch_img.reshape((-1, 28, 28, 1))
            batch_img = resize_batch(batch_img)
            _, c = sess.run([train_op, loss], feed_dict={ae_inputs:batch_img})
            print('*'*100)
            print('Epoch: {} - cost = {:.5f}'.format((ep +1), c))

    # Reconstruct 50 test images with the trained network
    batch_img, batch_label = mnist.test.next_batch(50)
    batch_img = resize_batch(batch_img)
    recon_img = sess.run([ae_outputs], feed_dict={ae_inputs:batch_img})[0]

    # Plot the reconstructions
    plt.figure(1)
    plt.suptitle('reconstructed images')
    for i in range(50):
        plt.subplot(5, 10, i+1)
        plt.imshow(recon_img[i,..., 0], cmap='Blues')

    # Plot the corresponding inputs
    plt.figure(2)
    plt.title('input images')
    for i in range(50):
        plt.subplot(5, 10, i+1)
        plt.imshow(batch_img[i,...,0], cmap='gray')
    plt.show()

Extracting C:\Users\Administrator\Documents\MNIST\train-images-idx3-ubyte.gz

Extracting C:\Users\Administrator\Documents\MNIST\train-labels-idx1-ubyte.gz

Extracting C:\Users\Administrator\Documents\MNIST\t10k-images-idx3-ubyte.gz

Extracting C:\Users\Administrator\Documents\MNIST\t10k-labels-idx1-ubyte.gz

****************************************************************************************************

Epoch: 1 - cost = 0.10160

****************************************************************************************************

Epoch: 1 - cost = 37594284.00000

****************************************************************************************************

Epoch: 1 - cost = 0.08536

****************************************************************************************************

Epoch: 1 - cost = 0.09980

****************************************************************************************************

Epoch: 1 - cost = 0.09825

****************************************************************************************************

Epoch: 2 - cost = 0.10323

****************************************************************************************************

Epoch: 2 - cost = 0.10384

****************************************************************************************************

Epoch: 2 - cost = 0.09964

****************************************************************************************************

Epoch: 2 - cost = 0.09995

****************************************************************************************************

Epoch: 2 - cost = 0.10047

****************************************************************************************************

Epoch: 3 - cost = 0.10135

****************************************************************************************************

Epoch: 3 - cost = 0.10175

****************************************************************************************************

Epoch: 3 - cost = 0.10085

****************************************************************************************************

Epoch: 3 - cost = 0.09877

****************************************************************************************************

Epoch: 3 - cost = 0.09804

****************************************************************************************************

Epoch: 4 - cost = 0.10006

****************************************************************************************************

Epoch: 4 - cost = 0.10135

****************************************************************************************************

Epoch: 4 - cost = 0.10089

****************************************************************************************************

Epoch: 4 - cost = 0.10399

****************************************************************************************************

Epoch: 4 - cost = 0.09836

****************************************************************************************************

Epoch: 5 - cost = 0.10019

****************************************************************************************************

Epoch: 5 - cost = 0.10136

****************************************************************************************************

Epoch: 5 - cost = 0.10194

****************************************************************************************************

Epoch: 5 - cost = 0.09951

****************************************************************************************************

Epoch: 5 - cost = 0.10232

II. Autoencoder: exercise 2

Understanding the encoding and decoding process

https://blog.keras.io/building-autoencoders-in-keras.html

import numpy as np

from keras.layers import Input, Dense

from keras.models import Model

# Build the network: the full autoencoder model

encoding_dim = 32

input_img = Input(shape=(784, ))

encoded = Dense(encoding_dim, activation='relu')(input_img)

decoded = Dense(784, activation='sigmoid')(encoded)

autoencoder = Model(input_img, decoded)

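Building the network: separate encoder and decoder models

Splitting the network makes it easy to use the encoder on its own, for example to transform inputs into features.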

encoder = Model(input_img, encoded)

encoded_input = Input(shape=(encoding_dim, ))

decoded_layer = autoencoder.layers[-1]

decoder = Model(encoded_input, decoded_layer(encoded_input))

autoencoder.compile(optimizer='adadelta', loss='binary_crossentropy')

Data preparation

from keras.datasets import mnist

(x_train, _), (x_test, _) = mnist.load_data()

x_train = x_train.astype('float32')/255.

x_test = x_test.astype('float32')/255.

x_train = x_train.reshape((len(x_train), np.prod(x_train.shape[1:])))

x_test = x_test.reshape((len(x_test), np.prod(x_test.shape[1:])))

print(x_train.shape)

print(x_test.shape)

(60000, 784)

(10000, 784)
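The preparation step does two things: it scales pixel values to [0, 1] and flattens each 28×28 image into a 784-dimensional vector. The sketch below repeats those two operations on a small synthetic array so they can be checked without downloading MNIST. The random `images` array is a stand-in, not real data.

```python
import numpy as np

# Synthetic stand-in for MNIST: 6 random uint8 "images" of size 28x28
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(6, 28, 28), dtype=np.uint8)

# Scale pixel values from [0, 255] to [0, 1]
scaled = images.astype('float32') / 255.

# Flatten each 28x28 image into a 784-dimensional vector
flat = scaled.reshape((len(scaled), np.prod(scaled.shape[1:])))

print(flat.shape, flat.dtype, flat.min(), flat.max())
```

Scaling to [0, 1] matters here because the output layer is a sigmoid, whose values also lie in that range.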

Training

autoencoder.fit(x_train, x_train, verbose=2, epochs=20, batch_size=256, shuffle=True, validation_data=(x_test, x_test))

Train on 60000 samples, validate on 10000 samples

Epoch 1/20

- 15s - loss: 0.2631 - val_loss: 0.2517

Epoch 2/20

- 14s - loss: 0.2410 - val_loss: 0.2284

Epoch 3/20

- 13s - loss: 0.2209 - val_loss: 0.2113

Epoch 4/20

- 14s - loss: 0.2065 - val_loss: 0.1992

Epoch 5/20

- 14s - loss: 0.1960 - val_loss: 0.1900

Epoch 6/20

- 14s - loss: 0.1879 - val_loss: 0.1830

Epoch 7/20

- 15s - loss: 0.1814 - val_loss: 0.1771

Epoch 8/20

- 16s - loss: 0.1757 - val_loss: 0.1717

Epoch 9/20

- 16s - loss: 0.1706 - val_loss: 0.1669

Epoch 10/20

- 14s - loss: 0.1660 - val_loss: 0.1623

Epoch 11/20

- 14s - loss: 0.1617 - val_loss: 0.1583

Epoch 12/20

- 14s - loss: 0.1578 - val_loss: 0.1546

Epoch 13/20

- 16s - loss: 0.1543 - val_loss: 0.1514

Epoch 14/20

- 14s - loss: 0.1512 - val_loss: 0.1483

Epoch 15/20

- 14s - loss: 0.1483 - val_loss: 0.1455

Epoch 16/20

- 14s - loss: 0.1455 - val_loss: 0.1428

Epoch 17/20

- 14s - loss: 0.1429 - val_loss: 0.1402

Epoch 18/20

- 13s - loss: 0.1404 - val_loss: 0.1379

Epoch 19/20

- 14s - loss: 0.1381 - val_loss: 0.1354

Epoch 20/20

- 14s - loss: 0.1358 - val_loss: 0.1332

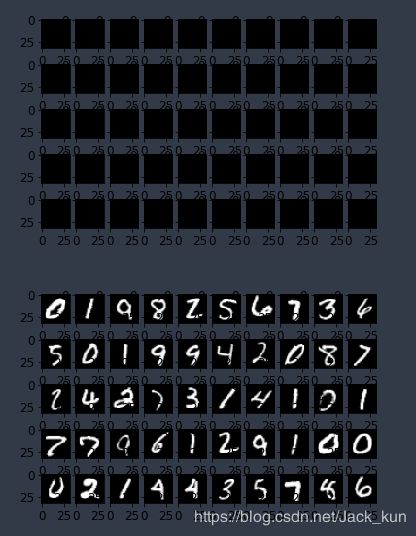
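The model is compiled with `loss='binary_crossentropy'`, so the values in the log are mean per-pixel cross-entropies rather than squared errors. A NumPy sketch of that quantity is given below, as an approximation of what the loss computes rather than Keras's own code. The `eps` clipping constant and the tiny example array are assumptions made for numerical safety and illustration.

```python
import numpy as np

def binary_crossentropy(x, x_rec, eps=1e-7):
    """Mean per-pixel binary cross-entropy between targets x and predictions x_rec."""
    x_rec = np.clip(x_rec, eps, 1.0 - eps)   # avoid log(0)
    return float(np.mean(-(x * np.log(x_rec) + (1.0 - x) * np.log(1.0 - x_rec))))

x = np.array([[0.0, 1.0, 0.5]])
perfect = binary_crossentropy(x, x)                       # reconstruction equals the input
uninformative = binary_crossentropy(x, np.full_like(x, 0.5))  # every pixel predicted as 0.5
print(perfect, uninformative)
```

Because MNIST pixels are grey levels rather than strict 0/1 values, this loss stays above zero even for a perfect reconstruction. That is one reason the training log levels off well above 0.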

Applying the model

encoded_imgs = encoder.predict(x_test)

decoded_imgs = decoder.predict(encoded_imgs)

import matplotlib.pyplot as plt

def plt_plot(x_test, decoded):
    n = 10 # how many digits we will display
    plt.figure(figsize=(20, 4))
    for i in range(n):
        # display original
        ax = plt.subplot(2, n, i + 1)
        plt.imshow(x_test[i].reshape(28, 28))
        plt.gray()
        ax.get_xaxis().set_visible(False)
        ax.get_yaxis().set_visible(False)

        # display reconstruction
        ax = plt.subplot(2, n, i + 1 + n)
        plt.imshow(decoded[i].reshape(28, 28))
        plt.gray()
        ax.get_xaxis().set_visible(False)
        ax.get_yaxis().set_visible(False)
    plt.suptitle('upper is original, under is reconstruction',fontsize=20)
    plt.show()

plt_plot(x_test, decoded_imgs)

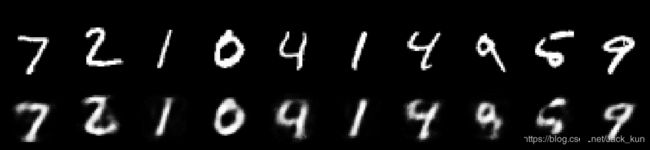

Does the split decoder give the same predictions on new data as the autoencoder trained as a whole?

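print(autoencoder.layers)
autoencoder.summary()  # summary() must be called; it prints the table itself

print('Full model output:\n {}'.format(autoencoder.predict(x_test[1:3,])))
print('\n')
print('Split encoder + decoder output:\n {}'.format(decoder.predict(encoder.predict(x_test[1:3,]))))

Full model output:

[[ 4.77199501e-05 1.71007414e-04 1.32167725e-05 ..., 5.18762681e-05
   1.27580452e-05 1.14900060e-04]
 [ 1.05760782e-03 2.56783795e-04 7.73304608e-04 ..., 2.13044506e-04
   4.67815640e-04 5.78384090e-04]]

Split encoder + decoder output:

[[ 4.77199501e-05 1.71007414e-04 1.32167725e-05 ..., 5.18762681e-05
   1.27580452e-05 1.14900060e-04]
 [ 1.05760782e-03 2.56783795e-04 7.73304608e-04 ..., 2.13044506e-04
   4.67815640e-04 5.78384090e-04]]

The two outputs are identical. This is expected: encoder is built from the same input_img and encoded tensors as the full model, and decoder wraps autoencoder.layers[-1], so both reuse the layers (and therefore the trained weights) of autoencoder.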
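The comparison above comes down to layer sharing: the split models wrap the same layer objects as the full model, so they hold the same weights. The sketch below mimics this with a tiny NumPy stand-in. The `Dense` class here is hypothetical and is not the Keras class; the random weights only illustrate the mechanism.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class Dense:
    """Tiny stand-in for a dense layer (hypothetical, not the Keras class)."""
    def __init__(self, n_in, n_out, activation, rng):
        self.w = rng.normal(scale=0.1, size=(n_in, n_out))
        self.b = np.zeros(n_out)
        self.activation = activation

    def __call__(self, x):
        return self.activation(x @ self.w + self.b)

rng = np.random.default_rng(0)
enc_layer = Dense(784, 32, relu, rng)
dec_layer = Dense(32, 784, sigmoid, rng)

def full_model(x):
    """Plays the role of autoencoder: both layers applied in sequence."""
    return dec_layer(enc_layer(x))

encoder = enc_layer   # reuses the same layer object, like Model(input_img, encoded)
decoder = dec_layer   # reuses the same layer object, like autoencoder.layers[-1]

x = rng.random((2, 784))
same = np.allclose(full_model(x), decoder(encoder(x)))

# Changing the shared weights changes both paths together
dec_layer.w += 0.01
still_same = np.allclose(full_model(x), decoder(encoder(x)))
print(same, still_same)
```

Since no weights are copied, training the full model updates what the split encoder and decoder compute as well.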