强化学习ActorCritic

ActorCritic

Actor负责进行动作的奖惩,而Critic将对奖惩进行评估,从而对下一步的奖惩做出影响

Actor的算法基础是PolicyGradients,Critic的算法基础是Q-learning

Actor只能回合更新,而Critic部分可以单步更新

缺点就是空间的连续性,从而导致神经网络学不到东西,相关性比较强

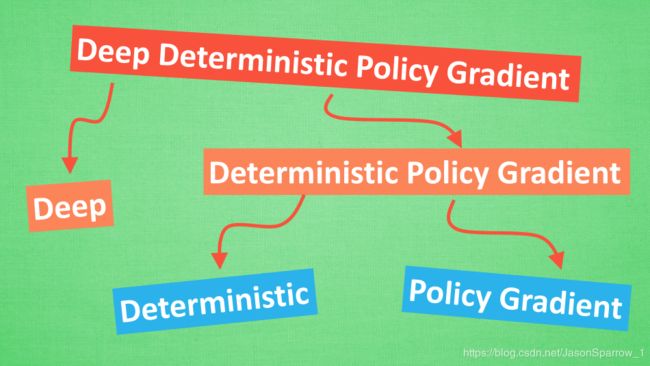

为了解决这种问题,DeepMind将ActorCritic和DQN结合,从而产生了Deep Deterministic Policy Gradient

DDPG

- Deep方面,网络相比较DQN复杂一点点

- Deterministic方面,在连续动作上生成一个动作值

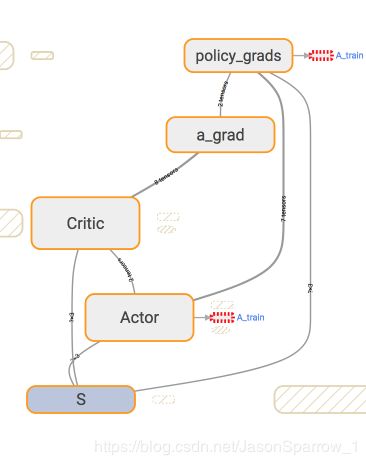

网络设计上面,Critic部分需要利用Actor的网络输出结果进行最后的状态值输出 - Actor和Critic两部分的网络构建

with tf.variable_scope('Actor'): # input s, output a self.a = self._build_net(S, scope='eval_net', trainable=True) # 输出的a_是用于给Critic网络提供参考,从而进行更好的预测下一步的action self.a_ = self._build_net(S_, scope='target_net', trainable=False) with tf.variable_scope('Critic'): # Input (s, a), output q self.a = tf.stop_gradient(a) # stop critic update flows to actor self.q = self._build_net(S, self.a, 'eval_net', trainable=True) # 此处的a_正是从Actor中得到的 self.q_ = self._build_net(S_, a_, 'target_net', trainable=False) - Actor的学习方式及更新内容

根据下图的网络结构,Actor根据Critic得到了a_grad,并利用S计算出了下一步的a,

def choose_action(self, s): s = s[np.newaxis, :] # single state return self.sess.run(self.a, feed_dict={S: s})[0] # single action def add_grad_to_graph(self, a_grads): with tf.variable_scope('policy_grads'): # ys = policy; # xs = policy's parameters; # a_grads = the gradients of the policy to get more Q # tf.gradients will calculate dys/dxs with a initial gradients for ys, so this is dq/da * da/dparams self.policy_grads = tf.gradients(ys=self.a, xs=self.e_params, grad_ys=a_grads) with tf.variable_scope('A_train'): opt = tf.train.AdamOptimizer(-self.lr) # (- learning rate) for ascent policy self.train_op = opt.apply_gradients(zip(self.policy_grads, self.e_params)) # 在Critic class中 with tf.variable_scope('a_grad'): # 这个a是choose_action中计算出来的 self.a_grads = tf.gradients(self.q, a)[0] - Critic中的网络结构和代码

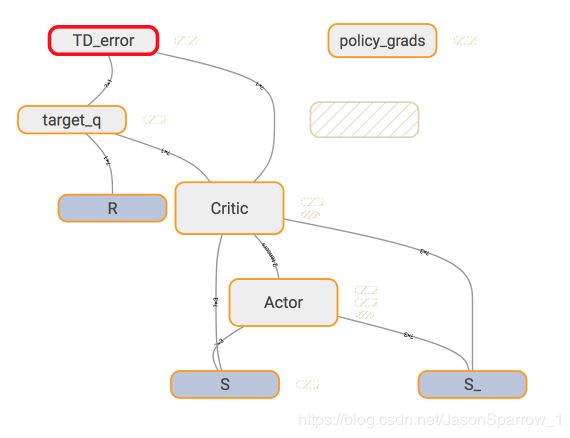

相比Actor中的内容,Critic就比较简单和常规

利用TD_error进行反向误差传播,target_q进行reward计算

# 其中的self.q_是根据Actor的target_q计算出来的 with tf.variable_scope('target_q'): self.target_q = R + self.gamma * self.q_ with tf.variable_scope('TD_error'): self.loss = tf.reduce_mean(tf.squared_difference(self.target_q, self.q)) with tf.variable_scope('C_train'): self.train_op = tf.train.AdamOptimizer(self.lr).minimize(self.loss)

A3C

通过在三个环境中进行学习,将reward反馈到最终要执行的环境中,从而得出更优的结果

基于python多线程

中央大脑有global_net,而每个玩家有一个local_net,不定时将local_net的参数更新到global_net中

- 同步的实现

with tf.name_scope('sync'): with tf.name_scope('pull'): # 从global中获取参数集合 self.pull_a_params_op = [l_p.assign(g_p) for l_p, g_p in zip(self.a_params, globalAC.a_params)] self.pull_c_params_op = [l_p.assign(g_p) for l_p, g_p in zip(self.c_params, globalAC.c_params)] with tf.name_scope('push'): # 将worker中的参数更新到global参数中 self.update_a_op = OPT_A.apply_gradients(zip(self.a_grads, globalAC.a_params)) self.update_c_op = OPT_C.apply_gradients(zip(self.c_grads, globalAC.c_params)) - 利用正态分布进行action选择

with tf.name_scope('choose_a'): # use local params to choose action self.A = tf.clip_by_value(tf.squeeze(normal_dist.sample(1), axis=[0, 1]), A_BOUND[0], A_BOUND[1]) - python多线程

COORD = tf.train.Coordinator() worker_threads = [] for worker in workers: job = lambda: worker.work() t = threading.Thread(target=job) t.start() worker_threads.append(t) COORD.join(worker_threads) - worker的数据更新

for ep_t in range(MAX_EP_STEP): # if self.name == 'W_0': # self.env.render() a = self.AC.choose_action(s) s_, r, done, info = self.env.step(a) done = True if ep_t == MAX_EP_STEP - 1 else False # 添加缓存 ep_r += r buffer_s.append(s) buffer_a.append(a) buffer_r.append((r+8)/8) # normalize if total_step % UPDATE_GLOBAL_ITER == 0 or done: # update global and assign to local net if done: v_s_ = 0 # terminal 根据原算法 else: v_s_ = SESS.run(self.AC.v, {self.AC.s: s_[np.newaxis, :]})[0, 0] buffer_v_target = [] # 计算TD error for r in buffer_r[::-1]: # reverse buffer r v_s_ = r + GAMMA * v_s_ buffer_v_target.append(v_s_) buffer_v_target.reverse() buffer_s, buffer_a, buffer_v_target = np.vstack(buffer_s), np.vstack(buffer_a), np.vstack(buffer_v_target) feed_dict = { self.AC.s: buffer_s, self.AC.a_his: buffer_a, self.AC.v_target: buffer_v_target, } # 推送更新到global self.AC.update_global(feed_dict) # 清空缓存 buffer_s, buffer_a, buffer_r = [], [], [] # 获取最新的global参数 self.AC.pull_global()