Taobao Review Scraper in Python

Reference: http://www.10tiao.com/html/284/201608/2652390011/1.html

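The requests-based code in the linked article would run for a while and then fail to connect to the remote host, so I modified it here. The product page is: https://detail.tmall.com/item.htm?spm=a230r.1.14.6.ebc5950lJXMdL&id=546515778506&cm_id=140105335569ed55e27b&abbucket=3&skuId=3467681798994. Press F12 to open the developer tools and, under Network, find the request whose name starts with list_detail_rate.htm?itemId. Open it in a new tab and you will see that it is the review data. If that is unclear, go back to the illustrated walkthrough in the original link. I fetch the pages with selenium here, and found that only 99 pages can be scraped: the page source for page 100 and above is just a repeat. Can anyone who knows explain why?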
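To make the shape of that request concrete, here is a minimal sketch of unwrapping a JSONP response of the form jsonp563(...). The sample string is made up for illustration and is much smaller than a real response, and the helper name unwrap_jsonp is my own, not something from the original article.

```python
import json
import re

# Hypothetical sample; a real response carries many more fields per review.
sample = ('jsonp563({"rateDetail": {"rateList": ['
          '{"rateContent": "Good quality"}, {"rateContent": "Fast delivery"}]}})')


def unwrap_jsonp(text):
    """Return the JSON value found inside a callback(...) wrapper."""
    match = re.search(r'\w+\((.*)\)', text, re.S)
    if match is None:
        raise ValueError("no JSONP wrapper found")
    return json.loads(match.group(1))


data = unwrap_jsonp(sample)
contents = [rate["rateContent"] for rate in data["rateDetail"]["rateList"]]
print(contents)
```

The greedy `(.*)` runs to the last closing parenthesis, so parentheses inside review text do not cut the payload short.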

from selenium import webdriver

import re

import json

import time

options = webdriver.ChromeOptions()

options.add_argument('disable-infobars')

driver = webdriver.Chrome(chrome_options=options)

comments=[]

for num in range(1,100):

print (num)

url="https://rate.tmall.com/list_detail_rate.htm?itemId=546515778506&spuId=811996209&sellerId=2996832334&order=3¤tPage="+str(num)+"&append=0&content=1&tagId=&posi=&picture=&ua&isg=AlBQD2WkPRrw9uEfSCoGx8wDIZ5isWQcWE_u1EohMat_hfAv8ikE86b1K3ue&needFold=0&_ksTS=1506407969283_562&callback=jsonp563"

success=False

id=1

while id<=5 and not success:

time.sleep(1)

driver.get(url)

source=driver.page_source

find=source.find("rgv587_flag")

if find==-1:

success=True

else:

id+=1

rex=re.compile(r'\w+[(]{1}(.*)[)]{1}')

content=rex.findall(source)[0]

con=json.loads(content,"gbk")

count=len(con['rateDetail']['rateList'])

for i in range(count):

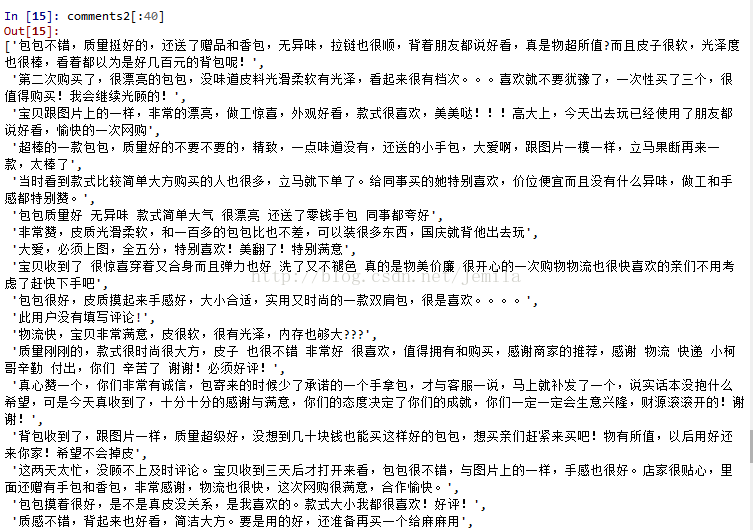

comments.append(con['rateDetail']['rateList'][i]['rateContent']) 最后抓取的内容保存在comments里面,另外网址后面的几个输入是动态更新的,所以过几分钟刷新一下网页会发现可能评论的顺序变了,结果如下:
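Since pages 100 and above come back as repeats, one possible workaround is to stop as soon as a page repeats one already seen. The following is only a sketch under that assumption: the function names and the sample pages are hypothetical, and it works on plain lists of comment strings rather than on live responses.

```python
import hashlib


def page_fingerprint(page_comments):
    """Hash the comment texts of one page so a repeated page can be recognised."""
    joined = "\n".join(page_comments)
    return hashlib.md5(joined.encode("utf-8")).hexdigest()


def collect_unique_pages(pages):
    """Gather comments page by page, stopping at the first repeated page."""
    seen = set()
    collected = []
    for page_comments in pages:
        fingerprint = page_fingerprint(page_comments)
        if fingerprint in seen:
            break  # this page was already seen, so later pages are assumed to repeat too
        seen.add(fingerprint)
        collected.extend(page_comments)
    return collected


# Hypothetical pages: the third one repeats the second.
pages = [["a", "b"], ["c", "d"], ["c", "d"], ["e"]]
result = collect_unique_pages(pages)
print(result)
```

This only catches exact repeats of a whole page. If the review order shifts between requests, comparing individual comments instead of whole pages may work better.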