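Multi-Table Joins with Map and Reduce

Multi-table joins in MapReduce:

Choosing a join strategy:

Map: suited to joining one small table with one large table

Reduce: suited to the case where both tables are large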

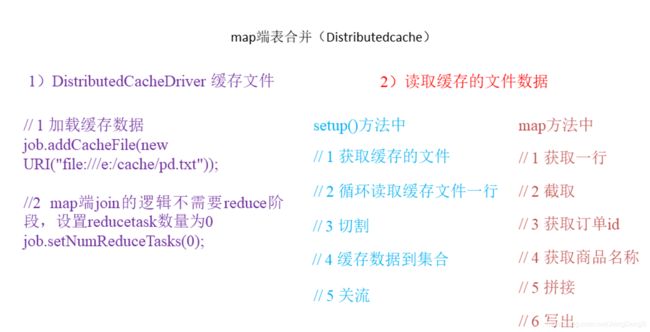

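Map-side join:

Advantage: suited to joins in which one of the tables is small.

The small table can be distributed to every map node, so each map node joins the portion of the large table it reads against its own local copy and writes the final result directly. Because the join itself needs no shuffle or reduce phase, this raises the parallelism of the join and speeds up processing.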
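The idea described above can be sketched in plain Java, without Hadoop: load the small table into a hash map once, then stream the large table and look up each row. This is a minimal sketch under stated assumptions, not a Hadoop job. The class name `MapSideJoinSketch`, its method names, and the sample rows are all hypothetical. The field layout (`pid`, `pname` for the small table and `orderId`, `pid`, `amount` for the large one) is assumed to match the tab-separated files used in this post.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, Hadoop-free illustration of a map-side (hash) join.
class MapSideJoinSketch {

    // Builds the in-memory lookup from the small table: pid -> pname.
    static Map<String, String> loadSmallTable(List<String> lines) {
        Map<String, String> products = new HashMap<>();
        for (String line : lines) {
            String[] f = line.split("\t");
            if (f.length >= 2) {
                products.put(f[0], f[1]);
            }
        }
        return products;
    }

    // Streams the large table (orderId, pid, amount) and joins each row
    // against the lookup. Rows without a matching pid are dropped.
    static List<String> join(List<String> orderLines, Map<String, String> products) {
        List<String> out = new ArrayList<>();
        for (String line : orderLines) {
            String[] f = line.split("\t");
            if (f.length < 3) {
                continue; // skip malformed rows
            }
            String pname = products.get(f[1]);
            if (pname != null) {
                out.add(f[0] + "\t" + pname + "\t" + f[2]);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Made-up sample rows, for illustration only.
        Map<String, String> products =
                loadSmallTable(Arrays.asList("01\tXiaomi", "02\tHuawei"));
        List<String> joined = join(
                Arrays.asList("1001\t01\t1", "1002\t02\t2", "1003\t03\t3"), products);
        joined.forEach(System.out::println);
    }
}
```

In a real job, `loadSmallTable` corresponds to the work done once per map task in `setup`, and `join` corresponds to the work done per record in `map`.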

Implementation:

MapJoin.java

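import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

public class MapJoin extends Mapper<LongWritable, Text, Text, NullWritable> {

    // Product id -> product name, loaded from the small table
    private final Map<String, String> products = new HashMap<>();
    private final Text outKey = new Text();

    /**
     * Initialization: loads the cached small table into memory once per map task.
     */
    @Override
    protected void setup(Context context) throws IOException, InterruptedException {
        // Locate the cached file (small table) registered by the driver
        URI[] cacheFiles = context.getCacheFiles();
        if (cacheFiles == null || cacheFiles.length == 0) {
            throw new IOException("No cache file registered for the small table");
        }
        Path path = new Path(cacheFiles[0]);
        FileSystem fs = path.getFileSystem(context.getConfiguration());

        // Read line by line; the reader is closed automatically
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(fs.open(path), StandardCharsets.UTF_8))) {
            String line;
            while ((line = reader.readLine()) != null) {
                // Split into fields and skip blank or malformed lines
                String[] split = line.split("\t");
                if (split.length < 2) {
                    continue;
                }
                // Store the row in the lookup map
                products.put(split[0], split[1]);
            }
        }
    }

    /**
     * Join: looks up the product name for each order record.
     */
    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Read one record of the large table: order id, product id, amount
        String[] split = value.toString().split("\t");
        if (split.length < 3) {
            return;
        }
        // Emit only the orders whose product id exists in the small table
        String pname = products.get(split[1]);
        if (pname != null) {
            outKey.set(split[0] + "\t" + pname + "\t" + split[2]);
            context.write(outKey, NullWritable.get());
        }
    }
}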

JoinDriver.java

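import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.net.URI;

public class JoinDriver {

    public static void main(String[] args) throws Exception {
        // Hard-coded local paths for testing: the large table and the output directory
        args = new String[]{"C:\\Users\\Jds\\Desktop\\Data\\order.txt",
                "C:\\Users\\Jds\\Desktop\\Data\\Join1"};

        // Load the configuration and create the job
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);

        // Set the jar and the mapper class
        job.setJarByClass(JoinDriver.class);
        job.setMapperClass(MapJoin.class);

        // Output key and value types
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(NullWritable.class);

        // Input and output paths
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Register the small table as a cache file
        job.addCacheFile(new URI("file:///C:/Users/Jds/Desktop/Data/pd.txt"));

        // The join finishes in the map phase, so no reduce tasks are needed
        job.setNumReduceTasks(0);

        // Submit the job and report success or failure through the exit code
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}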

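Reduce-side join

The join key is used as the map output key. Each record from either table is tagged with the file it came from, so all records that share a join key are sent to the same reduce task, and the two sides are stitched together in the reducer.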
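The flow just described (tag, group by key, stitch together) can be sketched in plain Java, with a sorted map standing in for the shuffle. This is a minimal sketch under stated assumptions rather than a Hadoop job. The class name `ReduceSideJoinSketch`, its methods, and the sample rows are hypothetical. The tags `"0"` for orders and `"1"` for products follow the convention used in this post. As a simplification, orders with no matching product are dropped here (an inner join).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical, Hadoop-free illustration of a reduce-side join.
class ReduceSideJoinSketch {

    // "Map" + "shuffle": tag every record with its source table and
    // group it under the join key (pid). TreeMap mimics sorted keys.
    static Map<String, List<String[]>> mapAndShuffle(List<String> orders, List<String> products) {
        Map<String, List<String[]>> groups = new TreeMap<>();
        for (String line : orders) {
            String[] f = line.split("\t"); // orderId, pid, amount
            groups.computeIfAbsent(f[1], k -> new ArrayList<>())
                  .add(new String[]{"0", f[0], f[2]});
        }
        for (String line : products) {
            String[] f = line.split("\t"); // pid, pname
            groups.computeIfAbsent(f[0], k -> new ArrayList<>())
                  .add(new String[]{"1", f[1]});
        }
        return groups;
    }

    // "Reduce": for one key, separate the two sides by tag, then attach
    // the product name to every order of that product.
    static List<String> reduce(List<String[]> values) {
        List<String[]> orders = new ArrayList<>();
        String pname = null;
        for (String[] v : values) {
            if ("0".equals(v[0])) {
                orders.add(v);
            } else {
                pname = v[1];
            }
        }
        List<String> out = new ArrayList<>();
        if (pname == null) {
            return out; // no product record for this key: inner join drops it
        }
        for (String[] o : orders) {
            out.add(o[1] + "\t" + pname + "\t" + o[2]);
        }
        return out;
    }

    static List<String> join(List<String> orders, List<String> products) {
        List<String> out = new ArrayList<>();
        for (List<String[]> group : mapAndShuffle(orders, products).values()) {
            out.addAll(reduce(group));
        }
        return out;
    }

    public static void main(String[] args) {
        // Made-up sample rows, for illustration only.
        join(Arrays.asList("1001\t01\t1", "1002\t02\t2", "1003\t01\t3"),
             Arrays.asList("01\tXiaomi", "02\tHuawei"))
            .forEach(System.out::println);
    }
}
```

Every record of both tables passes through the grouping step, which is why this approach costs more than a map-side join but does not require either table to fit in memory as a whole.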

Implementation:

Joinbean.java

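package Com.Join.Map.Reduce;

import lombok.AllArgsConstructor;
import lombok.Getter;
import lombok.NoArgsConstructor;
import lombok.Setter;
import org.apache.hadoop.io.Writable;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

@Getter
@Setter
@AllArgsConstructor
@NoArgsConstructor
public class Joinbean implements Writable {

    /** Order id */
    private String order_id;

    /** Product id */
    private String p_id;

    /** Product quantity */
    private int amount;

    /** Product name */
    private String pname;

    /** Source-table tag: "0" for an order record, "1" for a product record */
    private String flag;

    /**
     * Serialization. writeUTF does not accept null, so every String field
     * must be set (use "" for fields that do not apply).
     */
    @Override
    public void write(DataOutput out) throws IOException {
        out.writeUTF(order_id);
        out.writeUTF(p_id);
        out.writeInt(amount);
        out.writeUTF(pname);
        out.writeUTF(flag);
    }

    /**
     * Deserialization. Fields are read in the same order in which write emits them.
     */
    @Override
    public void readFields(DataInput in) throws IOException {
        this.order_id = in.readUTF();
        this.p_id = in.readUTF();
        this.amount = in.readInt();
        this.pname = in.readUTF();
        this.flag = in.readUTF();
    }

    /** Output line: order id, product name, amount (tab-separated) */
    @Override
    public String toString() {
        return order_id + "\t" + pname + "\t" + amount;
    }
}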

JoinMap.java

package Com.Join.Map.Reduce;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.lib.input.FileSplit;

import java.io.IOException;

public class JoinMap extends Mapper<LongWritable, Text, Text, Joinbean> {

    // Reused across map() calls to avoid allocating objects per record
    private final Joinbean bean = new Joinbean();
    private final Text k = new Text();

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Identify the source table from the input file name
        FileSplit split = (FileSplit) context.getInputSplit();
        String name = split.getPath().getName();

        // Read the input line and split it into fields
        String[] fields = value.toString().split("\t");

        // Handle the two files differently
        if (name.startsWith("order")) {
            // Order record (order id, product id, amount): fill the bean
            bean.setOrder_id(fields[0]);
            bean.setP_id(fields[1]);
            bean.setAmount(Integer.parseInt(fields[2]));
            bean.setPname("");
            bean.setFlag("0");
            k.set(fields[1]);
        } else {
            // Product record (product id, product name): fill the bean
            bean.setP_id(fields[0]);
            bean.setPname(fields[1]);
            bean.setFlag("1");
            bean.setAmount(0);
            bean.setOrder_id("");
            k.set(fields[0]);
        }

        // Emit the record keyed by product id
        context.write(k, bean);
    }
}

ReduceJoin.java

package Com.Join.Map.Reduce;

import org.apache.commons.beanutils.BeanUtils;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;
import java.lang.reflect.InvocationTargetException;
import java.util.ArrayList;
import java.util.List;

public class ReduceJoin extends Reducer<Text, Joinbean, Joinbean, NullWritable> {

    @Override
    protected void reduce(Text key, Iterable<Joinbean> values, Context context) throws IOException, InterruptedException {
        // Collection that holds the order records for this product id
        List<Joinbean> orderBeans = new ArrayList<>();
        // Bean that holds the product record for this product id
        Joinbean pdBean = new Joinbean();

        for (Joinbean bean : values) {
            // Hadoop reuses the same value object on every iteration,
            // so each record must be copied before it is kept
            try {
                if ("0".equals(bean.getFlag())) {
                    // Copy each incoming order record into the collection
                    Joinbean orderBean = new Joinbean();
                    BeanUtils.copyProperties(orderBean, bean);
                    orderBeans.add(orderBean);
                } else {
                    // Copy the incoming product record into memory
                    BeanUtils.copyProperties(pdBean, bean);
                }
            } catch (IllegalAccessException | InvocationTargetException e) {
                // Fail the task instead of silently writing incomplete rows
                throw new IOException("Failed to copy record for key " + key, e);
            }
        }

        // Join: fill in the product name on every order and write it out
        for (Joinbean bean : orderBeans) {
            bean.setPname(pdBean.getPname());
            context.write(bean, NullWritable.get());
        }
    }
}

DriveJoin.java

package Com.Join.Map.Reduce;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class DriveJoin {

    public static void main(String[] args) throws Exception {
        // Hard-coded local paths for testing: the input directory (both tables) and the output directory
        args = new String[]{"C:\\Users\\Jds\\Desktop\\Data\\Join\\",
                "C:\\Users\\Jds\\Desktop\\Data\\A1"};

        // Load the configuration and create the job
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);

        // Set the jar, mapper, and reducer classes
        job.setJarByClass(DriveJoin.class);
        job.setMapperClass(JoinMap.class);
        job.setReducerClass(ReduceJoin.class);

        // Map output types and final output types
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(Joinbean.class);
        job.setOutputKeyClass(Joinbean.class);
        job.setOutputValueClass(NullWritable.class);

        // Input and output directories
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Submit the job and report success or failure through the exit code
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}