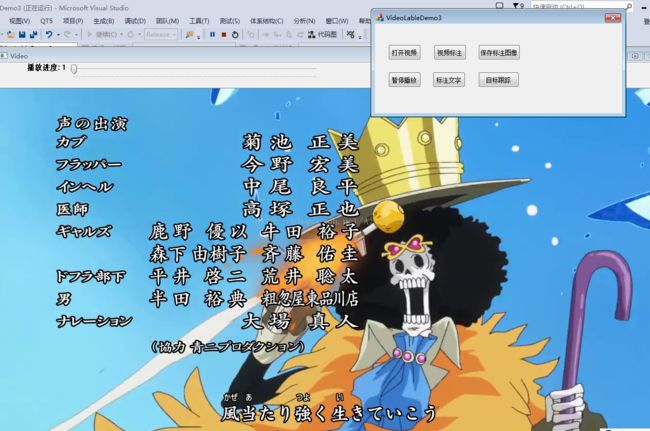

Video playback, pause, annotation, and tracking with OpenCV + MFC

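I have recently been working on video annotation and tracking, and consulted a lot of material along the way.

The basic features are now working.

I'll post the code and a screenshot of the result first.

Environment: VS2013 + OpenCV 2.4.8

Note: the VS2013 project is an MFC dialog-based application.

The code is as follows: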

#include "CvvImage.h"

#include "opencv2/opencv.hpp"

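// The header names were lost from the original listing; the code below needs at least these standard headers.
#include <ctime>
#include <fstream>
#include <iostream>
#include <string>
#include <vector>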

CEvent start_event;

int terminate_flag;

#ifdef _DEBUG

#define new DEBUG_NEW

#endif

using namespace std;

using namespace cv;

// Global variables

bool is_drawing = false;

vector<Rect> rectVec;

vector<Rect> biaozhu_boxs; // annotation boxes drawn by the user

Rect drawing_box;

Mat img_original, img_drawing;

IplImage* pFrame;

bool flag;

Scalar blue = Scalar(255, 0, 0);

Scalar red = Scalar(0, 0, 255);

Scalar black = Scalar(0, 0, 0);

Scalar white = Scalar(255, 255, 255);

DWORD WINAPI PlayVideo(LPVOID lpParam);

VideoCapture capture;

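const char* trackBarName = "播放进度"; // trackbar label ("playback progress")

double totalFrame = 1.0; // total number of frames in the video

double currentFrame = 1.0; // frame currently being played

int trackbarValue = 1; // trackbar position

int trackbarMax = 255; // maximum trackbar position

double frameRate = 1.0; // video frame rate

double controlRate = 0.1; // target frame index computed from the trackbar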

// Trackbar callback
void TrackBarFunc(int, void*)
{
    // Map the trackbar position to a frame index so the trackbar controls playback progress
    controlRate = (double)trackbarValue / trackbarMax * totalFrame;
    capture.set(CV_CAP_PROP_POS_FRAMES, controlRate); // seek to that frame
}

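// Play the video (thread entry point)
DWORD WINAPI PlayVideo(LPVOID lpParam)
{
    CVideoLableDemo3Dlg* pThis = (CVideoLableDemo3Dlg*)lpParam;

    // [1] Open the video file
    capture.open("HaiZeiWang.mp4");

    // [2] Check whether it opened successfully
    if (!capture.isOpened())
    {
        return (DWORD)-1;
    }

    totalFrame = capture.get(CV_CAP_PROP_FRAME_COUNT); // total number of frames
    frameRate = capture.get(CV_CAP_PROP_FPS);          // frame rate
    double pauseTime = 1000 / frameRate;               // interval between two frames in ms, derived from the frame rate

    // Create the trackbar on the image window; the window has to exist first
    namedWindow("Video");
    createTrackbar(trackBarName, "Video", &trackbarValue, trackbarMax, TrackBarFunc);
    TrackBarFunc(0, 0);

    while (1)
    {
        capture >> img_original;
        if (img_original.empty())
        {
            break;
        }
        img_original.copyTo(img_drawing);

        // Redraw the annotation boxes on every frame so they stay visible during playback
        for (vector<Rect>::iterator it = biaozhu_boxs.begin(); it != biaozhu_boxs.end(); ++it)
        {
            rectangle(img_drawing, (*it), Scalar(0, 255, 0));
        }

        // Block here while paused. A default-constructed CEvent is auto-reset,
        // so signal it again after a successful wait to keep playing.
        WaitForSingleObject(start_event, INFINITE);
        start_event.SetEvent();

        if (terminate_flag == -1)
        {
            terminate_flag = 0;
            return 0; // returning ends a thread started with CreateThread
        }

        if (flag)
        {
            CvxText text("simhei.ttf");
            const char *msg = "在OpenCV中输出汉字!"; // "Output Chinese characters in OpenCV!"
            float p = 1;
            text.setFont(NULL, NULL, NULL, &p); // transparency
            IplImage ipl_drawing = img_drawing;  // header only, shares pixel data with img_drawing
            text.putText(&ipl_drawing, msg, cvPoint(100, 400), blue);
        }

        imshow("Video", img_drawing); // show the frame in the window
        char c = cvWaitKey(33);
    }
    return 0;
}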

// Open the video
void CVideoLableDemo3Dlg::OnBnClickedOpenvideo()
{
    HANDLE hThreadSend; // handle of the separate playback thread
    DWORD ThreadSendID;

    start_event.SetEvent();
    hThreadSend = CreateThread(NULL, 0, PlayVideo, (LPVOID)this, 0, &ThreadSendID);
    CloseHandle(hThreadSend);
}

// Pause / resume playback
void CVideoLableDemo3Dlg::OnBnClickedSuspendvideo()
{
    //f_capture_update = false;
    CString buttonText;
    m_StopButton.GetWindowText(buttonText);

    if (buttonText.Compare(_T("暂停播放")) == 0) // button currently reads "Pause"
    {
        start_event.ResetEvent();
        m_StopButton.SetWindowText(_T("继续")); // "Resume"
    }
    else
    {
        start_event.SetEvent();
        m_StopButton.SetWindowText(_T("暂停播放")); // "Pause"
    }
}
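The pause button works by resetting an event that the playback thread waits on each frame. The same idea can be sketched in portable C++11 with a mutex and a condition variable instead of MFC's `CEvent`; this is a swapped-in technique for illustration, and the `PauseGate` class and its method names are hypothetical.

```cpp
#include <condition_variable>
#include <mutex>

// Hypothetical stand-in for the CEvent used in the listing: a gate that a
// worker thread passes through once per frame and that the UI can close.
class PauseGate
{
public:
    // Close the gate; the worker blocks at its next wait().
    void pause()
    {
        std::lock_guard<std::mutex> lock(mutex_);
        open_ = false;
    }

    // Open the gate and wake any blocked worker.
    void resume()
    {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            open_ = true;
        }
        cv_.notify_all();
    }

    // Called by the worker once per frame; returns immediately while open.
    void wait()
    {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait(lock, [this] { return open_; });
    }

    bool isOpen()
    {
        std::lock_guard<std::mutex> lock(mutex_);
        return open_;
    }

private:
    std::mutex mutex_;
    std::condition_variable cv_;
    bool open_ = true; // playback starts unpaused
};
```

Unlike an auto-reset event, this gate stays open until `pause()` is called, so the worker does not need to re-signal it after every wait.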

// Video annotation
void CVideoLableDemo3Dlg::OnBnClickedLable()
{
    // TODO: add the control notification handler code here
    //start_event.ResetEvent();
    //m_StopButton.SetWindowTextW(_T("继续"));

    img_original.copyTo(img_drawing);
    setMouseCallback("Video", onMouse, 0); // onMouse is not shown in this listing
    int frame_counter = 0;

    while (1)
    {
        int c = waitKey(0);
        if ((c & 255) == 27) // Esc
        {
            cout << "Exiting ...\n";
            break;
        }

        switch ((char)c)
        {
        case 'n':
        {
            // read the next frame
            ++frame_counter;
            Mat next;
            capture >> next;
            if (next.empty())
            {
                cout << "\nVideo Finished!" << endl;
                break; // keep showing the last valid frame
            }
            next.copyTo(img_original);
            img_original.copyTo(img_drawing);
            // redraw all of the annotation boxes
            for (vector<Rect>::iterator it = biaozhu_boxs.begin(); it != biaozhu_boxs.end(); ++it)
            {
                rectangle(img_drawing, (*it), Scalar(0, 255, 0));
            }
            break;
        }
        case 'z':
            // undo the latest annotation
            if (!biaozhu_boxs.empty())
            {
                biaozhu_boxs.pop_back();
            }
            img_original.copyTo(img_drawing);
            for (vector<Rect>::iterator it = biaozhu_boxs.begin(); it != biaozhu_boxs.end(); ++it)
            {
                rectangle(img_drawing, (*it), Scalar(0, 255, 0));
            }
            break;
        case 'c':
            // clear all the boxes on the image
            biaozhu_boxs.clear();
            img_original.copyTo(img_drawing);
            break;
        }

        imshow("Video", img_drawing);
    }
}

// This part is not used yet
void CVideoLableDemo3Dlg::ImageText(Mat& img, const char *text, Point left_top, Point right_bottom, Scalar fonts_color, int thickness, float row_spacing)
{
    CvxText fonts("..\\3rdparty\\script\\msyh.ttc");

    CvPoint point;
    point.x = left_top.x;
    point.y = left_top.y + thickness; // putText takes the bottom-left corner of the text as its origin, so convert the coordinate

    float p = 1; // font opacity

    CvScalar type;
    type.val[0] = thickness; // font size
    type.val[1] = 0.5;       // size ratio of blank characters
    type.val[2] = 0.1;       // spacing ratio
    type.val[3] = 0;         // rotation angle (not supported)

    fonts.setFont(NULL, &type, NULL, &p);
}

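// Read a whole text file into a string, one "\n" after each line
string readTxt(string file)
{
    string final;
    ifstream fin;
    fin.open(file);

    string str;
    // Test getline itself rather than eof(), so a failed read does not append a spurious empty line
    while (getline(fin, str))
    {
        final = final + str + "\n";
    }

    cout << final << endl;
    fin.close();
    return final;
}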

// Text annotation

void CVideoLableDemo3Dlg::OnBnClickedVideotextlable()

{

flag = true;

}

// Save the current frame as an image
void CVideoLableDemo3Dlg::OnBnClickedSavepicture()
{
    time_t t = time(NULL); // current calendar time
    tm *local = localtime(&t);
    char time_name[30];

    // File name of the saved image; a save path can be included here as well
    sprintf(time_name, "%d.%d.%d.%d.%d%s",
        local->tm_year + 1900, local->tm_mon + 1,
        local->tm_mday, local->tm_hour, local->tm_min, ".jpg");

    imwrite(time_name, img_drawing); // with no path given, the image is saved in the current VS project folder
}
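The save handler builds a file name from the current time with `sprintf`. As an alternative sketch, `std::strftime` can produce a zero-padded name that sorts chronologically and contains no spaces. The function name `makeSnapshotName` and the exact name pattern are assumptions chosen for illustration.

```cpp
#include <cstddef>
#include <ctime>
#include <string>

// Hypothetical helper, not in the original listing: build a file name such as
// "2024-03-05_14-07-09.jpg" from a broken-down time.
std::string makeSnapshotName(const std::tm& when)
{
    char buf[32]; // the pattern below needs 23 characters plus the terminator
    std::size_t n = std::strftime(buf, sizeof(buf), "%Y-%m-%d_%H-%M-%S.jpg", &when);
    return std::string(buf, n);
}
```

In the handler, the result of `localtime(&t)` could be dereferenced and passed in, and the returned string handed to `imwrite`. Including seconds makes it less likely that two snapshots taken within the same minute overwrite each other.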

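// Globals used by the tracking code. They are not declared in the original listing;
// the declarations and initial values below follow OpenCV's camshiftdemo sample and are an assumption.
Mat image;
bool backprojMode = false;
bool selectObject = false;
int trackObject = 0;
bool showHist = true;
Point origin;
Rect selection;
int vmin = 10, vmax = 256, smin = 30;

// Mouse handler for object tracking
void onMouseTargetTracking(int event, int x, int y, int, void*)
{
    if (selectObject) // only while the left button is held down; updates the selected rectangle
    {
        selection.x = MIN(x, origin.x); // top-left corner of the rectangle
        selection.y = MIN(y, origin.y);
        selection.width = std::abs(x - origin.x);  // rectangle width
        selection.height = std::abs(y - origin.y); // rectangle height

        selection &= Rect(0, 0, image.cols, image.rows); // keep the selection inside the image
    }

    switch (event)
    {
    case CV_EVENT_LBUTTONDOWN:
        origin = Point(x, y);
        selection = Rect(x, y, 0, 0); // start with an empty rectangle at the click position
        selectObject = true;
        break;
    case CV_EVENT_LBUTTONUP:
        selectObject = false;
        if (selection.width > 0 && selection.height > 0)
            trackObject = -1; // a new region was selected
        break;
    }
}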
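The mouse handler above turns a drag between two points into a rectangle and clips it to the image. Here is a minimal sketch of that geometry without OpenCV types; the `Box` struct and `selectionFromDrag` function are hypothetical names introduced for illustration.

```cpp
#include <algorithm>
#include <cstdlib>

// Hypothetical plain rectangle: top-left corner plus size.
struct Box
{
    int x, y, width, height;
};

// Build the rectangle spanned by the press point (originX, originY) and the
// current cursor (x, y), then clip it to an image of imgW x imgH pixels.
Box selectionFromDrag(int originX, int originY, int x, int y, int imgW, int imgH)
{
    // The drag may go in any direction, so take the smaller coordinate as the corner.
    int left = std::min(x, originX);
    int top = std::min(y, originY);
    int right = left + std::abs(x - originX);
    int bottom = top + std::abs(y - originY);

    // Clip to the image bounds.
    left = std::max(left, 0);
    top = std::max(top, 0);
    right = std::min(right, imgW);
    bottom = std::min(bottom, imgH);

    Box box = { left, top, std::max(right - left, 0), std::max(bottom - top, 0) };
    return box;
}
```

One difference from the listing: when the two rectangles do not overlap at all, `cv::Rect`'s `&=` yields an all-zero rectangle, whereas this sketch only guarantees a zero width or height.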

// Object tracking
void CVideoLableDemo3Dlg::OnBnClickedObjecttracking()
{
    VideoCapture cap; // video capture object
    Rect trackWindow;
    RotatedRect trackBox; // rotated rectangle returned by CamShift
    int hsize = 16;
    float hranges[] = { 0, 180 }; // hue range used when computing the histogram
    const float* phranges = hranges;

    cap.open("out3.mp4");
    if (!cap.isOpened())
    {
        cout << "***Could not initialize capturing...***\n";
        return;
    }

    namedWindow("Histogram", 0);
    namedWindow("CamShift Demo", 0);
    setMouseCallback("CamShift Demo", onMouseTargetTracking, 0); // register the mouse handler

    Mat frame, hsv, hue, mask, hist, histimg = Mat::zeros(200, 320, CV_8UC3), backproj;
    bool paused = false;

    // Main frame-processing loop
    for (;;)
    {
        if (!paused)
        {
            cap >> frame; // read the next frame from the video
            if (frame.empty())
                break;
        }

        frame.copyTo(image); // work on a copy of the frame

        if (!paused)
        {
            cvtColor(image, hsv, CV_BGR2HSV); // convert BGR to HSV

            // trackObject is 0 initially and after 'c' is pressed; it becomes -1 when the mouse button is released after a selection
            if (trackObject)
            {
                int _vmin = vmin, _vmax = vmax;

                // inRange checks, per pixel, whether every channel lies within its bounds:
                // H in 0..180, S in smin..256, V in min(vmin, vmax)..max(vmin, vmax).
                // mask is 255 (0xff) where all three channels are in range, otherwise 0.
                inRange(hsv, Scalar(0, smin, MIN(_vmin, _vmax)),
                    Scalar(180, 256, MAX(_vmin, _vmax)), mask);

                int ch[] = { 0, 0 };
                // hue has the same size and depth as hsv. Hue is an angle: red, green and blue are
                // 120 degrees apart and complementary colors are 180 degrees apart.
                hue.create(hsv.size(), hsv.depth());
                mixChannels(&hsv, 1, &hue, 1, ch, 1); // copy channel 0 of hsv (the hue) into hue

                if (trackObject < 0) // a region was just selected; set back to 1 below
                {
                    // roi and maskroi are headers that share data with hue and mask; selection is the region of interest
                    Mat roi(hue, selection), maskroi(mask, selection);

                    // calcHist arguments: source images, number of images, channel list, optional mask,
                    // output histogram, histogram dimensionality, bins per dimension, bin ranges per dimension
                    calcHist(&roi, 1, 0, maskroi, hist, 1, &hsize, &phranges);
                    normalize(hist, hist, 0, 255, CV_MINMAX); // scale the histogram into 0..255

                    trackWindow = selection;
                    trackObject = 1; // stays 1 until 'c' is pressed or a new region is selected, so this block runs once per selection

                    histimg = Scalar::all(0); // clear the histogram image, same as pressing 'c'
                    int binW = histimg.cols / hsize; // histimg is 200 rows by 320 columns; binW is the pixel width of each of the hsize bins
                    Mat buf(1, hsize, CV_8UC3); // one color per bin
                    for (int i = 0; i < hsize; i++) // saturate_cast converts between numeric types with clamping
                        buf.at<Vec3b>(i) = Vec3b(saturate_cast<uchar>(i*180. / hsize), 255, 255); // Vec3b is a vector of 3 uchar values
                    cvtColor(buf, buf, CV_HSV2BGR); // convert the bin colors from HSV to BGR

                    for (int i = 0; i < hsize; i++)
                    {
                        int val = saturate_cast<int>(hist.at<float>(i)*histimg.rows / 255); // bar height for bin i
                        // draw a filled bar for bin i, given two opposite corners and a color
                        rectangle(histimg, Point(i*binW, histimg.rows),
                            Point((i + 1)*binW, histimg.rows - val),
                            Scalar(buf.at<Vec3b>(i)), -1, 8);
                    }
                }

                // Back-project the hue histogram onto the hue image and store the result in backproj
                calcBackProject(&hue, 1, 0, hist, backproj, &phranges);
                backproj &= mask;

                // Since OpenCV 2.0 the C++ functions drop the cv prefix, e.g. CamShift and meanShift.
                // trackWindow starts as the mouse selection and is updated by CamShift. The TermCriteria
                // stops after at most 10 iterations (CV_TERMCRIT_ITER) or once the window moves by less than 1 (CV_TERMCRIT_EPS).
                trackBox = CamShift(backproj, trackWindow,
                    TermCriteria(CV_TERMCRIT_EPS | CV_TERMCRIT_ITER, 10, 1));

                if (trackWindow.area() <= 1)
                {
                    // The window collapsed; grow it again and clip it to the image
                    int cols = backproj.cols, rows = backproj.rows, r = (MIN(cols, rows) + 5) / 6;
                    trackWindow = Rect(trackWindow.x - r, trackWindow.y - r,
                        trackWindow.x + r, trackWindow.y + r) &
                        Rect(0, 0, cols, rows); // Rect takes the top-left corner first, then width and height
                }

                if (backprojMode)
                    cvtColor(backproj, image, CV_GRAY2BGR); // show the back projection as a 3-channel image
                ellipse(image, trackBox, Scalar(0, 0, 255), 3, CV_AA); // draw the tracked object as an ellipse
            }
        }
        else if (trackObject < 0) // paused, but a new region was selected: resume
            paused = false;

        // The code below runs whether or not playback is paused
        if (selectObject && selection.width > 0 && selection.height > 0)
        {
            Mat roi(image, selection);
            bitwise_not(roi, roi); // invert every bit to highlight the region being selected
        }

        imshow("CamShift Demo", image);
        imshow("Histogram", histimg);

        char c = (char)waitKey(10);
        if (c == 27) // Esc quits
            break;

        switch (c)
        {
        case 'b': // toggle back-projection view
            backprojMode = !backprojMode;
            break;
        case 'c': // clear the tracked object
            trackObject = 0;
            histimg = Scalar::all(0);
            break;
        case 'h': // toggle the histogram window
            showHist = !showHist;
            if (!showHist)
                destroyWindow("Histogram");
            else
                namedWindow("Histogram", 1);
            break;
        case 'p': // toggle pause
            paused = !paused;
            break;
        default:
            break;
        }
    }
}