【Semantic Segmentation】语义分割综述 -- Encoder And Decoder

【Semantic Segmentation】语义分割综述 -- Encoder And Decoder

- Encoder And Decoder

- [FCN] Fully Convolutional Networks for Semantic Segmentation 2016-05

- FCN-32

- FCN-16

- FCN-8

- code by pytorch

- [U-Net] Convolutional Networks for Biomedical Image Segmentation 2015-05

- [SegNet] A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation 2015-11

Encoder And Decoder

encoder-decoder是语义分割最基础的网络结构。主要论文如下:

[FCN] Fully Convolutional Networks for Semantic Segmentation 2016-05

https://arxiv.org/abs/1605.06211

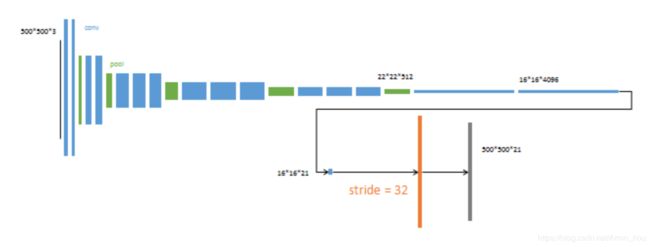

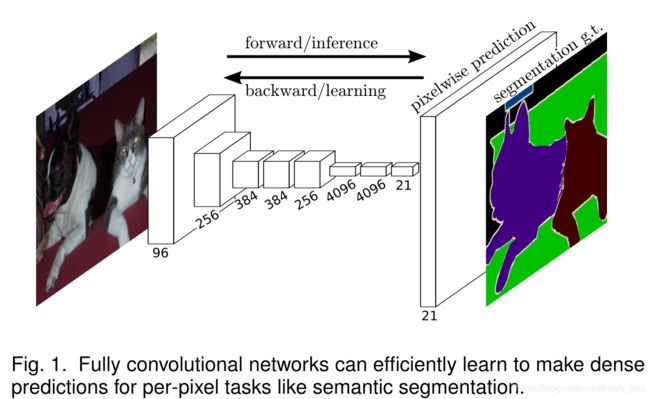

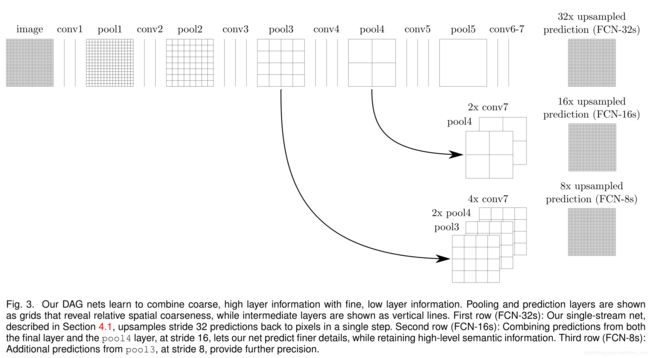

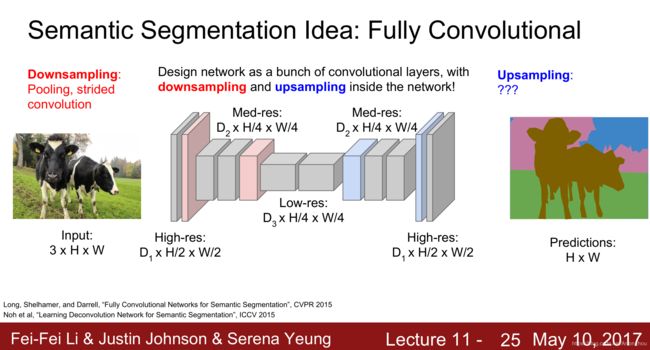

在前面的backbone做传统的卷积操作,不断增大感受野的同时,feature map size 不断缩小。为了解决feature map size 变小不能给每个像素分类的问题,进行上采样将feature map放大到原图。

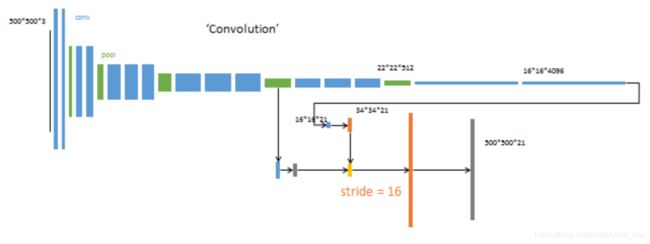

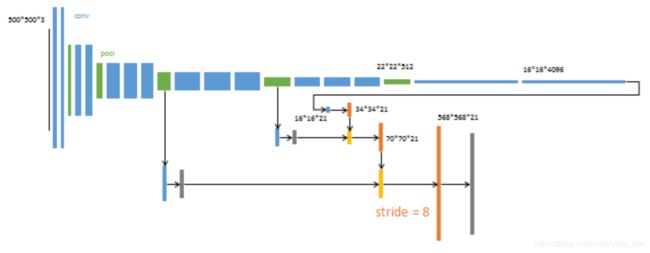

为了提高对细节像素的分类准确度,将encoder不同pooling大小的feature map 按照pixel-wise 与decoder层的pool相加,再做上采样。

FCN-32

FCN-16

FCN-8

上图摘自 深度学习500问

code by pytorch

使用resnet 作为backbone 实现了fcn-4s如下,参考了torchvision的版本。

torchvision实现的是fcn-32s.

下面的变量pool与图中的pool并不是对应的。

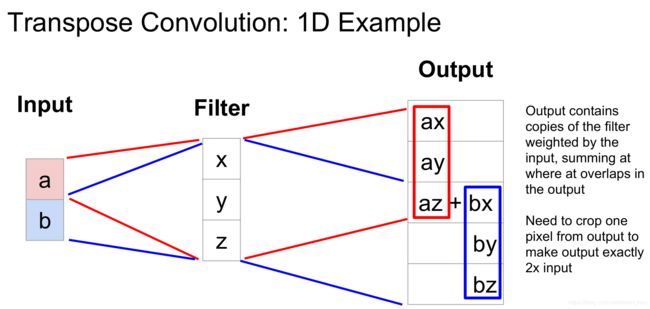

使用双线性插值初始化转置卷积参数。

class FCNHead(nn.Sequential):

'''

To merge the feature mapping with different scale in the middle with the feature mapping by

upsampling need to change channel dimensionality to the same.

'''

def __init__(self, in_channels, channels):

inter_channels = in_channels // 4

layers = [

nn.Conv2d(in_channels, inter_channels, 3, padding=1, bias=False),

nn.BatchNorm2d(inter_channels),

nn.LeakyReLU(),

nn.Dropout(0.1),

nn.Conv2d(inter_channels, channels, 1)

]

super(FCNHead, self).__init__(*layers)

class FCNUpsampling(nn.Sequential):

'''

'''

def __init__(self, num_classes, kernel_size, stride=1, padding=0):

layers = [

nn.ConvTranspose2d(num_classes, num_classes, kernel_size,

stride=stride, padding=padding, bias=False)

]

super(FCNUpsampling, self).__init__(*layers)

class FCN(nn.Module):

def __init__(self, backbone, num_classes, aux_classifier=None):

super(FCN, self).__init__()

# Using the modified resNet to get 4 different scales of the tensor,

# in fact, the last three used in the paper,

# first reserved for experiment

self.backbone = getBackBone(backbone)

self.pool1_FCNHead = FCNHead(256, num_classes)

self.pool2_FCNHead = FCNHead(512, num_classes)

self.pool3_FCNHead = FCNHead(1024, num_classes)

self.pool4_FCNHead = FCNHead(2048, num_classes)

# upsampling using transposeConvolution

# out = s(in-1)+d(k-1)+1-2p

# while s = s , d =1, k=2s, p = s/2, we will get out = s*in

# we need to zoom in 32 times by 2 x 2 x 2 x 4

self.up_score2 = FCNUpsampling(num_classes, 4, stride=2, padding=1)

self.up_score4 = FCNUpsampling(num_classes, 8, stride=4, padding=2)

self.up_score8 = FCNUpsampling(num_classes, 16, stride=8, padding=4)

self.up_score32 = FCNUpsampling(num_classes, 64, stride=32, padding=16)

self.aux_classifier = aux_classifier

self.initial_weight()

def forward(self, x):

result = OrderedDict()

input_shape = x.shape[-2:]

# pool1 scaling = 1/4 channel = 256

# pool2 scaling = 1/8 channel = 512

# pool3 scaling = 1/16 channel = 1024

# pool4 scaling = 1/32 channel = 2048

pool1, pool2, pool3, pool4 = self.backbone(x)

# pool1_same_channel scaling = 1/4 channel = num_classes

# pool2_same_channel scaling = 1/8 channel = num_classes

# pool3_same_channel scaling = 1/16 channel = num_classes

# pool4_same_channel scaling = 1/32 channel = num_classes

pool1_same_channel = self.pool1_FCNHead(pool1)

pool2_same_channel = self.pool2_FCNHead(pool2)

pool3_same_channel = self.pool3_FCNHead(pool3)

pool4_same_channel = self.pool4_FCNHead(pool4)

if self.aux_classifier is not None:

result["aux"] = self.up_score32(pool4_same_channel)

# merge x and pool3 scaling = 1/16

x = self.up_score2(pool4_same_channel) + pool3_same_channel

# merge x and pool2 scaling = 1/8

x = self.up_score2(x) + pool2_same_channel

# merge x and pool2 scaling = 1/4

x = self.up_score2(x) + pool1_same_channel

# scaling = 1

result["out"] = self.up_score4(x)

return result

def initial_weight(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='leaky_relu')

elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):

nn.init.constant_(m.weight, 1)

nn.init.constant_(m.bias, 0)

elif isinstance(m,nn.ConvTranspose2d):

m.weight = torch.nn.Parameter(self.bilinear_kernel(m.in_channels,m.out_channels,m.kernel_size[0]))

def bilinear_kernel(self, in_channels, out_channels, kernel_size):

factor = (kernel_size + 1) // 2

if kernel_size % 2 == 1:

center = factor - 1

else:

center = factor - 0.5

og = np.ogrid[:kernel_size, :kernel_size]

filt = (1 - abs(og[0] - center) / factor) * \

(1 - abs(og[1] - center) / factor)

weight = np.zeros((in_channels, out_channels, kernel_size, kernel_size), dtype='float32')

weight[range(in_channels), range(out_channels), :, :] = filt

return torch.from_numpy(weight)

完整代码

上采样 最近插值 双线性插值

>>> input = torch.arange(1, 5, dtype=torch.float32).view(1, 1, 2, 2) >>> input tensor([[[[ 1., 2.], [ 3., 4.]]]]) >>> m = nn.Upsample(scale_factor=2, mode='nearest') >>> m(input) tensor([[[[ 1., 1., 2., 2.], [ 1., 1., 2., 2.], [ 3., 3., 4., 4.], [ 3., 3., 4., 4.]]]]) >>> m = nn.Upsample(scale_factor=2, mode='bilinear') # align_corners=False >>> m(input) tensor([[[[ 1.0000, 1.2500, 1.7500, 2.0000], [ 1.5000, 1.7500, 2.2500, 2.5000], [ 2.5000, 2.7500, 3.2500, 3.5000], [ 3.0000, 3.2500, 3.7500, 4.0000]]]]) >> m = nn.Upsample(scale_factor=2, mode='bilinear', align_corners=True) >> m(input) tensor([[[[ 1.0000, 1.3333, 1.6667, 2.0000], [ 1.6667, 2.0000, 2.3333, 2.6667], [ 2.3333, 2.6667, 3.0000, 3.3333], [ 3.0000, 3.3333, 3.6667, 4.0000]]]]) >>> # Try scaling the same data in a larger tensor >>> >>> input_3x3 = torch.zeros(3, 3).view(1, 1, 3, 3) >>> input_3x3[:, :, :2, :2].copy_(input) tensor([[[[ 1., 2.], [ 3., 4.]]]]) >>> input_3x3 tensor([[[[ 1., 2., 0.], [ 3., 4., 0.], [ 0., 0., 0.]]]]) >>> m = nn.Upsample(scale_factor=2, mode='bilinear') # align_corners=False >>> # Notice that values in top left corner are the same with the small input (except at boundary) >>> m(input_3x3) tensor([[[[ 1.0000, 1.2500, 1.7500, 1.5000, 0.5000, 0.0000], [ 1.5000, 1.7500, 2.2500, 1.8750, 0.6250, 0.0000], [ 2.5000, 2.7500, 3.2500, 2.6250, 0.8750, 0.0000], [ 2.2500, 2.4375, 2.8125, 2.2500, 0.7500, 0.0000], [ 0.7500, 0.8125, 0.9375, 0.7500, 0.2500, 0.0000], [ 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000]]]]) >>> m = nn.Upsample(scale_factor=2, mode='bilinear', align_corners=True) >>> # Notice that values in top left corner are now changed >>> m(input_3x3) tensor([[[[ 1.0000, 1.4000, 1.8000, 1.6000, 0.8000, 0.0000], [ 1.8000, 2.2000, 2.6000, 2.2400, 1.1200, 0.0000], [ 2.6000, 3.0000, 3.4000, 2.8800, 1.4400, 0.0000], [ 2.4000, 2.7200, 3.0400, 2.5600, 1.2800, 0.0000], [ 1.2000, 1.3600, 1.5200, 1.2800, 0.6400, 0.0000], [ 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000]]]])

参考

【翻译】https://www.cnblogs.com/xuanxufeng/p/6249834.html

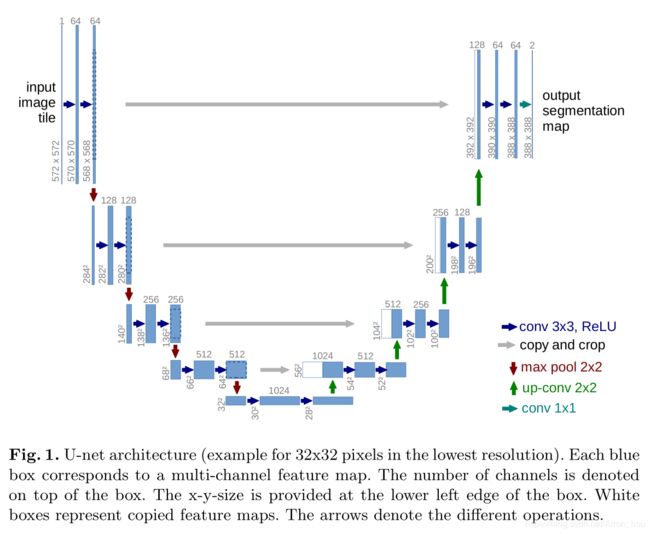

[U-Net] Convolutional Networks for Biomedical Image Segmentation 2015-05

https://arxiv.org/abs/1505.04597v1

U-Net结构与FCN类似。

区别主要在于:

FCN根据放缩的程度有8,16,32的版本,U-Net 每一次缩小feature map都有对应的上采样操作恢复到原来feature map size.

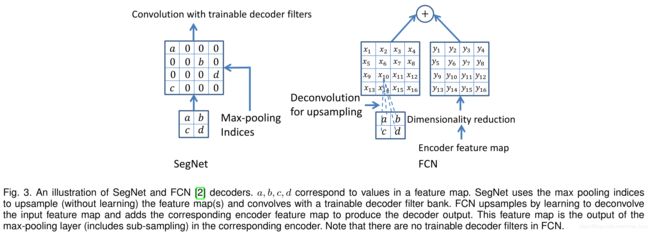

[SegNet] A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation 2015-11

https://arxiv.org/abs/1511.00561v3

SegNe类似于U-Net,但是为了减少冗余,通过记录pooling indices 将 encoder的信息输入到decoder之中。

1)提升边缘刻画度;

2)减少训练的参数;

3)这种上采样模式可以包含到任何编码-解码网络中。

MaxUnpool1d

>>> pool = nn.MaxPool1d(2, stride=2, return_indices=True) >>> unpool = nn.MaxUnpool1d(2, stride=2) >>> input = torch.tensor([[[1., 2, 3, 4, 5, 6, 7, 8]]]) >>> output, indices = pool(input) >>> unpool(output, indices) tensor([[[ 0., 2., 0., 4., 0., 6., 0., 8.]]]) >>> # Example showcasing the use of output_size >>> input = torch.tensor([[[1., 2, 3, 4, 5, 6, 7, 8, 9]]]) >>> output, indices = pool(input) >>> unpool(output, indices, output_size=input.size()) tensor([[[ 0., 2., 0., 4., 0., 6., 0., 8., 0.]]]) >>> unpool(output, indices) tensor([[[ 0., 2., 0., 4., 0., 6., 0., 8.]]])MaxUnpool2d

>>> pool = nn.MaxPool2d(2, stride=2, return_indices=True) >>> unpool = nn.MaxUnpool2d(2, stride=2) >>> input = torch.tensor([[[[ 1., 2, 3, 4], [ 5, 6, 7, 8], [ 9, 10, 11, 12], [13, 14, 15, 16]]]]) >>> output, indices = pool(input) >>> unpool(output, indices) tensor([[[[ 0., 0., 0., 0.], [ 0., 6., 0., 8.], [ 0., 0., 0., 0.], [ 0., 14., 0., 16.]]]]) >>> # specify a different output size than input size >>> unpool(output, indices, output_size=torch.Size([1, 1, 5, 5])) tensor([[[[ 0., 0., 0., 0., 0.], [ 6., 0., 8., 0., 0.], [ 0., 0., 0., 14., 0.], [ 16., 0., 0., 0., 0.], [ 0., 0., 0., 0., 0.]]]])