随机森林&极限随机树(ensemble learning >Bagging)

随机森林与极限随机树的Python代码:

from sklearn.model_selection import cross_val_score

from sklearn.datasets import make_blobs

from sklearn.ensemble import RandomForestClassifier

from sklearn.ensemble import ExtraTreesClassifier

from sklearn.tree import DecisionTreeClassifier

X,y = make_blobs(n_samples=10000,n_features=10,centers=100,random_state=0)

# make_blobs函数是为聚类产生数据集,样本中心数

clf = DecisionTreeClassifier(max_depth=None,min_samples_split=2,random_state=0)

scores = cross_val_score(clf,X,y,cv=5)

print("DecisionTree:%f" %scores.mean())

clf2 = RandomForestClassifier(n_estimators=10,max_depth=None,min_samples_split=2,random_state=0)

scores2 =cross_val_score(clf2,X,y,cv=5)

print("RandomForestClassifier:%f" %scores2.mean())

clf3 = ExtraTreesClassifier(n_estimators=10,max_depth=None,min_samples_split=2,random_state=0)

scores3 = cross_val_score(clf3,X,y,cv=5)

print("ExtraTreesClassifier:%f" %scores3.mean())

Out:

DecisionTree:0.982300

RandomForestClassifier:0.999700

ExtraTreesClassifier:1.000000

Q:如何通过cross_val_score来评判一个模型的优劣???

森林的重要特征python代码

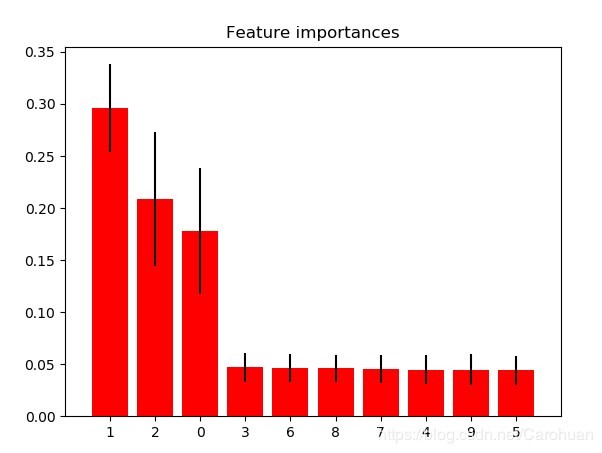

这个例子展示了使用树木的森林来评估特征在人工分类任务中的重要性。红条是森林的重要特征,以及它们在树间的变异性。

正如所料,图中显示了3个特征是有用的,而其余的则不是。

import numpy as np

import matplotlib.pyplot as plt

from sklearn.datasets import make_classification

from sklearn.ensemble import ExtraTreesClassifier

# Build a classification task using 3 informative features

X,y = make_classification(n_samples=100,

n_features=10,

n_informative=3,

n_redundant=0,

n_repeated=0,

n_classes=2,

random_state=0,

shuffle=False)

'''

n_informative:多信息特征的个数;n_redundant:冗余信息,informative特征的随机线性组合;

n_repeated :重复信息,随机提取n_informative和n_redundant 特征;

返回值:

X:形状数组[n_samples,n_features]

生成的样本。

y:形状数组[n_samples]

每个样本的类成员的整数标签

'''

# Build a forest and compute the feature importances

forest = ExtraTreesClassifier(n_estimators=250,random_state=0)

forest.fit(X,y)

importances = forest.feature_importances_

std = np.std([tree.feature_importances_ for tree in forest.estimators_],axis=0)

# 计算矩阵标准差

# np中求平均的时候除以的是数据的总数N,而pd中却是N-1

indices = np.argsort(importances)[::-1]#将重要性按升序排列再转为降序

#[开始:结束:步进],[::-1]为切片,步进为-1:0,0-1,0-1-1,。。。

# Print the feature ranking

print("Feature ranking")

for f in range(X.shape[1]):

print("%d. feature %d (%f)" %(f+1,indices[f],importances[indices[f]]))

# Plot the feature importances of the forest

plt.figure()

plt.title("Feature importances")

plt.bar(range(X.shape[1]),importances[indices],color='r',yerr=std[indices],align='center')

#yerr生成y轴的错误栏

plt.xticks(range(X.shape[1]),indices)

plt.xlim([-1,X.shape[1]])

plt.show()

Out:

Feature ranking: 1. feature 1 (0.295902) 2. feature 2 (0.208351) 3. feature 0 (0.177632) 4. feature 3 (0.047121) 5. feature 6 (0.046303) 6. feature 8 (0.046013) 7. feature 7 (0.045575) 8. feature 4 (0.044614) 9. feature 9 (0.044577) 10. feature 5 (0.043912)

figure:

平行森林的像素重要性Python代码:

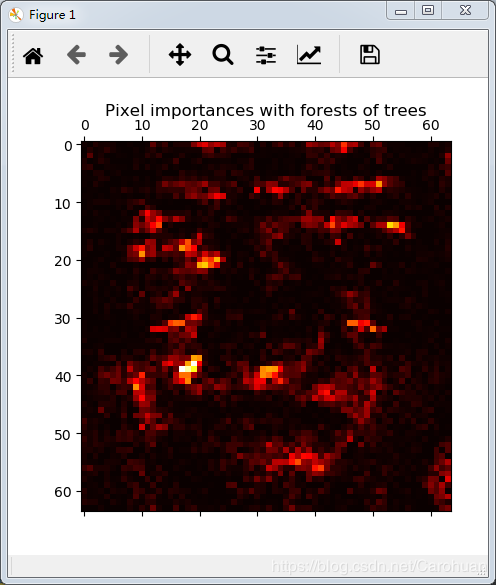

这个例子展示了如何使用树木的森林来评估图像分类任务(人脸)中像素的重要性。像素越高越重要。

下面的代码还说明了如何在多个作业中并行化预测的构造和计算。

from time import time

import matplotlib.pyplot as plt

from sklearn.datasets import fetch_olivetti_faces

from sklearn.ensemble import ExtraTreesClassifier

#用于对森林模型进行并行拟合的核数

# Number of cores to use to perform parallel fitting of the forest model

'''

这个模块还支持树的并行构建和预测结果的并行计算,这可以通过 n_jobs 参数实现。

如果设置 n_jobs = k ,则计算被划分为 k 个作业,并运行在机器的 k 个核上。

如果设置 n_jobs = -1 ,则使用机器的所有核。

注意由于进程间通信具有一定的开销,这里的提速并不是线性的(即,使用 k 个作业不会快 k 倍)。

当然,在建立大量的树,或者构建单个树需要相当长的时间(例如,在大数据集上)时,

(通过并行化)仍然可以实现显著的加速。

'''

n_jobs = 1

# Load the faces dataset人脸识别数据集

data = fetch_olivetti_faces()

X = data.images.reshape(len(data.images),-1)

y = data.target#限制为5个类

mask = y<5

#mask为掩膜,表达式理解为如果小于5就录入?

X = X[mask]

y = y[mask]

# Build a forest and compute the pixel importances像素重要性

print("Fitting ExtraTreesClassifier on faces data with %d cores..." %n_jobs)

t0 = time()

forest = ExtraTreesClassifier(n_estimators=1000,max_features=128,n_jobs=n_jobs,random_state=0)

forest.fit(X,y)

print("done in %0.3fs" %(time()-t0))

importances = forest.feature_importances_

importances = importances.reshape(data.images[0].shape)

# Plot pixel importances

plt.matshow(importances,cmap=plt.cm.hot)

#矩阵可视化

plt.title("Pixel importances with forests of trees")

plt.show()out:

Fitting ExtraTreesClassifier on faces data with 1 cores...

done in 0.975s

figure: