slurm+nfs

Recommended: follow the tutorial at https://www.slothparadise.com/how-to-install-slurm-on-centos-7-cluster/

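My own notes are messy and I fell into a lot of pitfalls along the way. What follows is mostly me talking to myself, so feel free to ignore it.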

Prepare two test machines with clean environments. Preinstalled systems: CentOS 7 1810 (centos7_1810_everything, centos7_1810_minimal)

Dependency preparation

yum install gcc openssl-devel readline-devel -y

yum install bzip2-devel -y

yum install zlib-devel* -y

yum install pam* -y

yum install perl* -y

install munge (for authentication)

Reference: https://launchpad.net/ubuntu/+source/munge/0.5.10-1/

mkdir -p /home/slurm

cd /home/slurm

wget https://launchpad.net/ubuntu/+archive/primary/+sourcefiles/munge/0.5.10-1/munge_0.5.10.orig.tar.bz2

yum install -y rpm-build

mv munge_0.5.10.orig.tar.bz2 munge-0.5.10.tar.bz2

rpmbuild -tb --clean munge-0.5.10.tar.bz2

cd /root/rpmbuild/RPMS/x86_64

rpm --install munge*.rpm

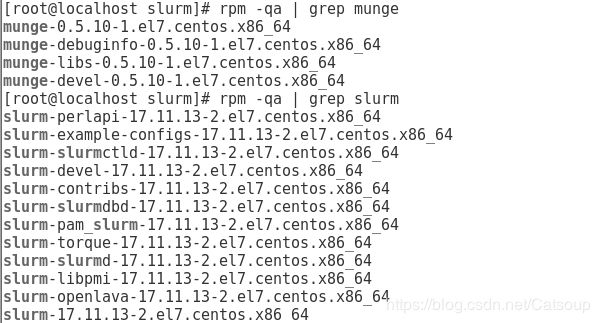

#rpm -qa | grep munge

systemctl start munge

#systemctl status munge
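A quick way to confirm the daemon actually works is to round-trip a credential through it. This is only a sketch: it assumes the `munge` and `unmunge` client tools from the RPMs above are on the PATH, and the function name `check_munge_local` is made up for illustration.

```shell
# Hypothetical helper: encode a credential and decode it again via the local munged.
check_munge_local() {
    if ! command -v munge >/dev/null 2>&1; then
        echo "munge not installed" >&2
        return 2
    fi
    # The pipeline's exit status is that of unmunge (0 on a successful decode).
    munge -n | unmunge >/dev/null
}

# Usage:
#   check_munge_local && echo "local MUNGE ok"
#   munge -n | ssh root@worker1 unmunge   # cross-node check, once both nodes share the key
```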

install mariadb (Make sure the MariaDB packages were installed before you built the Slurm RPM)

References:

https://wiki.fysik.dtu.dk/niflheim/Slurm_database

https://www.slothparadise.com/how-to-install-slurm-on-centos-7-cluster/

yum -y install mariadb-server mariadb-devel

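install slurm (pitfall here: before installing Slurm, make sure the global accounts and the directory permissions are set up correctly! See the pitfall summary at the bottom of these notes)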

http://slurm.schedmd.com/

cd -

wget https://download.schedmd.com/slurm/slurm-18.08.5-2.tar.bz2

rpmbuild -ta --clean slurm-18.08.5-2.tar.bz2

cd /root/rpmbuild/RPMS/x86_64

rpm --install slurm*.rpm    or    yum --nogpgcheck localinstall slurm*.rpm

Preparing authentication between the nodes:

1. Generate a key

sudo bash

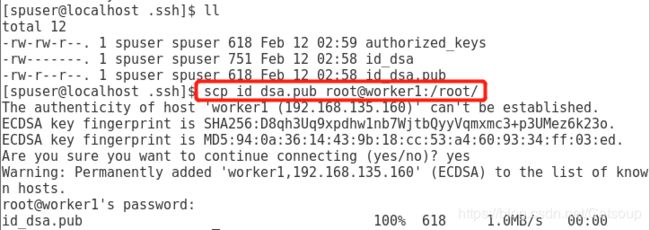

ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

#ssh-keygen -t rsa

#ssh-copy-id spuser@spgpu    (automatically copies the id_rsa.pub key into ~/.ssh/authorized_keys on the remote machine)

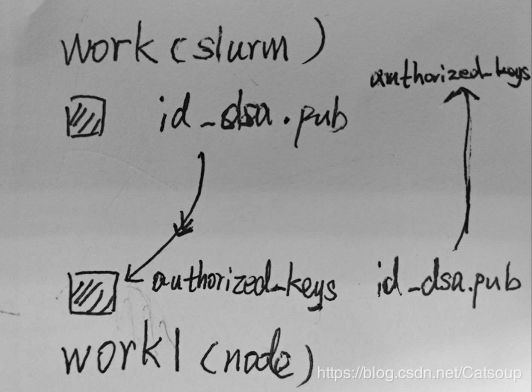

2. Place the generated public key like this

https://blog.csdn.net/lemontree1945/article/details/79162031

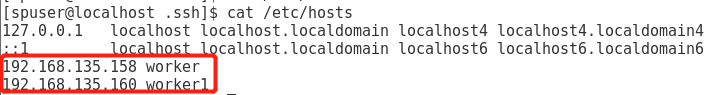

3. Give each machine a hostname and map it to its IP

hostnamectl --static set-hostname worker     (on the first machine)

hostnamectl --static set-hostname worker1    (on the second machine)

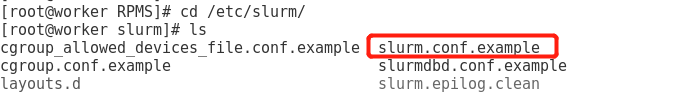
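For the names to resolve, each node usually also needs matching entries in /etc/hosts. A minimal sketch, using the two IPs that appear in slurm.conf later in these notes; the helper `add_host_entry` is hypothetical, and it only checks whether the name is already mapped, not whether the IP is right.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
# Third argument is the hosts file, defaulting to /etc/hosts.
add_host_entry() {
    ip="$1"; name="$2"; hosts_file="${3:-/etc/hosts}"
    # awk exits 0 only if NAME appears as a hostname field on a non-comment line.
    if ! awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Usage on every node (as root):
#   add_host_entry 192.168.135.158 worker
#   add_host_entry 192.168.135.160 worker1
```

Running it twice is harmless, since an existing name is left untouched.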

Configure the Slurm file (based on the bundled example):

cat /etc/slurm/slurm.conf    (the finished configuration file)

# Example slurm.conf file. Please run configurator.html

# (in doc/html) to build a configuration file customized

# for your environment.

#

#

# slurm.conf file generated by configurator.html.

#

# See the slurm.conf man page for more information.

#

ClusterName=worker

ControlMachine=worker

ControlAddr=192.168.135.158

#BackupController=

#BackupAddr=

#

SlurmUser=slurm

#SlurmdUser=root

SlurmctldPort=6817

SlurmdPort=6818

AuthType=auth/munge

#JobCredentialPrivateKey=

#JobCredentialPublicCertificate=

StateSaveLocation=/var/spool/slurm/ctld

SlurmdSpoolDir=/var/spool/slurm/d

SwitchType=switch/none

MpiDefault=none

SlurmctldPidFile=/var/run/slurmctld.pid

SlurmdPidFile=/var/run/slurmd.pid

ProctrackType=proctrack/pgid

#PluginDir=

#FirstJobId=

ReturnToService=0

#MaxJobCount=

#PlugStackConfig=

#PropagatePrioProcess=

#PropagateResourceLimits=

#PropagateResourceLimitsExcept=

#Prolog=

#Epilog=

#SrunProlog=

#SrunEpilog=

#TaskProlog=

#TaskEpilog=

#TaskPlugin=

#TrackWCKey=no

#TreeWidth=50

#TmpFS=

#UsePAM=

#

# TIMERS

SlurmctldTimeout=300

SlurmdTimeout=300

InactiveLimit=0

MinJobAge=300

KillWait=30

Waittime=0

#

# SCHEDULING

SchedulerType=sched/backfill

#SchedulerAuth=

#SelectType=select/linear

FastSchedule=1

#PriorityType=priority/multifactor

#PriorityDecayHalfLife=14-0

#PriorityUsageResetPeriod=14-0

#PriorityWeightFairshare=100000

#PriorityWeightAge=1000

#PriorityWeightPartition=10000

#PriorityWeightJobSize=1000

#PriorityMaxAge=1-0

#

# LOGGING

SlurmctldDebug=3

SlurmctldLogFile=/var/log/slurmctld.log

SlurmdDebug=3

SlurmdLogFile=/var/log/slurmd.log

JobCompType=jobcomp/none

#JobCompLoc=

#

# ACCOUNTING

#JobAcctGatherType=jobacct_gather/linux

#JobAcctGatherFrequency=30

#

#AccountingStorageType=accounting_storage/slurmdbd

#AccountingStorageHost=

#AccountingStorageLoc=

#AccountingStoragePass=

#AccountingStorageUser=

#

# COMPUTE NODES

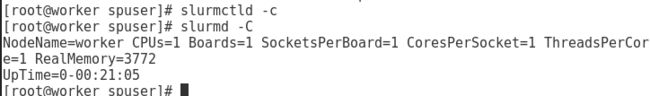

NodeName=worker NodeAddr=192.168.135.158 CPUs=1 Procs=1 State=UNKNOWN

NodeName=worker1 NodeAddr=192.168.135.160 CPUs=1 Procs=1 State=UNKNOWN

PartitionName=debug Nodes=ALL Default=YES MaxTime=INFINITE State=UP
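The directories named in the config (StateSaveLocation, SlurmdSpoolDir) have to exist before the daemons start, and StateSaveLocation must be writable by SlurmUser. One way to avoid typing the paths twice is to read them back out of the file. This is a sketch only; `slurm_conf_get` is a hypothetical helper, and it handles plain `KEY=VALUE` lines, not the multi-key NodeName/PartitionName lines.

```shell
# Hypothetical helper: print the value of the first uncommented KEY=VALUE line.
# Second argument is the config path, defaulting to /etc/slurm/slurm.conf.
slurm_conf_get() {
    conf_key="$1"; conf_file="${2:-/etc/slurm/slurm.conf}"
    awk -F= -v k="$conf_key" '$1 == k { print substr($0, length(k) + 2); exit }' "$conf_file"
}

# Usage (as root, on the head node):
#   mkdir -p "$(slurm_conf_get StateSaveLocation)"
#   chown "$(slurm_conf_get SlurmUser)": "$(slurm_conf_get StateSaveLocation)"
#   mkdir -p "$(slurm_conf_get SlurmdSpoolDir)"
```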

Distribute worker's munge.key and slurm.conf to worker1

Reference: https://blog.csdn.net/mr_zhang2014/article/details/78680167

scp /etc/munge/munge.key root@worker1:/etc/munge/

scp /etc/slurm/slurm.conf root@worker1:/etc/slurm/
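After copying, it is worth confirming that both nodes really hold identical files, since a mismatched munge.key or slurm.conf shows up later as confusing authentication or config errors. A rough sketch, assuming root ssh access to worker1 as used by the scp commands above; the helper names are hypothetical.

```shell
# Print only the SHA-256 hex digest of a file (sha256sum is part of coreutils).
file_digest() {
    sha256sum < "$1" | awk '{ print $1 }'
}

# Hypothetical helper: compare a local file with the same path on a remote host.
same_on_remote() {
    cmp_path="$1"; cmp_host="$2"
    local_sum=$(file_digest "$cmp_path")
    remote_sum=$(ssh "root@$cmp_host" "sha256sum < '$cmp_path'" | awk '{ print $1 }')
    [ -n "$local_sum" ] && [ "$local_sum" = "$remote_sum" ]
}

# Usage on worker:
#   same_on_remote /etc/munge/munge.key worker1 && echo "munge.key matches"
#   same_on_remote /etc/slurm/slurm.conf worker1 && echo "slurm.conf matches"
```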

Startup preparation on the head node and the compute node: start the services and stop the firewall:

1. Head node

#adduser --system slurm    (the correct method: https://wiki.fysik.dtu.dk/niflheim/Slurm_installation)

systemctl stop firewalld

systemctl start munge

systemctl enable slurmd.service

systemctl start slurmd.service

systemctl status slurmd.service

systemctl enable slurmctld.service

systemctl start slurmctld.service

systemctl status slurmctld.service

2. Compute node

systemctl stop firewalld

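systemctl start munge    (munged has to run on the compute node as well: with AuthType=auth/munge, slurmd validates credentials through the local munged)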

systemctl enable slurmd.service

systemctl start slurmd.service

systemctl status slurmd.service
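Once the daemons are up on both machines, a small smoke test from the head node shows whether the nodes registered. This is a sketch, not a full health check; `slurm_smoke_test` is a made-up name, while `sinfo`, `srun` and `scontrol` are the standard Slurm client commands.

```shell
# Hypothetical smoke test: list node states, then run a trivial job on both nodes.
slurm_smoke_test() {
    for smoke_cmd in sinfo srun; do
        if ! command -v "$smoke_cmd" >/dev/null 2>&1; then
            echo "$smoke_cmd not found; is Slurm installed on this node?" >&2
            return 2
        fi
    done
    sinfo -N -l || return 1     # one line per node, including its state
    srun -N2 hostname           # runs hostname on two nodes of the default partition
}

# Usage on the head node:
#   slurm_smoke_test
# With ReturnToService=0, a node that went DOWN stays down until resumed by hand:
#   scontrol update NodeName=worker1 State=RESUME
```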

Reminders to myself, the pitfalls I ran into:

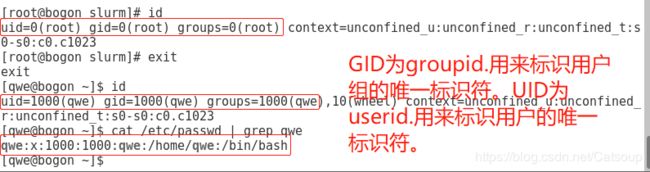

1. You should create global accounts! Slurm and MUNGE require consistent UID and GID across every node in the cluster.

https://wiki.fysik.dtu.dk/niflheim/Slurm_installation

https://www.cnblogs.com/carltonx/p/5403001.html

export MUNGEUSER=981

groupadd -g $MUNGEUSER munge

useradd -m -c "MUNGE Uid 'N' Gid Emporium" -d /var/lib/munge -u $MUNGEUSER -g munge -s /sbin/nologin munge

export SlurmUSER=982

groupadd -g $SlurmUSER slurm

useradd -m -c "Slurm workload manager" -d /var/lib/slurm -u $SlurmUSER -g slurm -s /bin/bash slurm

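*The special shell /sbin/nologin:

A user whose shell is set to this cannot log in even with a valid password. The login attempt prints the following message:

This account is currently not available.

"Cannot log in" here only means that the user cannot log in to the system through bash or any other shell. It does not mean the account is unable to use other system resources.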
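To check that the accounts really ended up with the same IDs on every node, the uid:gid pairs can be compared over ssh. A minimal sketch, assuming root ssh access to worker1; `uid_gid_of` is a hypothetical helper that reads passwd-format lines on stdin.

```shell
# Hypothetical helper: print "uid:gid" for a user from passwd-format input on stdin.
uid_gid_of() {
    awk -F: -v u="$1" '$1 == u { print $3 ":" $4; exit }'
}

# Usage on worker, comparing against worker1 (getent reads the passwd database):
#   for u in munge slurm; do
#       here=$(getent passwd "$u" | uid_gid_of "$u")
#       there=$(ssh root@worker1 getent passwd "$u" | uid_gid_of "$u")
#       if [ "$here" = "$there" ]; then echo "$u ok ($here)"; else echo "$u MISMATCH: $here vs $there"; fi
#   done
```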

2. Make sure to set the correct ownership and mode on all nodes! Go to each node and fix the permission problems caused by copying munge.key with scp.

https://www.cnblogs.com/carltonx/p/5403001.html

chown -R munge: /etc/munge /var/log/munge

chmod 0700 /etc/munge /var/log/munge

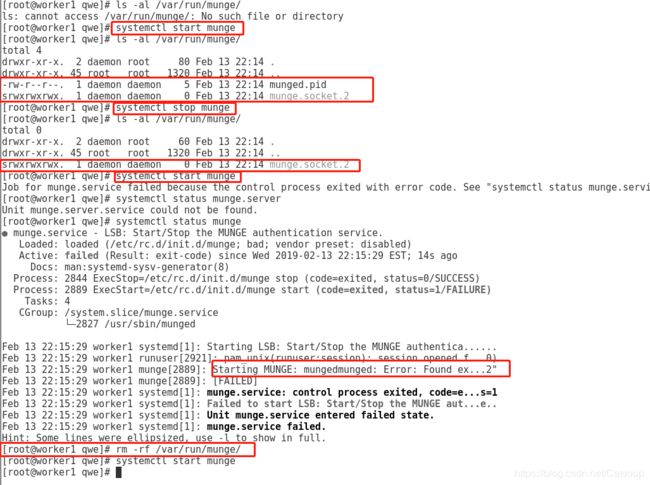

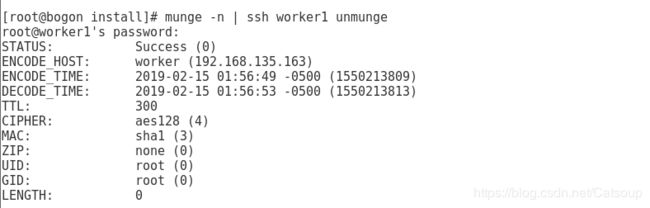
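A small check makes it easy to see which paths still have the wrong mode after the chown/chmod above. This is a sketch that relies on GNU `stat` (as shipped with CentOS); `has_mode` is a hypothetical helper, and the 0400 mode for munge.key in the usage lines is my assumption, not something stated in these notes.

```shell
# Hypothetical helper: succeed only if the path has exactly the expected octal mode.
has_mode() {
    [ "$(stat -c '%a' "$1")" = "$2" ]
}

# Usage on each node (as root):
#   has_mode /etc/munge 700 || echo "fix mode of /etc/munge"
#   has_mode /var/log/munge 700 || echo "fix mode of /var/log/munge"
#   has_mode /etc/munge/munge.key 400 || chmod 0400 /etc/munge/munge.key   # assumed mode
```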

3. Things to watch out for when starting MUNGE:

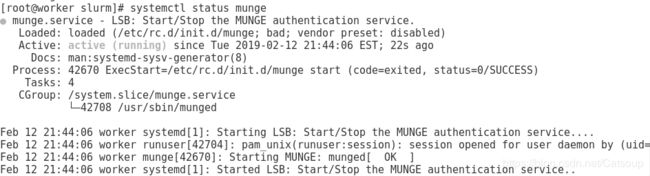

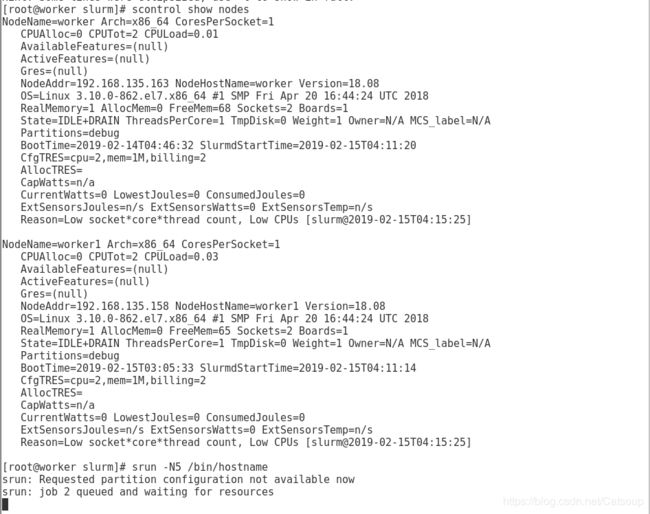

This is what it looks like once it is finally running:

References and help:

李博 (Li Bo)

BeeGFS: https://www.kclouder.cn/centos7-beegfs/