The previous post, 《Redis Cluster 集群部署实战》 (Redis Cluster Deployment in Practice), walked through deploying a Redis Cluster.

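If the current cluster can no longer handle the traffic, or you are planning capacity ahead of future growth, how do you scale it out? You can prepare a script in advance and scale out with a single command, or you can do it by hand.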

This post does it by hand.
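For reference, the manual steps in this post can also be scripted. The following is a minimal Python sketch, not the author's script, that only assembles the redis-cli invocations for such a one-command scale-out; it executes nothing. It assumes redis-cli 5.0 or later and its non-interactive --cluster options (add-node with --cluster-slave and --cluster-master-id, reshard with --cluster-from, --cluster-to, --cluster-slots and --cluster-yes). Passing all to --cluster-from mirrors the interactive prompt used later and is an assumption; the function name scale_out_commands and its parameters are hypothetical.

```python
import shlex


def scale_out_commands(new_host, master_port, replica_port, seed, password,
                       new_master_id, slots):
    """Build redis-cli argv lists for adding one master/replica pair (sketch).

    new_master_id is only known once the new master has joined; a real script
    would read it first, e.g. with `redis-cli -h <host> -p <port> cluster myid`.
    """
    cli = ["./bin/redis-cli", "-a", password, "--cluster"]
    new_master = f"{new_host}:{master_port}"
    new_replica = f"{new_host}:{replica_port}"
    return [
        # 1. Join the new instance as an empty master.
        cli + ["add-node", new_master, seed],
        # 2. Move `slots` slots to it from every existing master, without prompts.
        cli + ["reshard", seed,
               "--cluster-from", "all",
               "--cluster-to", new_master_id,
               "--cluster-slots", str(slots),
               "--cluster-yes"],
        # 3. Join the second instance directly as a replica of the new master.
        cli + ["add-node", new_replica, seed,
               "--cluster-slave", "--cluster-master-id", new_master_id],
    ]


if __name__ == "__main__":
    # Values taken from this post; the commands are only printed.
    for argv in scale_out_commands("172.20.20.174", 16001, 16002,
                                   "172.20.20.171:16001", "zjkj",
                                   "ea77d7d10c27a1d99de00f4f81034d2d82a3c765",
                                   4096):
        print(shlex.join(argv))
```

Keeping each command as an argv list (rather than one string) makes it easy to hand to subprocess.run later without shell quoting issues.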

Background:

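Suppose the business grows quickly and the cluster built above can no longer keep up, so it has to be scaled out fast. Here we add only one server running two Redis instances.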

Scale-out inventory:

| Hostname | IP address | Redis ports | Notes |
| --- | --- | --- | --- |
| node174 | 172.20.20.174 | 16001, 16002 | |

Note: the hosts files on the machines are not configured here. If you run your own internal DNS, add records for the new host there.

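Redis deployment is omitted here; see the article linked above. You can also bake the installation into an image and only change the IP address.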

/opt/redis/bin/redis-server /opt/redis/conf/redis-16001.conf

/opt/redis/bin/redis-server /opt/redis/conf/redis-16002.conf
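The post does not show the contents of redis-16001.conf and redis-16002.conf. As a hedged sketch, here is a Python helper that renders a minimal cluster-mode config per port, so an image only needs a different bind address on each host. The directive names are standard redis.conf settings; the directory layout under /opt/redis, the timeout value and the name render_redis_conf are assumptions. Redis uses port + 10000 as the cluster bus port, which is why the cluster nodes output below shows 172.20.20.174:16001@26001.

```python
def render_redis_conf(bind_ip, port, password, base_dir="/opt/redis"):
    """Render a minimal cluster-mode redis.conf for one instance (sketch).

    Only bind_ip changes between hosts; port picks the file names. The
    directories under base_dir are assumed to exist already.
    """
    lines = [
        f"bind {bind_ip}",
        f"port {port}",
        "daemonize yes",
        f"pidfile {base_dir}/run/redis-{port}.pid",
        f"logfile {base_dir}/logs/redis-{port}.log",
        f"dir {base_dir}/data/{port}",
        # Cluster mode; Redis itself writes and updates nodes-<port>.conf.
        "cluster-enabled yes",
        f"cluster-config-file nodes-{port}.conf",
        "cluster-node-timeout 15000",
        "appendonly yes",
        # Clients authenticate with requirepass; replicas use masterauth
        # to sync from a password-protected master.
        f"requirepass {password}",
        f"masterauth {password}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    for port in (16001, 16002):
        print(f"# /opt/redis/conf/redis-{port}.conf")
        print(render_redis_conf("172.20.20.174", port, "zjkj"))
```

Writing the two results to /opt/redis/conf/redis-16001.conf and redis-16002.conf on node174 would match the start commands above.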

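Add 172.20.20.174:16001 to the cluster as a new master. The first address is the new node and the second is any node already in the cluster; -a supplies the password, which is what triggers the warning below:

./bin/redis-cli --cluster add-node 172.20.20.174:16001 172.20.20.171:16001 -a zjkj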

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Adding node 172.20.20.174:16001 to cluster 172.20.20.171:16001

>>> Performing Cluster Check (using node 172.20.20.171:16001)

S: a9ab7a12884d505efcf066fcc3aae74c2b3f101d 172.20.20.171:16001

slots: (0 slots) slave

replicates 6dec89e63a48a9a9f393011a698a0bda21b70f1e

M: 6dec89e63a48a9a9f393011a698a0bda21b70f1e 172.20.20.172:16002

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 04d9c29ef2569b1fc8abd9594d64fca33e4ad4f2 172.20.20.173:16001

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: 761348a0107f5b009cabc22c214e39578d0aa707 172.20.20.172:16001

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 81d1b25ae1ea85421bd4abb2be094c258026c505 172.20.20.171:16002

slots: (0 slots) slave

replicates 04d9c29ef2569b1fc8abd9594d64fca33e4ad4f2

S: 14e79155f78065e4518e00cd5bd057336b17e3a7 172.20.20.173:16002

slots: (0 slots) slave

replicates 761348a0107f5b009cabc22c214e39578d0aa707

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 172.20.20.174:16001 to make it join the cluster.

[OK] New node added correctly.

Log in to any node on 171 to 173 and check the cluster:

[root@node172 redis]# ./bin/redis-cli -h 172.20.20.172 -p 16002

172.20.20.172:16002> auth zjkj

OK

172.20.20.172:16002> cluster nodes

761348a0107f5b009cabc22c214e39578d0aa707 172.20.20.172:16001@26001 master - 0 1564038119398 3 connected 5461-10922

ea77d7d10c27a1d99de00f4f81034d2d82a3c765 172.20.20.174:16001@26001 master - 0 1564038119000 0 connected

6dec89e63a48a9a9f393011a698a0bda21b70f1e 172.20.20.172:16002@26002 myself,master - 0 1564038120000 7 connected 0-5460

a9ab7a12884d505efcf066fcc3aae74c2b3f101d 172.20.20.171:16001@26001 slave 6dec89e63a48a9a9f393011a698a0bda21b70f1e 0 1564038121413 7 connected

04d9c29ef2569b1fc8abd9594d64fca33e4ad4f2 172.20.20.173:16001@26001 master - 0 1564038118396 5 connected 10923-16383

81d1b25ae1ea85421bd4abb2be094c258026c505 172.20.20.171:16002@26002 slave 04d9c29ef2569b1fc8abd9594d64fca33e4ad4f2 0 1564038118000 5 connected

14e79155f78065e4518e00cd5bd057336b17e3a7 172.20.20.173:16002@26002 slave 761348a0107f5b009cabc22c214e39578d0aa707 0 1564038120405 6 connected
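On a larger cluster it helps to check this output programmatically. A small Python sketch, assuming the documented cluster nodes line format (id, ip:port@cport, flags, master id, ping-sent, pong-recv, config-epoch, link-state, then slot ranges); parse_cluster_nodes, slot_count and empty_masters are hypothetical helper names:

```python
from dataclasses import dataclass, field


@dataclass
class ClusterNode:
    node_id: str
    addr: str            # ip:port, cluster bus port stripped
    flags: set           # e.g. {"myself", "master"}
    master_id: str       # "-" for masters
    slots: list = field(default_factory=list)  # inclusive (start, end) ranges


def parse_cluster_nodes(text):
    """Parse CLUSTER NODES output into ClusterNode records."""
    nodes = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) < 8:
            continue  # blank or truncated line
        slots = []
        for token in parts[8:]:
            if token.startswith("["):
                continue  # migrating/importing marker, skipped in this sketch
            start, _, end = token.partition("-")
            slots.append((int(start), int(end or start)))
        nodes.append(ClusterNode(
            node_id=parts[0],
            addr=parts[1].split("@")[0],
            flags=set(parts[2].split(",")),
            master_id=parts[3],
            slots=slots,
        ))
    return nodes


def slot_count(node):
    return sum(end - start + 1 for start, end in node.slots)


def empty_masters(nodes):
    """Masters that own no slots, i.e. still need a reshard."""
    return [n.addr for n in nodes if "master" in n.flags and slot_count(n) == 0]
```

Fed the output above, empty_masters would report only 172.20.20.174:16001.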

172.20.20.174:16001 has joined as a master but owns no slots. How do we assign slots to it?

Run the reshard against any master (the entries flagged master in the output above) to assign slots to 174:

[root@node174 redis]# ./bin/redis-cli --cluster reshard 172.20.20.172:16002 -a zjkj

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Performing Cluster Check (using node 172.20.20.172:16002)

M: 6dec89e63a48a9a9f393011a698a0bda21b70f1e 172.20.20.172:16002

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 761348a0107f5b009cabc22c214e39578d0aa707 172.20.20.172:16001

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: ea77d7d10c27a1d99de00f4f81034d2d82a3c765 172.20.20.174:16001

slots: (0 slots) master

S: a9ab7a12884d505efcf066fcc3aae74c2b3f101d 172.20.20.171:16001

slots: (0 slots) slave

replicates 6dec89e63a48a9a9f393011a698a0bda21b70f1e

M: 04d9c29ef2569b1fc8abd9594d64fca33e4ad4f2 172.20.20.173:16001

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 81d1b25ae1ea85421bd4abb2be094c258026c505 172.20.20.171:16002

slots: (0 slots) slave

replicates 04d9c29ef2569b1fc8abd9594d64fca33e4ad4f2

S: 14e79155f78065e4518e00cd5bd057336b17e3a7 172.20.20.173:16002

slots: (0 slots) slave

replicates 761348a0107f5b009cabc22c214e39578d0aa707

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.


How many slots do you want to move (from 1 to 16384)? 4096

What is the receiving node ID? ea77d7d10c27a1d99de00f4f81034d2d82a3c765 # ID of the newly added master (174:16001)

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: all
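Why 4096: after the expansion there are four masters, and 16384 / 4 = 4096 slots per master. With all as the source, the 4096 slots are taken from the three existing masters roughly in proportion to what each owns. The Python sketch below reproduces that split under an assumption inferred from the cluster nodes output further down: sources are ordered by slot count, the largest rounds its proportional share up, the others round down, and each source gives away its lowest-numbered slots. reshard_plan is a hypothetical name, and this is not redis-cli's own code.

```python
import math


def reshard_plan(masters, num_slots):
    """Sketch: which slots each source master gives up when resharding from 'all'.

    masters maps "ip:port" to that master's sorted, inclusive (start, end) ranges.
    Assumed rule (matches the output in this post): largest source first, it
    rounds its proportional share up, every other source rounds down, and each
    source hands over its lowest-numbered slots.
    """
    counts = {addr: sum(e - s + 1 for s, e in ranges)
              for addr, ranges in masters.items()}
    total = sum(counts.values())
    order = sorted(masters, key=lambda addr: counts[addr], reverse=True)
    plan = {}
    for i, addr in enumerate(order):
        share = num_slots * counts[addr] / total
        remaining = math.ceil(share) if i == 0 else math.floor(share)
        moved = []
        for start, end in masters[addr]:
            if remaining == 0:
                break
            take = min(remaining, end - start + 1)
            moved.append((start, start + take - 1))
            remaining -= take
        plan[addr] = moved
    return plan


if __name__ == "__main__":
    before = {
        "172.20.20.172:16002": [(0, 5460)],
        "172.20.20.172:16001": [(5461, 10922)],
        "172.20.20.173:16001": [(10923, 16383)],
    }
    for addr, ranges in reshard_plan(before, 16384 // 4).items():
        print(addr, ranges)
```

For this cluster it yields 1366 slots from 172.20.20.172:16001 (5461-6826) and 1365 each from 172.20.20.172:16002 (0-1364) and 172.20.20.173:16001 (10923-12287), which is exactly the set 174:16001 owns in the cluster nodes output below.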

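redis-cli then prints the proposed reshard plan and asks you to confirm it with yes before migrating the slots.

Next, make 172.20.20.174:16002 a replica of the new master. This assumes 16002 has already joined the cluster (for example with add-node, as was done for 16001); CLUSTER REPLICATE only accepts the ID of a master the node already knows.

shell>./bin/redis-cli -h 172.20.20.174 -p 16002 -a zjkj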

172.20.20.174:16002> cluster replicate ea77d7d10c27a1d99de00f4f81034d2d82a3c765 # ID of the new master, 174:16001

172.20.20.174:16002> cluster nodes

761348a0107f5b009cabc22c214e39578d0aa707 172.20.20.172:16001@26001 master - 0 1564039099770 3 connected 6827-10922

a9ab7a12884d505efcf066fcc3aae74c2b3f101d 172.20.20.171:16001@26001 slave 6dec89e63a48a9a9f393011a698a0bda21b70f1e 0 1564039096756 7 connected

14e79155f78065e4518e00cd5bd057336b17e3a7 172.20.20.173:16002@26002 slave 761348a0107f5b009cabc22c214e39578d0aa707 0 1564039097762 3 connected

6dec89e63a48a9a9f393011a698a0bda21b70f1e 172.20.20.172:16002@26002 master - 0 1564039098764 7 connected 1365-5460

04d9c29ef2569b1fc8abd9594d64fca33e4ad4f2 172.20.20.173:16001@26001 master - 0 1564039097000 5 connected 12288-16383

81d1b25ae1ea85421bd4abb2be094c258026c505 172.20.20.171:16002@26002 slave 04d9c29ef2569b1fc8abd9594d64fca33e4ad4f2 0 1564039099000 5 connected

7d697261f0a4dd31f422e2fbe593d5cdd575fbb5 172.20.20.174:16002@26002 myself,slave ea77d7d10c27a1d99de00f4f81034d2d82a3c765 0 1564039094000 0 connected

ea77d7d10c27a1d99de00f4f81034d2d82a3c765 172.20.20.174:16001@26001 master - 0 1564039097000 8 connected 0-1364 5461-6826 10923-12287
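One thing worth checking in this final topology: 172.20.20.174:16002 replicates 172.20.20.174:16001, so master and replica sit on the same machine, and losing node174 would take out both copies of the 4096 slots it now owns. The three original masters each have their replica on another host. A Python sketch of a check for this, assuming the same cluster nodes line format; masters_without_offhost_replica is a hypothetical name:

```python
def masters_without_offhost_replica(cluster_nodes_text):
    """Return ip:port of masters that have no replica on a different host."""
    masters = {}      # node id -> (ip:port, host)
    replicas = []     # (master id, replica host)
    for line in cluster_nodes_text.splitlines():
        parts = line.split()
        if len(parts) < 8:
            continue  # skip blank or malformed lines
        node_id, addr, flags, master_id = parts[:4]
        addr = addr.split("@")[0]          # drop the cluster bus port
        host = addr.rsplit(":", 1)[0]
        roles = flags.split(",")
        if "master" in roles:
            masters[node_id] = (addr, host)
        elif "slave" in roles:
            replicas.append((master_id, host))
    return [
        addr
        for node_id, (addr, host) in masters.items()
        if not any(mid == node_id and rhost != host for mid, rhost in replicas)
    ]
```

Run against the output above, it flags only 172.20.20.174:16001.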

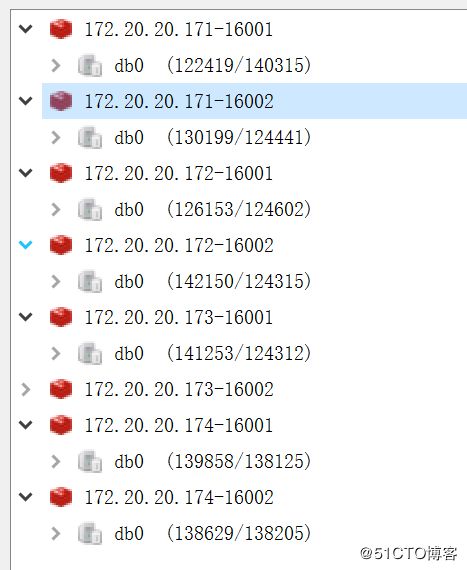

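At this point the scale-out has succeeded. The screenshot above shows how the data is distributed across the instances.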

The data volumes are uneven because I was still bulk-inserting data through the node on 171 at the time.
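Which master stores a given key depends only on its hash slot, not on the node the client happens to write through: the slot is CRC16(key) mod 16384, using the XMODEM variant of CRC16, and if the key contains a non-empty {...} hash tag only the part inside the first one is hashed. A Python sketch of that mapping, plus a lookup against the slot ranges from the final cluster nodes output above (SLOT_OWNERS and owner are hypothetical names):

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16-CCITT (XMODEM): polynomial 0x1021, initial value 0."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Hash slot of a key; honours a non-empty {hash tag}."""
    raw = key.encode()
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end > start + 1:  # non-empty tag: hash only its content
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


# Slot ranges after the reshard, copied from the cluster nodes output above.
SLOT_OWNERS = [
    ((0, 1364), "172.20.20.174:16001"),
    ((1365, 5460), "172.20.20.172:16002"),
    ((5461, 6826), "172.20.20.174:16001"),
    ((6827, 10922), "172.20.20.172:16001"),
    ((10923, 12287), "172.20.20.174:16001"),
    ((12288, 16383), "172.20.20.173:16001"),
]


def owner(key: str) -> str:
    slot = key_slot(key)
    for (start, end), addr in SLOT_OWNERS:
        if start <= slot <= end:
            return addr
    raise ValueError(f"slot {slot} is not covered")
```

For example, key_slot("foo") is 12182. That slot belonged to 172.20.20.173:16001 before the reshard and now falls in 10923-12287 on 172.20.20.174:16001, so a key named foo would have been migrated to the new master.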

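The reverse of scaling out is scaling in, which comes next. Scaling in is usually needed after absorbing a traffic spike; normal data growth does not call for it. Scaling in also carries some risk.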